Slide 1

... The term AI was coined by John McCarthy for the 1956 Dartmouth workshop. The term Computational Intelligence (CI) was first used in 1994, mainly to cover areas such as neural networks, evolutionary algorithms and fuzzy logic. In this lecture we will focus only on neural-network-based algorithms because of ...

Introduction to Artificial Intelligence

...
• SIR: answered simple questions in English
• STUDENT: solved algebra story problems
• SHRDLU: obeyed simple English commands in the blocks world
...

Slide

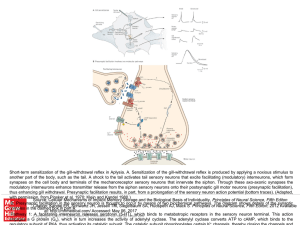

... Short-term sensitization of the gill-withdrawal reflex in Aplysia. A. Sensitization of the gill-withdrawal reflex is produced by applying a noxious stimulus to another part of the body, such as the tail. A shock to the tail activates tail sensory neurons that excite facilitating (modulatory) interne ...

Neurons & the Nervous System

...
• Synapse (synaptic cleft): gap between the dendrites of one neuron and the axon of another
• Receptor sites: parts of the dendrite that receive neurotransmitters
• Neurotransmitters: chemical substances involved in sending neural impulses
...

Introduction

...
Feed-forward back-propagation network:
• Able to approximate non-linear functions and, if properly trained, performs reasonably well when presented with inputs it has not seen before
• HVS is non-linear
• To be useful. ...
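The claim that a feed-forward back-propagation network can approximate non-linear functions can be illustrated on XOR, a function that no single-layer network can represent. The following is a minimal sketch, assuming NumPy, a 2-4-1 sigmoid network, mean-squared error and full-batch gradient descent; the function name `train_xor` and every hyperparameter are illustrative choices, not taken from the source.

```python
import numpy as np


def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-hidden-1 sigmoid network on XOR with plain back-propagation."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Small random weights break the symmetry between hidden units.
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)

    def sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    losses = []
    for _ in range(epochs):
        # Forward pass through the hidden and output layers.
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(float(np.mean((out - y) ** 2)))

        # Backward pass: chain rule through the MSE loss and both sigmoids.
        d_out = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        d_h = (d_out @ W2.T) * h * (1.0 - h)

        # Gradient-descent updates.
        W2 -= lr * h.T @ d_out
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * X.T @ d_h
        b1 -= lr * d_h.sum(axis=0)
    return losses, out


losses, out = train_xor()
print(losses[0], losses[-1])
print(out.round(2))
```

Comparing the first and last loss shows the error falling as the hidden layer learns a non-linear decision boundary. XOR only demonstrates approximation on the training points; behaviour on unseen inputs would need a held-out set.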

The Neuron: The Basic Unit of Communication

... How do these drugs affect neural communication and behavior (page 178, 179)? Many drugs, especially those that affect moods or behavior, work by interfering with normal functioning of neurotransmitters in the synapse. How this occurs depends on the drug, such as the following: 1. Drugs can mimic spe ...

Preparation for the Dissertation report

... It is reasonable to consider that modeling the brain is fundamental for conceiving engineering systems with similar functionalities. In fact, as stated by Haykin [2], “the brain is the living proof that fault tolerant parallel computing is not only physically possible, but also fast and powerful. It ...

Artificial Neural Networks: An Introduction

... elements called nodes/neurons, which operate in parallel.
• Neurons are connected to one another by connection links.
• Each link is associated with a weight, which contains information about the input signal.
• Each neuron has an internal state of its own, which is a function of the inputs that neuron recei ...
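The bullets above describe a neuron whose internal state is a function of the weighted inputs arriving over its links. A minimal sketch, assuming the most common choice of that function (a weighted sum plus a bias) and a logistic activation; the names `net_input` and `activation` and the example numbers are hypothetical.

```python
import math
from typing import Sequence


def net_input(inputs: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Internal state of a neuron: the weighted sum of its inputs plus a bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def activation(state: float) -> float:
    """Map the internal state to an output signal (logistic function assumed here)."""
    return 1.0 / (1.0 + math.exp(-state))


x = [1.0, 0.5, -1.0]  # signals arriving over three connection links
w = [0.4, -0.2, 0.1]  # one weight per link
s = net_input(x, w, bias=0.1)  # approximately 0.3
print(s, activation(s))
```

Other activation functions (a hard threshold, tanh) could replace `activation` without changing how the internal state is formed.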

Neurobiologically Inspired Robotics: Enhanced Autonomy through

... task that unifies the theoretical principles of DAC with biologically constrained models of several brain areas, they show that efficient goal-oriented behavior results from the interaction of parallel learning mechanisms accounting for motor adaptation, spatial encoding and decision-making. Human–R ...

notes as

...
• Making the features themselves adaptive or adding more layers of features won’t help.
• Graphs with discretely labeled edges are a much more powerful representation than feature vectors.
  – Many AI researchers claimed that real numbers were bad and probabilities were even worse.
• We should not ...

Unimodal or Bimodal Distribution of Synaptic Weights?

... Other plasticity models, however, which always [2] or for certain inputs [3] exhibit a unimodal distribution of synaptic weights, have the problem that they do not lead to long-term stability of the weights. In particular, if, after learning, the input pattern changes back to ‘weak’ correlations, the n ...

PPT - Sheffield Department of Computer Science

... Attached to the soma are long filaments called dendrites. Dendrites act as connections through which all the inputs to the neuron arrive. The axon is electrically active and serves as the output channel of the neuron. The axon is a non-linear threshold device: it produces a pulse, called an action potential, when the resting potential within s ...
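The threshold behaviour described above (inputs accumulate, and a pulse is produced only once a threshold is crossed) can be sketched with a leaky integrate-and-fire unit. This is a standard simplified neuron model swapped in for illustration, not the model from the source; the function name, threshold, leak factor and reset rule are all assumptions.

```python
from typing import List, Sequence


def integrate_and_fire(currents: Sequence[float], threshold: float = 1.0,
                       resting: float = 0.0, leak: float = 0.9) -> List[int]:
    """Return a spike train: 1 at each step where the potential crosses threshold, else 0."""
    v = resting
    spikes = []
    for current in currents:
        # The potential decays toward rest, then integrates the incoming current.
        v = resting + leak * (v - resting) + current
        if v >= threshold:
            spikes.append(1)  # action potential emitted
            v = resting       # reset after the pulse
        else:
            spikes.append(0)
    return spikes


print(integrate_and_fire([0.3] * 10))   # strong input: periodic pulses
print(integrate_and_fire([0.05] * 10))  # weak input: never reaches threshold
```

With the leak, a constant input of 0.05 settles near 0.5 and never fires, while 0.3 fires every fourth step: the output is an all-or-nothing, non-linear function of the input strength.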

PDF file

... (DNs) to any Finite Automaton (FA), a “common-denominator” model of all practical Symbolic Networks (SNs). From this FA, we can see what is meant by “abstraction”. This mapping explains why such a new class of neural networks abstracts at least as well as the corresponding SNs. This seems to indicate ...

Dear Notetaker:

...
Can get info about the size of an object, direction of motion, depth perception, and the beginnings of color vision processing
o In the retina and LGN there are neurons that are classified as M-like, P-like, or K-like, with different anatomical features and functions
o In V1 the info from P, K, and M cells ...

intelligent encoding

... A: Simple reconstruction network (RCN). B: RCN with sparse code shrinkage noise filtering and non-negative matrix factorization. C: RCN hierarchy. (See text.) MMI plays an important role in noise filtering. There are two different sets of afferents to the MMI layer: one carries the error, whereas th ...
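The caption names non-negative matrix factorization (NMF) as one component of the reconstruction network. As a generic illustration of NMF alone (not of the RCN or its MMI layer), here is a minimal sketch of the well-known multiplicative update rules for the Frobenius-norm objective, assuming NumPy; the function name `nmf`, the rank, the iteration count and the small `eps` guard are illustrative choices.

```python
import numpy as np


def nmf(V, rank, iters=200, seed=0, eps=1e-9):
    """Factor a non-negative matrix V (m x n) as W @ H with W, H >= 0."""
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, rank)) + eps
    H = rng.random((rank, n)) + eps
    errors = []
    for _ in range(iters):
        # Multiplicative updates: ratios of non-negative terms keep W and H non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        # Track the reconstruction error ||V - WH||_F.
        errors.append(float(np.linalg.norm(V - W @ H)))
    return W, H, errors


V = np.random.default_rng(1).random((6, 5))
W, H, errors = nmf(V, rank=2)
print(errors[0], errors[-1])
```

The reconstruction error is non-increasing under these updates, and because every factor stays non-negative, each column of `V` is approximated as an additive combination of the columns of `W`.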

50 years of artificial intelligence

... Their paper introduces an automated system for property valuation that combines artificial neural network models with a geographic information system. The artificial neural network models used in this work are the multilayer perceptron, the radial basis function, and Kohonen’s maps. A third applicatio ...

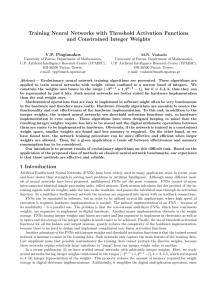

Training Neural Networks with Threshold Activation Functions and Constrained Integer Weights

... and have shown their strength in solving hard problems in Artificial Intelligence. Although many different models of neural networks have been proposed, multilayered FNNs are the most common. FNNs consist of many interconnected identical simple processing units, called neurons. Each neuron calculate ...
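To make "threshold activation functions and constrained integer weights" concrete, the sketch below runs the forward pass of a two-layer feed-forward network whose neurons use a step activation and whose weights and biases are small integers. The weights are hand-picked to compute XOR (the hidden units act as OR and AND); they are not produced by the training method the paper proposes, and all names are hypothetical.

```python
from typing import List, Sequence


def step(z: int) -> int:
    """Threshold (Heaviside) activation: fire when the net input is non-negative."""
    return 1 if z >= 0 else 0


def layer(x: Sequence[int], weights: List[List[int]], biases: List[int]) -> List[int]:
    """One layer of threshold neurons; each sums its weighted inputs plus a bias."""
    return [step(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


# Hand-picked integer parameters: hidden unit 1 computes OR, hidden unit 2 computes AND,
# and the output unit fires for "OR and not AND", i.e. XOR.
HIDDEN_W, HIDDEN_B = [[1, 1], [1, 1]], [-1, -2]
OUT_W, OUT_B = [[1, -1]], [-1]


def xor_net(x1: int, x2: int) -> int:
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

Because the step function has zero gradient almost everywhere, such networks cannot be trained directly with ordinary back-propagation, which is why dedicated training methods for threshold units with integer weights are of interest.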

DXNN: Evolving Complex Organisms in Complex Environments

... Yet still there are a number of problems that continue to plague even the most advanced of TWEANN systems: NEAT[2], CoSyNe[3], HyperNEAT[4], EANT[5], and EANT2[6]. Among these problems are neural bloating, premature convergence, low population diversity, and the curse of dimensionality. The nove ...

Multilayer perceptrons

... Many problems can be described as pattern recognition, for example voice recognition, face recognition and optical character recognition ...

temporal visual event recognition

... that learned to represent different timescales was presented. A key aspect of their ability to learn time was their short-term synaptic plasticity. This is the first time that the effect of internally generated expectation has been studied for a biologically-plausible (e.g., each neuron adapts via ...

Information Integration and Decision Making in Humans and

... In short, idealized neurons using the logistic activation function can compute the probability of the hypothesis they stand for, given the evidence represented in their inputs, if their weights and biases have the appropriate values. ...
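A worked case shows what "appropriate values" for the weights and bias can mean. Assume (this is an illustrative assumption, not from the source) a single scalar piece of evidence x whose likelihood is Gaussian with mean mu1 under the hypothesis and mu0 otherwise, with a shared standard deviation sigma and prior probability prior1. The log-odds are then linear in x, so a logistic neuron with weight w = (mu1 - mu0) / sigma² and bias b = (mu0² - mu1²) / (2 sigma²) + ln(prior1 / (1 - prior1)) outputs exactly the Bayesian posterior. The sketch checks this numerically; all function names are hypothetical.

```python
import math


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def posterior_bayes(x: float, mu1: float, mu0: float, sigma: float, prior1: float) -> float:
    """P(hypothesis | x) computed directly with Bayes' rule."""
    p1 = gaussian_pdf(x, mu1, sigma) * prior1
    p0 = gaussian_pdf(x, mu0, sigma) * (1 - prior1)
    return p1 / (p1 + p0)


def posterior_logistic(x: float, mu1: float, mu0: float, sigma: float, prior1: float) -> float:
    """The same posterior from one logistic neuron with the matching weight and bias."""
    w = (mu1 - mu0) / sigma ** 2
    b = (mu0 ** 2 - mu1 ** 2) / (2 * sigma ** 2) + math.log(prior1 / (1 - prior1))
    return 1 / (1 + math.exp(-(w * x + b)))


for x in (-1.0, 0.5, 2.0):
    print(x, posterior_bayes(x, 1.0, -1.0, 1.0, 0.3),
          posterior_logistic(x, 1.0, -1.0, 1.0, 0.3))
```

The two columns agree up to floating-point rounding. With other likelihood families (or unequal variances) the log-odds are no longer linear in x, and a single logistic neuron would only approximate the posterior.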

APPLICATION OF ARTIFICIAL NEURAL NETWORKS TO

... J. Jerez, L. Franco, E. Alba, A. Llombart-Cussac, A. Lluch, N. Ribelles, B. Munárriz and M. Martín. Improvement of Breast Cancer Relapse Prediction in High Risk Intervals Using Artificial Neural Networks. Breast Cancer Research and Treatment, 94, pp. 265–272 ...

On the Non-Existence of a Universal Learning Algorithm for

... (see Fig. 1). It is just a three-step construction. First, each variable x_i of D is represented in N by a small sub-network. The structure of these modules is quite simple (left side of Fig. 1). Note that only the self-recurrent connection for the unit at the bottom of these modules is "weighted" b ...

Neurons - Scott Melcher

... tip of the sending neuron and the dendrite or cell body of the receiving cell is called a synapse. The tiny gap at this junction is called the synaptic gap or cleft. When neurons are firing and action potentials are traveling down an axon, neurotransmitters are sent across the synapse. Neurotransmi ...