Chapter 3 Experiments with a Single Factor: The Analysis

... • Linear statistical model • One-way or single-factor analysis of variance model • Completely randomized design: the experiments are performed in random order so that the environment in which the treatments are applied is as uniform as possible. • For hypothesis testing, the model errors are assumed ...

Two Groups Too Many? Try Analysis of Variance (ANOVA)

... the Logic in Comparing the Mean • If two or more populations have identical averages, the averages of random samples selected from those populations ought to be fairly similar as well. • Sample statistics vary from one sample to the next; however, large differences among the sample averages would caus ...

One Way ANOVA

... x_ij be the j-th measurement of the response variable for treatment i; then we assume that x_ij = µ_i + e_ij, where µ_i is the mean of the response variable for experimental units treated with treatment i, and e_ij is the error in this measurement, or the part in the measurement that cannot ...
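
The model in this snippet can be illustrated with a small sketch: each treatment mean µ_i is estimated by the sample mean of its group, and each error e_ij by the residual x_ij minus that mean. The data and the names `data`, `mu_hat`, and `residuals` are hypothetical and chosen only for illustration.

```python
from statistics import mean

# Hypothetical data: three treatments, four measurements x_ij each.
data = {
    "A": [20.0, 22.0, 19.0, 23.0],
    "B": [25.0, 27.0, 26.0, 26.0],
    "C": [30.0, 29.0, 31.0, 30.0],
}

# Estimate each treatment mean mu_i by the sample mean of its group.
mu_hat = {treatment: mean(xs) for treatment, xs in data.items()}

# Estimate each error e_ij by the residual x_ij - mu_hat_i.
residuals = {
    treatment: [x - mu_hat[treatment] for x in xs]
    for treatment, xs in data.items()
}

for treatment in data:
    print(treatment, mu_hat[treatment], residuals[treatment])
```

By construction, the residuals within each treatment sum to zero, so they carry only the variation that the treatment means do not explain.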

power point - personal.stevens.edu

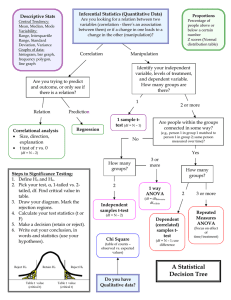

... The F distribution is asymmetrical and has two distinct degrees of freedom. This was discovered by Fisher, hence the label “F.” Once again, what we do is calculate the value of F for our sample data and then look up the corresponding area under the curve in ...

Chapter 12.1

... The F distribution is asymmetrical and has two distinct degrees of freedom. This was discovered by Fisher, hence the label “F.” Once again, what we do is calculate the value of F for our sample data and then look up the corresponding area under the curve in Table E. ...
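
As a rough sketch of the procedure described here, the code below computes F for a small sample and then the area under the F curve to the right of it, which is what a table lookup approximates. The data and function names are hypothetical. The tail-area formula is a closed form that holds only when the numerator degrees of freedom equal 2 (that is, exactly three groups); it is not a general replacement for an F table.

```python
from statistics import mean

def f_statistic(groups):
    """Return (F, df_between, df_within) for a one-way layout."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

def f_tail_area_df2(f, df_within):
    """Upper-tail area of F(2, df_within).

    Assumption: numerator df is exactly 2 (three groups); the closed
    form below does not apply to other numerator degrees of freedom.
    """
    return (1.0 + 2.0 * f / df_within) ** (-df_within / 2.0)

# Hypothetical data: three groups of four observations.
groups = [
    [20.0, 22.0, 19.0, 23.0],
    [25.0, 27.0, 26.0, 26.0],
    [30.0, 29.0, 31.0, 30.0],
]
f_value, df_b, df_w = f_statistic(groups)
p_value = f_tail_area_df2(f_value, df_w)
print(f"F({df_b}, {df_w}) = {f_value:.3f}, tail area = {p_value:.2e}")
```

For other numbers of groups, a statistics library or a printed F table would be needed for the tail area; the F computation itself is unchanged.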

AP Statistics: ANOVA Section 2

... when differences exist, not which specific groups differ. The goal of this section is to adapt the inference procedures of section 13.1 to use the results of the ANOVA analysis. This will allow us to find a confidence interval for the mean of any group, find a confidence interval for a difference in ...

Chapter 5: Regression

... CONCLUSION: the samples in (b) contain a larger amount of variation among the sample means relative to the amount of variation within the samples, so ANOVA will find more significant differences among the means in (b) – assuming equal sample sizes here for (a) and (b) – Note: larger samples will fin ...
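
The conclusion in this snippet can be checked numerically. The sketch below uses two hypothetical data sets, labeled (a) and (b) to mirror the snippet, with identical group means and equal sample sizes but different spread within the groups. The numbers are invented for illustration and are not the data from the original slides.

```python
from statistics import mean

def mean_squares(groups):
    """Return (MS_between, MS_within) for a one-way layout."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return ss_between / (k - 1), ss_within / (n_total - k)

# Hypothetical samples: group means are 5, 7, 9 in both cases.
samples_a = [[1, 5, 9], [3, 7, 11], [5, 9, 13]]   # wide spread within groups
samples_b = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]    # tight spread within groups

for label, samples in (("a", samples_a), ("b", samples_b)):
    ms_between, ms_within = mean_squares(samples)
    f_ratio = ms_between / ms_within
    print(f"({label}) MS_between={ms_between:.2f} "
          f"MS_within={ms_within:.2f} F={f_ratio:.2f}")
```

The between-group mean square is the same in both cases, so the F ratio differs only because the within-group variation is smaller in (b), which makes the same differences among means stand out more.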

Hypothesis Testing - St. Cloud State University

... • Will tell whether the values significantly vary across the groups, but not precisely which group is significantly different from the others. • If significance is found, post tests must be computed to determine where the differences are. ...

Chapter 10 Analysis of Variance (Hypothesis Testing III)

... Independent Random Samples; level of measurement (LOM) is interval-ratio (I-R) ...

ANOVA review questions

... What would happen if instead of using an ANOVA to compare 10 groups, you performed multiple t-tests? a. Nothing, there is no difference between using an ANOVA and using a t-test. b. Nothing serious, except that making multiple comparisons with a t-test requires more computation than doing a single AN ...

Analysis of variance

Analysis of variance (ANOVA) is a collection of statistical models used to analyze the differences among group means and their associated procedures (such as "variation" among and between groups), developed by statistician and evolutionary biologist Ronald Fisher. In the ANOVA setting, the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether or not the means of several groups are equal, and therefore generalizes the t-test to more than two groups. As doing multiple two-sample t-tests would result in an increased chance of committing a statistical type I error, ANOVAs are useful for comparing (testing) three or more means (groups or variables) for statistical significance.
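
The inflation of the type I error rate under multiple t-tests can be sketched with a short calculation. The function name `familywise_error_rate` and the 0.05 significance level are assumptions for illustration. The formula treats the pairwise tests as independent, which is only a rough approximation because pairwise comparisons share groups.

```python
from math import comb

def familywise_error_rate(n_groups, alpha=0.05):
    """Return (number of pairwise tests, approximate chance of at least
    one false positive), assuming independent tests at level alpha."""
    n_tests = comb(n_groups, 2)          # every pair of groups is compared once
    return n_tests, 1.0 - (1.0 - alpha) ** n_tests

for k in (3, 5, 10):
    n_tests, fwer = familywise_error_rate(k)
    print(f"{k} groups: {n_tests} t-tests, "
          f"chance of at least one type I error ~ {fwer:.3f}")
```

Under this approximation, comparing 10 groups pairwise takes 45 tests and the chance of at least one false positive is roughly 0.90, whereas a single ANOVA F test keeps the overall type I error rate at the chosen level.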