A Novel Method for Overlapping Clusters

... unlabeled input vectors into clusters such that points within the cluster are more similar to each other than vectors belonging to different clusters [4]. The clustering methods are of five types: hierarchical clustering, partitioning clustering, density-based clustering, grid-based clustering and m ...

... unlabeled input vectors into clusters such that points within the cluster are more similar to each other than vectors belonging to different clusters [4]. The clustering methods are of five types: hierarchical clustering, partitioning clustering, density-based clustering, grid-based clustering and m ...

Improved visual clustering of large multi

... Subspace clustering refers to approaches that apply dimensionality reduction before clustering the data. Different approaches for dimensionality reduction have been largely used, such as Principal Components Analysis (PCA) [12], Fastmap [7], Singular Value Decomposition (SVD) [17], and Fractal-based ...

... Subspace clustering refers to approaches that apply dimensionality reduction before clustering the data. Different approaches for dimensionality reduction have been largely used, such as Principal Components Analysis (PCA) [12], Fastmap [7], Singular Value Decomposition (SVD) [17], and Fractal-based ...

Comparing K-value Estimation for Categorical and Numeric Data

... whether to split a k-means center into two centers. We present a new statistic for determining whether data are sampled from a Gaussian distribution, which we call the G-means statistic. We also present a new Heuristical novel method for converting categorical data into numeric data. We describe exa ...

... whether to split a k-means center into two centers. We present a new statistic for determining whether data are sampled from a Gaussian distribution, which we call the G-means statistic. We also present a new Heuristical novel method for converting categorical data into numeric data. We describe exa ...

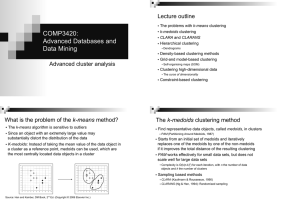

COMP3420: dvanced Databases and Data Mining

... Ordering Points To Identify the Clustering Structure Produces a special order of the database with respect to its density-based clustering structure This cluster-ordering contains information equivalent to the density-based clusterings corresponding to a broad range of parameter settings Goo ...

... Ordering Points To Identify the Clustering Structure Produces a special order of the database with respect to its density-based clustering structure This cluster-ordering contains information equivalent to the density-based clusterings corresponding to a broad range of parameter settings Goo ...

An Educational Data Mining System for Advising Higher Education

... performance. Md. Hedayetul Islam Shovon and Mahfuza Haque [5] implemented a k-means cluster algorithm. The main goal of their study is to help both the instructors and the students to improve the quality of the education by dividing the students into groups according to their characteristics using t ...

... performance. Md. Hedayetul Islam Shovon and Mahfuza Haque [5] implemented a k-means cluster algorithm. The main goal of their study is to help both the instructors and the students to improve the quality of the education by dividing the students into groups according to their characteristics using t ...

Unsupervised naive Bayes for data clustering with mixtures of

... The usual way of modeling data clustering in a probabilistic approach is to add a hidden random variable to the data set, i.e., a variable whose value has been missed in all the records. This hidden variable, normally referred to as the class variable, will reflect the cluster membership for every c ...

... The usual way of modeling data clustering in a probabilistic approach is to add a hidden random variable to the data set, i.e., a variable whose value has been missed in all the records. This hidden variable, normally referred to as the class variable, will reflect the cluster membership for every c ...

A Comparative Analysis of Density Based Clustering

... DBSCAN's definition of a cluster is based on the notion of density reach ability. Basically, a point q is directly density-reachable from a point p if it is not farther away than a given distance є (i.e., is part of its є -neighborhood) and if p is surrounded by sufficiently many points such that on ...

... DBSCAN's definition of a cluster is based on the notion of density reach ability. Basically, a point q is directly density-reachable from a point p if it is not farther away than a given distance є (i.e., is part of its є -neighborhood) and if p is surrounded by sufficiently many points such that on ...

Lecture 13

... - Principal components are orthogonal to each other, however, biological data are not - Principal components are linear combinations of original data - Prior knowledge is important - PCA is not clustering! ...

... - Principal components are orthogonal to each other, however, biological data are not - Principal components are linear combinations of original data - Prior knowledge is important - PCA is not clustering! ...

Quality Design Based on SAS/EM

... used. In SAS/STAT , there are 11 kinds of hierarchical clustering algorithms involved in CLUSTER procedure step and a dynamic clustering algorithm involved in FASTCLUS procedure step, but the clustering algorithms based on artificial neural network are absent. Therefore we use SAS/IML ...

... used. In SAS/STAT , there are 11 kinds of hierarchical clustering algorithms involved in CLUSTER procedure step and a dynamic clustering algorithm involved in FASTCLUS procedure step, but the clustering algorithms based on artificial neural network are absent. Therefore we use SAS/IML ...

Density-Based Clustering Method

... Lemma 1:Let p be a point in D and |NEps(p)| ≥ MinPts. Then the set O = {o | o ∈D and o is density-reachable from p wrt. Eps and MinPts} is a cluster wrt. Eps and MinPts. Lemma 2: Let C be a cluster wrt. Eps and MinPts and let p be any point in C with |NEps(p)| ≥ MinPts. Then C equals to the set O = ...

... Lemma 1:Let p be a point in D and |NEps(p)| ≥ MinPts. Then the set O = {o | o ∈D and o is density-reachable from p wrt. Eps and MinPts} is a cluster wrt. Eps and MinPts. Lemma 2: Let C be a cluster wrt. Eps and MinPts and let p be any point in C with |NEps(p)| ≥ MinPts. Then C equals to the set O = ...

Breast Cancer Prediction using Data Mining Techniques

... Abstract—Cancer is the most central element for death around the world. In 2012, there are 8.2 million cancer demise worldwide and future anticipated that would have 13 million death by growth in 2030.The earlier forecast and location of tumor can be useful in curing the illness. So the examination ...

... Abstract—Cancer is the most central element for death around the world. In 2012, there are 8.2 million cancer demise worldwide and future anticipated that would have 13 million death by growth in 2030.The earlier forecast and location of tumor can be useful in curing the illness. So the examination ...

Topic 5

... This is because of the “dimensionality curse”; a point could be as close to its cluster as to the other clusters. For such cases, clustering could be more meaningful in a subset of the full-dimensionality, where the rest of the dimensions are “noise” to the specific cluster. Some clustering techniqu ...

... This is because of the “dimensionality curse”; a point could be as close to its cluster as to the other clusters. For such cases, clustering could be more meaningful in a subset of the full-dimensionality, where the rest of the dimensions are “noise” to the specific cluster. Some clustering techniqu ...

Cluster Analysis: Basic Concepts and Algorithms What is Cluster

... Select a first point as the centroid of all points. Then, select (K1) most widely separated points – Problem: can select outliers – Solution: Use a sample of points ...

... Select a first point as the centroid of all points. Then, select (K1) most widely separated points – Problem: can select outliers – Solution: Use a sample of points ...

IADIS Conference Template

... on the approach of calculating membership degrees in each algorithm. We will sort the clusters based on their degree of importance for each data point or the ascending order of membership degrees in each row. For each session, the number of pages recommended from each cluster is determined by the me ...

... on the approach of calculating membership degrees in each algorithm. We will sort the clusters based on their degree of importance for each data point or the ascending order of membership degrees in each row. For each session, the number of pages recommended from each cluster is determined by the me ...

Document

... Each internal node of the tree partitions the data into groups based on a partitioning attribute, and a partitioning condition for the node ...

... Each internal node of the tree partitions the data into groups based on a partitioning attribute, and a partitioning condition for the node ...