A Taxonomy Framework for Unsupervised Outlier Detection

... These real-life applications show that outlier detection is a critical part of data analysis. Outlier detection rests on a widely accepted assumption: anomalous data points are considerably fewer than normal ones in a data set. Thus, ...
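
Because outliers are assumed to be a small minority, even a simple detector can treat the bulk of the data as "normal". The sketch below is an illustration we chose, not a method from this taxonomy. It flags values whose robust z-score, computed from the median and the median absolute deviation (MAD), exceeds a cutoff. The helper name `robust_outliers` and the cutoff of 3.5 are assumptions. The median and MAD stay reliable only while outliers make up less than half of the data, which is exactly the minority assumption stated above.

```python
from statistics import median


def robust_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    Hypothetical helper: the median and the median absolute deviation (MAD)
    describe the "normal" bulk of the data only while outliers are a minority.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread around the median: flag anything that differs from it.
        return [i for i, v in enumerate(values) if v != med]
    # 0.6745 rescales the MAD so the score is comparable to an ordinary
    # z-score when the normal data are Gaussian.
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


data = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 42.0]
print(robust_outliers(data))  # [6]
```

If most of the data were anomalous, the median itself would shift toward the anomalies and the method would no longer separate the two groups.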

An Introduction to Cluster Analysis for Data Mining

... in a group will be similar (or related) to one another and different from (or unrelated to) the objects in other groups. The greater the similarity (or homogeneity) within a group and the greater the difference between groups, the “better” or more distinct the clustering. The definition of what const ...
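
One simple way to make "similarity within a group" and "difference between groups" concrete is to compare average distances. The sketch below is illustrative only: the helper name `within_between`, the Euclidean metric, and the use of plain averages are our assumptions, not definitions from the source. It needs at least two clusters and at least one cluster with two or more points.

```python
from itertools import combinations, product
from math import dist
from statistics import mean


def within_between(clusters):
    """Return (mean within-cluster distance, mean between-cluster distance).

    `clusters` is a list of clusters, each a list of coordinate tuples.
    A smaller first value and a larger second value indicate a more
    distinct clustering.
    """
    # All pairs of points that share a cluster.
    within = [dist(p, q)
              for cluster in clusters
              for p, q in combinations(cluster, 2)]
    # All pairs of points taken from two different clusters.
    between = [dist(p, q)
               for ca, cb in combinations(clusters, 2)
               for p, q in product(ca, cb)]
    return mean(within), mean(between)


clusters = [[(0, 0), (0, 1)], [(5, 5), (5, 6)]]
w, b = within_between(clusters)
print(w, b)
```

Here the within-cluster average is 1.0 and the between-cluster average is about 7.09, so the two groups are well separated.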

Integrating Data Mining with Relational DBMS: A Tightly

... We also assume the existence of the functions add and replace, with their usual meanings, for sets, and the function append for ordered sets. The nondeterministic algorithm is shown in Fig. 2. The number of query flock plans that this nondeterministic algorithm can generate is rather large. In fact, with no ...

Document

... In the next few slides we will discuss the four most common distortions and how to remove them. (c) Eamonn Keogh ...

Neural Networks as Artificial Memories for Association Rule Mining

... on investigating how to count patterns or itemsets (a task normally performed by scanning the high-dimensional space defined by the original data for those patterns) through decoding the knowledge embedded in an auto-associative memory and a self-organising ma ...
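
For contrast with the neural-memory approach, the conventional counting this snippet refers to can be sketched as a direct scan over the data. This is a minimal brute-force illustration under our own assumptions (transactions represented as Python sets, and a hypothetical helper `count_itemsets`). It is not the method proposed in the paper.

```python
from collections import Counter
from itertools import combinations


def count_itemsets(transactions, size):
    """Count how many transactions contain each itemset of the given size.

    Brute-force baseline: every transaction is scanned and every
    size-k subset of its items is enumerated and tallied.
    """
    counts = Counter()
    for t in transactions:
        # Sorting gives each itemset one canonical tuple form.
        for itemset in combinations(sorted(set(t)), size):
            counts[itemset] += 1
    return counts


transactions = [{"bread", "milk"},
                {"bread", "butter", "milk"},
                {"butter", "milk"}]
pairs = count_itemsets(transactions, 2)
print(pairs[("bread", "milk")])  # 2
```

The cost of this scan grows with the number of transactions and, for large itemset sizes, with the number of subsets per transaction, which is the expense the neural-memory approach aims to avoid.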

1.5. Frequent sequence mining in data streams

... classification errors made by this method using standard statistical methods. Therefore, the proposed method can be employed without extensive empirical performance evaluations that are necessary for other state-of-the-art approximate methods. Multiple Re-sampling Method (MRM) is an improved versi ...

CHAPTER 25 MULTI-OBJECTIVE ALGORITHMS FOR ATTRIBUTE

... mined. Each gene can take on the value 1 or 0, indicating that the corresponding attribute occurs or not (respectively) in the candidate subset of selected attributes. 4.1.2. Fitness Function. The fitness (evaluation) function measures the quality of a candidate attribute subset represented by an indi ...
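
The binary encoding described above can be sketched as follows. This is a minimal illustration under our own assumptions: the attribute names and the helpers `decode` and `random_chromosome` are hypothetical, and no fitness function is shown because the snippet does not specify one.

```python
import random


def decode(chromosome, attributes):
    """Map a 0/1 chromosome to the subset of attributes whose gene is 1."""
    if len(chromosome) != len(attributes):
        raise ValueError("one gene per attribute is required")
    return [a for gene, a in zip(chromosome, attributes) if gene == 1]


def random_chromosome(n_genes, rng):
    """Create a random individual with one 0/1 gene per attribute."""
    return [rng.randint(0, 1) for _ in range(n_genes)]


attributes = ["age", "income", "zip", "score"]  # hypothetical attributes
print(decode([1, 0, 0, 1], attributes))  # ['age', 'score']

rng = random.Random(0)
individual = random_chromosome(len(attributes), rng)
print(individual, decode(individual, attributes))
```

A fitness function would then evaluate the decoded subset, for example by training a classifier on only those attributes.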

Hierarchical Clustering Algorithms in Data Mining

... assemble the set of data items into clusters of similar identity. Clustering is an example of unsupervised learning because there are no predefined classes. The quality of a clustering can be measured by high intra-cluster similarity and low inter-cluster similarity. Nowadays, clustering becomes o ...

Frequent pattern analysis for decision making in big data

... classification errors made by this method using standard statistical methods. Therefore, the proposed method can be employed without extensive empirical performance evaluations that are necessary for other state-of-the-art approximate methods. Multiple Re-sampling Method (MRM) is an improved versi ...

slides

... draws from a standard Gaussian distribution. Quantitative evaluation: the recovery error is defined as the relative difference in 2-norm between the estimated sparse coefficients and the ground truth ...
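
Written out, with x for the ground-truth coefficient vector and x_hat for the estimate (our notation), the recovery error defined above is ||x_hat - x||_2 / ||x||_2. The sketch below is a plain-Python illustration of that formula. The helper names `norm2` and `relative_recovery_error` are hypothetical, and the ground truth is assumed to be nonzero.

```python
from math import sqrt


def norm2(v):
    """Euclidean (2-)norm of a vector given as a sequence of numbers."""
    return sqrt(sum(x * x for x in v))


def relative_recovery_error(estimate, truth):
    """Relative 2-norm error ||estimate - truth||_2 / ||truth||_2.

    Assumes `truth` is not the zero vector.
    """
    if len(estimate) != len(truth):
        raise ValueError("vectors must have the same length")
    return norm2([e - t for e, t in zip(estimate, truth)]) / norm2(truth)


print(relative_recovery_error([1.0, 0.0, 2.0], [1.0, 0.0, 2.0]))  # 0.0
print(relative_recovery_error([0.0, 0.0, 0.0], [3.0, 4.0, 0.0]))  # 1.0
```

Dividing by the norm of the ground truth makes the error scale-free, so an all-zero estimate always scores 1.0 regardless of the magnitude of the true coefficients.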

$2^{nR}$, $n$

... The description of an arbitrary real number requires an infinite number of bits, so a finite representation of a continuous random variable can never be perfect. To define the “goodness” of a representation of a source, we use a distortion measure, which is a measure of distance between the random variable and i ...
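
As a concrete illustration, the squared-error distortion d(x, x_hat) = (x - x_hat)^2 is one common choice of distortion measure. The sketch below pairs it with a one-bit (sign) quantizer. The quantizer, its reconstruction levels of plus and minus 1, and the helper names are our assumptions, chosen only to show that a finite representation of real values leaves nonzero distortion.

```python
def squared_error(x, x_hat):
    """Squared-error distortion d(x, x_hat) = (x - x_hat) ** 2."""
    return (x - x_hat) ** 2


def one_bit_quantizer(x, level=1.0):
    """Represent a real number with a single bit (its sign).

    The reconstruction points +level and -level are an arbitrary choice.
    """
    return level if x >= 0 else -level


def average_distortion(samples, quantizer, distortion):
    """Mean distortion between each sample and its quantized version."""
    return sum(distortion(x, quantizer(x)) for x in samples) / len(samples)


samples = [0.3, -1.2, 2.0, -0.5]
print(average_distortion(samples, one_bit_quantizer, squared_error))
```

For these four samples the average distortion is about 0.445: with only two reconstruction points, most real values cannot be reproduced exactly.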

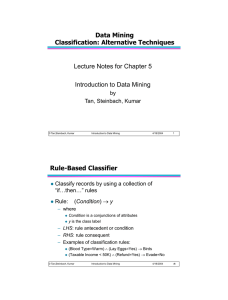

Rule-Based Classifier

...
– The set of stored records
– A distance metric to compute the distance between records
– The value of k, the number of nearest neighbors to retrieve ...
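
The three ingredients listed above map directly onto the parameters of a small function. This is a minimal sketch under our own assumptions: records are (features, label) pairs, Euclidean distance (`math.dist`) is the default metric, and the helper name `knn_classify` is hypothetical.

```python
from collections import Counter
from math import dist


def knn_classify(records, query, k=3, metric=dist):
    """Classify `query` by majority vote among its k nearest stored records.

    records: list of (feature_tuple, label) pairs (the stored records)
    metric:  distance function between two feature tuples
    k:       number of nearest neighbors to retrieve
    """
    neighbors = sorted(records, key=lambda r: metric(r[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


records = [((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
           ((6, 6), "B"), ((6, 7), "B"), ((7, 6), "B")]
print(knn_classify(records, (2, 2), k=3))  # A
```

Passing a different `metric`, such as Manhattan distance, changes only how "nearest" is measured; the stored records and k stay the same.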

K-nearest neighbors algorithm

In pattern recognition, the k-nearest neighbors algorithm (k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression:

In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors, with the object being assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, the object is simply assigned to the class of that single nearest neighbor.

In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.

For both classification and regression, it can be useful to weight the contributions of the neighbors so that nearer neighbors contribute more to the average than more distant ones. For example, a common weighting scheme gives each neighbor a weight of 1/d, where d is the distance to the neighbor.

The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This can be thought of as the training set for the algorithm, though no explicit training step is required.

A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm is unrelated to, and should not be confused with, k-means, another popular machine learning technique.
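
The regression variant and the 1/d weighting scheme described above can be sketched in a few lines. Assumptions: records are (feature_tuple, value) pairs, distance is Euclidean via `math.dist`, and the helper `knn_regress` is hypothetical. Returning an exact match's value when a distance is zero is our own choice, since 1/d is undefined there.

```python
from math import dist


def knn_regress(records, query, k=3, weighted=False):
    """Predict a value for `query` from its k nearest (features, value) records.

    Unweighted: plain average of the neighbors' values.
    Weighted:   each neighbor gets weight 1/d, where d is its distance.
    """
    # Pair each stored record's distance to the query with its target value.
    scored = sorted((dist(features, query), value) for features, value in records)
    neighbors = scored[:k]
    if not weighted:
        return sum(value for _, value in neighbors) / len(neighbors)
    for d, value in neighbors:
        if d == 0:
            # 1/d is undefined at d = 0; use the exact match's value.
            return value
    weights = [1 / d for d, _ in neighbors]
    total = sum(w * value for w, (_, value) in zip(weights, neighbors))
    return total / sum(weights)


records = [((1,), 10.0), ((4,), 40.0), ((10,), 100.0)]
print(knn_regress(records, (2,), k=2))                 # 25.0
print(knn_regress(records, (2,), k=2, weighted=True))  # 20.0
```

With weighting, the neighbor at distance 1 counts twice as much as the one at distance 2, which pulls the prediction from 25.0 toward the nearer value of 10.0.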