2012-02

... realize that the response variable has only two outcomes. Assumptions of linear regression not valid. Linear regression may lead to predictions that are negative or greater than one. We are actually trying to model the probability of a success - the results must be values between 0 and 1. Introduce ...

PEQWS_Mod03_Prob10_v02

... The first step in this problem is to pick the best approach to the problem. We can certainly solve this by simply writing KVL and KCL and Ohm’s Law expressions until we can solve, but it is better to take a systematic approach. Our two primary systematic approaches are the Node-Voltage Method, and t ...

HW 2 - Marriott School

... data cover 39 consecutive weeks and isolate the area around Boston. The variables in this collection are shares. Marketing research often describes the level of promotion in terms of voice. In place of the level of spending, voice is the share of advertising devoted to a specific product. The column ...

The Efficient Exam

... • Gini’s Mean Difference can be decomposed in a way that makes the decomposition of the variance a special case. • This way one can find the implicit assumptions behind the variance. • Properties described in a 540-page book • Entitled “The Gini Methodology” by ...

The Optimization Problem is

... The largest or smallest value of the objective function is called the optimal value, and a collection of values of x, y, z, . . . that gives the optimal value constitutes an optimal solution. The variables x, y, z, . . . are called the decision variables ...

Elements of Statistical Learning Printing 10 with corrections

... • Linear Models (least squares) for classification • output G = { Blue, Orange } = {0,1} ...

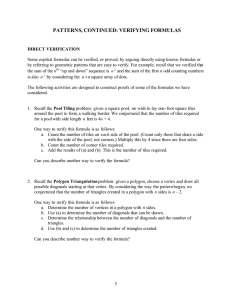

Day-1-Presentation-Equations in one variable .22 (PPT)

... and finding dimensions of plane figures. For instance, given the perimeter of a rectangular piece of land and a brief description of its dimensions, we can easily find the exact dimensions using linear equations. ...

Least squares

The method of least squares is a standard approach in regression analysis to the approximate solution of overdetermined systems, i.e., sets of equations in which there are more equations than unknowns. "Least squares" means that the overall solution minimizes the sum of the squares of the errors made in the results of every single equation.

The most important application is in data fitting. The best fit in the least-squares sense minimizes the sum of squared residuals, a residual being the difference between an observed value and the fitted value provided by a model. When the problem has substantial uncertainties in the independent variable (the x variable), then simple regression and least squares methods have problems; in such cases, the methodology required for fitting errors-in-variables models may be considered instead of that for least squares.

Least squares problems fall into two categories: linear or ordinary least squares and non-linear least squares, depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical regression analysis; it has a closed-form solution. The non-linear problem is usually solved by iterative refinement; at each iteration the system is approximated by a linear one, and thus the core calculation is similar in both cases.

Polynomial least squares describes the variance in a prediction of the dependent variable as a function of the independent variable and the deviations from the fitted curve.

When the observations come from an exponential family and mild conditions are satisfied, least-squares estimates and maximum-likelihood estimates are identical. The method of least squares can also be derived as a method of moments estimator.

The following discussion is mostly presented in terms of linear functions but the use of least-squares is valid and practical for more general families of functions. Also, by iteratively applying local quadratic approximation to the likelihood (through the Fisher information), the least-squares method may be used to fit a generalized linear model.

For the topic of approximating a function by a sum of others using an objective function based on squared distances, see least squares (function approximation).

The least-squares method is usually credited to Carl Friedrich Gauss (1795), but it was first published by Adrien-Marie Legendre.
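
As a minimal sketch of the linear case described above, the following Python example fits a straight line to an overdetermined system (four equations, two unknowns) by minimizing the sum of squared residuals. The function names `fit_line` and `sum_squared_residuals` and the data values are illustrative assumptions, not part of the original text. The example also solves the normal equations directly, as one way to see the closed-form solution of the linear problem.

```python
import numpy as np


def fit_line(x, y):
    """Return (intercept, slope) minimizing the sum of squared residuals.

    Hypothetical helper for illustration only.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Design matrix: one row per observation (equation),
    # one column per unknown (intercept, slope).
    A = np.column_stack([np.ones_like(x), x])
    coef, _, _, _ = np.linalg.lstsq(A, y, rcond=None)
    return float(coef[0]), float(coef[1])


def sum_squared_residuals(x, y, intercept, slope):
    """Sum of squared differences between observed and fitted values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    residuals = y - (intercept + slope * x)
    return float(residuals @ residuals)


# Made-up observations: more equations (4) than unknowns (2).
x = [0.0, 1.0, 2.0, 3.0]
y = [1.1, 2.9, 5.2, 6.8]

intercept, slope = fit_line(x, y)

# Closed-form alternative: solve the normal equations (A^T A) b = A^T y.
A = np.column_stack([np.ones(len(x)), np.asarray(x)])
normal_eq = np.linalg.solve(A.T @ A, A.T @ np.asarray(y))

print(intercept, slope, sum_squared_residuals(x, y, intercept, slope))
```

Solving the normal equations directly is shown only for comparison; `np.linalg.lstsq` is generally the more numerically robust choice when the design matrix is ill-conditioned.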