Bernard Hanzon and Ralf L.M. Peeters, “A Faddeev Sequence

... linear dynamical models the Fisher information matrix is in fact a Riemannian metric tensor and it can also be obtained in symbolic form by solving a number of Lyapunov and Sylvester equations. For further information on these issues the reader is referred to [9, 4, 5]. One straightforward approach ...
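
The excerpt does not say which Lyapunov and Sylvester equations arise, and the authors work in symbolic form. As a generic numerical illustration only, and not the paper's procedure, a Sylvester equation A X + X B = C can be solved by vectorization. The Lyapunov equation A X + X Aᵀ = −Q is the special case B = Aᵀ, C = −Q. The function names `solve_sylvester_kron` and `solve_lyapunov_kron` are hypothetical, and the sign convention for the Lyapunov equation is an assumption.

```python
import numpy as np

def solve_sylvester_kron(a, b, c):
    """Solve A X + X B = C for X (A: m x m, B: n x n, C: m x n).

    Uses the column-major identities vec(A X) = (I_n kron A) vec(X) and
    vec(X B) = (B^T kron I_m) vec(X). A unique solution exists when A and -B
    share no eigenvalue. Dense O((mn)^3) cost: small matrices only.
    """
    m, n = a.shape[0], b.shape[0]
    k = np.kron(np.eye(n), a) + np.kron(b.T, np.eye(m))
    x = np.linalg.solve(k, c.reshape(-1, order="F"))
    return x.reshape((m, n), order="F")

def solve_lyapunov_kron(a, q):
    """Solve the continuous Lyapunov equation A X + X A^T = -Q (assumed convention)."""
    return solve_sylvester_kron(a, a.T, -q)

# Example: a stable 2 x 2 system matrix and Q = I.
a = np.array([[-1.0, 0.5], [0.0, -2.0]])
x = solve_lyapunov_kron(a, np.eye(2))
print(np.allclose(a @ x + x @ a.T, -np.eye(2)))  # residual check, prints True
```

The Kronecker system has size mn × mn, so this approach only suits small problems. SciPy's `scipy.linalg.solve_sylvester` and `scipy.linalg.solve_continuous_lyapunov` use the Bartels–Stewart algorithm instead. Note that they solve the equations with right-hand side +Q rather than −Q.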

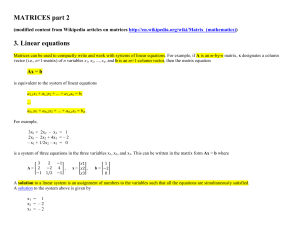

MATRICES part 2 3. Linear equations

... A variant of Gaussian elimination called Gauss–Jordan elimination can be used to find the inverse of a matrix, if it exists. If A is an n × n square matrix, row reduction can be used to compute its inverse. First, the n × n identity matrix is augmented to the right of ...
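
Standard Gauss–Jordan inversion row-reduces the augmented matrix [A | I] until the left block becomes I. The right block is then A⁻¹. A minimal sketch follows, assuming the matrix is given as a list of rows. The function name `gauss_jordan_inverse` is hypothetical. Exact `Fraction` arithmetic is used so the result is free of round-off.

```python
from fractions import Fraction

def gauss_jordan_inverse(a):
    """Invert a square matrix by row-reducing [A | I] to [I | A^-1].

    Returns None if A is singular. Uses exact Fraction arithmetic.
    """
    n = len(a)
    # Build the augmented matrix [A | I].
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            return None  # no pivot available: A is singular, no inverse exists
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so that the pivot entry becomes 1.
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Eliminate this column from every other row, above and below.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    # The left block is now I, so the right block is A^-1.
    return [row[n:] for row in aug]
```

For example, `gauss_jordan_inverse([[2, 1], [1, 1]])` returns the rows `[1, -1]` and `[-1, 2]` as `Fraction` values. A singular matrix such as `[[1, 2], [2, 4]]` yields `None`. With floating-point input, one would normally choose the largest-magnitude pivot (partial pivoting) rather than the first nonzero one.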

... A variant of Gaussian elimination called Gauss–Jordan elimination can be used for finding the inverse of a matrix, if it exists. If A is a n by n square matrix, then one can use row reduction to compute its inverse matrix, if it exists. First, the n by n identity matrix is augmented to the right of ...

Statistical Behavior of the Eigenvalues of Random Matrices

... system were in that state. (Because H is Hermitian, its eigenvalues are real.) In the case of an atomic nucleus, H is the “Hamiltonian”, and the eigenvalue E_n denotes the n-th energy level. Most nuclei have thousands of states and energy levels and are too complex to be described exactly. Instead, ...
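
The parenthetical claim that a Hermitian H has real eigenvalues is easy to check numerically. The sketch below assumes NumPy. It draws a random complex Hermitian matrix, which is a Gaussian Unitary Ensemble matrix under one common normalization. It then compares a general eigensolver with NumPy's Hermitian solver `eigvalsh`. The helper `random_hermitian`, the size 200 and the seed are illustrative choices, not taken from the source.

```python
import numpy as np

def random_hermitian(n, rng):
    """Return an n x n complex Hermitian matrix H = (G + G^H) / 2, G Gaussian."""
    g = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    return (g + g.conj().T) / 2

rng = np.random.default_rng(0)
h = random_hermitian(200, rng)

# A general (non-Hermitian) eigensolver returns complex numbers ...
general = np.linalg.eigvals(h)
# ... but their imaginary parts vanish up to round-off, since H is Hermitian.
max_imag = np.max(np.abs(general.imag))

# eigvalsh uses the Hermitian structure: real eigenvalues in ascending order.
levels = np.linalg.eigvalsh(h)
print(f"largest |Im(lambda)| from eigvals: {max_imag:.2e}")
print(f"spectrum spans [{levels[0]:.2f}, {levels[-1]:.2f}]")
```

The sorted array `levels` plays the role of the energy levels E_n in the text. Random-matrix theory studies the statistics of their spacings.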

... system were in that state. (Because H is Hermitian, its eigenvalues are real.) In the case of an atomic nucleus, H is the “Hamiltonian”, and the eigenvalue En denotes the n-th energy level. Most nuclei have thousands of states and energy levels, and are too complex to be described exactly. Instead, ...

DELFT UNIVERSITY OF TECHNOLOGY Faculty of Electrical

... for M_1 a translation along the vector (0, t_y) and M_2 a scaling relative to O with scale factors s_x and 1; for M_1 a rotation about O through θ° and M_2 a scaling relative to O with equal x-direction and y-direction scale factors s and s; for M_1 a translation along the vector (t_x, t_y) and M_2 a rotation abou ...
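
Each pair M_1, M_2 above consists of plane transformations that can be written as 3 × 3 matrices in homogeneous coordinates. The question asked about each pair is cut off in the excerpt. As an assumed illustration, the sketch below builds the matrices and forms the composite "apply M_1, then M_2". For column vectors, this composite is the product M_2 M_1. The sketch also tests whether the order of application matters. All function names are hypothetical, and rotations are taken counter-clockwise.

```python
import math

def translation(tx, ty):
    """Homogeneous 3x3 matrix of a translation along the vector (tx, ty)."""
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]

def scaling(sx, sy):
    """Scaling relative to the origin O with factors sx (x) and sy (y)."""
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]

def rotation(theta_deg):
    """Counter-clockwise rotation about O by theta_deg degrees."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def then(m1, m2):
    """Matrix of 'apply M1 first, then M2' for column vectors: M2 * M1."""
    return matmul(m2, m1)

def commutes(m1, m2, tol=1e-12):
    """True if applying M1 then M2 gives the same map as M2 then M1."""
    p, q = then(m1, m2), then(m2, m1)
    return all(math.isclose(p[i][j], q[i][j], abs_tol=tol)
               for i in range(3) for j in range(3))
```

For instance, `then(translation(0, 3), scaling(2, 1))` gives `[[2, 0, 0], [0, 1, 3], [0, 0, 1]]`. With sample values, `commutes` returns True for the first two pairs. These are a y-translation with a scaling that leaves y unchanged, and a rotation with a uniform scaling about O. It returns False for a translation paired with a rotation. The reason is that rotating after translating also rotates the translation vector.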

... for M1 a translation along the vector (0, ty) and M2 a scaling relative to O with scale factors sx and 1 for M1 a rotation about O with θ° and M2 a scaling relative to O with equal x-direction and y-direction scale factors s and s for M1 a translation along the vector (tx, ty) and M2 a rotation abou ...