Black Box Methods – Neural Networks and Support Vector

... The network topology is a blank slate that by itself has not learned anything. Like a newborn child, it must be trained with experience. As the neural network processes the input data, connections between the neurons are strengthened or weakened, similar to how a baby's brain develops as he or she ex ...

The Implementation of Artificial Intelligence and Temporal Difference

... Chess masters spend careers learning how to “evaluate” moves. Purpose: can a computer learn a good evaluation function? ...
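
The question above is what temporal-difference (TD) learning addresses. The following is a minimal sketch, not the method in the slides. It assumes a linear evaluation function over hypothetical numeric position features and shows the TD(0) idea: after each move, the value of the previous position is nudged toward the reward plus the value of the next position. The names `evaluate`, `td0_update`, `alpha` and `gamma` are illustrative.

```python
def evaluate(weights, features):
    """Linear evaluation function: V(s) = w . phi(s)."""
    return sum(w * f for w, f in zip(weights, features))


def td0_update(weights, features, next_features, reward,
               alpha=0.1, gamma=1.0, terminal=False):
    """One TD(0) step: move V(s) toward reward + gamma * V(s')."""
    next_value = 0.0 if terminal else evaluate(weights, next_features)
    td_error = reward + gamma * next_value - evaluate(weights, features)
    # For a linear evaluator, the gradient of V(s) with respect to w is phi(s).
    return [w + alpha * td_error * f for w, f in zip(weights, features)]


if __name__ == "__main__":
    # Hypothetical 2-feature positions from one game that ends in a win (reward 1).
    game = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
    weights = [0.0, 0.0]
    for _ in range(200):  # replay the same game many times
        for t in range(len(game)):
            terminal = t == len(game) - 1
            next_features = None if terminal else game[t + 1]
            reward = 1.0 if terminal else 0.0
            weights = td0_update(weights, game[t], next_features, reward,
                                 terminal=terminal)
    print([round(evaluate(weights, s), 2) for s in game])  # all close to 1.0
```

Replaying the toy game drives every position's value toward the final reward of 1.0. TD propagates the outcome backwards one position at a time. A real chess evaluator would use far richer features, or a neural network, in place of these two-element vectors.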

Quality – An Inherent Aspect of Agile Software Development

... Each node performs a similar algorithm. Each node learns ...

WEKA - WordPress.com

... computational model that tries to simulate the structure and/or functional aspects of biological neural networks. • ANN consists of an interconnected group of artificial neurons and processes information using a connectionist approach to computation [10]. • ANN is an adaptive system that can change ...

ANN Approach for Weather Prediction using Back Propagation

... Repeat phases 1 and 2 until the network is trained. Once trained, the network should provide the desired output for each of the input patterns it was trained on. The network is first initialized by setting all its weights to small random numbers, say between -1 and +1. Next, the input patt ...
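
The two-phase procedure above can be sketched as follows. This is a minimal illustration built on several assumptions:

- a single hidden layer of sigmoid units;
- a squared-error loss;
- weight updates after each pattern;
- a fixed number of epochs in place of a "trained" criterion.

The function name `train`, the learning rate `lr` and the AND task are illustrative choices, not taken from the source.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(patterns, n_hidden=2, lr=0.5, epochs=5000, seed=0):
    """Per-pattern backpropagation for an n_in-n_hidden-1 sigmoid network.

    Returns (w_hidden, w_out, history); the last entry of each weight row is a bias.
    history[k] is the squared error accumulated during epoch k.
    """
    rng = random.Random(seed)
    n_in = len(patterns[0][0])
    # Initialise every weight (and bias) to a small random number in [-1, +1].
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    history = []
    for _ in range(epochs):
        total_error = 0.0
        for x, target in patterns:
            # Phase 1: forward pass.
            xb = list(x) + [1.0]  # append the bias input
            h = [sigmoid(sum(w * xi for w, xi in zip(row, xb))) for row in w_hidden]
            hb = h + [1.0]
            y = sigmoid(sum(w * hi for w, hi in zip(w_out, hb)))
            total_error += 0.5 * (target - y) ** 2
            # Phase 2: backward pass (sigmoid derivative is y * (1 - y)).
            delta_out = (target - y) * y * (1 - y)
            delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            for j in range(n_hidden + 1):
                w_out[j] += lr * delta_out * hb[j]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_hidden[j][i] += lr * delta_hidden[j] * xb[i]
        history.append(total_error)
    return w_hidden, w_out, history


if __name__ == "__main__":
    AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    _, _, history = train(AND)
    print(f"error in first epoch: {history[0]:.3f}, last epoch: {history[-1]:.3f}")
```

A fixed epoch count keeps the sketch short. A stopping test on `history[-1]` would match "until the network is trained" more closely.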

Lecture 14 - School of Computing

... 3. Define a local neighbourhood and a learning rate 4. For each item in the training set • Find the lattice node most excited by the input • Alter the input weights for this node and those nearby such that they more closely resemble the input vector, i.e., at each node, the input weight update rule ...
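
A minimal sketch of one training step of the procedure above (a self-organizing map). It makes these assumptions:

- a 1-D lattice;
- squared Euclidean distance to pick the most excited node;
- a fixed neighbourhood radius;
- the common update rule w_k <- w_k + lr * (x - w_k).

Practical maps usually shrink the learning rate and the neighbourhood over time. That schedule is omitted here, and `som_step` and `best_matching_unit` are hypothetical names.

```python
import random


def best_matching_unit(weights, x):
    """Index of the lattice node whose weight vector is closest to x (the 'most excited' node)."""
    return min(range(len(weights)),
               key=lambda k: sum((w - xi) ** 2 for w, xi in zip(weights[k], x)))


def som_step(weights, x, lr=0.5, radius=1):
    """Move the winning node and its lattice neighbours (|k - winner| <= radius) toward x."""
    winner = best_matching_unit(weights, x)
    for k in range(len(weights)):
        if abs(k - winner) <= radius:
            # Update rule: w_k <- w_k + lr * (x - w_k)
            weights[k] = [w + lr * (xi - w) for w, xi in zip(weights[k], x)]
    return winner


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical data: 2-D points, and a 5-node 1-D lattice with random initial weights.
    data = [[rng.random(), rng.random()] for _ in range(50)]
    lattice = [[rng.random(), rng.random()] for _ in range(5)]
    for _ in range(20):
        for x in data:
            som_step(lattice, x, lr=0.1, radius=1)
    print([[round(v, 2) for v in w] for w in lattice])
```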

ppt - of Dushyant Arora

... Backpropagation Networks • Although single neurons can perform certain simple pattern-detection functions, the power of neural computation comes from neurons connected in a network structure. • For many years there was no theoretically sound algorithm for training multilayer artificial neural networks. • ...

Evolutionary Algorithm for Connection Weights in Artificial Neural

... (mapping) of a multi-dimensional input variable into another multi-dimensional variable in the output. Any input-output mapping should be possible if the neural network has enough neurons in its hidden layers. In practice, however, this is not an easy task. Presently, there is no satisfactory method to define how man ...

intelligent encoding

... which can be called the not-worth-to-communicate (non-WTC) type, is either easy to solve and easy to verify, or hard to solve and hard to verify. The other type is hard to solve but easy to verify and is, in turn, of the WTC type. For non-WTC problems, communication is simply an overhead and as suc ...

DEEP LEARNING REVIEW

... • Two hidden units having the same bias, and same incoming and outgoing weights, will always get exactly the same gradients. • They can never learn different features. • Break the symmetry by initializing the weights to have small random values. • Cannot use big weights because hidden units with big ...
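
The symmetry argument can be checked numerically. The sketch below assumes a network with one tanh hidden layer, a linear output unit and squared error. These are illustrative choices, not from the source. `hidden_unit_gradients` is a hypothetical helper that returns each hidden unit's gradients.

```python
import math
import random


def hidden_unit_gradients(w_in, b_in, w_out, x, target):
    """Gradients of 0.5 * (y - target)**2 for a tanh hidden layer and a linear output.

    w_in[j] is hidden unit j's incoming weight vector, b_in[j] its bias and
    w_out[j] its outgoing weight. Returns one (dW_in, db_in, dw_out) tuple per unit.
    """
    h = [math.tanh(sum(w * xi for w, xi in zip(w_in[j], x)) + b_in[j])
         for j in range(len(w_in))]
    y = sum(wo * hj for wo, hj in zip(w_out, h))
    err = y - target
    grads = []
    for j in range(len(w_in)):
        d_pre = err * w_out[j] * (1 - h[j] ** 2)  # backpropagate through tanh
        grads.append(([d_pre * xi for xi in x], d_pre, err * h[j]))
    return grads


if __name__ == "__main__":
    x, target = [0.5, -1.0], 1.0
    # Identical initialisation: both hidden units receive exactly the same gradients.
    same = hidden_unit_gradients([[0.1, 0.1], [0.1, 0.1]], [0.0, 0.0], [0.2, 0.2], x, target)
    print(same[0] == same[1])  # True
    # Small random initial values break the symmetry.
    rng = random.Random(0)
    w_in = [[rng.uniform(-0.1, 0.1) for _ in x] for _ in range(2)]
    b_in = [rng.uniform(-0.1, 0.1) for _ in range(2)]
    w_out = [rng.uniform(-0.1, 0.1) for _ in range(2)]
    diff = hidden_unit_gradients(w_in, b_in, w_out, x, target)
    print(diff[0] == diff[1])
```

Two units that start equal receive equal updates. They therefore stay equal after every gradient step, which is why they can never learn different features.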

Neural Networks

... Each input presented to the network has an associated desired output, which is also presented. In each learning cycle, the error between the actual and the desired output is used to adjust the weights. When the error falls to an acceptable level, learning stops. A network thus trained will have the ...
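
The error-driven loop described above can be sketched with the delta (Widrow-Hoff) rule on a single linear unit. This is one possible instance, assuming a mean-squared-error threshold as the "acceptable" error. `train_until_acceptable`, `tolerance` and the toy data (targets generated by 2*x1 - x2) are illustrative.

```python
def train_until_acceptable(samples, lr=0.1, tolerance=1e-4, max_epochs=10_000):
    """Delta-rule training of one linear unit with a bias.

    samples: list of (inputs, desired_output) pairs. Stops as soon as the mean
    squared error accumulated over an epoch falls below `tolerance`.
    Returns (weights, bias, epochs_used).
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        sq_error = 0.0
        for x, desired in samples:
            actual = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = desired - actual
            sq_error += error ** 2
            # Adjust each weight in proportion to the error and its input.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
        if sq_error / len(samples) < tolerance:  # error is acceptable: stop learning
            return weights, bias, epoch
    return weights, bias, max_epochs


if __name__ == "__main__":
    data = [((0, 0), 0.0), ((1, 0), 2.0), ((0, 1), -1.0), ((1, 1), 1.0)]
    w, b, epochs = train_until_acceptable(data)
    print([round(v, 2) for v in w], round(b, 2), epochs)
```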

1997-Learning to Play Hearts - Association for the Advancement of

... parts: the value of the trick (as in the previous case) and the next prediction. To make a decision we evaluate the network on all legal moves and select the one with the highest value. To update the network we wait until it is our turn again and calculate the value of the best move in the current p ...
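
The decision and update steps above can be sketched as follows. The sketch rests on simplifying assumptions:

- a lookup table of (state, move) values stands in for the neural network;
- the target is the plain sum of the trick value and the best next prediction, with no discounting;
- `GreedyMovePicker` is a hypothetical name, and states and moves can be any hashable values.

```python
from collections import defaultdict


class GreedyMovePicker:
    """Tabular stand-in for the value network (an assumption; the source uses a network)."""

    def __init__(self, alpha=0.1):
        self.values = defaultdict(float)  # (state, move) -> predicted value
        self.alpha = alpha

    def choose(self, state, legal_moves):
        """Evaluate every legal move and pick the one with the highest predicted value."""
        return max(legal_moves, key=lambda m: self.values[(state, m)])

    def update(self, state, move, trick_value, next_state, next_legal_moves):
        """When our turn comes again, move the old prediction toward
        trick_value + value of the best move in the new position."""
        if next_legal_moves:
            next_best = max(self.values[(next_state, m)] for m in next_legal_moves)
        else:
            next_best = 0.0  # end of the hand: nothing left to predict
        target = trick_value + next_best
        key = (state, move)
        self.values[key] += self.alpha * (target - self.values[key])


if __name__ == "__main__":
    picker = GreedyMovePicker(alpha=0.5)
    picker.values[("trick1", "2H")] = -1.0   # hypothetical learned values
    picker.values[("trick1", "QS")] = -13.0
    print(picker.choose("trick1", ["2H", "QS"]))  # 2H
```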

Artificial Neural Network

... processing elements operating in parallel whose function is determined by network structure, connection strengths, and the processing performed at computing elements or nodes. DARPA Neural Network Study (1988, AFCEA International Press, p. 60) A neural network is a massively parallel distributed pro ...

... processing elements operating in parallel whose function is determined by network structure, connection strengths, and the processing performed at computing elements or nodes. DARPA Neural Network Study (1988, AFCEA International Press, p. 60) A neural network is a massively parallel distributed pro ...

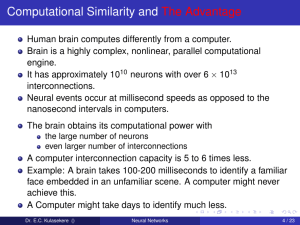

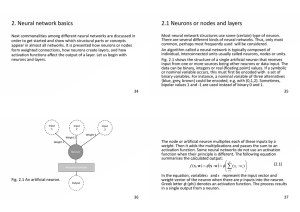

2. Neural network basics 2.1 Neurons or nodes and layers

... data can be binary, integers or real (floating-point) values. If a symbolic or nominal variable occurs, it must first be encoded with a set of binary variables. For instance, a nominal variable with three alternatives {blue, grey, brown} could be encoded, e.g., with {0,1,2}. Sometimes, bipolar values ...
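
One common way to encode a nominal variable as a set of binary variables is one-hot encoding. Each alternative gets its own 0/1 indicator, and an integer code such as 0, 1 or 2 simply says which indicator is set. A minimal sketch, in which the helper `one_hot_encoder` is hypothetical:

```python
def one_hot_encoder(categories):
    """Return a function that maps each category to a list of binary indicators."""
    index = {c: i for i, c in enumerate(categories)}  # e.g. blue -> 0, grey -> 1, brown -> 2

    def encode(value):
        vec = [0] * len(categories)
        vec[index[value]] = 1  # raises KeyError for an unseen category
        return vec

    return encode


encode_colour = one_hot_encoder(["blue", "grey", "brown"])
print(encode_colour("grey"))  # [0, 1, 0]
```

Feeding the network the raw codes 0, 1 and 2 as a single input would instead imply an ordering (blue < grey < brown) that the nominal variable does not have. The indicator vector avoids this.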

Catastrophic Forgetting in Connectionist Networks: Causes

... learning trials, knowledge of the ones facts was at 1%; by 15 trials, the network could no longer produce a single correct answer to the earlier ones problems. The network had “catastrophically” forgotten its ones sums. In a subsequent experiment that attempted to more closely match the original Barnes and Und ...

Week7

... Perceptron Learning Process • The learning process is based on training data from the real world and adjusts a weight vector over the inputs to a perceptron. • In other words, the learning process begins with random weights, then iteratively applies the perceptron to each training example, modifying ...
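
A minimal sketch of the perceptron learning process above. It assumes a threshold unit with a bias weight, the classic perceptron rule w_i <- w_i + lr * (target - output) * x_i, and the linearly separable OR function as toy data. The function names are illustrative.

```python
import random


def predict(weights, x):
    """Threshold unit: 1 if w . x (bias is the last weight) > 0, else 0."""
    net = sum(w * xi for w, xi in zip(weights, list(x) + [1.0]))
    return 1 if net > 0 else 0


def train_perceptron(examples, lr=0.1, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    n = len(examples[0][0])
    # Begin with random weights; the last entry is the bias weight.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n + 1)]
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in examples:
            out = predict(weights, x)
            if out != target:
                mistakes += 1
                xb = list(x) + [1.0]
                # Perceptron rule: w_i <- w_i + lr * (target - out) * x_i
                weights = [w + lr * (target - out) * xi for w, xi in zip(weights, xb)]
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights


if __name__ == "__main__":
    OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    w = train_perceptron(OR)
    print([predict(w, x) for x, _ in OR])  # [0, 1, 1, 1]
```

OR is linearly separable, so the perceptron convergence theorem guarantees that the loop reaches an epoch with no mistakes. On data that is not linearly separable, such as XOR, it would simply stop at `max_epochs`.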

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990).

It is a radical manifestation of the ‘sensitivity-stability’ dilemma, also called the ‘stability-plasticity’ dilemma. Specifically, these dilemmas refer to the problem of building an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e., to infer general principles, from new inputs. On the other hand, connectionist networks such as the standard backpropagation network are very sensitive to new information and can generalize to new inputs. Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, backpropagation networks are susceptible to catastrophic interference. This is a problem when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from these backpropagation models to make them more plausible as models of human memory.
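
Catastrophic interference can be reproduced even in a tiny model. The sketch below is an illustration, not McCloskey and Cohen's setup. It assumes a single linear unit trained with the delta rule and two hypothetical one-item "tasks" whose input patterns share a feature. Training on task B alone overwrites the shared weight that task A depended on. Interleaving both tasks instead finds weights that satisfy both.

```python
def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error of the linear unit on a list of (input, target) pairs."""
    return sum((t - predict(w, x)) ** 2 for x, t in data) / len(data)


def train(w, data, lr=0.1, epochs=500):
    """Delta-rule training on `data` only; earlier items are not rehearsed."""
    w = list(w)
    for _ in range(epochs):
        for x, t in data:
            err = t - predict(w, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical one-item tasks; both input patterns use the middle feature.
task_a = [([1.0, 1.0, 0.0], 1.0)]
task_b = [([0.0, 1.0, 1.0], -1.0)]

w = train([0.0, 0.0, 0.0], task_a)            # learn A
error_a_before = mse(w, task_a)
w = train(w, task_b)                           # then learn B only (sequential training)
error_a_after = mse(w, task_a)

w_mixed = train([0.0, 0.0, 0.0], task_a + task_b)  # interleaved training on A and B
error_a_interleaved = mse(w_mixed, task_a)

print(f"{error_a_before:.4f} {error_a_after:.4f} {error_a_interleaved:.4f}")
# 0.0000 0.5625 0.0000
```

Both tasks are jointly learnable (for example with weights [1, 0, -1]). The loss of task A under sequential training therefore comes purely from the order of training, not from a lack of capacity.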