neural_network_0219

... • Neural networks A and B • Loosely coupled system C vs. strongly coupled system D • After obtaining A and B, the types of C: – C-NLC: C is a neural network that outputs a nonlinear combination of A and B – C-Retrain: the whole system ABC is further retrained – C-Avg: average A and B – C-OLC: get an optimal lin ...
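
The C-Avg and C-OLC options can be sketched for two scalar-output networks. The snippet is cut off before C-OLC is fully defined, so this minimal sketch assumes that "optimal" means least-squares weights fitted on held-out targets and that A and B are already trained. The function names and sample numbers are hypothetical.

```python
def c_avg(a_out, b_out):
    """C-Avg: elementwise average of the outputs of networks A and B."""
    return [(a + b) / 2 for a, b in zip(a_out, b_out)]


def c_olc_weights(a_out, b_out, targets):
    """C-OLC (assumed least-squares form): find w_a, w_b minimizing
    sum((w_a*a + w_b*b - t)^2) by solving the 2x2 normal equations."""
    saa = sum(a * a for a in a_out)
    sbb = sum(b * b for b in b_out)
    sab = sum(a * b for a, b in zip(a_out, b_out))
    sat = sum(a * t for a, t in zip(a_out, targets))
    sbt = sum(b * t for b, t in zip(b_out, targets))
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("outputs of A and B are collinear; weights not unique")
    # Cramer's rule on [[saa, sab], [sab, sbb]] @ [w_a, w_b] = [sat, sbt]
    w_a = (sat * sbb - sab * sbt) / det
    w_b = (saa * sbt - sab * sat) / det
    return w_a, w_b


# Hypothetical validation outputs of A and B, and the true targets.
a_val = [1.0, 2.0, 3.0, 4.0]
b_val = [1.0, 0.0, 1.0, 0.0]
t_val = [2.5, 1.0, 3.5, 2.0]
print(c_avg(a_val, b_val), c_olc_weights(a_val, b_val, t_val))
```

C-Avg needs no fitting at all, while C-OLC spends a little held-out data to learn how much to trust each network; neither touches the internal weights of A or B, which is what keeps C loosely coupled.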

Document

... Note there are also many excellent books available on specific topics such as neural networks, but these are not listed here (see webpage). ...

FACULTY

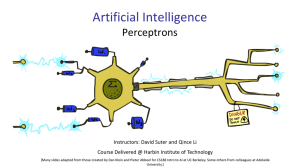

... Learning algorithms for OCR (perceptrons, Hopfield networks). Adaptive linear networks. Efficiency of learning methods. Speech recognition. Cancer diagnosis using SVM. Laboratory: Fuzzy expert systems as real-time controllers (navigation system for mobile robots). Medical diagnostic systems. Learnin ...

Computational intelligence meets the Netflix Prize IEEE

... computing activations is repeated through the layers of the network until the output layer is reached. Neural networks are not programmed; rather they are trained using one of several kinds of algorithms. The typical structure of a training algorithm starts at the output of the network, calculating ...
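
The layer-by-layer computation of activations can be sketched as follows. This is a minimal illustration, assuming fully connected layers and a logistic sigmoid activation; the layer sizes, weights, and function names are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation, squashing any input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(layers, x):
    """Propagate input x through a list of (weights, biases) layers.

    weights[j][i] is the connection from input i to unit j of the layer.
    Each layer's activations become the next layer's input, repeated
    until the output layer is reached.
    """
    activation = list(x)
    for weights, biases in layers:
        activation = [
            sigmoid(sum(w * a for w, a in zip(row, activation)) + b)
            for row, b in zip(weights, biases)
        ]
    return activation


# Hypothetical 2-3-1 network: 2 inputs, 3 hidden units, 1 output unit.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output = ([[1.0, -1.0, 0.5]], [0.2])
print(forward([hidden, output], [1.0, 0.5]))
```

Training then runs in the opposite direction, starting from the error at the output layer, which is why the same layered structure serves both passes.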

Neural Networks - 123SeminarsOnly.com

... output of a neuron in a neural network is between certain values (usually 0 and 1, or -1 and 1). In general, there are three types of activation functions, denoted by Φ(.) . First, there is the Threshold Function which takes on a value of 0 if the summed input is less than a certain threshold value ...
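
The snippet breaks off after the first type. Textbook treatments commonly list the other two as a piecewise-linear (saturating ramp) function and the sigmoid; the sketch below assumes that grouping, a default threshold of 0, and a ramp that is linear on (-0.5, 0.5) and clipped to [0, 1]. For outputs in (-1, 1), `math.tanh` plays the role of the sigmoid.

```python
import math


def threshold(v, theta=0.0):
    """Threshold (Heaviside) function: 0 if v < theta, otherwise 1."""
    return 1.0 if v >= theta else 0.0


def piecewise_linear(v):
    """Saturating ramp (assumed form): linear on (-0.5, 0.5), clipped to [0, 1]."""
    return min(1.0, max(0.0, v + 0.5))


def sigmoid(v, slope=1.0):
    """Logistic sigmoid with an adjustable slope; range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-slope * v))


def bipolar(v):
    """Antisymmetric alternative with range (-1, 1)."""
    return math.tanh(v)


for v in (-1.0, -0.25, 0.0, 0.25, 1.0):
    print(v, threshold(v), piecewise_linear(v), sigmoid(v), bipolar(v))
```

As the slope parameter grows, the sigmoid approaches the threshold function, which is one reason it is the usual differentiable stand-in for it.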

Slide 1

... Responses in excitatory and inhibitory networks of firing-rate neurons. A. Response of a purely excitatory recurrent network to a square step of input (hE). The blue curve is the response without excitatory feedback. Adding recurrent excitation increases the response but makes it rise and fall more ...
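
The effect of recurrent excitation on a square input step can be reproduced with a single rectified firing-rate unit. This sketch assumes the dynamics tau * dr/dt = -r + max(0, w*r + h(t)) with excitatory feedback 0 <= w < 1; the parameter values and function name are hypothetical. In this linear regime the steady-state response is h/(1 - w) and the effective time constant is tau/(1 - w), so feedback raises the response and also slows its rise and decay.

```python
def simulate(w, h_on=1.0, tau=10.0, dt=0.1, t_on=50.0, t_off=250.0, t_end=450.0):
    """Euler integration of tau * dr/dt = -r + max(0, w*r + h(t)),
    where h(t) is a square step of height h_on between t_on and t_off.
    Returns the rate r after each time step."""
    steps = int(round(t_end / dt))
    r = 0.0
    trace = []
    for k in range(steps):
        t = k * dt
        h = h_on if t_on <= t < t_off else 0.0
        drive = max(0.0, w * r + h)   # rectified total input
        r += dt / tau * (-r + drive)
        trace.append(r)
    return trace


no_feedback = simulate(w=0.0)     # steady state h_on = 1.0
with_feedback = simulate(w=0.5)   # steady state h_on / (1 - 0.5) = 2.0
print(max(no_feedback), max(with_feedback))
```

Pushing w toward 1 makes the gain 1/(1 - w) grow without bound, which is why purely excitatory recurrent networks are typically paired with inhibition in such models.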

Artificial neural networks – how to open the black boxes?

... The black-box character, which at first was the big advantage in the use of ANNs (the supervisor need not think about the model setup), becomes the crucial disadvantage. Finally, up to now there seems to be no way to improve the networks by using simple rules. Accordingly it is necessary to unde ...

Online Language Learning to Perform and Describe Actions for

... the interaction with the user teaching the robot by describing spatial relations or actions, creating pairs. It could also be edited by hand to avoid speech recognition errors. These interactions between the different components of the system are shown in Figure 1. The neural ...

network - Ohio University

... There's a continuum between rules and similarity: rules are useful for a few variables; for many variables, similarity. $|W-A|^2 = |W|^2 + |A|^2 - 2\,W\cdot A = 2(1 - W\cdot A)$ for normalized $W$ and $A$, so a strong excitation means a short distance (large similarity). ...
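
The distance identity for unit-length vectors is easy to check numerically. In this sketch the two vectors are hypothetical; any nonzero vectors work once they are normalized.

```python
import math


def normalize(v):
    """Scale v to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sq_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


w = normalize([3.0, 1.0, 2.0])   # hypothetical weight vector
a = normalize([1.0, 0.0, 2.0])   # hypothetical input vector

# For unit vectors |W|^2 = |A|^2 = 1, so |W - A|^2 reduces to 2 * (1 - W.A).
print(sq_distance(w, a), 2 * (1 - dot(w, a)))
```

Because the two quantities move in lockstep, a unit that computes the excitation W.A is implicitly ranking inputs by their distance to its weight vector.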

Ken's PowerPoint Presentation

... • Process the information as if you are preparing it to teach it to another individual. (“To teach is to learn twice.”) • Review old information before reading new information (build bridges from what is known to what is new) • Walk after reading or learning (while walking, talk about the newly acqu ...

Artificial Neural Networks (ANN), Multi Layered Feed Forward (MLFF

... descent algorithm which minimizes the relative entropy [19]. 2.2. GMDH Neural Network. By means of the GMDH algorithm, a model can be represented as a set of neurons in which different pairs of neurons in each layer are connected through a quadratic polynomial, thus producing new neurons in the next layer. Su ...
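
One GMDH layer can be sketched as fitting a quadratic polynomial to every pair of input columns. This minimal version assumes the commonly used six-term polynomial y = a0 + a1*xi + a2*xj + a3*xi*xj + a4*xi^2 + a5*xj^2, ordinary least squares on the training data, and no pruning: a full GMDH implementation also keeps only the best neurons according to an external criterion such as validation error. All function names are hypothetical.

```python
from itertools import combinations


def features(xi, xj):
    """Terms of the assumed quadratic polynomial in two inputs."""
    return [1.0, xi, xj, xi * xj, xi * xi, xj * xj]


def solve(m, rhs):
    """Solve m @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(m, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def fit_neuron(xi_col, xj_col, y):
    """Least-squares coefficients of one neuron via the normal equations."""
    rows = [features(a, b) for a, b in zip(xi_col, xj_col)]
    k = len(rows[0])
    ata = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    aty = [sum(r[p] * t for r, t in zip(rows, y)) for p in range(k)]
    return solve(ata, aty)


def next_layer(columns, y):
    """Fit one candidate neuron per pair of input columns; each neuron's
    output becomes a new input column for the following layer."""
    layer = []
    for i, j in combinations(range(len(columns)), 2):
        coef = fit_neuron(columns[i], columns[j], y)
        out = [sum(c * f for c, f in zip(coef, features(a, b)))
               for a, b in zip(columns[i], columns[j])]
        layer.append(((i, j), coef, out))
    return layer
```

Each neuron sees only two inputs, so every fit is a small, well-posed regression; model complexity grows by stacking layers rather than by fitting one large polynomial at once.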

PowerPoint

... The study of computer systems that attempt to model and apply the intelligence of the human mind. For example, writing a program to pick out objects in a picture ...

Artificial Neural Networks

... Defense. • Automated target recognition, localization, and tracking in the presence of ……………. is an important signal processing problem • Algorithms have been developed for …………………………… such as those that occur during active jamming, non-cooperative maneuvering & complex battlefield scenarios of the ...

An Artificial Intelligence Neural Network based Crop Simulation

... algorithm and a corresponding algorithm of the model. Chung et al. [2]: This article presents a new back-propagation neural network (BPN) training algorithm that uses ant colony optimization (ACO) to find the optimal connection weights of the BPN. The concentration of pheromone laid by the arti ...

Transfer Learning of Latin and Greek Characters in

... each show two examples of separate RBMs that were trained to recreate their respective character sets. It is very apparent from Figure 4.1 that these are the weights used to classify the Greek letter. Each separate square in Figures 4.1 and 4.2 corresponds to a weight matrix of the connections of th ...

5 levels of Neural Theory of Language

... $$W_{ij} = \frac{\text{number of times both units } i \text{ and } j \text{ were firing}}{\text{number of times unit } j \text{ was firing}}$$ ...
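
The weight is an empirical estimate of P(unit i fires | unit j fires), so it can be computed by counting over recorded activity. This sketch assumes activity is stored as binary vectors, one per time step, and leaves W[i][j] at 0.0 when unit j never fires (an assumed convention, since the ratio is undefined there). The function name and data are hypothetical.

```python
def cooccurrence_weights(records):
    """W[i][j] = (# records where units i and j both fire) / (# records where j fires).

    records: list of equal-length 0/1 activity vectors, one per time step.
    """
    n = len(records[0])
    both = [[0] * n for _ in range(n)]
    fired = [0] * n
    for r in records:
        for j in range(n):
            if r[j]:
                fired[j] += 1
                for i in range(n):
                    if r[i]:
                        both[i][j] += 1
    return [[both[i][j] / fired[j] if fired[j] else 0.0 for j in range(n)]
            for i in range(n)]


# Hypothetical activity of three units over four time steps.
activity = [[1, 1, 0], [1, 0, 0], [0, 1, 1], [1, 1, 0]]
for row in cooccurrence_weights(activity):
    print(row)
```

Note that the matrix is not symmetric: here unit 2 fires only together with unit 1, so W[1][2] is 1.0, while W[2][1] is only 1/3.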

Learning by localized plastic adaptation in recurrent neural networks

... adaptation is proportional to the strength of the synapse itself and to the number of times the synapse was activated. There is also a maximum synaptic strength (ωmax = 2), which cannot be exceeded. If the activity is so weak that it did not even reach the output neuron, we consider the network as ...
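
The update rule described here can be written as a multiplicative, activity-dependent change capped at ωmax = 2. The snippet does not give the learning rate or say when the change is positive or negative, so this sketch assumes Δw = eta * w * n, with a hypothetical rate eta whose sign selects strengthening or weakening, and additionally clips weights at 0 (also an assumption).

```python
W_MAX = 2.0  # maximum synaptic strength stated in the text


def adapt(weights, activation_counts, eta):
    """Assumed form of the rule: dw = eta * w * n, where n is how often the
    synapse was activated. eta > 0 strengthens, eta < 0 weakens; results
    are clipped to [0, W_MAX] so the maximum strength is never exceeded."""
    return [min(W_MAX, max(0.0, w + eta * w * n))
            for w, n in zip(weights, activation_counts)]


# Hypothetical synapses: strengths and how often each was activated.
print(adapt([1.0, 0.5, 1.5], [2, 0, 3], eta=0.1))
```

Because the change scales with w itself, strong, frequently used synapses grow fastest, and the cap at W_MAX is what keeps this positive feedback bounded.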

1 - AGH

... What are the main advantages of such a (layered) approach? It's very simple to create a model and simulate its behavior using various computer programs. That's why researchers adopted such a structure and have used it ever since in every neural net. Let's say it again: it's very inaccurate if considered as a ...

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990). It is a radical manifestation of the 'sensitivity-stability' dilemma, or the 'stability-plasticity' dilemma. Specifically, these problems refer to the challenge of making an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e. infer general principles, from new inputs. On the other hand, connectionist networks like the standard backpropagation network are very sensitive to new information and can generalize on new inputs. Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, these backpropagation networks are susceptible to catastrophic interference. This is considered an issue when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from these backpropagation models in order to enhance their plausibility as models of human memory.
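
The mechanism can be seen even in the smallest connectionist learner. This sketch uses a single linear unit trained with the delta rule (the one-layer special case of backpropagation) rather than a full multilayer network; the two "tasks" are hypothetical associations that share one input line. Training on task B alone, after task A, overwrites the shared weight and damages the earlier association.

```python
def train(w, patterns, lr=0.1, epochs=200):
    """Delta-rule (LMS) training of a single linear unit on (x, target) pairs."""
    w = list(w)
    for _ in range(epochs):
        for x, t in patterns:
            y = sum(wi * xi for wi, xi in zip(w, x))
            w = [wi + lr * (t - y) * xi for wi, xi in zip(w, x)]
    return w


def error(w, patterns):
    """Sum of squared errors of the unit on the given patterns."""
    return sum((t - sum(wi * xi for wi, xi in zip(w, x))) ** 2
               for x, t in patterns)


task_a = [([1.0, 1.0, 0.0], 1.0)]    # hypothetical "old" association
task_b = [([1.0, 0.0, 1.0], -1.0)]   # hypothetical "new" association, shares input 0

w = train([0.0, 0.0, 0.0], task_a)
err_a_before = error(w, task_a)
w = train(w, task_b)                  # sequential training: task A is never revisited
err_a_after = error(w, task_a)
print(err_a_before, err_a_after)
```

Interleaving the two tasks in one training set instead lets the same unit fit both, because the two input vectors are linearly independent; the damage comes from sequential training on shared weights, not from a lack of capacity.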