Mixed Model Analysis of Data: Transition from GLM to MIXED

... mixed model applications in the SAS® System. Other procedures, including NESTED and VARCOMP, are used for specific applications. Since its introduction in 1976, GLM has been enhanced with several mixed model facilities such as the RANDOM and REPEATED statements. However, there are aspects of certain ...

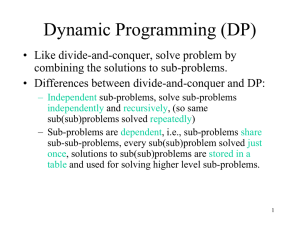

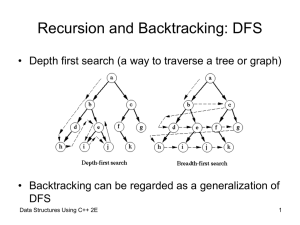

Dynamic Programming

... DP is better by a constant factor because it avoids the recursive overhead of the memoized algorithm. • If some subproblems may not need to be solved, the memoized algorithm may be more efficient, since it solves only those subproblems that are actually required. ...
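
To illustrate the trade-off, here is a small sketch that solves the same recurrence top-down (memoized) and bottom-up (tabulated) and counts how many subproblems each one evaluates. The recurrence `f(m) = max(m, f(m // 2) + f(m // 3) + f(m // 4))` and the function names are hypothetical examples chosen because only a sparse set of subproblems is reachable from `n`; they do not come from the source.

```python
from functools import lru_cache


def top_down(n):
    """Memoized recursion: evaluates only subproblems reachable from n."""
    evaluated = set()

    @lru_cache(maxsize=None)
    def f(m):
        evaluated.add(m)  # runs once per distinct m, on a cache miss
        if m == 0:
            return 0
        return max(m, f(m // 2) + f(m // 3) + f(m // 4))

    return f(n), len(evaluated)


def bottom_up(n):
    """Iterative DP: fills every entry 0..n, with no recursion overhead."""
    table = [0] * (n + 1)
    for m in range(1, n + 1):
        table[m] = max(m, table[m // 2] + table[m // 3] + table[m // 4])
    return table[n], n + 1  # value, number of subproblems solved
```

For `n = 12` both return the value 13, but the memoized version touches only 7 subproblems (`0, 1, 2, 3, 4, 6, 12`), while the table fills all 13. For small dense recurrences the loop's lower constant factor usually wins instead.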

3. supervised density estimation

... respect to a variable of interest is a challenging ongoing topic. The variable of interest can be categorical or continuous. There are many possible algorithms to compute hot and cool spots; one such algorithm called SCDE (Supervised Clustering Using Density Estimation) will be introduced in the r ...

An Efficient Fuzzy Clustering-Based Approach for Intrusion Detection

... manipulated with a given feature selection type, by q-Fold Cross Validation [9]. The resulting classifier is the one exhibiting maximum performance. In the training phase, the algorithm first normalizes continuous features (e.g., by a variance-based spread measure) to avoid the dispersion in differe ...
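
The two preprocessing steps mentioned above, per-feature normalization of continuous values and q-fold splitting of the data, can be sketched as follows. The snippet does not say which variance-based spread measure is used, so this assumes a z-score with the population standard deviation. `zscore_columns` and `q_fold_indices` are hypothetical helper names, not the paper's code.

```python
import statistics


def zscore_columns(rows):
    """Scale each continuous feature to mean 0 and (population) std dev 1."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    # Assumption: a constant column keeps a divisor of 1 instead of 0.
    stds = [statistics.pstdev(c) or 1.0 for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def q_fold_indices(n, q):
    """Yield (train, test) index lists for q-fold cross-validation."""
    folds = [list(range(i, n, q)) for i in range(q)]  # round-robin assignment
    for i, test in enumerate(folds):
        train = [j for k, fold in enumerate(folds) if k != i for j in fold]
        yield train, test
```

In a careful evaluation, the normalization statistics would be computed on each training fold only and then applied to the matching test fold, so that no information leaks from test data into training.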

... manipulated with a given feature selection type, by q-Fold Cross Validation [9]. The resulting classifier is the one exhibiting maximum performance. In the training phase, the algorithm first normalizes continuous features (e.g., by a variance-based spread measure) to avoid the dispersion in differe ...

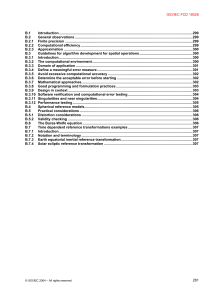

Annex B - SEDRIS

... operation. Many spatial operation formulations have closed-form solutions in one direction but do not have closed-form solutions for the inverse. This situation leads to a requirement to solve multivariate non-linear equations where no closed-form solution is readily available. Traditionally, either trun ...
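
A common way to invert such an operation numerically is Newton's method. It repeatedly linearizes the forward map with its Jacobian and solves for a correction until the residual is negligible. The sketch below uses a hypothetical two-variable forward map chosen only because it has no closed-form inverse. It does not reproduce any actual SEDRIS spatial operation, and Newton iteration is shown as one standard choice, not necessarily the method the annex goes on to specify.

```python
import math


def forward(x, y):
    """Hypothetical forward map with a closed form but no closed-form inverse."""
    return x + 0.5 * math.sin(y), y + 0.5 * math.sin(x)


def jacobian(x, y):
    """Partial derivatives of forward(): ((du/dx, du/dy), (dv/dx, dv/dy))."""
    return (1.0, 0.5 * math.cos(y)), (0.5 * math.cos(x), 1.0)


def inverse(u, v, tol=1e-12, max_iter=50):
    """Solve forward(x, y) == (u, v) for (x, y) by Newton's method."""
    x, y = u, v  # initial guess: the map is a small perturbation of identity
    for _ in range(max_iter):
        fu, fv = forward(x, y)
        ru, rv = fu - u, fv - v  # residual
        if math.hypot(ru, rv) < tol:
            return x, y
        (a, b), (c, d) = jacobian(x, y)
        det = a * d - b * c  # >= 0.75 for this map, so never zero
        # Newton step: (x, y) -= J^{-1} * residual, using the 2x2 inverse.
        x -= (d * ru - b * rv) / det
        y -= (-c * ru + a * rv) / det
    raise RuntimeError("Newton iteration did not converge")
```

For example, `inverse(*forward(1.2, -0.7))` recovers approximately `(1.2, -0.7)`. In general, Newton's method needs a reasonable starting point and a non-singular Jacobian near the solution.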

An Unbiased Distance-based Outlier Detection Approach for High

... The dissimilarity of a point p with respect to its k nearest neighbors is measured by its cumulative neighborhood distance. This is defined as the total distance from p to its k nearest neighbors in DS. – To ensure that the non-monotonicity property is not violated, the outlier score function is r ...
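
The cumulative neighborhood distance defined above is the sum of the distances from p to its k nearest neighbors in the dataset DS. The sketch below computes it by brute force. It assumes Euclidean distance, since the snippet does not name a metric. `cumulative_neighborhood_distance` is a hypothetical name, and the paper's full outlier score (truncated in the excerpt) is not reproduced.

```python
import math


def cumulative_neighborhood_distance(dataset, i, k):
    """Sum of Euclidean distances from dataset[i] to its k nearest other points."""
    p = dataset[i]
    dists = sorted(math.dist(p, q) for j, q in enumerate(dataset) if j != i)
    if len(dists) < k:
        raise ValueError("dataset has fewer than k points besides p")
    return sum(dists[:k])
```

With `data = [(0, 0), (1, 0), (0, 1), (5, 5)]` and `k = 2`, the point `(0, 0)` scores 2.0, while the isolated point `(5, 5)` scores about 12.81 (twice the square root of 41). Brute force costs O(n) per point. Large datasets would normally use a spatial index instead.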

Expectation–maximization algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method for finding maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step.
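
A one-dimensional Gaussian mixture is a standard illustration of this alternation. The latent variable is each point's component label. The E step computes each label's posterior probability (the "responsibility") under the current parameters, and the M step has closed-form updates for weights, means, and variances. This is a minimal sketch: the mixture model, the fixed iteration count, and the variance floor are illustrative assumptions, not part of the general definition above.

```python
import math


def normal_pdf(x, mu, var):
    """Density of N(mu, var) at x."""
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_gaussian_mixture(xs, mu, var, w, iters=200, var_floor=1e-6):
    """EM for a 1-D Gaussian mixture; mu, var, w hold one entry per component."""
    mu, var, w = list(mu), list(var), list(w)
    K, n = len(mu), len(xs)
    for _ in range(iters):
        # E step: r[i][k] = P(z_i = k | x_i) under the current parameters.
        resp = []
        for x in xs:
            p = [w[k] * normal_pdf(x, mu[k], var[k]) for k in range(K)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M step: closed-form maximizers of the expected complete-data log-likelihood.
        for k in range(K):
            nk = sum(r[k] for r in resp)
            w[k] = nk / n
            mu[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            # Assumed floor keeps a component from collapsing onto a single point.
            var[k] = max(var_floor, sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, xs)) / nk)
    return mu, var, w
```

Started from means `[-1.0, 1.0]` on two well-separated clusters centered at -2 and 3, the estimates move to those centers. A production version would instead stop when the log-likelihood stops increasing, and would work in log space to avoid underflow.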