SEM

... your model fits the data. • If it does not fit, there are few clues to guide you in rearranging the variables so that the model fits the data better. • Note: Even if the model does fit, that does not rule out the possibility that a different arrangement of variables would fit even better. • Therefore, one must rea ...


Change-Point Detection in Time-Series Data by Direct Density

... A common limitation of the above-mentioned approaches is that they rely on pre-specified parametric models such as probability density models, autoregressive models, and state-space models. Thus, these methods tend to be less flexible in real-world change-point detection scenarios. The primal purpos ...


Section 9-3

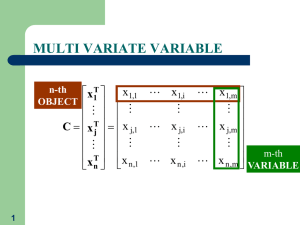

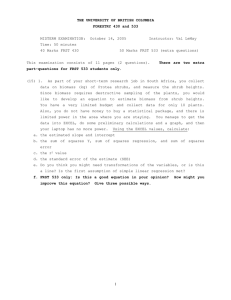

... Multiple Regression Equation: A linear relationship between a dependent variable y and two or more independent variables (x1, x2, x3, ..., xk) ...

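The multiple regression equation has the form ŷ = b0 + b1x1 + b2x2 + ... + bkxk. Below is a minimal sketch, assuming ordinary least squares (the usual estimator, though the excerpt does not name one), that solves the normal equations (XᵀX)b = Xᵀy by Gauss-Jordan elimination in plain Python. The names `fit_multiple_regression` and `predict` are hypothetical.

```python
from typing import List, Sequence


def fit_multiple_regression(xs: Sequence[Sequence[float]], ys: Sequence[float]) -> List[float]:
    """Return [b0, b1, ..., bk] minimizing squared error for y ~ b0 + b1*x1 + ... + bk*xk."""
    # Design matrix with a leading 1 in each row for the intercept b0.
    X = [[1.0, *row] for row in xs]
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y] for the normal equations.
    A = [
        [sum(r[i] * r[j] for r in X) for j in range(p)] + [sum(r[i] * y for r, y in zip(X, ys))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("predictors are collinear; X^T X is singular")
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    # Only the diagonal remains in the coefficient part, so each b_i is a single division.
    return [A[i][p] / A[i][i] for i in range(p)]


def predict(b: Sequence[float], x: Sequence[float]) -> float:
    """Evaluate b0 + b1*x1 + ... + bk*xk for one observation x."""
    return b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))
```

For example, fitting the points (0, 0), (1, 0), (0, 1), (1, 1), (2, 1) with responses generated by y = 1 + 2x1 + 3x2 recovers coefficients close to [1, 2, 3]. A real analysis would normally use a numerical library's least-squares solver (e.g. QR-based) rather than forming XᵀX explicitly, because doing so squares the condition number.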

Generalized Linear Models (1/29/13)

... When processing discrete data, two commonly used probability distributions are the binomial distribution and the Poisson distribution. The binomial distribution is used when an event has only two possible outcomes (success or failure); the Poisson distribution describes the count of the number of rand ...

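Both distributions can be evaluated directly. The sketch below, with hypothetical helper names, computes the binomial probability of k successes in n independent success/failure trials and the Poisson probability of observing a count k. As an assumption beyond the excerpt, it also shows the canonical GLM links usually paired with them: the logit link for binomial data and the log link for Poisson counts, each inverted here to map a linear predictor eta to the mean.

```python
import math


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p): k successes in n independent trials."""
    if not 0 <= k <= n:
        return 0.0
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poisson(lam): probability of a count of exactly k events."""
    if k < 0:
        return 0.0
    return math.exp(-lam) * lam**k / math.factorial(k)


def inverse_logit(eta: float) -> float:
    """Binomial GLM with logit link: success probability p = 1 / (1 + exp(-eta))."""
    return 1.0 / (1.0 + math.exp(-eta))


def poisson_mean(eta: float) -> float:
    """Poisson GLM with log link: expected count lambda = exp(eta)."""
    return math.exp(eta)
```

For instance, `binomial_pmf(1, 2, 0.5)` is 0.5 (one success in two fair trials), and the Poisson probabilities for a fixed rate sum to 1 over all counts.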

Dimension Reduction of Chemical Process Simulation Data

... set I ⊆ {1, 2, ..., n} and must contain a possibly empty subset M ⊆ I. For example, the x_j, j ∈ I, may represent values that are easily measured in the laboratory and thus support a simplified partial verification of the full model via the reduced model. The subset M contains the indices of vari ...


G070840-00 - DCC

... problem as an optimization problem in an open search space of clustering models. However, this can lead to over-fitting or, even worse, to non-convergence of the algorithm. The new algorithms address these problems. S-means looks at similarity statistics of burst triggers and builds up clusters ...


SECURE SYSTEM FOR DATA MINING USING RANDOM DECISION

... words are extracted by comparative recursion over combinations of the words. Step 7: After the important words have been fetched from all the documents, the system performs association rule mining using the Apriori algorithm, following the steps stated below. Let T be the training data with n attributes A1, A2, …, A ...

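The excerpt's own step list is truncated, so the following is only a generic sketch of Apriori, not the paper's procedure. It assumes that each document's important words form one transaction (a set of items). It finds frequent itemsets level by level, prunes any candidate that has an infrequent subset, and then derives rules whose confidence meets a threshold. `apriori`, `association_rules`, `min_support`, and `min_confidence` are hypothetical names.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

Itemset = FrozenSet[str]


def apriori(transactions: List[Set[str]], min_support: float) -> Dict[Itemset, float]:
    """Return every itemset whose support (fraction of transactions containing it) >= min_support."""
    n = len(transactions)

    def support(itemset: Itemset) -> float:
        return sum(itemset <= t for t in transactions) / n

    frequent: Dict[Itemset, float] = {}
    # Level 1: candidate single items.
    candidates = {frozenset([item]) for t in transactions for item in t}
    k = 1
    while candidates:
        # Count support and keep only the frequent k-itemsets.
        current: Set[Itemset] = set()
        for c in candidates:
            s = support(c)
            if s >= min_support:
                current.add(c)
                frequent[c] = s
        k += 1
        # Join step: unions of two frequent (k-1)-itemsets that have exactly k items.
        joined = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step (Apriori property): every (k-1)-subset must itself be frequent.
        candidates = {
            c for c in joined if all(frozenset(s) in current for s in combinations(c, k - 1))
        }
    return frequent


def association_rules(
    frequent: Dict[Itemset, float], min_confidence: float
) -> List[Tuple[Itemset, Itemset, float]]:
    """Return (antecedent, consequent, confidence) for rules meeting min_confidence."""
    rules = []
    for itemset, supp in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), r):
                a = frozenset(antecedent)
                # Subsets of a frequent itemset are frequent, so frequent[a] exists.
                confidence = supp / frequent[a]
                if confidence >= min_confidence:
                    rules.append((a, itemset - a, confidence))
    return rules
```

With the transactions {bread, milk}, {bread, butter}, {bread, milk, butter}, {milk} and min_support = 0.5, the candidate {bread, milk, butter} is pruned without ever being counted, because its subset {milk, butter} is infrequent.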

AY4201347349

... small portion of them could be cycles. The algorithm in [9], DFS-XOR, is based on the fact that small cycles can be joined together to form a bigger cycle. It is more time-efficient for real-life problems of counting cycles in a graph because its complexity does not depend on the factor of ...

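The excerpt does not give the algorithm of [9], so the following only illustrates the underlying idea, assuming an undirected simple graph stored as an adjacency list: each edge that is not in a DFS spanning tree closes one fundamental cycle, and cycles are combined by XOR (symmetric difference) of their edge sets. When two cycles share a single path, their XOR is one bigger cycle; in general it is an edge-disjoint union of cycles. The function names are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set

Edge = FrozenSet[int]


def fundamental_cycles(adj: Dict[int, List[int]]) -> List[Set[Edge]]:
    """One cycle (as a set of undirected edges) per non-tree edge of a DFS spanning forest."""
    parent: Dict[int, int] = {}
    depth: Dict[int, int] = {}
    tree_edges: Set[Edge] = set()
    for root in adj:
        if root in depth:
            continue
        depth[root] = 0
        # Iterative DFS: each stack entry keeps its own neighbor iterator.
        stack = [(root, iter(adj[root]))]
        while stack:
            u, neighbors = stack[-1]
            for v in neighbors:
                if v not in depth:
                    depth[v], parent[v] = depth[u] + 1, u
                    tree_edges.add(frozenset((u, v)))
                    stack.append((v, iter(adj[v])))
                    break
            else:
                stack.pop()

    def tree_path(u: int, v: int) -> Set[Edge]:
        # Repeatedly step the deeper endpoint toward the root until both meet.
        edges: Set[Edge] = set()
        while u != v:
            if depth[u] < depth[v]:
                u, v = v, u
            edges.add(frozenset((u, parent[u])))
            u = parent[u]
        return edges

    cycles: List[Set[Edge]] = []
    seen: Set[Edge] = set()
    for u in adj:
        for v in adj[u]:
            e = frozenset((u, v))
            if e not in tree_edges and e not in seen:
                seen.add(e)
                cycles.append(tree_path(u, v) | {e})
    return cycles


def xor_cycles(c1: Set[Edge], c2: Set[Edge]) -> Set[Edge]:
    """Join two cycles: shared edges cancel, the remaining edges are kept."""
    return c1 ^ c2
```

For a square 0-1-2-3 with the diagonal 0-2, XOR of the triangles 0-1-2 and 0-2-3 cancels the shared diagonal and yields the four-edge square.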

this PDF file

... analysis methods based on topic models achieved more accurate results than traditional methods. However, a large number of experiments and practical applications have shown that training and processing topic models is not applicable to large-scale data. In this kind of model, it is assumed that the d ...


IOSR Journal of Electronics and Communication Engineering (IOSR-JECE)

... more customers to buy them together). The main problem of association rule induction is that there are so many possible rules. For example, a supermarket's product range may consist of several thousand different products, which gives billions or even trillions of possible association rules. I ...

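To make the growth concrete: for d items, the number of rules X → Y with X and Y non-empty and disjoint is 3^d - 2^(d+1) + 1 (each itemset of size k ≥ 2 yields 2^k - 2 rules, one per non-empty proper antecedent). The sketch below, with hypothetical function names, evaluates the closed form and checks it against a direct count.

```python
from itertools import combinations


def count_rules_formula(d: int) -> int:
    """Rules X -> Y over d items, X and Y non-empty and disjoint: 3^d - 2^(d+1) + 1."""
    return 3**d - 2 ** (d + 1) + 1


def count_rules_brute_force(d: int) -> int:
    """Count the same rules by visiting every itemset of size >= 2."""
    total = 0
    for size in range(2, d + 1):
        for _itemset in combinations(range(d), size):
            # Any non-empty proper subset of the itemset can be the antecedent X;
            # the consequent Y is the rest of the itemset.
            total += 2**size - 2
    return total
```

With only 20 items the formula already gives 3,484,687,250 rules, which is why rule miners restrict the search with support and confidence thresholds instead of enumerating every rule.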

Expectation–maximization algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method for finding maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step.
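As a minimal illustration, assuming a two-component one-dimensional Gaussian mixture (a standard textbook example, not taken from the text above), the E step computes each point's responsibilities, i.e. the posterior probability of each latent component under the current parameters, and the M step re-estimates the weights, means, and variances in closed form. The function name `em_gaussian_mixture`, the fixed iteration count, and the small variance floor are assumptions of this sketch.

```python
import math
from typing import List, Sequence, Tuple


def normal_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gaussian_mixture(
    data: Sequence[float],
    mu: Sequence[float],
    var: Sequence[float] = (1.0, 1.0),
    weights: Sequence[float] = (0.5, 0.5),
    n_iter: int = 50,
) -> Tuple[List[float], List[float], List[float], List[float]]:
    """Fit a 2-component 1-D Gaussian mixture; returns (means, variances, weights, log-likelihoods)."""
    mu, var, w = list(mu), list(var), list(weights)
    log_likelihoods: List[float] = []
    for _ in range(n_iter):
        # E step: responsibilities resp[i][k] = P(z_i = k | x_i, current parameters).
        resp = []
        ll = 0.0
        for x in data:
            p = [w[k] * normal_pdf(x, mu[k], var[k]) for k in range(2)]
            total = sum(p)
            ll += math.log(total)  # log-likelihood under the parameters used in this E step
            resp.append([pk / total for pk in p])
        log_likelihoods.append(ll)
        # M step: closed-form maximizers of the expected complete-data log-likelihood.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            w[k] = nk / len(data)
            mu[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            # The floor keeps a component from collapsing onto a single point.
            var[k] = max(sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, data)) / nk, 1e-6)
    return mu, var, w, log_likelihoods
```

The returned log-likelihoods should be non-decreasing from one iteration to the next (as long as the variance floor is never hit), which is the defining property of EM; a practical implementation would stop once the improvement falls below a tolerance rather than running a fixed number of iterations.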