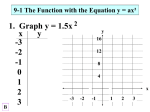

On Using Graphics Hardware for Scientific Computing

Stan Tomov
June 23, 2006

Slide 1 / 16

Outline

• Motivation
• Literature review
• The graphics pipeline
• Programmable GPUs
• Some application examples
• Performance results
• Conclusion

Slide 2 / 16

Motivation

| Problem size | OpenGL (GPU), frames per second | Mesa (CPU), frames per second |
|---|---|---|
| 11,540 | 189 | 8.01 |
| 47,636 | 52 | 1.71 |
| 193,556 | 13 | 0.44 |
| 780,308 | 3 | 0.12 |

Table 1. GPU vs CPU in rendering polygons. The GPU (Quadro2 Pro) is approximately 30 times faster than the CPU (Pentium III, 1 GHz) in rendering polygonal data of various sizes.

Slide 3 / 16

Motivation

• High flops count (currently 200 GFlops, single precision) (picture: from the GPU Gems 2 book)
• Competitive price/performance (less than 1 cent per MFlop)
• Performance doubling every 6 months
• Continuously increasing functionality and programmability
  – Realistic games require more complicated physics

Slide 4 / 16

Literature review

Using graphics hardware for non-graphics applications (just a few examples):

• Cellular automata
• Reaction-diffusion simulation (Mark Harris, University of North Carolina)
• Matrix multiply (E. Larsen and D. McAllister, University of North Carolina)
• Lattice Boltzmann (Wei Li, Xiaoming Wei, and Arie Kaufman, Stony Brook)
• CG and multigrid (J. Bolz et al., Caltech, and N. Goodnight et al., University of Virginia)
• Convolution (University of Stuttgart)
• BLAS 1, 2; FFT; certain eigensolvers; etc.
• See also GPGPU’s homepage: http://www.gpgpu.org/

Slide 5 / 16

Literature review

Typical performance results reported (by the middle of 2003):

• Significant speedups of GPU vs CPU are reported when the GPU performs low-precision computations (30 to 60 times, depending on the configuration)
  – integers (8- or 12-bit arithmetic), 16-bit floating point
• Vendor advertisements about very high performance assume low-precision arithmetic
• NCSA, University of Illinois, assembled a $50,000 supercomputer out of 70 PlayStation 2 consoles, which could theoretically deliver 0.5 trillion operations/second
• The GPU’s 32-bit flops performance is comparable to the CPU’s (possibly 2-4 times faster, depending on application and configuration)

Slide 6 / 16

The graphics pipeline

• GeForce 256 (August 1999)
  – allowed a certain degree of programmability
  – before: fixed-function pipeline
• GeForce 3 (February 2001)
  – considered the first fully programmable GPU
• GeForce 4
  – partial 16-bit floating point arithmetic
• NV30
  – 32-bit floating point
• Cg
  – high-level programming language

Slide 7 / 16

The graphics pipeline

• GPUs: on their way to becoming programmable stream processors (picture: from the GPU Gems 2 book)
• Stream formulation of the graphics pipeline: all data viewed as streams and computation as kernels
• Streaming
  – Efficient computation (enables efficient parallelism; deep pipeline)
  – Efficient communication (efficient off-chip communication; intermediate results kept on chip; deep pipelining allows a high degree of latency tolerance)

Slide 8 / 16

Programmable GPUs (in particular NV30)

• GPU programming model: streaming
  – Naturally addresses parallelism and communication
  – Easy when the problem maps well
• Supports floating point operations
• Vertex program
  – Replaces fixed-function pipeline for vertices
  – Manipulates single vertex data
  – Executes for every vertex
• Fragment program
  – Similar to vertex program but for pixels
• Programming in Cg:
  – High-level language; looks like C; portable; compiles Cg programs to assembly code

Slide 9 / 16

Block Diagram of GeForce FX

• AGP 8x graphics bus bandwidth: 2.1 GB/s
• Local memory bandwidth: 16 GB/s
• Chip officially clocked at 500 MHz
• Vertex processor:
  – executes vertex shaders or emulates fixed transformations and lighting (T&L)
• Pixel processor:
  – executes pixel shaders or emulates fixed shaders
  – 2 int & 1 float ops or 2 texture accesses per clock cycle
• Texture & color interpolators
  – interpolate texture coordinates and color values
• Performance (on processing 4D vectors):
  – Vertex ops/sec: 1.5 Gops
  – Pixel ops/sec: 8 Gops (int), or 4 Gops (float)

Hardware at Digit-Life.com, NVIDIA GeForce FX, or "Cinema show started", November 18, 2002.

Slide 10 / 16

Block Diagram of GeForce FX

• 3 vertex and 8 pixel processors
• Latest nVidia card: dual-GPU GeForce 7950 GX2 with 32 vertex and 96 pixel processors
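
The figures on the GeForce FX block diagram can be turned into rough throughput bounds. The sketch below is not from the slides: it assumes that each float pixel op on a 4D vector counts as four scalar flops, and that a BLAS 1-style saxpy moves 12 bytes per element (two 4-byte reads, one 4-byte write) for 2 flops. The function names are hypothetical. Under those assumptions the float peak comes out at 16 GFlops, which would be consistent with the performance slide's statement that 7 GFlops is 44% of the theoretical maximum.

```python
# Figures quoted on the GeForce FX block diagram slide.
PIXEL_FLOAT_VECTOR_OPS = 4e9    # pixel ops/sec (float), each on a 4D vector
VECTOR_WIDTH = 4                # components per 4D vector
LOCAL_MEMORY_BANDWIDTH = 16e9   # bytes/sec


def peak_flops(vector_ops=PIXEL_FLOAT_VECTOR_OPS, width=VECTOR_WIDTH):
    """Peak scalar flops/sec, ASSUMING one vector op equals `width` flops."""
    return vector_ops * width


def bandwidth_bound_flops(bytes_per_element, flops_per_element,
                          bandwidth=LOCAL_MEMORY_BANDWIDTH):
    """Upper bound on flops/sec for a kernel limited by memory traffic."""
    elements_per_sec = bandwidth / bytes_per_element
    return elements_per_sec * flops_per_element


peak = peak_flops()

# ASSUMPTION: saxpy (y = a*x + y) reads x and y and writes y in 32-bit
# floats, so 12 bytes of traffic and 2 flops per element.
saxpy_bound = bandwidth_bound_flops(bytes_per_element=12, flops_per_element=2)

# Fraction of the assumed peak reached by a 7 GFlops kernel.
ising_fraction = 7e9 / peak

print(f"peak: {peak / 1e9:.1f} GFlops")
print(f"saxpy bandwidth bound: {saxpy_bound / 1e9:.2f} GFlops")
print(f"7 GFlops as a fraction of peak: {ising_fraction:.2%}")
```

The saxpy bound is well below the arithmetic peak, which illustrates why a streaming kernel with so little arithmetic per byte ends up limited by memory bandwidth rather than by the pixel processors.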
Slide 11 / 16

Summary of CPU vs GPU

• General vs specialized hardware
  – CPUs have more complex control hardware
  – GPUs can have hardware acceleration for specific tasks
• Sequential vs parallel programming models
  – In general, CPUs don’t have the GPU’s level of data parallelism (though some may be available: Intel’s SSE and PowerPC’s AltiVec instruction sets)
• Memory latency vs bandwidth optimization

Slide 12 / 16

Some application examples

• Monte Carlo simulations
  – Used in a variety of simulations in physics, finance, chemistry, etc.
  – Based on probability and statistics; use random numbers
  – A classical example: computing the area of a circle
  – Computation of expected values:

    $$E(F) = \sum_{i=1}^{N} F(S_i) \, P(S_i)$$

  – N can be very large: on a 1024 x 1024 lattice of particles, with every particle modeled to have k states, $N = k^{1024^2}$
  – Random number generation. We used a linear congruential type generator:

    $$R(n) = (a \cdot R(n-1) + b) \bmod N$$

Slide 13 / 16

Some application examples

• Monte Carlo simulations
  – Ising model
    • Simplified model for magnets
    • Evolve the system into “higher probability” states and compute expected values as averages over only those states
  – Percolation
    • In studies of disease spreading, flow in porous media, forest fire propagation, clustering, etc.
• Lattice Boltzmann method
  – Simulates fluid flow; particles are allowed to move and collide on a lattice

Slide 14 / 16

Some performance results

• saxpy on 512 x 512 (x 4) vectors: 1 GFlop
  – speed limited by GPU memory bandwidth (16 GB/s)
• sin, cos, exp, log: 20 times faster than on a Pentium 4, 2.8 GHz
  – hardware-accelerated, at low accuracy
• Ising model: 7 GFlops
  – 44% of theoretical maximum
  – On a fragment program compiled to 109 assembly instructions

Slide 15 / 16

Conclusions

• What to expect from future GPGPUs?
• Can GPGPUs influence future computer systems? (HPC and consequently our models of software development: is IBM’s Cell processor already an example?)
• Current trends:
  – CPU: multi-core
  – GPU: more powerful streaming model (gather, scatter, conditional streams, reduction, etc.); more CPU functionality

Slide 16 / 16
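
The classical Monte Carlo example named on the application slides, the area of a circle, can be sketched on the CPU with the same kind of linear congruential generator, R(n) = (a * R(n-1) + b) mod N. This is a minimal illustration, not the author's GPU implementation: the slides do not give the constants, so the values of a, b, and N below are an assumption (a commonly published 32-bit parameter set), and the function names are hypothetical.

```python
def lcg(seed, a=1664525, b=1013904223, n=2**32):
    """Yield floats in [0, 1) from R(k) = (a * R(k-1) + b) mod n.

    ASSUMPTION: a, b, and n are illustrative constants, not values
    taken from the slides.
    """
    r = seed
    while True:
        r = (a * r + b) % n
        yield r / n


def circle_area(radius, samples, seed=12345):
    """Estimate a circle's area by sampling points in its bounding square."""
    rng = lcg(seed)
    hits = 0
    for _ in range(samples):
        # Map two uniform values from [0, 1) onto [-radius, radius).
        x = (2.0 * next(rng) - 1.0) * radius
        y = (2.0 * next(rng) - 1.0) * radius
        if x * x + y * y <= radius * radius:
            hits += 1
    # The fraction of hits approximates circle area / square area.
    square_area = (2.0 * radius) ** 2
    return square_area * hits / samples


if __name__ == "__main__":
    print(circle_area(1.0, 200_000))  # should be close to pi
```

Each sample is independent of the others, which is what makes this kind of computation map naturally onto the streaming model: on a GPU, every fragment could carry its own generator state and test its own points.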