* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download MTH6140 Linear Algebra II

Survey

Document related concepts

Symmetric cone wikipedia , lookup

Euclidean vector wikipedia , lookup

Exterior algebra wikipedia , lookup

Laplace–Runge–Lenz vector wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Matrix calculus wikipedia , lookup

Vector space wikipedia , lookup

Principal component analysis wikipedia , lookup

Covariance and contravariance of vectors wikipedia , lookup

Four-vector wikipedia , lookup

System of linear equations wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Jordan normal form wikipedia , lookup

Transcript

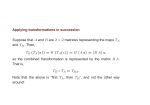

MTH6140 Linear Algebra II Assignment 7 Solutions

1. (a) Observe that $D_1^2 = D_1$, $D_2^2 = D_2$, $D_1 D_2 = D_2 D_1 = O$ and $D_1 + D_2 = I$. We have $\Pi_1^2 = (P D_1 P^{-1})(P D_1 P^{-1}) = P D_1 D_1 P^{-1} = P D_1 P^{-1} = \Pi_1$, so $\Pi_1$ is a projection, and similarly for $\Pi_2$. Also $\Pi_1 \Pi_2 = (P D_1 P^{-1})(P D_2 P^{-1}) = P D_1 D_2 P^{-1} = P O P^{-1} = O$, and similarly for $\Pi_2 \Pi_1$, as required. Finally $\Pi_1 + \Pi_2 = P D_1 P^{-1} + P D_2 P^{-1} = P (D_1 + D_2) P^{-1} = P I P^{-1} = I$.

2. Suppose $v, v' \in V$ and $c \in K$. We need to show, for any $1 \le i \le r$, that $\pi_i(v + v') = \pi_i(v) + \pi_i(v')$ and $\pi_i(cv) = c\,\pi_i(v)$. For the first of these, let $v = u_1 + \cdots + u_r$ and $v' = u_1' + \cdots + u_r'$ be the expressions for $v$ and $v'$, with $u_i, u_i' \in U_i$ for all $i$. Then $v + v' = (u_1 + u_1') + \cdots + (u_r + u_r')$ with $u_i + u_i' \in U_i$ for all $i$. So, by the definition of $\pi_i$, we have $\pi_i(v + v') = u_i + u_i' = \pi_i(v) + \pi_i(v')$. The second identity, $\pi_i(cv) = c\,\pi_i(v)$, can be dealt with similarly.

Suppose $v \in V$, and write $v = u_1 + \cdots + u_r$ as above. By definition, $\pi_i(v) = u_i$. If we express $u_i$ as $u_i = w_1 + \cdots + w_r$, with $w_k \in U_k$ for all $k$, then, by uniqueness of such expressions, $w_i = u_i$ and $w_j = 0$ for $j \ne i$. Thus $\pi_i^2(v) = \pi_i(\pi_i(v)) = \pi_i(u_i) = w_i = u_i = \pi_i(v)$, and $\pi_i$ is a projection.

If $j \ne i$ then, with the $w_k$ as above, $\pi_j \pi_i(v) = \pi_j(\pi_i(v)) = \pi_j(u_i) = w_j = 0$, and hence $\pi_j \pi_i = 0$.

Suppose $v \in V$, and write $v = u_1 + \cdots + u_r$ as usual. Then $(\pi_1 + \cdots + \pi_r)(v) = \pi_1(v) + \cdots + \pi_r(v) = u_1 + \cdots + u_r = v$; thus $\pi_1 + \cdots + \pi_r = I$.

Since $\pi_i(v) \in U_i$ for all $v \in V$, we have $\mathrm{Im}(\pi_i) \subseteq U_i$. Conversely, for every $u_i \in U_i$ we have $\pi_i(u_i) = u_i$, so $U_i \subseteq \mathrm{Im}(\pi_i)$.

3. (a) We are asked to solve
$\begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \begin{pmatrix} a \\ b \end{pmatrix} = \lambda \begin{pmatrix} a \\ b \end{pmatrix}$.
We have $b = \lambda a$ and $-a = \lambda b$, and hence $a = -\lambda^2 a$. If $a = 0$ then $b = 0$, but the zero vector is never an eigenvector. Otherwise, $\lambda^2 = -1$, which has no solution in $\mathbb{R}$. So in this case, the linear map has no eigenvalues (and no eigenvectors).

(b) As before, we can eliminate the possibility that $a = 0$. But over $\mathbb{C}$, when $a \ne 0$, we have the solutions (eigenvalues) $\lambda = \pm i$.
The corresponding eigenvectors are $\begin{pmatrix} 1 \\ i \end{pmatrix}$ and $\begin{pmatrix} 1 \\ -i \end{pmatrix}$. (Any non-zero scaling of these will also be an eigenvector.)

(c) Using what we have learned so far, we might guess that something like $\begin{pmatrix} 0 & 1 \\ 2 & 0 \end{pmatrix}$ will be a suitable candidate. Indeed, repeating the above calculation, we find that $\lambda^2 = 2$, which is not solvable over $\mathbb{Q}$, but yields eigenvalues $\pm\sqrt{2}$ over $\mathbb{R}$.

4. Suppose $v, v' \in E(\lambda, \alpha)$. Then $\alpha(v) = \lambda v$ and $\alpha(v') = \lambda v'$, and hence $\alpha(v + v') = \alpha(v) + \alpha(v') = \lambda(v + v')$. This shows that $v + v' \in E(\lambda, \alpha)$. Also, $\alpha(cv) = c\,\alpha(v) = c\lambda v = \lambda(cv)$, and hence $cv \in E(\lambda, \alpha)$. Since $E(\lambda, \alpha)$ is non-empty (it contains $0$) and closed under vector addition and scalar multiplication, it is a subspace of $V$.

5. (a) $D$ maps each non-constant polynomial to a polynomial of degree one lower, and each constant polynomial to $0$. So $D$ has only one eigenvalue, namely $0$, with corresponding eigenvector $1$ (the constant polynomial $1$). Naturally, any constant polynomial not equal to $0$ is an equally good eigenvector.

Or, symbolically, writing $f(x) = ax^2 + bx + c$, we are looking for solutions to $f'(x) = 2ax + b = \lambda a x^2 + \lambda b x + \lambda c = \lambda f(x)$. Equating coefficients, we see that $\lambda a = 0$, $\lambda b = 2a$ and $\lambda c = b$. There are two cases. If $\lambda = 0$ then $a = b = 0$, leading to the eigenvector $1$ identified above. If $\lambda \ne 0$, then $a = b = c = 0$ and there are no corresponding eigenvectors. (Recall that the zero vector is not an eigenvector, by definition.)

(b) If $f(x) = ax^2 + bx + c$, then $x f'(x) = 2ax^2 + bx$. For $f$ to be an eigenvector, $2ax^2 + bx = \lambda(ax^2 + bx + c)$ for some $\lambda$, and hence $a(2 - \lambda) = b(1 - \lambda) = \lambda c = 0$. So there are three possible eigenvalues, namely $\lambda = 0$, $\lambda = 1$ and $\lambda = 2$, with corresponding eigenvectors $1$, $x$ and $x^2$ (or some scaling of these).

(c) The eigenvectors $(1, x, x^2)$ of $\hat{D}$ form a basis for $V_3$, so $\hat{D}$ is diagonalisable (in fact the matrix relative to the basis $(1, x, x^2)$ is diagonal), while $D$ has only one eigenvector up to scaling and so is not diagonalisable.

6. Letting $v = \begin{pmatrix} a & b & c \end{pmatrix}^\top$, solving $Av = 2v$ yields $2a + b + c = 0$.
This equation defines a subspace of dimension 2, and a possible basis for it is $\begin{pmatrix} 1 & 0 & -2 \end{pmatrix}^\top$, $\begin{pmatrix} 0 & 1 & -1 \end{pmatrix}^\top$: this is $E(2, \alpha)$. (A variety of bases are possible: any two vectors satisfying $2a + b + c = 0$ that are not multiples of each other.)

Solving $Av = 3v$ yields $2a + c = 0$ and $a + b = 0$. These equations define a subspace of dimension 1, with basis $\begin{pmatrix} -1 & 1 & 2 \end{pmatrix}^\top$: this is $E(3, \alpha)$.
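
The eigenspaces found in Question 6 can be sanity-checked numerically, and they also give a concrete instance of the projections from Question 1. The sketch below is an illustration only. The matrix $A$ is not reproduced in this transcript, so the code reconstructs the matrix determined by the stated eigenspaces, as $2\Pi_1 + 3\Pi_2$; that reconstruction is an assumption, not a copy of the assignment sheet. The helper names (`matmul`, `matvec`, `dot`) and the normal vector `n` are introduced here for illustration.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matvec(X, v):
    """Product of a matrix (list of rows) with a vector (list)."""
    return [sum(x * c for x, c in zip(row, v)) for row in X]


def dot(u, v):
    """Standard dot product of two vectors."""
    return sum(x * y for x, y in zip(u, v))


# Bases stated in the solution to Question 6.
E2_BASIS = [[1, 0, -2], [0, 1, -1]]
E3_BASIS = [[-1, 1, 2]]

# E(2) is the plane 2a + b + c = 0, so n is its coefficient (normal) vector.
n = [2, 1, 1]
w = E3_BASIS[0]

IDENTITY = [[Fraction(int(i == j)) for j in range(3)] for i in range(3)]
ZERO = [[0] * 3 for _ in range(3)]

# PI2 projects onto E(3) along E(2): it sends v to (n.v / n.w) * w.
PI2 = [[Fraction(w[i] * n[j], dot(n, w)) for j in range(3)] for i in range(3)]
# PI1 = I - PI2 projects onto E(2) along E(3).
PI1 = [[IDENTITY[i][j] - PI2[i][j] for j in range(3)] for i in range(3)]

# ASSUMPTION: A is rebuilt from the eigenspaces, not taken from the source.
A = [[2 * PI1[i][j] + 3 * PI2[i][j] for j in range(3)] for i in range(3)]

# Properties proved in Question 1.
assert matmul(PI1, PI1) == PI1 and matmul(PI2, PI2) == PI2
assert matmul(PI1, PI2) == ZERO and matmul(PI2, PI1) == ZERO
assert [[PI1[i][j] + PI2[i][j] for j in range(3)] for i in range(3)] == IDENTITY

# Eigenvector checks for Question 6.
for v in E2_BASIS:
    assert dot(n, v) == 0                      # v lies in the plane
    assert matvec(A, v) == [2 * x for x in v]  # A v = 2 v
assert matvec(A, w) == [3 * x for x in w]      # A w = 3 w

print([[int(x) for x in row] for row in A])
# prints [[0, -1, -1], [2, 3, 1], [4, 2, 4]]
```

Exact rational arithmetic (`fractions.Fraction`) is used instead of floating point so that the equalities can be tested without tolerances. With $P$ taken to have the three basis vectors as columns, `PI1` and `PI2` coincide with $P D_1 P^{-1}$ and $P D_2 P^{-1}$ for $D_1 = \mathrm{diag}(1, 1, 0)$ and $D_2 = \mathrm{diag}(0, 0, 1)$; the code avoids computing $P^{-1}$ only to stay short.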