* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download Some ideas from thermodynamics

Thermal radiation wikipedia , lookup

Countercurrent exchange wikipedia , lookup

Van der Waals equation wikipedia , lookup

Equipartition theorem wikipedia , lookup

Heat capacity wikipedia , lookup

Calorimetry wikipedia , lookup

Thermoregulation wikipedia , lookup

Heat transfer wikipedia , lookup

Conservation of energy wikipedia , lookup

Heat equation wikipedia , lookup

First law of thermodynamics wikipedia , lookup

Temperature wikipedia , lookup

Thermal conduction wikipedia , lookup

Equation of state wikipedia , lookup

Internal energy wikipedia , lookup

Heat transfer physics wikipedia , lookup

Chemical thermodynamics wikipedia , lookup

Thermodynamic temperature wikipedia , lookup

Second law of thermodynamics wikipedia , lookup

Thermodynamic system wikipedia , lookup

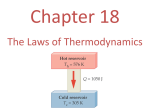

Physics of the Human Body, Chapter 6

Some ideas from thermodynamics

The branch of physics called thermodynamics deals with the laws governing the behavior of physical systems at finite temperature. These laws are phenomenological, in the sense that they represent empirical relations between various observed quantities. These quantities include pressure, temperature, density and other measurable parameters that define the state of a given system. Thus, in discussing magnetic systems, e.g., the variables might include temperature, magnetization and external magnetic field; in discussing elastic systems we might want to use temperature, stress and strain as defining variables.

Some useful references for the student who wants to pursue the subject further are

1. F.W. Sears, Thermodynamics, 2nd ed. (Addison-Wesley Publishing Co., Reading, MA, 1963).
2. K. Huang, Statistical Mechanics (John Wiley and Sons, Inc., New York, 1963).
3. R.P. Feynman, Statistical Mechanics (W.A. Benjamin, Inc., Reading, MA, 1972).

1. The First Law of Thermodynamics

We imagine a system such as a gas, where temperature, pressure and density are the appropriate system-defining variables. Then conservation of energy states that the following differential relationship must hold:

$$dU = dQ - dW \qquad (1)$$

where dQ represents (a small amount of) heat flowing into the system from some heat source, dW the mechanical work done by the system, and dU the change in internal energy. The quantity U depends, by assumption, only on the present state of the system and not on the history of how the system got into that state. In that case dU must be, in the mathematical sense, an exact differential.

To understand what this means, consider the work done by sliding a heavy block up an inclined plane, along either a straight path or a wiggly one. There is a force acting downhill (gravity) as well as friction.
Obviously the work done traversing the wiggly path up the hill is greater than that along the straight path, since friction always acts opposite to the direction of motion. That is, the part of the work arising from overcoming gravity is independent of path (because gravity is a conservative force), but the part used to overcome friction is path-dependent (because friction is a dissipative force). In other words, the work output depends on how the job is done, so dW is not an exact differential. Similarly, the heat input depends on the details of how the heat enters the system (for example, it can depend on the rate at which heat is supplied), so dQ is also not an exact differential. But experience indicates that the internal energy of a system does not depend on how it got there, hence we postulate that dU is exact. If this postulate were wrong, conclusions deduced from it would at some point disagree with experience. So far no such cases have arisen; moreover, the microscopic theory of thermodynamics (that is, statistical mechanics) strongly suggests that dU is exact.

2. Equation of state

We can use different sets of variables to define the state of a thermodynamic system. For example we can use temperature and pressure, pressure and volume, or temperature and volume. The reason we have only two independent variables is that the three variables p, V, T are related by an equation of state, which takes the form

$$f(p, V, T) = 0 . \qquad (2)$$

For example, one typical equation of state is the perfect gas law,

$$pV = \nu R T \qquad (3)$$

where R is the perfect gas constant (numerical value ≈ 8.3 J/(K·mol)) and ν is the number of gram-moles present. Another well-known equation of state is the Van der Waals equation,

$$\left(p + a n^2\right)\left(1 - n V_0\right) = n k_B T \qquad (4)$$

where n is the number density of the molecules, a is a constant representing an average (attractive) intermolecular interaction energy, and $V_0$ is proportional to the volume of a molecule (that is, if there are N molecules the available volume is $V - N V_0$ rather than V). Also $k_B = R/N_A$ is Boltzmann's constant.

The Van der Waals equation of state represents an attempt to take into account two physical effects that cause actual gases to deviate from the behavior of an ideal gas: intermolecular forces, and the finite size of molecules. Although it is not a precise representation of any gas (except possibly over a limited range of the variables), Eq. (4) is useful in understanding qualitatively the behavior of gases in phase transitions.

3. Thermodynamic relations

Because the internal energy differential dU is (by hypothesis) perfect, we immediately derive certain relationships. That is, imagine it depends on pressure and volume, $U \equiv U(p, V)$, so that

$$dU = \left(\frac{\partial U}{\partial p}\right)_V dp + \left(\frac{\partial U}{\partial V}\right)_p dV . \qquad (5)$$

Now imagine that we go from internal energy $U_1 = U(p_1, V_1)$ to $U_2 = U(p_2, V_2)$ via two different paths, as shown in the accompanying figure. Since the result is independent of path, it must be true that

$$\oint_\Gamma dU = \oint_\Gamma \left[ f(p, V)\,dp + g(p, V)\,dV \right] = 0 ,$$

where the integral is taken around the closed path Γ formed by going out along one path and back along the other, and $f \equiv (\partial U/\partial p)_V$, $g \equiv (\partial U/\partial V)_p$. Now, by noting that if the result is true for a large path it must also be true for a small square path with sides dp and dV,

[Figure: small square path in the p-V plane; its four sides contribute g(p)dV, f(V+dV)dp, −g(p+dp)dV and −f(V)dp.]

we find

$$\left[g(p, V) - g(p+dp, V)\right] dV + \left[f(p, V+dV) - f(p, V)\right] dp = 0 ,$$

or

$$\left(\frac{\partial f}{\partial V} - \frac{\partial g}{\partial p}\right) dp\,dV = 0 ,$$

or finally

$$\frac{\partial}{\partial V}\left(\frac{\partial U}{\partial p}\right)_V = \frac{\partial}{\partial p}\left(\frac{\partial U}{\partial V}\right)_p .$$

In other words, for dU to be an exact differential, the order in which its second derivatives with respect to p and V are taken must be immaterial. (This result is just Stokes's theorem in two dimensions.)

Since the work output can be written dW = p dV, we can express the heat input into a system in the alternate forms

$$dQ = \left(\frac{\partial U}{\partial p}\right)_V dp + \left[p + \left(\frac{\partial U}{\partial V}\right)_p\right] dV \qquad (5a)$$

$$dQ = \left[p\left(\frac{\partial V}{\partial T}\right)_p + \left(\frac{\partial U}{\partial T}\right)_p\right] dT + \left[p\left(\frac{\partial V}{\partial p}\right)_T + \left(\frac{\partial U}{\partial p}\right)_T\right] dp \qquad (5b)$$

$$dQ = \left(\frac{\partial U}{\partial T}\right)_V dT + \left[p + \left(\frac{\partial U}{\partial V}\right)_T\right] dV . \qquad (5c)$$

The specific heat at constant volume is defined by

$$c_V = \left(\frac{\Delta Q}{\Delta T}\right)_V \equiv \left(\frac{\partial U}{\partial T}\right)_V , \qquad (6)$$

where the last relation follows from letting dV = 0 in (5c). The specific heat at constant pressure is defined as

$$c_p = \left(\frac{\Delta Q}{\Delta T}\right)_p \equiv \left(\frac{\partial H}{\partial T}\right)_p , \qquad (7)$$

where the enthalpy H is defined as H = U + pV (the last relation follows from letting dp = 0 in (5b)).

[Figure: Joule's free-expansion experiment.]

If a thermally isolated ideal gas is allowed to expand slowly into a vacuum, as shown in the figure, it is found that the temperature does not change, even though the volume may change substantially. Since the system is thermally isolated, the heat input must be zero. Moreover, no work is done in the expansion, since no force is exerted on anything. Therefore we deduce that the internal energy of an ideal gas must be unchanged in the experiment; that is, the internal energy of an ideal gas depends neither on volume nor on pressure, but solely on temperature. From this experimental result we further deduce that the internal energy of the ideal gas has the form

$$U = c_V T + \text{constant} . \qquad (8)$$

Since the origin of the energy scale is arbitrary (that is, the constant is unmeasurable), we may set the constant to zero.

For an ideal gas, since pV = νRT, we see that the enthalpy is also a function of temperature only, $H = U + pV \equiv (c_V + R)\,T$ (for one mole); that is, the molar specific heats $c_p$ and $c_V$ are related by

$$c_p = c_V + R .$$

4. Heat engines and Carnot efficiency

The first law of thermodynamics is really nothing more than the law of conservation of energy, taking into account that heat is a form of energy. The key difference between heat and other forms of energy, however, becomes manifest in the process of conversion from one form of energy to another.
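The distinction between the exact differential dU and the inexact differentials dQ and dW is what makes a heat engine possible: around a closed cycle U returns to its starting value, yet net heat can be converted into net work. The minimal sketch below assumes 1 mol of a monatomic ideal gas ($c_V = 3R/2$, our choice), with U given by Eq. (8), and made-up end states; it integrates dU, dW = p dV and dQ = dU + dW along two routes between the same states and around the cycle they form. The function names are our own.

```python
# Minimal sketch: exact vs. inexact differentials for 1 mol of ideal gas.
# Assumptions (ours, not the text's): a monatomic gas with C_V = 3R/2, and the
# particular pressures/volumes below. U = C_V * T = C_V * p * V / R, from Eq. (8).

R = 8.314          # gas constant, J/(K mol)
C_V = 1.5 * R      # assumed monatomic molar heat capacity


def dU_coefficients(p, V):
    """Return (dU/dp)_V and (dU/dV)_p for U = C_V p V / R."""
    return C_V * V / R, C_V * p / R


def line_integrals(path, steps=2000):
    """Integrate dU, dW = p dV and dQ = dU + dW along straight segments.

    `path` is a list of (p, V) vertices. Each segment is split into `steps`
    pieces and the integrand is evaluated at each piece's midpoint.
    """
    total_dU = 0.0
    total_dW = 0.0
    for (p0, V0), (p1, V1) in zip(path[:-1], path[1:]):
        dp = (p1 - p0) / steps
        dV = (V1 - V0) / steps
        for k in range(steps):
            t = (k + 0.5) / steps
            p = p0 + t * (p1 - p0)
            V = V0 + t * (V1 - V0)
            f, g = dU_coefficients(p, V)
            total_dU += f * dp + g * dV
            total_dW += p * dV
    return total_dU, total_dW, total_dU + total_dW


A = (1.0e5, 0.020)                 # initial state: p in Pa, V in m^3
B = (2.0e5, 0.030)                 # final state
path1 = [A, (2.0e5, 0.020), B]     # heat at constant V, then expand at constant p
path2 = [A, (1.0e5, 0.030), B]     # expand at constant p, then heat at constant V
cycle = path1 + path2[::-1][1:]    # out along path 1, back along path 2

for name, path in (("path 1", path1), ("path 2", path2), ("cycle", cycle)):
    dU, W, Q = line_integrals(path)
    print(f"{name}: dU = {dU:8.1f} J   W = {W:8.1f} J   Q = {Q:8.1f} J")
```

Both routes give ΔU = 6000 J, but the work is 2000 J along path 1 and only 1000 J along path 2, so the heat absorbed differs as well; around the closed cycle ΔU vanishes (up to rounding) while about 1000 J of heat has been converted into work, equal to the area enclosed in the p-V plane. Because the integrands are linear along each straight segment, the midpoint rule is exact here apart from floating-point rounding.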
Electrical energy (in the form of charge on a capacitor or a current in a wire) can in principle[1] be converted 100% into mechanical energy (work), chemical energy (charging a battery, or electrolysis, e.g.), electromagnetic energy (radio, light), or heat (radiant heater, oven, etc.). For that reason we say that electricity is "high grade" energy. Similarly, chemical energy can be converted into electrical energy (battery, fuel cell), or into mechanical work, with high efficiency.

Heat is different. If we want to convert heat into mechanical work or electricity using engines or engine-driven generators, the fundamental nature of heat imposes a limitation on the maximum efficiency that can be achieved with the most perfect machine conceivable. This limitation was discovered by the French engineer Sadi Carnot.

Carnot's argument was based on a heat engine using a perfect gas as its working fluid. The first part of the argument is the assumption that perpetual motion, in the form of an engine that creates more energy than it consumes, is impossible. Thus if we consider heat engines, we are entitled to imagine one that has no heat leaks, friction or other ways in which energy can be used unproductively. The most perfect kind of heat engine is one that begins in a certain state (pressure, volume), takes heat $Q_1$ from a source at temperature $T_1$, produces some work $W \le Q_1$, and, if there is any leftover heat $Q_2 \equiv Q_1 - W$ that has not been converted to work, dumps it into a heat sink at temperature $T_2$. Then the system is restored to its original state and the engine is in a position to repeat these actions.

The key to getting the most work possible for a given heat input is to make all parts of the cycle reversible. That is, when heat is moved from the source to the engine, it is done with the engine at the same temperature as the source, and so slowly that one can reverse the engine and put the heat back with no loss or change of state. This is illustrated below.
[1] Practical considerations (friction, resistance, etc.) restrict the achievable conversion efficiencies to less than 100%, of course.

In the first step, a cylinder of gas at temperature $T_1$ is placed in contact with the source and slowly expanded, extracting heat and doing work. The cylinder is then removed from the source and insulated so no heat can flow in or out. The piston is further expanded, doing more work and allowing the temperature to fall to $T_2$. The cylinder is then placed in contact with the sink and the gas compressed so that heat flows into the sink. (Work has to be put in to compress the gas.) Finally, the cylinder is removed from the sink and insulated so no heat flows in or out, and the gas is compressed still further until the temperature has come back to $T_1$. The gas is now back in its original state.

How do we know the system returns to its original state at the end of a cycle? The answer is that we must show it is possible to construct a cycle that does precisely this. If the working fluid is a perfect gas, we may find relations for the two types of expansion (or compression). When the cylinder is in contact with a heat reservoir, we say a change of volume is isothermal. When it is isolated, the change of volume is adiabatic. In mathematical terms, isothermal means dT = 0, whereas adiabatic means dQ = 0.

[Figure: Carnot cycle in the pressure-volume plane, made up of isothermal and adiabatic segments, with corners labeled 1 to 4.]

In an isothermal process (we assume we are working with 1 mole of gas)

$$pV = RT = \text{constant} ,$$

whereas in an adiabatic process

$$dU \equiv c_V\,dT = -p\,dV .$$

Applying the perfect gas equation of state, which gives p dV = R dT − V dp, we have

$$c_V\,dT = -R\,dT + V\,dp ,$$

or, with $dT = \frac{1}{R}\,d(pV)$ and $c_p = c_V + R$,

$$\left(\frac{c_p}{R} - 1\right) V\,dp + \frac{c_p}{R}\,p\,dV = 0 , \qquad (9)$$

or

$$\frac{dp}{p} + \frac{c_p}{c_V}\,\frac{dV}{V} = 0 ,$$

and

$$p = p_0 \left(\frac{V}{V_0}\right)^{-c_p/c_V} .$$

Since

$$\gamma \equiv \frac{c_p}{c_V} > 1 , \qquad (10)$$

the curve of pressure vs. volume is steeper than that of an isotherm passing through the same point.
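Equation (9) and the claim that adiabats are steeper than isotherms can be checked numerically. The sketch below, assuming a monatomic ideal gas ($\gamma = 5/3$) and an arbitrary starting state chosen only for illustration, integrates $dp/p + \gamma\,dV/V = 0$ with a classical fourth-order Runge-Kutta step, compares the result with the closed form $p = p_0 (V/V_0)^{-\gamma}$, and prints the slopes $dp/dV = -p/V$ (isotherm) and $-\gamma p/V$ (adiabat) at the common starting point.

```python
GAMMA = 5.0 / 3.0   # assumed: monatomic ideal gas, c_p/c_V = (5/2)R / (3/2)R


def adiabat_closed_form(V, p0, V0, gamma=GAMMA):
    """p = p0 (V/V0)^(-gamma): the integrated form of dp/p + gamma dV/V = 0."""
    return p0 * (V / V0) ** (-gamma)


def adiabat_numeric(V_end, p0, V0, gamma=GAMMA, steps=10_000):
    """Integrate dp/dV = -gamma p / V from (V0, p0) with classical RK4."""

    def rhs(V, p):
        return -gamma * p / V

    h = (V_end - V0) / steps
    V, p = V0, p0
    for _ in range(steps):
        k1 = rhs(V, p)
        k2 = rhs(V + h / 2, p + h * k1 / 2)
        k3 = rhs(V + h / 2, p + h * k2 / 2)
        k4 = rhs(V + h, p + h * k3)
        p += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        V += h
    return p


p0, V0 = 1.0e5, 0.0224   # illustrative starting state (Pa, m^3)
V_f = 2.0 * V0           # expand to twice the volume

print("adiabat, numeric     :", adiabat_numeric(V_f, p0, V0))
print("adiabat, closed form :", adiabat_closed_form(V_f, p0, V0))
print("isotherm pV = const  :", p0 * V0 / V_f)
# Slopes dp/dV at the common starting point: -p/V (isotherm) vs -gamma p/V (adiabat).
print("slopes:", -p0 / V0, -GAMMA * p0 / V0)
```

Doubling the volume lowers the pressure to $p_0/2$ along the isotherm but to $2^{-5/3}p_0 \approx 0.31\,p_0$ along the adiabat, and the numerical and closed-form adiabats agree to many digits.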
Thus on the diagram we see that the initial isothermal expansion (from point 1 to point 2) lies on a curve that falls more slowly than the subsequent adiabatic expansion (from point 2 to point 3). Similarly, when the gas is compressed isothermally (point 3 to point 4), the pressure rises less steeply than in the subsequent adiabatic compression (point 4 to point 1) that returns it to the initial point. The possibility of returning to the initial state then depends on being able to return to the initial volume and pressure (point 1 on the diagram). The equation of state guarantees that the temperature will then be the initial temperature, $T_1$. Moreover, since the internal energy of an ideal gas depends only on temperature, U will also have returned to its initial value.

A solution that looks like the figure is possible if the compression ratio λ satisfies

$$\lambda \equiv \frac{V_{\max}}{V_{\min}} > \left(\frac{T_1}{T_2}\right)^{1/(\gamma-1)} . \qquad (11)$$

Then, taking $V_1 = V_{\min}$ and $V_3 = V_{\max}$, and using the fact that $TV^{\gamma-1}$ is constant along an adiabat (which follows from $pV^{\gamma} = \text{constant}$ and pV = RT), it is easy to see that

$$V_2 = \lambda V_{\min}\left(\frac{T_2}{T_1}\right)^{1/(\gamma-1)} \quad\text{and}\quad V_4 = V_{\min}\left(\frac{T_1}{T_2}\right)^{1/(\gamma-1)} ,$$

so that $V_{\min} < V_2 < V_{\max}$ and $V_{\min} < V_4 < V_{\max}$.

What about the net work done by the engine in this cycle? It is clearly the area enclosed by the intersecting curves,

$$W = \int_1^2 p\,dV + \int_2^3 p\,dV + \int_3^4 p\,dV + \int_4^1 p\,dV = R\,(T_1 - T_2)\,\ln\!\left[\lambda\left(\frac{T_2}{T_1}\right)^{1/(\gamma-1)}\right] .$$

It is worth noting that the areas under the adiabatic parts of the curves,

$$W_{23} = \int_2^3 p\,dV \quad\text{and}\quad W_{41} = \int_4^1 p\,dV ,$$

cancel exactly. This should not be surprising, since on these segments of the cycle ΔQ = 0, and therefore

$$W_{23} = c_V\,(T_1 - T_2) \quad\text{and}\quad W_{41} = c_V\,(T_2 - T_1) \equiv -W_{23} .$$

Now to get the efficiency we need to evaluate the energy extracted from the heat source. This is just the area

$$Q_1 = W_{12} = \int_1^2 p\,dV = R T_1 \ln\!\left[\lambda\left(\frac{T_2}{T_1}\right)^{1/(\gamma-1)}\right] .$$

Hence the Carnot efficiency is

$$\eta = \frac{\Delta W}{Q_1} = \frac{T_1 - T_2}{T_1} .$$

Carnot's proof that this is the maximum possible efficiency of any heat engine is based on the assumption that a machine that creates work from nothing (that is, a perpetuum mobile of the first kind) is impossible. Suppose some other kind of engine could do better than a Carnot-cycle engine that uses an ideal gas. Then we could use it to produce work, and run the Carnot engine backward to return heat to the heat source. That is, if engine X can produce work $\Delta W_X > \Delta W_{\text{Carnot}}$ from the same heat input $Q_1$, then running the Carnot engine backward uses work $\Delta W_{\text{Carnot}}$ to put the heat $Q_1$ back, leaving the source and the sink in their initial conditions, but with a net output of work, $\Delta W_X - \Delta W_{\text{Carnot}} > 0$, having been produced.

This argument of Carnot's is so general and clever that it has been applied in many other circumstances. For example, Einstein used it to show that all forms of energy content must contribute to the weight of an object according to

$$g\,\Delta m = g\,\frac{\Delta E}{c^2} ,$$

for if one form of energy did not contribute to weight, one could in principle use pulleys, ropes and an energy converter to make a perpetual-motion machine.

5. The Second Law of Thermodynamics

Carnot's discussion of the efficiency of heat engines led naturally to the concept of entropy, although it was not until the end of the 19th century that Boltzmann was able to give a physical interpretation of entropy in terms of degree of order and probability.

Now in the Carnot engine, we note that the heat taken out of the reservoir at $T_1$ is

$$Q_1 = W_{12} = R T_1 \ln\!\left[\lambda\left(\frac{T_2}{T_1}\right)^{1/(\gamma-1)}\right]$$

and that dumped into the sink at temperature $T_2$ is

$$Q_2 = -W_{34} = R T_2 \ln\!\left[\lambda\left(\frac{T_2}{T_1}\right)^{1/(\gamma-1)}\right] ;$$

that is,

$$\frac{Q_1}{T_1} = \frac{Q_2}{T_2} . \qquad (12)$$

Like many results in physics, Eq. 12 is more general than the arguments used to derive it.
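The Carnot-cycle formulas can be evaluated directly. The sketch below assumes 1 mol of a monatomic ideal gas ($\gamma = 5/3$) and illustrative values $T_1 = 500$ K, $T_2 = 300$ K, λ = 10 and $V_{\min}$ = 1 liter (all our choices, not the text's). It labels the corners as in the description of the diagram (1 to 2 isothermal expansion at $T_1$, 2 to 3 adiabatic expansion, 3 to 4 isothermal compression at $T_2$, 4 to 1 adiabatic compression), rejects compression ratios that violate Eq. (11), and checks three results: the efficiency equals $(T_1 - T_2)/T_1$, Eq. 12 holds, and the net work equals the enclosed area, obtained here by numerically integrating p dV around the four legs. The function names are hypothetical.

```python
import math

R = 8.314  # gas constant, J/(K mol); one mole of working gas, as in the text


def carnot_cycle(T1, T2, lam, V_min, gamma):
    """Volumes, heats and net work for the ideal-gas Carnot cycle.

    Corner labels: 1->2 isothermal expansion at T1, 2->3 adiabatic expansion,
    3->4 isothermal compression at T2, 4->1 adiabatic compression, with
    V1 = V_min and V3 = lam * V_min.
    """
    k = 1.0 / (gamma - 1.0)
    if lam <= (T1 / T2) ** k:
        raise ValueError("compression ratio does not satisfy Eq. (11)")
    V1, V3 = V_min, lam * V_min
    V2 = lam * V_min * (T2 / T1) ** k       # end of the isothermal expansion
    V4 = V_min * (T1 / T2) ** k             # end of the isothermal compression
    c_V = R / (gamma - 1.0)                 # from c_p = c_V + R and gamma = c_p/c_V
    Q1 = R * T1 * math.log(V2 / V1)         # heat taken from the source (W12)
    Q2 = R * T2 * math.log(V3 / V4)         # heat dumped into the sink (-W34)
    W23, W41 = c_V * (T1 - T2), c_V * (T2 - T1)   # adiabatic legs cancel
    W = Q1 + W23 - Q2 + W41                 # sum of the four leg works
    return {"V1": V1, "V2": V2, "V3": V3, "V4": V4,
            "Q1": Q1, "Q2": Q2, "W": W, "eta": W / Q1}


def enclosed_area(T1, T2, lam, V_min, gamma, n=20_000):
    """Net work as the integral of p dV around the four legs (midpoint rule)."""
    c = carnot_cycle(T1, T2, lam, V_min, gamma)

    def leg(p_of_V, Va, Vb):
        h = (Vb - Va) / n
        return h * sum(p_of_V(Va + (i + 0.5) * h) for i in range(n))

    def adiabat(T_start, V_start):
        p_start = R * T_start / V_start
        return lambda V: p_start * (V_start / V) ** gamma

    return (leg(lambda V: R * T1 / V, c["V1"], c["V2"])      # 1 -> 2
            + leg(adiabat(T1, c["V2"]), c["V2"], c["V3"])    # 2 -> 3
            + leg(lambda V: R * T2 / V, c["V3"], c["V4"])    # 3 -> 4
            + leg(adiabat(T2, c["V4"]), c["V4"], c["V1"]))   # 4 -> 1


args = (500.0, 300.0, 10.0, 1.0e-3, 5.0 / 3.0)   # T1, T2, lambda, V_min, gamma
cyc = carnot_cycle(*args)
print("efficiency :", cyc["eta"], " vs 1 - T2/T1 =", 1 - 300.0 / 500.0)
print("Q1/T1, Q2/T2:", cyc["Q1"] / 500.0, cyc["Q2"] / 300.0)
print("W formula  :", cyc["W"], " W from area:", enclosed_area(*args))
```

With these inputs the efficiency comes out as 0.4 = 1 − 300/500, $Q_1/T_1$ and $Q_2/T_2$ agree, and the closed-form work (about 2.55 kJ) matches the numerically integrated area.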
If we imagine a lot of heat reservoirs (at different temperatures), we could add up all the heats extracted from them, divided by their temperatures, to obtain the integral

$$S \equiv \int_1^2 \frac{dQ}{T} , \qquad (13)$$

with the heat exchanged reversibly, as in the Carnot cycle. It turns out that S is independent of the path the system takes in getting from state 1 to state 2; hence it is another thermodynamic function, like the internal energy U, that depends only on the state of the system. This new thermodynamic function is called entropy. The differential relation

$$dS = \frac{dQ}{T} \qquad (14)$$

is sometimes called the second law of thermodynamics. One of its chief consequences is that in a closed system the entropy always increases or remains the same.

6. Statistical Mechanics

Thermodynamics is something of a misnomer: it deals with equilibrium relations rather than time-dependent ones![2] The theory consists of two laws: the First Law, which is just conservation of energy; and the Second Law, which posits a new thermodynamic function, entropy, that (in a closed system) always increases or remains the same. The remainder of thermodynamics consists of empirical relations (equation of state, specific heat) and relationships that can be derived from them.

[2] …that is, it should really be called thermostatics.

Statistical mechanics is an attempt to derive the laws of thermodynamics from a microscopic description at the atomic level. That is, historically, statistical mechanics accepted the reality of atoms and molecules, even though its early practitioners did not know exactly how big atoms were, and had no means by which to observe them directly![3]

Boyle's Law

Daniel Bernoulli seems to have been the first to use the statistical approach to obtain a practical result, Boyle's Law, which states that at constant temperature the product of pressure and volume of a gas is constant:

$$pV = \text{constant} . \qquad (15)$$
[3] Of course, technological progress has somewhat altered this: we can now isolate and work with individual atoms, as well as visualize the atomic granularity of the surfaces of solids.

His (vastly oversimplified) picture of a gas consisted of a box of point masses whizzing around randomly and colliding elastically with the walls of the box. The particles do not interact with each other. We shall imagine a box in the form of a rectangular parallelepiped of length L and cross-sectional area A, as shown in the accompanying figure.[4] A single molecule collides with the face at the right end of the box (say, lying in the y-z plane) and reverses the x component of its velocity. The momentum transferred to the wall is thus

$$\Delta p_x = 2 m v_x . \qquad (16)$$

The time between such collisions is

$$\Delta t = \frac{2L}{v_x} , \qquad (17)$$

so the average force exerted by this molecule is

$$f_x = \frac{\Delta p_x}{\Delta t} = \frac{m v_x^2}{L} . \qquad (18)$$

If there are N such molecules whizzing about, the total force they exert on the rightmost face of the box is

$$F_x = \sum_{i=1}^{N} \frac{m}{L}\,v_x^2(i) \equiv \frac{N m}{L}\cdot\frac{1}{N}\sum_{i=1}^{N} v_x^2(i) = \frac{N m}{L}\,\langle v_x^2\rangle , \qquad (19)$$

where $\langle v_x^2\rangle$ is the mean-square velocity component in the x-direction. But there is nothing special about one direction or another, so we may write

$$\langle v_x^2\rangle \equiv \frac{1}{3}\,\langle v_x^2 + v_y^2 + v_z^2\rangle = \frac{1}{3}\,\langle v^2\rangle .$$

Now, finally, the force per unit area (that is, the pressure) acting on the rightmost face is

$$p \equiv \frac{F_x}{A} = \frac{N}{LA}\cdot\frac{2}{3}\cdot\frac{m}{2}\,\langle v^2\rangle \equiv \frac{2}{3}\,\frac{N}{V}\,\langle\varepsilon\rangle . \qquad (20)$$

Not only does Eq. 20 express Boyle's Law, it even tells us (once we extend Boyle's Law to the ideal gas law) how the average kinetic energy of a molecule is related to the absolute temperature:

$$RT = \frac{2}{3}\,\frac{N}{\nu}\,\langle\varepsilon\rangle . \qquad (21)$$

If we take one mole of molecules, then ν = 1 and $N = N_A$, so we have

$$\langle\varepsilon\rangle = \frac{3}{2}\,\frac{R}{N_A}\,T \equiv \frac{3}{2}\,k_B T . \qquad (22)$$

Maxwell-Boltzmann distribution

When we study matter from the atomic viewpoint we can ask more detailed questions than those permitted by thermodynamics.
For example, we might ask what fraction of molecules of a perfect gas, at temperature T, have velocities between v and v + dv.

[4] The shape of the container is immaterial. A derivation for a spherical container may be found in G.B. Benedek and F.M.H. Villars, Physics with Illustrative Examples from Medicine and Biology: Statistical Physics (Springer Verlag, New York, 2000), pp. 250-252.

James Clerk Maxwell first derived the probability distribution of molecular velocities in a dilute gas in thermal equilibrium. He argued that if the temperature is constant, then the probability that a molecule has x-component of velocity in the range $[v_x, v_x + dv_x]$ must be the same function as the probability that it has y-component in the range $[v_y, v_y + dv_y]$. That is, the motions in orthogonal directions (and their corresponding probabilities) are statistically independent. The total probability function therefore has the form[5]

$$p(\vec v)\,d^3v = f(v_x)\,f(v_y)\,f(v_z)\,dv_x\,dv_y\,dv_z . \qquad (23)$$

Moreover, $p(\vec v)$ can depend only on the squared magnitude, $|\vec v|^2 \equiv \vec v\cdot\vec v$, of the velocity, since it must be[6] invariant under rotations and reflections of the coordinates. Thus we have

$$p(v_x^2 + v_y^2 + v_z^2) = f(v_x^2)\,f(v_y^2)\,f(v_z^2) .$$

Now suppose we rotate the coordinates so that $v_y^2 = v_z^2 = 0$; then it becomes clear that (letting A = f(0))

$$p(v^2) = A^2 f(v^2) ,$$

or in other words,

$$\ln f(v_x^2 + v_y^2 + v_z^2) + 2\ln A = \ln f(v_x^2) + \ln f(v_y^2) + \ln f(v_z^2) .$$

It is easy to show that the only (continuous) function for which this is true is

$$\ln f(u) = -\alpha u + \Gamma ,$$

where α is an undetermined constant and $\Gamma = \tfrac{1}{2}\ln A^2$ is a normalization constant that we determine from the condition that the total probability that a particle has some velocity is 1. Therefore the velocity distribution function is

$$p(v^2) = A^3 e^{-\alpha v^2} .$$

To determine the constant α we need to calculate the average kinetic energy, since the kinetic theory of gases, as we just saw, relates the average kinetic energy of a molecule to temperature via

$$\left\langle \tfrac{1}{2} m v^2 \right\rangle = \tfrac{3}{2}\,k_B T ;$$

therefore, since

$$\langle v^2\rangle \equiv \frac{\int d^3v\; v^2 e^{-\alpha v^2}}{\int d^3v\; e^{-\alpha v^2}} = \frac{3}{2\alpha} ,$$

we find $\alpha = \tfrac{1}{2}\,m/k_B T$, and

$$p(v^2) = A^3 e^{-\varepsilon/k_B T} , \qquad (24)$$

where ε is the kinetic energy.

[5] The sparseness of the Latin alphabet leads us to use the symbol p to mean both probability, as in this section, and pressure, as elsewhere.

[6] …that is, by symmetry. This is an illustration of the enormous power of symmetry in theoretical physics.

Ludwig Boltzmann noticed that Maxwell's velocity distribution has the form (24), and saw that the law for the variation of gas density with altitude in a uniform gravitational field,

$$\rho(z) = \rho(0)\,e^{-mgz/k_B T} , \qquad (25)$$

was the same law, with kinetic energy replaced by potential energy. He therefore proposed a probability distribution law governing the energies of a complicated system,

$$p(E) = g\,e^{-E/k_B T} , \qquad (26)$$

of which Maxwell's result was a special case.

7. Applications of Maxwell-Boltzmann distributions

We now consider three applications of the distribution law, Eq. 26, each with biological implications.

Liquid-vapor equilibrium

The first concerns the equilibrium of a liquid and a gas in contact, say water and water vapor. We note the well-known fact that energy is required to convert a molecule of water from the liquid to the gaseous phase. This is basically the binding energy of water molecules to the body of liquid. In thermodynamics it is called the chemical potential.
In general the calculation of chemical potentials from first principles (that is, from a knowledge of the microscopic forces acting between water molecules, using the laws of quantum mechanics) is a difficult task that has only been performed for some simple systems. So we shall assume it is a given (slowly varying) function of temperature and pressure. We call this energy µ.

Then according to Boltzmann, the probability for a molecule to be in a state of energy µ relative to the liquid state (that is, to be gas rather than liquid) is proportional to $e^{-\mu/k_B T}$, and we may write the partial pressure of vapor molecules as

$$p_{\text{vap}} = p_0\,e^{-\mu/k_B T} ,$$

where $p_0$ is a constant that, like µ, could in principle be calculated from fundamental considerations, but has not been because of the difficulty of the calculation. That is, we regard µ and $p_0$ as empirical fitting parameters. If we take the logarithm of the vapor pressure and plot it against 1/T, we should obtain a straight line of negative slope, $-\mu/k_B$; as the accompanying figure shows, this is indeed what we find.

Reaction rates

A second application of Boltzmann's Law concerns activation and reaction rates. Suppose that before two molecules can undergo a chemical reaction (one that ultimately releases energy, to be sure!) they have to approach each other within a certain distance. If there is a repulsive potential between them (see the accompanying figure), their motion must surmount the barrier before they can get close enough to react. The height of the barrier is the "activation energy" ΔE; not surprisingly, the fraction of molecules with energies equal to or greater than this energy varies like

$$f \approx e^{-\Delta E/k_B T} .$$

Thus we expect the reaction rate to increase very rapidly with temperature when $k_B T \ll \Delta E$, but only slowly thereafter. The dependence of reaction rate on temperature explains why life as we know it can exist only within a narrow range of temperatures.
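The temperature sensitivity of a Boltzmann factor is easy to quantify. The sketch below evaluates $e^{-\Delta E/k_B T}$ for a hypothetical activation energy ΔE = 0.5 eV (an illustrative value, not one given in the text) at a few temperatures near body temperature, and recovers the slope $-\Delta E/k_B$ of ln f plotted against 1/T; the same construction, with µ in place of ΔE, gives the straight-line vapor-pressure plot described above. The function names are our own.

```python
import math

K_B = 8.617333262e-5  # Boltzmann's constant, eV/K


def boltzmann_factor(energy_eV, T):
    """exp(-E / k_B T): the fraction estimate f used in the text."""
    return math.exp(-energy_eV / (K_B * T))


def slope_vs_inverse_T(energy_eV, T_a, T_b):
    """Slope of ln f plotted against 1/T, from two temperatures.

    For a pure Boltzmann factor this is -E/k_B; with mu in place of E it is
    the slope of the vapor-pressure plot of ln p_vap versus 1/T.
    """
    y_a = math.log(boltzmann_factor(energy_eV, T_a))
    y_b = math.log(boltzmann_factor(energy_eV, T_b))
    return (y_b - y_a) / (1.0 / T_b - 1.0 / T_a)


dE = 0.5  # eV; hypothetical activation energy, chosen only for illustration
for T in (300.0, 310.0, 320.0):
    print(f"T = {T:.0f} K: f = {boltzmann_factor(dE, T):.3e}")

ratio = boltzmann_factor(dE, 310.0) / boltzmann_factor(dE, 300.0)
print("f(310 K) / f(300 K) =", ratio)
print("slope:", slope_vs_inverse_T(dE, 300.0, 320.0), "  -dE/k_B:", -dE / K_B)
```

With this illustrative barrier, a 10 K rise from 300 K to 310 K nearly doubles the factor (a ratio of about 1.87), and the recovered slope matches $-\Delta E/k_B$.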
The effects of temperature are especially severe on muscles: in order to generate mechanical power most efficiently, muscles must be maintained at a definite temperature. It is interesting to note that certain large fishes (marlin, great white sharks, and so on) actually maintain their cruising muscles well above the ambient temperature of the water.[7] They are then able to use oxygen more efficiently, meaning they need less muscle for the same propulsive power, can thereby be more streamlined, and thus obtain a competitive edge in the evolutionary struggle.

[7] C.J. Pennycuick, Newton Rules Biology (Oxford U. Press, New York, 1992), pp. 33-36.

Dissolved gases

The third application of Boltzmann's Law concerns the solution of gases in liquids, which is crucial to understanding oxygen transport by blood. Animals above a certain size cannot rely on the amount of oxygen that can dissolve in their body fluids to meet the demands of sustained metabolism. Therefore they have evolved a transport mechanism of much greater capacity. Red blood cells represent about 40% of the blood volume. The volume of a red blood cell is $1.6\times10^{-16}$ m³, and it contains $2.8\times10^{8}$ molecules of hæmoglobin. A single hæmoglobin molecule binds 4 O₂ molecules. Thus we may ask: what is the ratio of O₂ dissolved in plasma to hæmoglobin-borne O₂ in fully oxygenated blood?

To answer this question we must understand how a gas dissolves in a liquid. Assuming the solution is dilute, $n_{\text{gas}} \ll n_{\text{liq}}$, we understand that a gas molecule, when it dissolves, releases an energy µ (the binding to the fluid). It also incurs an energy cost, because the molecules of the fluid are forced to rearrange themselves to accommodate the intruder. Let us say the net energy δE is positive (rearrangement cost exceeds binding); then we should expect the fraction of molecules in the dissolved phase to be $f_{\text{dis}} \approx e^{-\delta E/k_B T}$. At equilibrium we might expect the partial pressure in the fluid to roughly equal the gas pressure outside, hence

$$\frac{n_{\text{gas}}}{n_{\text{liq}}} \propto \frac{p_g\,\delta V}{k_B T}\,e^{-\delta E/k_B T} \equiv p_g\,K(T) .$$

The latter is called Henry's Law, and K(T) is called the solubility of the gas. For O₂ in water at 25 °C, the solubility is $3.03\times10^{-8}$ mm⁻¹. (That is, the pressure must be measured in mm of Hg.) Air pressure is about 760 mm. About 20% of it is O₂, so the dissolved oxygen concentration must be (at most) $6\times10^{-4}$ mol per liter of water. With 6 liters of blood in the body, and 60% of the volume of the blood being liquid (assumed to be water), we get about 0.002 moles of dissolved gas. The total number of hæmoglobin molecules is about

$$N_H \approx 2.8\times10^{8} \times \frac{0.4 \times 6\times10^{-3}\ \text{m}^3}{1.6\times10^{-16}\ \text{m}^3} \approx 0.42\times10^{22} .$$

There are 4 oxygen molecules per hæmoglobin molecule, giving about 0.028 mol of oxygen carried by the hæmoglobin. In other words, the hæmoglobin carries about 14 times as much oxygen as can dissolve in the blood.

The bends

The physics of solution of gases in liquids also explains caisson disease, or "the bends", which afflicted so many laborers working at high pressure during the 19th century[8] and remains a hazard to scuba divers. Although nitrogen gas (the dominant fraction of our atmosphere) is not very soluble in blood, as the external pressure rises, more dissolves (see below). When a diver brings his own air supply underwater, it must be supplied at the pressure of the surrounding water, or the effort of breathing would soon exceed the diver's strength. Thus in a deep dive for extended periods, a considerable amount of nitrogen dissolves in the blood. If the external pressure is removed too rapidly (for example, by the diver rising too swiftly), the nitrogen can no longer remain in solution and forms bubbles, rather like a bottle of soda that has been shaken and then opened quickly.
These bubbles can lodge in joints, where they inflict great pain (causing the victim to bend over in distress); or they can press on nerves, causing paralysis; or they can even cause embolisms, blocking blood flow to crucial areas of the body. In extreme cases the bends can be permanently crippling or fatal.

One solution is to return to the surface more slowly. A diver progresses to the surface in gradual stages, using empirical "decompression tables" to schedule how long to remain at each intermediate depth, based on the maximum depth of the dive and how long the diver remained there. Alternatively, a diver can return to the surface quickly (for example, in an emergency) if he can be returned immediately to high pressure in a decompression chamber (the pressure is then gradually reduced, according to an appropriate schedule).

Finally, especially in deep dives, a diver can breathe a special mixture of oxygen and helium. (He cannot breathe pure oxygen, since that is poisonous at high pressure!) Using helium as the inert component of breathing air has two advantages and one disadvantage. First, helium emerges from the blood, and passes from the capillaries to the lungs, far more readily than nitrogen; using it therefore greatly reduces the decompression time. Second, helium is chemically far more inert than nitrogen, hence does not produce nitrogen narcosis ("rapture of the deep") at high pressure. The disadvantage is that the speed of sound in helium is far greater than in air, causing divers breathing that mixture to sound like Alvin the Chipmunk or Donald Duck, with consequent effects on communication with the surface.

[8] The workers who sank the footings for the Brooklyn Bridge suffered from this disease in great numbers. Even Washington Roebling, the chief engineer, was permanently incapacitated by the bends.
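The oxygen budget of the Dissolved gases discussion and the pressure dependence behind the bends both reduce to short arithmetic with Henry's law. The sketch below reproduces the hæmoglobin estimate from the quoted red-cell data and the text's upper bound of $6\times10^{-4}$ mol/L for dissolved O₂, then uses the proportionality of Henry's law to estimate how much more gas dissolves at equilibrium at depth. The seawater density (1025 kg/m³), g = 9.81 m/s², the surface pressure of 101 325 Pa, and the assumption of full equilibration are ours, not the text's; the function names are hypothetical.

```python
N_A = 6.022e23  # Avogadro's number, 1/mol


def hemoglobin_o2_moles(blood_L=6.0, rbc_fraction=0.4, rbc_volume_m3=1.6e-16,
                        hb_per_cell=2.8e8, o2_per_hb=4):
    """Moles of O2 carried by haemoglobin in fully oxygenated blood.

    Defaults are the figures quoted in the text.
    """
    n_cells = rbc_fraction * (blood_L * 1e-3) / rbc_volume_m3
    return n_cells * hb_per_cell * o2_per_hb / N_A


def dissolved_o2_moles(conc_mol_per_L=6e-4, blood_L=6.0, liquid_fraction=0.6):
    """Dissolved O2, using the text's upper bound of 6e-4 mol per liter of water."""
    return conc_mol_per_L * blood_L * liquid_fraction


def henry_depth_ratio(depth_m, rho=1025.0, g=9.81, p_surface=101_325.0):
    """Equilibrium dissolved gas at depth relative to the surface.

    Henry's law makes the dissolved amount proportional to the partial
    pressure, and the diver's gas is supplied at ambient pressure
    p_surface + rho * g * depth. Assumes full equilibration (our assumption).
    """
    return (p_surface + rho * g * depth_m) / p_surface


bound = hemoglobin_o2_moles()
dissolved = dissolved_o2_moles()
print(f"haemoglobin-bound O2: {bound:.4f} mol, dissolved O2: {dissolved:.4f} mol")
print(f"ratio: {bound / dissolved:.1f}")
for d in (0.0, 10.0, 30.0):
    print(f"depth {d:4.0f} m: dissolved gas x {henry_depth_ratio(d):.2f}")
```

With these inputs the hæmoglobin carries about 0.028 mol of O₂ against roughly 0.002 mol dissolved, a ratio of about 13 (the text rounds the dissolved amount and quotes about 14); at 30 m of seawater, the equilibrium amount of dissolved gas is roughly four times its surface value.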