* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Data hierarchy in Oracle

Survey

Document related concepts

Transcript

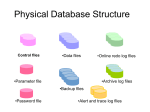

Data hierarchy in Oracle • Data hierarchy in Oracle is specific (and demented!) – see figure 3.1 • Normally, unit of storage is file… – Managed by OS – Can grow / shrink – Physical sections logically connected by OS • In Oracle a DB = one or more tablespaces – – – – One or more objects, indexes, clusters… Fixed size – gets full (Extended by DBA) Many admin functions at that level One file can support only ONE tablespace but reverse not true Terminology • File: physical entity for storing data on disk • Segment: collection of extents for a particular object • Extent: unit of disk storage made up of contiguous blocks • Blocks: sizing of smallest piece of database data decided upon when db created • (Block – OS) Create a tablespace • Create tablespace FREDDY datafile ‘path\freddy.dbf’ size 1000K Default storage (initial 10K next 10K Minextent 1 maxextents 100 Pctincrease 0) Permanent; 10 10 10 10 20 30 10 40 10 50 10 60 10 70 10 80 10 90 10 100 10 10 12 10 20 32 14.4 46.4 17.2 63.6 20.7 84.3 25 109.3 30 139.3 36 175.3 42.3 218.5 About tablespaces • Kind of partitioning – Easier to deal with small ones – Inc. monitor perf. – Oracle very strong at TS level • Optimal seems to be around 2GB • Strategy = isolate aged data into one tablespace => can be made read-only • Should have same size extents – Contiguous blocks Reasoning behind TS creation • • • • • • • Business relevance Size / type of objects Type of activity Volume of activity Backup Transportability Time-based changes in activity P 148 Reasoning behind TS creation • • • • • • • Business relevance e.g. modular design / keep data together Size / type of objects Object of similar sizes – reuse of space Type of activity e.g. index VS table / full scan VS rowID Volume of activity Low I/O VS high I/O objects Backup TS is smallest unit for Bcup and restore Transportability Cloning copy is done by TS as well Time-based changes in activity Eg: DW versus OLTP systems Block size • Smallest unit of oracle DB • Critical perf. Factor as inefficient space use leads to increase I/O activity • Block overhead – – – – – Integrity section (90 bytes) Runtime overhead (136 bytes) Approx. 256 b Transactional header (24 bytes and growing) Directory section (Pointers…) And then………..data } • Big is beautiful? Percentage of overhead decreases when block size increases, but no right answer here 2 K, 4K or 16K? • OLTP prefer smaller – small granularity increase likelihood that data is in buffer (indexed access) • DW or DSS prefer larger – physically close data used together + less movement • In practice constraint on memory will dictate • Buffer size + now split in 3 areas: – KEEP pool – RECYCLE pool – DEFAULT pool Then, there was a table • Tables = one physical space on disk [segment] – same for any object • Each segment = space set out in tablespace [initial extent] • When table grows allocate next extent – set of adjacent blocks • Until tablespace is full • Look again at figure 20.3 What a segment looks like • Each segment contains a header block • Space directory for other blocks • Properly sized tables = few extents (low fragmentation) • Additional extents are never lost until drop or delete commands are used • Can also adjust pctfree value (to fill a Null value column) Sizing extents • Try to match the max capacity of your storage device to limit I/O operations – eg: minimal read size of OS (64K?) • For single objects, It is beneficial to keep extents of same size (deletion) • Try to limit the number of extents below 50 (measure of the growth of the object) • If too many, rebuild object with larger size extents Roll back segments • Special case of segment to hold the before image of DB while transactions take place • Should be stored in separate TS because specific behaviour: – – – – Cyclical rather than random access pattern Set of segments that gets written and written over Then written to disk Best used when “Hot” • RB Segments grow to be big enough to hold complete transacs (cannot span segments) Oracle “space allocation” • DBA allocates space for table after 2 days of hard thinking • Oracle ignores it blatantly as follows: Table name initial size next extent oracle sizing next extent Smalltab Mediumtab 7K 103K 7K 103K 20K 120K Total size after growth smalltab = 40K instead of 14K mediumtab = 206K instead of 240K 20K 120K Creating and using tables • Table creation with CREATE TABLE statement • Syntax: Create table NAME ( field1 datatype special constraint field2 datatype etc… ); • Special constraint: e.g. NOT NULL or PRIMARY KEY or CHECK Constraints • Candidate key: Create table fred ( name varchar2(10) NOT NULL firstname varchar2(10) NOT NULL age number Constraint FRED_UQ unique (name, firstname) ); Primary Key Create table fred ( name varchar2(10) firstname varchar2(10) age number Constraint FRED_PK Primary Key (name, firstname) ); • Same apart: always NOT NULL + can only have one of them in a table • If only one field just list primary key as special constraint Foreign key – aka referential integrity constraint Create table fred ( ID number NOT NULL name varchar2(10) firstnamevarchar2(10) age number Constraint FRED_PK Primary Key (ID), foreign key (age) references OTHERTAB(age) ); • Use ON DELETE CASCADE to maintain constraint • Can use DEFERRAL mode Check constraint Create table fred ( ID number NOT NULL name varchar2(10) firstname varchar2(10) age number CHECK (age between 18 and 65) ); Deleting data • Drop table statement – remove table • Or truncate table statement – empty it • Warning: neither can be rolled back • Otherwise: Alter table NAME Add (…) Alter table NAME modify (…) drop column fieldname • See rules on handout Now what? Clusters • To store different tables in physically close areas on disk • Create a cluster to store both objects • Cluster key is any useful field that links the tables • Can keep name of field or pick new one – e.g. Create cluster fred (name datatype) – then assign tables: create table…cluster fred (name) What this cluster looks like 12 Cork fred adam 13 Midleton Bob Fitz 14 Bally… Brian O’reilly Table 1 Table 2 Cluster Key French Irish English Finally… • Users can have different logical views on a database • These views are called Schemas • They allow for a re-organisation of physical DB objects for each users • Each schema can contain elements from different tablespaces • Has limited size allocated to each user Summary: storage in Oracle • Keywords: tablespace / datafiles / database objects / segments / extents • When you create an object: – Specify size / tablespace info = storage clause – Otherwise: default values • Pctincrease should be used with care (affects reusability of reclaimed space – i.e. extents) • Wise use of tablespace eases admin (like partitioning) – – – – sizing follows sizing of device – e.g. 2GB Many utilities to work with tablespaces Isolate processes – e.g. a report running inside a TS Also for archiving (move to read only – huge perf. gains) I/O Performance issues • Extent sizing a key • Oracle has 2 ways to read data: – By rowID – typically thru an index – Full table scan • In second case, oracle reads several blocks at a time – – – – Set with DB_FILE_MULTIBLOCK_READ_COUNT Limited only by I/O device buffer capacity E.g. block = 4K, I/O buffer = 64K => ????? Extent size should be multiple of 64K example • • • • Table with 10 extents 64 K each I/O device buffer = 64K => 10 reads If extent = 640K => ???? Reads If 8 extents 80K each => ??? Reads – Reads cannot span extents => 16 reads or an increase of 60% in I/O operations • Cl: either use extents much larger than buffer or use multiple of buffer size And for roll back segments • Should be kept small enough • Current wisdom is to ensure TS for rollback is normally 50% free • Not always possible to use only one RB size – For different types of processes – e.g. TPS – Use two different TB to isolate activites – Easier trouble shooting • Discarded rollback segments go into the log file Data utilities • ORACLE is king of data handling • Export: to transfer data between DBs – Extract both table structure and data content into dump file • Import: corresponding facility • SQL*loader automatic import from a variety of file formats into DB files – Needs a control file Control files: using SQLloader • Data tranfers in and out of DB can be automated using the loader – Create a data file with the data(!) – Create a control file to guide the operation • Load creates two files – Log file – “bad transactions” file • Also a discard file if control file has selection criteria in it Querying the Oracle DB • 2 main methods: – Full scan – TABLE ACCESS FULL – Direct access – TABLE ACCESS BY ROWID • When spelling out queries – drop HINT using syntax: /*+ HINT */ • This invokes the OPTIMISER – RBO – CBO Example: • Accessing the worker field in the worker table: • By default full scan (+ when no where statement) • else Select /*+ FULL(worker)*/ From worker Where lodging = ‘Rose Hill’ Select /*+ ROWID(worker)*/ ……… • For low hit ratio • need index or rowid value Effect of Primary key constraints • No index by default • When primary key added = > index created • Name given will be same as name of primary key (e.g. TABLE_PK) • PK indexes are of UNIQUE kind • Other non-unique indexes can be created on other fields • CREATE INDEX name ON table(field) TABLESPACE indexes Using the index • Query with “where” clause on PK • First the PK index is accessed in INDEX UNIQUE SCAN mode • Matching Rowid is retrieved and returned • Second: access table with ROWID as argument in TABLE ACCESS BY ROWID mode • When value sought is in index, no need to read table at all Index Range Scan • If query based on range of values / non-unique index – E.g. field like’M%’ • More than one value returned => IRS • Less efficient • Especially if values are not in index => IRS followed by table rowid access • Another reason why index creation important Multiple index scans • When more than one value in WHERE clause • Two indexes can be used to produce two scans of range values • Then concatenation of scans • Result of the query • Broadly similar to cross-reference of bitmap indexes Database creation • Using a script: Connect internal as sysdba; create database NAME….; • Using the OEM GUI – Parameters stored in a small DB – Access to other utility pgms – eg: RMAN – Can accommodate several DBAs • Key decisions on sizing – Eg: assign a block size – once and for all Opening and closing the DB Startup open NAME; Or Startup mount NAME; Alter database open; Shutdown; Else – use OEM menus When DB is started page846 1. System Global Area (SGA) created: a) Data block buffer cache created • • • • • • Size is key for performance of DB typically 2% of whole DB DB_BLOCK_BUFFERS / DB_BLOCK_SIZE Least Recently Used (LRU) method Too small means fight for space between objects => increase in misses => increase in I/O Too big = crippling for other operations When DB is started (2) b) Shared SQL Pool created: • • • • • Stores DD cache: info about data structure Stores library cache: info about SQL statements run LRU algorithm Too small => no re-use and low performance SHARED_POOL_SIZE in init.ora Parameter files: INIT.ORA • Initialisation file for each DB • MYDB = > initmydb.ora • Contains sizing of SGA and number of background processes, DB name and block size • Read at startup means changes only implemented at next startup The control file • Each DB also has a control file • Contains Dbname, name and location of files and redo logs, timestamp of DB creation • Can be mirrored for added security • Identifies all files that must be opened when Db is loaded • Also used in DB recovery See page 873 – add more stuff on backups The REDO function • Very Hot redo is located in buffer – on-line redo • Semi-Hot redo is kept in redo log files – archived redo • Limit to how much can reasonably be held • So ORACLE organises hot backups of the DB • Redo files up to that point can then be discarded • Needs to run in ARCHIVELOG mode Backups • When DB is offline – Datafiles – Control file – On-line redo – Init.ora • Stores a complete workable DB • Does not work when DB is running • Not to be trusted after abort shutdown On-line backups • When running in ARCHIVELOG mode • 3 distinct redo log files – When one full moves to next one – After 3, overwrite first one • Archiver background process backs up each redo file before overwriting it – to disk • Automatic recovery from on-line backup – When DB started all failed transactions rerun or roll back • Else, limited back up Recovery manager • Takes redo logs on by one and checks transactions • Warning redo files cannot be skipped in the sequence of recovery • Options: – – – – Level 0: all blocks ever used Level 1: all blocks used since last full Bup Level 2: only those changed last Bup Huge impact on Bup performance • See screen shot Other functions • • • • • • • • Archiving Locking Logwriting Data writing Recovering Job queues System monitor Process monitor Summary Oracle Instance PMON SMON DBWR SNPn Shared Pool LCKn RECO Snnn SGA Database Buffer Cache Dnnn Pnnn Redo Log Buffer CKPT LGWR ARCH Server Processes User Processes Oracle Database Parameter File Control Files DataFiles Redo Log Files After Oracle documentation Database creation – non-data objects • DBs contain number of compulsory objects • Create / assign tablespaces • System tablespace – Data dictionary – Names and locations of all tablespaces, indexes and clusters of DB – Files name / location (multiple instances) – Rollback segments • Data tablespaces are separate