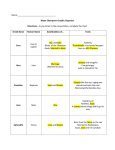

Achieving Dependable Bulk Throughput in a Hybrid Network

Guy Almes, Aaron Brown, Martin Swany
Joint Techs Meeting, Univ Wisconsin -- 17 July 2006

Outline

* Observations:
  * on user needs and technical opportunities
  * on TCP dynamics
* Notion of a Session Layer
  * the obvious application
  * a stronger application
* Phoebus as HOPI experiment
  * deployment
  * early performance results
* Phoebus as an exemplar hybrid network

On User Needs

* In a variety of cyberinfrastructure-intensive applications, dependable high-speed wide-area bulk data flows are of critical value
* Examples:
  * Terabyte data sets in HPC applications
  * Data-intensive TeraGrid applications
  * Access to Sloan Digital Sky Survey and similar very large data collections
* Also, we stress ‘dependable’ rather than ‘guaranteed’ performance
* As science becomes more data-intensive, these needs will be prevalent in many science disciplines

On Technology Drivers

* Network capacity increases, but user throughput increases more slowly (Source: DOE)
* The cause of this gap relates to TCP dynamics

On TCP Dynamics

* Consider the Mathis Equation for Reno:

  $$\text{Speed} \propto \frac{\text{MTU}}{\text{RTT} \cdot \sqrt{\text{loss}}}$$

* Focus on bulk data flows over wide areas
* How can we attack it?
  * Reduce non-congestive packet loss (a lot!)
  * Raise the MTU (but only helps if end-to-end!)
  * Improve TCP algorithms (e.g., FAST, BIC); RTT is still a factor
  * Use end-to-end circuits
  * Decrease RTT??
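To get a feel for the levers listed on the TCP dynamics slide, the Mathis relation can be evaluated numerically. The following is a minimal Python sketch, not part of the original talk. The function name `mathis_throughput_bps`, the constant `c = 1.0` (the Mathis constant is of order one), and the path parameters (70 ms RTT, loss rate of 1e-5) are all assumptions chosen for illustration. Following the slide, it uses MTU where the original formula uses the segment size.

```python
import math


def mathis_throughput_bps(mtu_bytes, rtt_s, loss_rate, c=1.0):
    """Estimate Reno bulk throughput in bits/s as c * MTU / (RTT * sqrt(loss)).

    c is an order-one constant; c=1.0 is an assumption made for illustration.
    """
    if mtu_bytes <= 0 or rtt_s <= 0:
        raise ValueError("mtu_bytes and rtt_s must be positive")
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    return c * (mtu_bytes * 8) / (rtt_s * math.sqrt(loss_rate))


# Hypothetical wide-area path: illustrative numbers, not measurements.
baseline = mathis_throughput_bps(mtu_bytes=1500, rtt_s=0.070, loss_rate=1e-5)
half_rtt = mathis_throughput_bps(mtu_bytes=1500, rtt_s=0.035, loss_rate=1e-5)
jumbo = mathis_throughput_bps(mtu_bytes=9000, rtt_s=0.070, loss_rate=1e-5)
low_loss = mathis_throughput_bps(mtu_bytes=1500, rtt_s=0.070, loss_rate=1e-5 / 4)

for label, bps in [
    ("baseline", baseline),
    ("half the RTT", half_rtt),
    ("9000-byte MTU", jumbo),
    ("one quarter the loss", low_loss),
]:
    print(f"{label:<22} {bps / 1e6:10.1f} Mb/s")
```

Under this model, halving the RTT doubles the estimate, raising the MTU from 1500 to 9000 bytes multiplies it by six, and loss must fall by a factor of four to gain a factor of two, which is why the slide asks for loss to be reduced "a lot".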
Situation for running example

The Transport-Layer Gateway

* A session is the end-to-end chain of segment-specific transport connections
* In our early work, each of these transport connections is a conventional TCP connection
* Each transport-level gateway (depot) receives data from one connection and pipes it to the next connection in the chain

[Figure: The Logistical Session Layer. Protocol stacks showing the Physical, Data Link, Network, and Transport layers at three nodes, with a Session layer in user space above Transport.]

Obvious Application

* Place a depot half-way between hosts A and B, thus cut the RTT roughly in half:

  $$\text{Speed} \propto \frac{\text{MTU}}{\max(\text{RTT}_1, \text{RTT}_2) \cdot \sqrt{\text{loss}}}$$

* Bad news: only a small factor
* Good news: it actually does more:

  $$\text{Speed} \propto \min\left(\frac{\text{MTU}_1}{\text{RTT}_1 \cdot \sqrt{\text{loss}_1}},\ \frac{\text{MTU}_2}{\text{RTT}_2 \cdot \sqrt{\text{loss}_2}}\right)$$

Obvious Application: With one depot to reduce RTT

Stronger Application

* Place one depot at HOPI node near the source, and another near the destination
* Observe:
  * Abilene Measurement Infrastructure:
    * 2nd percentile: 950 Mb/s
    * median: 980 Mb/s
    * MTU = 9000 bytes; loss is very low
  * Local infrastructure:
    * MTU and loss are good, but not always very good
    * but the RTT is very small
* But with HOPI we can do even better

The HOPI Project
* The Hybrid Optical and Packet Infrastructure Project (hopi.internet2.edu)
* Leverage both the 10-Gb/s Abilene backbone and a 10-Gb/s lambda of NLR
* Explore combining packet infrastructure with dynamically-provisioned lambdas

Stronger application: depots near each host

* Backbone:
  * large RTT
  * 9000-byte MTU
  * very low non-congestive loss
* GigaPoP / Campus:
  * very small RTT
  * some 1500-byte MTU
  * some non-congestive loss

Two Conjectures

* Small RTT does effectively mask moderate imperfections in MTU and loss
* End-to-end session throughput is (only a little less than) the minimum of component connection throughputs:

  $$\text{Speed} \propto \min\left(\frac{\text{MTU}_1}{\text{RTT}_1 \cdot \sqrt{\text{loss}_1}},\ \frac{\text{MTU}_2}{\text{RTT}_2 \cdot \sqrt{\text{loss}_2}},\ \frac{\text{MTU}_3}{\text{RTT}_3 \cdot \sqrt{\text{loss}_3}}\right)$$

Phoebus

* Phoebus aims to narrow the performance gap by bringing revolutionary networks like HOPI to users
* Phoebus is another name for the mythical Apollo in his role as the “sun god”
* Phoebus stresses the ‘session’ concept to enable multiple network/transport infrastructures to be catenated
* Phoebus builds on an earlier project called the Logistical Session Layer (LSL)

Experimental Phoebus Deployment

* Place Phoebus depots at each HOPI node
* Ingress/egress spans via ordinary Internet2/Abilene IP infrastructure
* Backbone span can use either/both of:
  * 10-Gb/s path through Abilene
  * dynamic 10-Gb/s lambda
* Initial test user sites:
  * SDSC host with gigE connectivity
  * Columbia Univ host with gigE connectivity

Initial Performance Results

* In very early tests:
  * SDSC to losa: about 900 Mb/s
  * losa to nycm: about 5.1 Gb/s
  * nycm to Columbia: about 900 Mb/s
  * direct: 380 ± 88 Mb/s
  * Phoebus: 762 ± 36 Mb/s
* In later tests with a variety of file sizes, SDSC to losa performance became worse

Initial Performance Results

[Figure: Bandwidth Comparison. Throughput in megabits/second (axis 0 to 600) versus transfer size in megabytes (32 to 4096), for Direct and Phoebus transfers.]

Initial Test Results

* What about the three components?
* SDSC to losa depot: 429-491 Mb/s
* losa depot to nycm depot: 5.13-5.15 Gb/s
* nycm depot to Columbia: 908-930 Mb/s
* Whatever caused that weakness in the SDSC-to-losa path did slow things down

Plans for Summer 2006

* ‘Experimental production’ Phoebus, reaching out to interested users
* Improve access control and instrumentation:
  * Maintain a log of achieved performance
* Test use of dynamic HOPI lambdas
* Evaluate Phoebus as a service within newnet
* Test use of Phoebus internationally

Comments on Backbone Span

* Backbone could ensure flow performance between pairs of backbone depots
* Backbone could provide a Phoebus Service in addition to its “IP” service
* Relatively easy to use dynamic lambdas within the backbone portion of the Phoebus infrastructure
* Alternatively, the backbone portion could use IP, but a non-TCP transport protocol!

Comments on the Local (Ingress and Egress) Spans

* Near ends, we have good, but not perfect, local/metro-area infrastructure
* Relatively hard to deploy dynamic lambdas
* Small RTTs allow high-speed TCP flows to be extended to many local sites in a scalable way
* Thus, Phoebus leverages both:
  * innovative wide-area infrastructure and
  * conventional local-area infrastructure
* Phoebus can thus extend the value of multi-lambda wide-area infrastructure to many science users on high-quality conventional campus networks

Ongoing Work

* Phoebus deployment on HOPI
  * We’re seeking project participants!
  * Please email for information
* ESP-NP
  * ESP = Extensible Session Protocol
  * Implementation on an IXP Network Processor from Intel
  * The IXP2800 can forward at 10 Gb/s

Acknowledgements

* UD Students: Aaron Brown, Matt Rein
* Internet2: Eric Boyd, Rick Summerhill, Matt Zekauskas, ...
* HOPI Testbed Support Center (TSC) Team: MCNC, Indiana Univ NOC, Univ Maryland
* San Diego Supercomputer Center: Patricia Kovatch, Tony Vu
* Columbia University: Alan Crosswell, Megan Pengelly, the Unix group
* Dept of Energy Office of Science: MICS Early Career Principal Investigator program

End

* Thank you for your attention
* Questions?