* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download NTH ROOTS OF MATRICES - University of Central Missouri

Capelli's identity wikipedia , lookup

Matrix completion wikipedia , lookup

System of linear equations wikipedia , lookup

Linear least squares (mathematics) wikipedia , lookup

Rotation matrix wikipedia , lookup

Principal component analysis wikipedia , lookup

Determinant wikipedia , lookup

Four-vector wikipedia , lookup

Eigenvalues and eigenvectors wikipedia , lookup

Matrix (mathematics) wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Jordan normal form wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Gaussian elimination wikipedia , lookup

Matrix calculus wikipedia , lookup

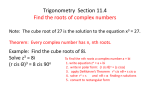

NTH ROOTS OF MATRICES

by

Eric Jarman

An Abstract

presented in partial fulfillment

of the requirements for the degree of

Master of Science

in the Department of Mathematics and Computer Science

University of Central Missouri

June, 2012

ABSTRACT

by

Eric Jarman

This paper investigates the feasibility of finding any $n$th root, and by extension any rational power, for an arbitrary $m \times m$ square matrix. Root finding methods are investigated for real $m \times m$ matrices and complex $m \times m$ matrices.

NTH ROOTS OF MATRICES

by

Eric Jarman

A Thesis

presented in partial fulfillment

of the requirements for the degree of

Master of Science

in the Department of Mathematics and Computer Science

University of Central Missouri

June, 2012


Contents

1 Introduction

2 Iterative Methods
    2.1 Babylonian Method
    2.2 Binomial Theorem
    2.3 Newton's Method
    2.4 Denman-Beavers Iteration
    2.5 Weaknesses

3 Complex Number Equivalents
    3.1 Complex Numbers
    3.2 Quaternions
    3.3 Cayley-Dickson construction

4 Matrix Forms and Properties
    4.1 Powers of a Matrix and Diagonal Matrices
    4.2 Matrix Diagonalization
    4.3 Examples: Diagonalizing Matrix Forms of Complex Numbers
        4.3.1 Example 1: 2×2 Matrix of a Complex Number
        4.3.2 Example 2: 2×2 Matrix of a Quaternion
        4.3.3 Example 3: 4×4 Matrix of a Quaternion
    4.4 Roots of Complex Numbers vs. Roots of Diagonal Matrices
    4.5 Jordan Canonical Form
    4.6 Nilpotent Matrices
    4.7 Existence of Roots

5 Hypercomplex Numbers
    5.1 Multicomplex Numbers
        5.1.1 Bicomplex Numbers
        5.1.2 Tessarines
    5.2 Split-Complex Numbers
    5.3 Dual Numbers
    5.4 Split-Quaternions
        5.4.1 Roots of Split-Quaternions
    5.5 Biquaternions
        5.5.1 Roots of Biquaternions

6 Conclusion

Bibliography

Chapter 1

Introduction

The operations for finding any integer power of a matrix are well-defined. The same, however, cannot be said for non-integer powers of a matrix. If we can find a method for calculating some root of a matrix $A$, that is to say, find some matrix $A'$ where $(A')^n = A$ for some integer $n > 1$, then we can define this matrix $A'$ to be an $n$th root of $A$.

It is important to note that for an arbitrary matrix $A$, there may be multiple $n$th roots of $A$. It is easy to construct a simple example which shows this:

\[
\begin{pmatrix} 4 & 0 \\ 0 & 4 \end{pmatrix}
= \begin{pmatrix} -2 & 0 \\ 0 & -2 \end{pmatrix}^{2}
= \begin{pmatrix} 2 & 0 \\ 0 & 2 \end{pmatrix}^{2}.
\]

When there are multiple solutions, we can choose one of them as the principal root. If we do so, we can denote the principal root by $A^{1/n}$.

We should note that it is possible to construct examples of complex matrix roots as well as real matrix roots:

\[
\begin{pmatrix} i & 0 \\ 0 & i \end{pmatrix}^{2}
= \begin{pmatrix} -i & 0 \\ 0 & -i \end{pmatrix}^{2}
= \begin{pmatrix} -1 & 0 \\ 0 & -1 \end{pmatrix}.
\]

So, we would ideally like to have root finding methods which will work for real matrices and complex matrices.

This definition can also be used to find any rational power $\frac{p}{n}$ of a matrix by simply taking the principal $n$th root to the $p$th power:

\[
\left(A^{\frac{1}{n}}\right)^{p} = A^{\frac{p}{n}}.
\]

We note that this construction only has meaning when $A$ and $A'$ are square $m \times m$ matrices. There is no need to examine $m \times n$ matrices where $m \neq n$, as we might of course construct matrices whose product is a given $m \times n$ matrix, but such matrices would not be equal in values or dimensions, and so cannot be called roots.

Chapter 2

Iterative Methods

We can begin by searching for methods of directly calculating a root. The simplest place to

start is to look at methods of calculating square roots of real numbers, and expand them to

be used on matrices.

2.1

Babylonian Method

The Babylonian method, also known as Heron's method, is based on a historical method of calculating square roots. The earliest reference to this method is listed in a numerical approximation for the square root of 2 on the Babylonian clay tablet YBC 7289 [20]. The first explicit definition of the method was given by Hero of Alexandria in the first century [1].

This method for finding the square root of $s \in \mathbb{R}$ starts with some approximation for the root, and can be fully described as follows:

\[
x_0 \approx \sqrt{s}, \qquad
x_{n+1} = \frac{1}{2}\left(x_n + \frac{s}{x_n}\right) \quad \text{for } n \geq 0.
\]

This sequence is guaranteed to converge quadratically to the principal root if both $s$ and $x_0$ are positive.
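The scalar recurrence above can be sketched in a few lines of Python. This is only an illustration: the function name `babylonian_sqrt`, the default starting value, and the relative stopping tolerance are assumptions made for the example and are not part of the method as stated.

```python
def babylonian_sqrt(s, x0=1.0, tol=1e-12, max_iter=100):
    """Approximate sqrt(s) with x_{n+1} = (x_n + s / x_n) / 2."""
    # The convergence guarantee quoted in the text assumes s > 0 and x0 > 0.
    if s <= 0 or x0 <= 0:
        raise ValueError("this sketch assumes s > 0 and x0 > 0")
    x = x0
    for _ in range(max_iter):
        x_next = 0.5 * (x + s / x)
        # Stop once two successive iterates agree to the relative tolerance.
        if abs(x_next - x) <= tol * x_next:
            return x_next
        x = x_next
    return x


root = babylonian_sqrt(2.0)
```

Because the convergence is quadratic, the number of correct digits roughly doubles per step, so only a handful of iterations are needed from a reasonable starting value.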

This method can be extended to matrices by starting with the identity matrix $I$, and proceeding as follows:

\[
X_0 = I, \qquad
X_{k+1} = \frac{1}{2}\left(X_k + A X_k^{-1}\right).
\]

This method is numerically unstable, i.e., small perturbations of the error matrix in the $k$th iteration may induce perturbations with increasing norm in successive iterations, causing the sequence to diverge from the root [24]. When it does converge, this sequence will converge quadratically to the root. The computational expense of this method is low, requiring the calculation of a single matrix inverse per iteration.
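A minimal sketch of the matrix iteration, restricted to $2 \times 2$ matrices so that the inverse can be written in closed form. The helper names (`mat_mul`, `inv2`, `babylonian_matrix_sqrt`), the fixed iteration count, and the test matrix are assumptions chosen for illustration; the test matrix is well conditioned, so the instability described above is not expected to show up here.

```python
def mat_mul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inv2(m):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (p, q), (r, s) = m
    det = p * s - q * r
    if det == 0:
        raise ZeroDivisionError("matrix is singular")
    return [[s / det, -q / det], [-r / det, p / det]]


def babylonian_matrix_sqrt(a, iterations=20):
    """Iterate X_{k+1} = (X_k + A X_k^{-1}) / 2 starting from X_0 = I."""
    x = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(iterations):
        ax_inv = mat_mul(a, inv2(x))
        x = [[0.5 * (x[i][j] + ax_inv[i][j]) for j in range(2)]
             for i in range(2)]
    return x


A = [[2.0, 1.0], [1.0, 2.0]]
X = babylonian_matrix_sqrt(A)
XX = mat_mul(X, X)
# Largest entrywise deviation of X*X from A.
residual = max(abs(XX[i][j] - A[i][j]) for i in range(2) for j in range(2))
```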

Note that the norm in the preceding paragraph is the norm of a square matrix. The norm of $A$, denoted $N(A)$ or $\|A\|$, is a nonnegative number associated with $A$ having the properties [50]

1. $\|A\| > 0$ when $A \neq 0$, and $\|A\| = 0 \Leftrightarrow A = 0$,

2. $\|kA\| = |k|\,\|A\|$ for any scalar $k$,

3. $\|A + B\| \leq \|A\| + \|B\|$,

4. $\|AB\| \leq \|A\|\,\|B\|$.

There are multiple methods which may be used to compute the norm of a matrix $B$. Two of the simplest to compute norms [46] are

\[
N_I(B) = \sqrt{\sum_i \sum_j b_{ij}^2}
\]

and

\[
N_{II}(B) = \max_i \sum_j |b_{ij}|.
\]

2.2

Binomial Theorem

The binomial theorem can be used to find the coefficients of any power of the quantity $(x + y)$. For $x, y \in \mathbb{R}$, $n \in \mathbb{Z}$, $n \geq 0$, we state:

\[
(x + y)^n = \sum_{k=0}^{n} \binom{n}{k} x^{n-k} y^{k} = \sum_{k=0}^{n} \binom{n}{k} x^{k} y^{n-k}.
\]

The well-known generalization of the binomial theorem by Sir Isaac Newton allows for any real exponent, rather than being limited to positive integers, by replacing the finite sum with an infinite series.

\[
(x + y)^r = \sum_{k=0}^{\infty} \binom{r}{k} x^{r-k} y^{k}.
\]

In this case, it is necessary to define what is meant by a binomial coefficient with any real upper index. If $n$ is an integer we have

\[
\binom{n}{k} = \frac{n!}{k!\,(n-k)!}.
\]

For any real number $r$, we factor out $(n-k)!$ from the top and bottom of the formula for the usual binomial coefficient, and substitute $r$ for $n$ to yield [21]

\[
\binom{r}{k} = \frac{r(r-1)\cdots(r-k+1)}{k!} = \frac{(r)_k}{k!},
\]

where $(r)_k$ denotes the falling factorial, defined as $(r)_k = r(r-1)\cdots(r-k+1)$.

To find an arbitrary root of any real $a$, we substitute $a = 1 + x$ into the generalized binomial equation above to yield a formula involving only a single variable.

\[
(1 + x)^r = \sum_{k=0}^{\infty} \binom{r}{k} x^{k}
= 1 + rx + \frac{r(r-1)}{2!}x^2 + \frac{r(r-1)(r-2)}{3!}x^3 + \cdots.
\]

Note that we can use the first few terms of this series as an approximation for a root of $a$.

This method can be extended for finding a root of a real matrix $A$ [46] by taking $A, B \in \mathbb{R}^{m \times m}$ where $A = I + B$ and rewriting the power series as

\[
(I + B)^r = \sum_{k=0}^{\infty} \binom{r}{k} B^{k}
= I + rB + \frac{r(r-1)}{2!}B^2 + \frac{r(r-1)(r-2)}{3!}B^3 + \cdots.
\]

This series will converge if it can be shown that $B^k \to [0]$ as $k$ increases. It is possible to show that $B^k \to [0]$ as $k$ increases by either showing that the dominant characteristic root of $B$ is less than unity in absolute value, or by showing that the norm of the matrix $N(B) < 1$.

If the norm of the matrix $B$ is close to $1$, the series may converge slowly, in which case the norm of the matrix in the series can be reduced by introducing a scalar coefficient. For $A, C \in \mathbb{R}^{m \times m}$, and a scalar $k \in \mathbb{R}$, we take $A = k(I + C)$, where $C = \frac{1}{k}A - I$, to make the series become

\[
A^r = k^r \left[ I + rC + \frac{r(r-1)}{2!}C^2 + \frac{r(r-1)(r-2)}{3!}C^3 + \cdots \right],
\]

where $k$ is any scalar value selected that minimizes the norm of the matrix $C$. The norm $N_I(C)$ is minimized [46] by using

\[
k = \frac{\sum_i \sum_j a_{ij}^2}{\sum_i a_{ii}}.
\]

Other norms may be more difficult to minimize.

The calculation of this series is even cheaper than the Babylonian square root method shown earlier, since only one matrix multiplication is required for each term, rather than one matrix inversion. If the series can be shown to converge, or modified appropriately to ensure faster convergence, only the first few terms of the series are necessary to find an approximation of a root. Also, since we may choose any real value for $r$, we are not limited to finding only square roots.
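The scaled series can be sketched as follows, together with the two matrix norms defined in section 2.1. All function names, the number of terms, and the example matrix are assumptions made for illustration. The sketch requires a positive scalar $k$ so that $k^r$ is real, and it refuses to sum the series unless $N_I(C) < 1$.

```python
import math


def mat_mul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def frobenius_norm(b):
    """N_I(B): square root of the sum of squared entries."""
    return math.sqrt(sum(v * v for row in b for v in row))


def max_row_sum_norm(b):
    """N_II(B): largest sum of absolute values along a row."""
    return max(sum(abs(v) for v in row) for row in b)


def binomial_series_power(a, r, terms=60):
    """Approximate A^r as k^r * sum_n binom(r, n) C^n with C = A/k - I."""
    m = len(a)
    trace = sum(a[i][i] for i in range(m))
    if trace <= 0:
        raise ValueError("this sketch needs a positive trace so that k > 0")
    # Scalar that minimizes N_I(C), as given in the text.
    k = sum(v * v for row in a for v in row) / trace
    c = [[a[i][j] / k - (1.0 if i == j else 0.0) for j in range(m)]
         for i in range(m)]
    if frobenius_norm(c) >= 1.0:
        raise ValueError("N_I(C) >= 1: convergence is not guaranteed")
    total = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    power = [row[:] for row in total]   # holds C^n, starting at C^0 = I
    coeff = 1.0                         # holds binom(r, n), starting at n = 0
    for n in range(1, terms):
        coeff *= (r - (n - 1)) / n      # binom(r, n) from binom(r, n - 1)
        power = mat_mul(power, c)
        total = [[total[i][j] + coeff * power[i][j] for j in range(m)]
                 for i in range(m)]
    scale = k ** r
    return [[scale * v for v in row] for row in total]


A = [[2.0, 1.0], [1.0, 2.0]]
norms = (frobenius_norm(A), max_row_sum_norm(A))
X = binomial_series_power(A, 0.5)
XX = mat_mul(X, X)
residual = max(abs(XX[i][j] - A[i][j]) for i in range(2) for j in range(2))
```

Each additional term costs one matrix multiplication, which matches the cost argument made above; passing a different `r` (for example `1.0 / 3.0`) approximates other roots with the same code.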

2.3

Newton's Method

Newton's method, or the Newton-Raphson method, is a method for finding roots (or zeroes) of real valued functions. The method starts with an initial guess $x_0$, and proceeds to iterate using the function $f$ and its derivative $f'$:

\[
x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}.
\]

This method can be used to find a square root of a number $a$ by simply defining the function $f$ and its derivative $f'$ as:

\[
f(x) = x^2 - a, \qquad f'(x) = 2x.
\]

We should note that given this definition for the real function $f(x)$, we see that the Babylonian method is just a special case of Newton's Method for the square root.

\[
\begin{aligned}
x_{n+1} &= x_n - \frac{f(x_n)}{f'(x_n)} \\
&= x_n - \frac{x_n^2 - a}{2x_n} \\
&= \frac{2x_n^2 - (x_n^2 - a)}{2x_n} \\
&= \frac{1}{2}\,\frac{x_n^2 + a}{x_n} \\
&= \frac{1}{2}\left(x_n + \frac{a}{x_n}\right).
\end{aligned}
\]

The proof of quadratic convergence [51] shows us the properties of successive error terms $\epsilon_n$ of the sequence $\{x_n\}$,

\[
\epsilon_{n+1} \approx \frac{f''(\alpha)}{2f'(\alpha)}\,\epsilon_n^2,
\]

from which it is possible to determine conditions on $x_0$ that guarantee convergence of the sequence:

Let $I$ be the interval $[\alpha - r, \alpha + r]$ for some $r \geq |\alpha - x_0|$, where

1. $f'(x) \neq 0$, $\forall x \in I$,

2. $f''(x)$ is bounded, $\forall x \in I$,

3. $x_0$ is sufficiently close to $\alpha$.

Then $\{x_n\}$ will converge to a square root $\alpha$ of $a$.

Since the function used for finding a square root of a number $a$ is $f(x) = x^2 - a$, we can see that the first derivative $f'(x) = 2x$ will only equal $0$ at $x = 0$, and the second derivative $f''(x) = 2$ is constant, and thus bounded. For the initial guess, sufficiently close means that $x_0$ is in a neighborhood of a root within which the sequence can be guaranteed to converge. One set of conditions for finding such a neighborhood is found in Kantorovich's Theorem [25]:

Theorem 2.3.1 (Kantorovich). Let $a_0$ be a point in $\mathbb{R}^n$, $U$ an open neighborhood of $a_0$ in $\mathbb{R}^n$, and $\vec{f} : U \to \mathbb{R}^n$ a differentiable mapping, with its derivative $[D\vec{f}(a_0)]$ invertible. Define

\[
\vec{h}_0 = -\left[D\vec{f}(a_0)\right]^{-1}\vec{f}(a_0), \qquad
a_1 = a_0 + \vec{h}_0, \qquad
U_0 = B_{|\vec{h}_0|}(a_1).
\]

If $\overline{U_0} \subset U$ and the derivative $[D\vec{f}(x)]$ satisfies the Lipschitz condition

\[
\left| \left[D\vec{f}(u_1)\right] - \left[D\vec{f}(u_2)\right] \right| \leq M\,|u_1 - u_2|
\quad \text{for all points } u_1, u_2 \in \overline{U_0},
\]

and if the inequality

\[
\left|\vec{f}(a_0)\right| \, \left| \left[D\vec{f}(a_0)\right]^{-1} \right|^{2} M \leq \frac{1}{2}
\]

is satisfied, the equation $\vec{f}(x) = \vec{0}$ has a unique solution in the closed ball $\overline{U_0}$, and Newton's method with initial guess $a_0$ converges to it.

Note that $M$ above is the Lipschitz ratio, measuring the change in the derivative.

By modifying our initial function to $f(x) = x^n - a$, we can use Newton's method to find higher $n$th roots as well. In this general case, the convergence criteria on the first and second derivatives are met by any interval which does not contain $x = 0$, and we again use Kantorovich's Theorem to find an initial guess $x_0$. The convergence criteria can also be adjusted to apply to complex valued functions [26], meaning that this method is not restricted to finding only real roots. Since we see some advantages to this method, we should continue investigating this method as it applies to matrices.
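A minimal sketch of Newton's method for $f(x) = x^n - a$, written so that the same code accepts real or complex values. The function name `newton_nth_root`, the stopping rule, and the starting guesses are assumptions for illustration; the choice of starting guess determines which root, if any, the iteration reaches.

```python
def newton_nth_root(a, n, x0, tol=1e-12, max_iter=200):
    """Iterate x <- x - (x^n - a) / (n x^(n-1)) from the guess x0."""
    x = x0
    for _ in range(max_iter):
        if x == 0:
            # f'(x) = n x^(n-1) vanishes at 0, so the step is undefined.
            raise ZeroDivisionError("derivative is zero at x = 0")
        x_next = x - (x ** n - a) / (n * x ** (n - 1))
        if abs(x_next - x) <= tol * abs(x_next):
            return x_next
        x = x_next
    raise ArithmeticError("no convergence within max_iter iterations")


real_root = newton_nth_root(27.0, 3, x0=5.0)          # a real cube root of 27
complex_root = newton_nth_root(-1 + 0j, 2, x0=1 + 1j)  # a square root of -1
```

Starting the second call from a real guess would never leave the real axis, which is why a complex starting value is used there.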

Newton's method can be extended to matrices by starting with some initial guess $X_0$ for a root, and expanding the iteration using Fréchet derivatives.

Fréchet derivatives are used to find the derivative of a function at a point within the domain of the function. A simple definition is: A function $f$ is Fréchet differentiable at $a$ if the limit

\[
\lim_{x \to a} \frac{f(x) - f(a)}{x - a}
\]

exists [47]. When applied to a matrix function, for example $F : \mathbb{C}^{n \times n} \to \mathbb{C}^{n \times n}$, it is necessary to remember that matrix multiplication is not commutative for all matrices. Thus, when working with matrix derivatives, for any $A, B \in \mathbb{C}^{n \times n}$ we may have something like [19]

\[
d(AB) = d(A)B + A\,d(B).
\]

Now to apply Newton's Method to find a square root of a matrix, we start with $X, A \in \mathbb{C}^{n \times n}$ and a matrix function $F : \mathbb{C}^{n \times n} \to \mathbb{C}^{n \times n}$ defined as

\[
F(X) = X^2 - A.
\]

To define the iteration, we use a general function $G : \mathbb{C}^{n \times n} \to \mathbb{C}^{n \times n}$, $X_k \in \mathbb{C}^{n \times n}$, and taking $X_0$ as an initial approximation, we have:

\[
X_{k+1} = X_k - \left[G'(X_k)\right]^{-1} G(X_k), \qquad k = 0, 1, 2, \ldots,
\]

where $G'$ is the Fréchet derivative of $G$. Next identifying

\[
F(X + H) = X^2 - A + (XH + HX) + H^2
\]

with the Taylor series for $F$,

\[
F(U) = F(X) + F'(X)(U - X) + \frac{F''(X)}{2}(U - X)^2 + \cdots,
\]

\[
F(X + H) = F(X) + F'(X)H + \frac{F''(X)}{2}H^2 + \cdots,
\]

so

\[
F(X) = X^2 - A, \qquad
F'(X)H = XH + HX, \qquad
\frac{F''(X)}{2}H^2 = H^2 \ \text{ so } \ F'' = 2,
\]

and so $F'(X)$ is a linear operator, $F'(X) : \mathbb{C}^{n \times n} \to \mathbb{C}^{n \times n}$.

Thus the iteration can be written as [24]:

\[
X_0 \text{ given}, \qquad
X_{k+1} = X_k + H_k, \qquad k = 0, 1, 2, \ldots,
\]

where $H_k$ is the solution of the equation

\[
X_k H_k + H_k X_k = A - X_k^2,
\]

which can be calculated using the Schur decomposition of $X_k$ [4].

If $F'(X)$ is nonsingular and $\|X - X_0\|$ is sufficiently small, the Newton iteration will converge quadratically to a square root $X$ of the original matrix $A$ [24]. It is important to note that because this iteration as defined above requires calculating a Schur decomposition at each step, it is computationally expensive, especially as compared to other iterations.

2.4

Denman-Beavers Iteration

The Denman-Beavers iteration [16] starts with both the identity matrix $I$ and the matrix $A$, and iterates in two directions towards a root:

\[
\begin{aligned}
Y_0 &= A, & Z_0 &= I, \\
Y_{k+1} &= \frac{1}{2}\left(Y_k + Z_k^{-1}\right), &
Z_{k+1} &= \frac{1}{2}\left(Z_k + Y_k^{-1}\right).
\end{aligned}
\]

This iteration converges quadratically, with $Y_k$ converging to a square root $X$ of matrix $A$, and $Z_k$ converging to its inverse $X^{-1}$. The iteration is not guaranteed to converge, even if a root exists. The computation is relatively expensive, requiring calculating two matrix inverses at each iteration. It should be noted that this iteration can be shown to be a simplification of the general form of the Newton's Method iteration [24].
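The coupled iteration can be sketched for $2 \times 2$ matrices as follows. The helper names, the fixed iteration count, and the example matrix are assumptions for illustration; the example was chosen because its square root $\begin{pmatrix} 5 & 2 \\ 4 & 7 \end{pmatrix}$ can be checked by hand.

```python
def mat_mul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inv2(m):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (p, q), (r, s) = m
    det = p * s - q * r
    if det == 0:
        raise ZeroDivisionError("matrix is singular")
    return [[s / det, -q / det], [-r / det, p / det]]


def average(a, b):
    """Entrywise mean of two 2x2 matrices."""
    return [[0.5 * (a[i][j] + b[i][j]) for j in range(2)] for i in range(2)]


def denman_beavers_sqrt(a, iterations=20):
    """Return (Y, Z) with Y approximating sqrt(A) and Z its inverse."""
    y = [row[:] for row in a]
    z = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(iterations):
        # Both inverses use the previous iterates, so compute them first.
        y_inv, z_inv = inv2(y), inv2(z)
        y, z = average(y, z_inv), average(z, y_inv)
    return y, z


A = [[33.0, 24.0], [48.0, 57.0]]
Y, Z = denman_beavers_sqrt(A)
YZ = mat_mul(Y, Z)   # should be close to the identity matrix
```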

2.5

Weaknesses

There are various methods which can be used to improve the likelihood of producing a root, but each of these methods and others like them share a few weaknesses. First, all being numerically based, they all calculate an approximated value. Second, they can all fail to converge to a solution, even if a root of a given matrix is known to exist. Lastly, they each only find a single result, while there may be multiple roots for a given matrix. Given these limitations, it would be desirable to find some method that would always produce a root, or further, multiple roots, if such roots exist.

Chapter 3

Complex Number Equivalents

In order to find a method assured of finding a root of any matrix, it makes sense to first look at number systems where roots will be guaranteed to exist and find matrix equivalents of these.

3.1

Complex Numbers

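The first number system to investigate is the complex numbers. To find any root in the complex number system, it is necessary only to use a generalization of de Moivre's formula. Given a complex number $z$ in polar form

\[
z = r(\cos\theta + i\sin\theta)
\]

or in exponential form

\[
z = re^{i\theta},
\]

an $n$th root $\alpha$ can be written

\[
\alpha = r^{\frac{1}{n}}\left(\cos\frac{\theta + 2k\pi}{n} + i\sin\frac{\theta + 2k\pi}{n}\right),
\]

or

\[
\alpha = r^{\frac{1}{n}}\, e^{i\frac{\theta + 2k\pi}{n}},
\]

where $k$ is an integer with values from $0$ to $n - 1$, providing the unique roots of $z$.

| Quantity  | Cartesian form                      | Exponential form |
|-----------|-------------------------------------|------------------|
| $z$       | $-1 + 0i$                           | $e^{i\pi}$       |
| $z^{1/2}$ | $0 + i$                             | $e^{i\pi/2}$     |
|           | $0 - i$                             | $e^{i3\pi/2}$    |
| $z^{1/3}$ | $\frac{1}{2} + \frac{i\sqrt{3}}{2}$ | $e^{i\pi/3}$     |
|           | $-1 + 0i$                           | $e^{i\pi}$       |
|           | $\frac{1}{2} - \frac{i\sqrt{3}}{2}$ | $e^{i5\pi/3}$    |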
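The root formula can be sketched with Python's standard `cmath` module. The function name `nth_roots` is an assumption for illustration; `cmath.polar` returns the modulus and the principal argument in $(-\pi, \pi]$, so $k = 0$ gives the principal root.

```python
import cmath
import math


def nth_roots(z, n):
    """All n-th roots of z from r^(1/n) * exp(i (theta + 2 k pi) / n)."""
    r, theta = cmath.polar(z)
    return [cmath.rect(r ** (1.0 / n), (theta + 2.0 * math.pi * k) / n)
            for k in range(n)]


square_roots = nth_roots(complex(-1, 0), 2)   # approximately i and -i
cube_roots = nth_roots(complex(-1, 0), 3)     # the three cube roots of -1
```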

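We can also of course find the roots of the complex conjugate $\bar{z} = r(\cos\theta - i\sin\theta)$ or $\bar{z} = re^{-i\theta}$. Quickly looking at a few sets of examples of the roots of a complex number $z$, and the roots of its conjugate $\bar{z}^{1/n}$, we notice that there do not appear to be any overlapping values.

| Quantity  | Cartesian form                       | Exponential form | Quantity        | Cartesian form                       | Exponential form |
|-----------|--------------------------------------|------------------|-----------------|--------------------------------------|------------------|
| $z$       | $0 + i$                              | $e^{i\pi/2}$     | $\bar{z}$       | $0 - i$                              | $e^{-i\pi/2}$    |
| $z^{1/2}$ | $\frac{1+i}{\sqrt{2}}$               | $e^{i\pi/4}$     | $\bar{z}^{1/2}$ | $\frac{1-i}{\sqrt{2}}$               | $e^{-i\pi/4}$    |
|           | $-\frac{1+i}{\sqrt{2}}$              | $e^{-i3\pi/4}$   |                 | $-\frac{1-i}{\sqrt{2}}$              | $e^{i3\pi/4}$    |
| $z^{1/3}$ | $\frac{i}{2} + \frac{\sqrt{3}}{2}$   | $e^{i\pi/6}$     | $\bar{z}^{1/3}$ | $-\frac{i}{2} + \frac{\sqrt{3}}{2}$  | $e^{-i\pi/6}$    |
|           | $\frac{i}{2} - \frac{\sqrt{3}}{2}$   | $e^{i5\pi/6}$    |                 | $-\frac{i}{2} - \frac{\sqrt{3}}{2}$  | $e^{-i5\pi/6}$   |
|           | $0 - i$                              | $e^{-i\pi/2}$    |                 | $0 + i$                              | $e^{i\pi/2}$     |

| Quantity  | Cartesian form                                                        | Exponential form | Quantity        | Cartesian form                                                        | Exponential form |
|-----------|-----------------------------------------------------------------------|------------------|-----------------|-----------------------------------------------------------------------|------------------|
| $z$       | $\frac{1+i}{\sqrt{2}}$                                                | $e^{i\pi/4}$     | $\bar{z}$       | $\frac{1-i}{\sqrt{2}}$                                                | $e^{-i\pi/4}$    |
| $z^{1/2}$ | $\cos\frac{\pi}{8} + i\sin\frac{\pi}{8}$                              | $e^{i\pi/8}$     | $\bar{z}^{1/2}$ | $\cos\frac{\pi}{8} - i\sin\frac{\pi}{8}$                              | $e^{-i\pi/8}$    |
|           | $-\cos\frac{\pi}{8} - i\sin\frac{\pi}{8}$                             | $e^{i9\pi/8}$    |                 | $-\cos\frac{\pi}{8} + i\sin\frac{\pi}{8}$                             | $e^{-i9\pi/8}$   |
| $z^{1/3}$ | $\frac{\sqrt{3}+1}{2\sqrt{2}} + i\,\frac{\sqrt{3}-1}{2\sqrt{2}}$      | $e^{i\pi/12}$    | $\bar{z}^{1/3}$ | $\frac{\sqrt{3}+1}{2\sqrt{2}} - i\,\frac{\sqrt{3}-1}{2\sqrt{2}}$      | $e^{-i\pi/12}$   |
|           | $-\frac{1-i}{\sqrt{2}}$                                               | $e^{i9\pi/12}$   |                 | $-\frac{1+i}{\sqrt{2}}$                                               | $e^{-i9\pi/12}$  |
|           | $-\frac{\sqrt{3}-1}{2\sqrt{2}} - i\,\frac{\sqrt{3}+1}{2\sqrt{2}}$     | $e^{i17\pi/12}$  |                 | $-\frac{\sqrt{3}-1}{2\sqrt{2}} + i\,\frac{\sqrt{3}+1}{2\sqrt{2}}$     | $e^{-i17\pi/12}$ |
| $z^{1/4}$ | $\cos\frac{\pi}{16} + i\sin\frac{\pi}{16}$                            | $e^{i\pi/16}$    | $\bar{z}^{1/4}$ | $\cos\frac{\pi}{16} - i\sin\frac{\pi}{16}$                            | $e^{-i\pi/16}$   |
|           | $i\cos\frac{\pi}{16} - \sin\frac{\pi}{16}$                            | $e^{i9\pi/16}$   |                 | $-i\cos\frac{\pi}{16} - \sin\frac{\pi}{16}$                           | $e^{-i9\pi/16}$  |
|           | $-\cos\frac{\pi}{16} - i\sin\frac{\pi}{16}$                           | $e^{i17\pi/16}$  |                 | $-\cos\frac{\pi}{16} + i\sin\frac{\pi}{16}$                           | $e^{-i17\pi/16}$ |
|           | $-i\cos\frac{\pi}{16} + \sin\frac{\pi}{16}$                           | $e^{i25\pi/16}$  |                 | $i\cos\frac{\pi}{16} + \sin\frac{\pi}{16}$                            | $e^{-i25\pi/16}$ |

We would like to prove that the roots of $z$ and the roots of its conjugate $\bar{z}$ will never be equal. We can start by seeing what would happen if they were equal. Let $k, l, n \in \mathbb{Z}$, $n \geq 1$, $0 \leq k < n$, $0 \leq l < n$. Then, for some $m \in \mathbb{Z}$,

\[
\begin{aligned}
e^{i\frac{\theta + 2\pi k}{n}} &= e^{i\frac{-\theta + 2\pi l}{n}}, \\
\frac{\theta + 2\pi k}{n} &= \frac{-\theta + 2\pi l}{n} + 2\pi m, \\
\theta + 2\pi k &= -\theta + 2\pi l + 2\pi m n, \\
\theta &= \pi(l - k + mn), \\
\Rightarrow\ e^{i\theta} &\in \mathbb{R}.
\end{aligned}
\]

In other words, the roots of $z$ and $\bar{z}$ are only equal when $z = \bar{z}$. Otherwise, $z$ and $\bar{z}$ will each have $n$ distinct $n$th roots. This will be notable later in section 4.4.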

We would like to find a ring isomorphism $\varphi$ from the complex numbers to a set of matrices. Searching yields the mapping $\varphi : \mathbb{C} \to \mathbb{R}^{2 \times 2}$ for a complex number to a real matrix [37]:

\[
\text{if } z = a + bi, \text{ then } \varphi(z) \mapsto Z = \begin{pmatrix} a & b \\ -b & a \end{pmatrix},
\]

where $z \in \mathbb{C}$, $Z \in \mathbb{R}^{2 \times 2}$, and $a, b \in \mathbb{R}$. We can then see that the roots of the complex number $z$ can be used to find roots for the real matrix $Z$.

This method has multiple advantages for the matrices in $\varphi$: it will find exact roots, while the iterative methods all find numerical approximations; it is very efficient, as roots of complex numbers are easy to calculate; and it will find $n$ roots, namely those which correspond to roots of the corresponding complex numbers.
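As a hedged illustration of how the mapping carries roots across, the sketch below maps each square root of $z = 3 + 4i$ to a real matrix and checks that its square reproduces $\varphi(z)$. The function names and the example value are assumptions; in floating point the roots are of course only approximate, unlike the exact roots discussed above.

```python
import cmath
import math


def phi(z):
    """Real 2x2 matrix [[a, b], [-b, a]] associated with z = a + bi."""
    return [[z.real, z.imag], [-z.imag, z.real]]


def nth_roots(z, n):
    """All n-th roots of z via the generalized de Moivre formula."""
    r, theta = cmath.polar(z)
    return [cmath.rect(r ** (1.0 / n), (theta + 2.0 * math.pi * k) / n)
            for k in range(n)]


def mat_mul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


z = 3 + 4j
Z = phi(z)
# One real matrix root per complex root; here the roots are 2 + i and -2 - i.
matrix_roots = [phi(w) for w in nth_roots(z, 2)]
residuals = [
    max(abs(mat_mul(R, R)[i][j] - Z[i][j]) for i in range(2) for j in range(2))
    for R in matrix_roots
]
```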

The weakness of this approach is that it is not clear that all possible roots for a matrix in $\varphi$ will be found using this mapping. Further, this mapping cannot be used to represent any arbitrary $2 \times 2$ real matrix, leaving much of the matrix space of the $2 \times 2$ real matrices untouched. Thus, it appears to be necessary to try a larger complex space.

3.2

Quaternions

A larger well-known complex number system is the set of quaternions. We also have a method for determining any root of a quaternion, using a variant of de Moivre's formula. This is achieved by taking a quaternion $z \in \mathbb{H}$, $z = a + bi + cj + dk$, and writing it as $z = a + \omega$, where $a$ is the real part of $z$, and $\omega$ is what is called the pure quaternion part of $z$.

If we now take $q$ to be a unit quaternion (i.e. $|q| = 1$), then we can write the unit quaternion $q = a + bi + cj + dk$ as:

\[
q = \cos\theta + \omega\sin\theta,
\]

where $\cos\theta = a$ and $\omega$ is now the pure part normalized to unit length,

\[
\omega = \frac{1}{\sqrt{b^2 + c^2 + d^2}}(bi + cj + dk)
= \frac{1}{\sqrt{1 - a^2}}(bi + cj + dk),
\]

a form similar to the polar form of a complex number. It is then possible to express de Moivre's formula for a unit quaternion $q$ as [8]

\[
q^n = e^{\omega n\theta} = (\cos\theta + \omega\sin\theta)^n = \cos n\theta + \omega\sin n\theta.
\]

A generalization of de Moivre's formula shows that $n$th roots of a unit quaternion can be written [31]

\[
\alpha = \cos\frac{\theta + 2k\pi}{n} + \omega\sin\frac{\theta + 2k\pi}{n},
\]

where $k, n \in \mathbb{Z}$, $0 \leq k < n$.
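The quaternion root formula can be sketched as follows, storing a quaternion $a + bi + cj + dk$ as the tuple `(a, b, c, d)` and using the standard Hamilton product. The function names and the example quaternion are assumptions for illustration, and the sketch only handles unit quaternions with a nonzero pure part, since $\omega$ is otherwise undefined.

```python
import math


def q_mul(p, q):
    """Hamilton product of quaternions stored as (a, b, c, d)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
            a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
            a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
            a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2)


def unit_quaternion_roots(q, n):
    """n-th roots cos(phi_k) + w sin(phi_k), phi_k = (theta + 2 k pi) / n."""
    a, b, c, d = q
    v = math.sqrt(b * b + c * c + d * d)   # equals sin(theta) for a unit q
    if v == 0:
        raise ValueError("pure part is zero, so the axis w is undefined")
    theta = math.atan2(v, a)
    w = (b / v, c / v, d / v)              # unit pure quaternion
    roots = []
    for k in range(n):
        phi_k = (theta + 2.0 * math.pi * k) / n
        s = math.sin(phi_k)
        roots.append((math.cos(phi_k), w[0] * s, w[1] * s, w[2] * s))
    return roots


q = (0.5, 0.5, 0.5, 0.5)                   # a unit quaternion
cube_roots = unit_quaternion_roots(q, 3)
# Cube each candidate root so it can be compared with q.
cubes = [q_mul(q_mul(r, r), r) for r in cube_roots]
```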

The quaternions are also representable in matrix form, as either a complex $2 \times 2$ matrix, or a real $4 \times 4$ matrix, with isomorphisms $\varphi_1 : \mathbb{H} \to \mathbb{C}^{2 \times 2}$ and $\varphi_2 : \mathbb{H} \to \mathbb{R}^{4 \times 4}$ [52]: if $z = a + bi + cj + dk \in \mathbb{H}$, with $a, b, c, d \in \mathbb{R}$, then

\[
\varphi_1(z) \mapsto Z_1 = \begin{pmatrix} a + bi & c + di \\ -c + di & a - bi \end{pmatrix} \in \mathbb{C}^{2 \times 2},
\]

\[
\varphi_2(z) \mapsto Z_2 = \begin{pmatrix}
a & b & c & d \\
-b & a & -d & c \\
-c & d & a & -b \\
-d & -c & b & a
\end{pmatrix} \in \mathbb{R}^{4 \times 4}.
\]

Even though we can use de Moivre's formula and the isomorphisms above to find roots of matrices of the appropriate forms here, it should be noted that in neither of the matrix equivalences above are we able to represent any arbitrary matrix. What we would like to find is some isomorphism which forms a basis for the matrix space.

3.3

Cayley-Dickson construction

We can continue looking at larger complex number fields through the same type of method used to yield the Quaternion space, namely the Cayley-Dickson construction [18]. This construction produces increasingly larger complex number spaces, doubling the dimension each time the construction is used on the previously resulting complex space. In this way, the complex numbers, quaternions, and larger spaces can be realized as constructions on the field of real numbers.

We start by looking at a complex number as an ordered pair $(a, b)$. On such ordered pairs, we define addition, multiplication, and the conjugate (or involution) as:

\[
\begin{aligned}
(a, b) + (c, d) &= (a + c,\ b + d), \\
(a, b)(c, d) &= (ac - db,\ ad + cb), \\
(a, b)^* &= (a, -b).
\end{aligned}
\]

We take this to be a real algebra $A$, and construct a new algebra $A'$ as a direct sum of linear spaces $A' = A \oplus A$, with multiplication defined as

\[
(a, b)(c, d) = (ac - db^*,\ a^*d + cb)
\]

and conjugation extended to

\[
(a, b)^* = (a^*, -b).
\]
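The doubling rule lends itself to a short recursive sketch. Here a real number is a plain Python number and every higher element is a nested pair, so a complex number is a pair of reals and a quaternion is a pair of such pairs. The function names and the particular pairs chosen to play the roles of the quaternion units are assumptions for illustration.

```python
def neg(x):
    """Negate a real number or, recursively, every component of a pair."""
    return tuple(neg(c) for c in x) if isinstance(x, tuple) else -x


def add(x, y):
    """Componentwise addition at any level of the construction."""
    if isinstance(x, tuple):
        return tuple(add(p, q) for p, q in zip(x, y))
    return x + y


def sub(x, y):
    return add(x, neg(y))


def conj(x):
    """(a, b)* = (a*, -b); a real number is its own conjugate."""
    if not isinstance(x, tuple):
        return x
    a, b = x
    return (conj(a), neg(b))


def mul(x, y):
    """(a, b)(c, d) = (ac - d b*, a* d + c b), applied recursively."""
    if not isinstance(x, tuple):
        return x * y
    (a, b), (c, d) = x, y
    return (sub(mul(a, c), mul(d, conj(b))),
            add(mul(conj(a), d), mul(c, b)))


# Complex numbers as pairs of reals.
one, zero, i_c = (1, 0), (0, 0), (0, 1)
# Two quaternion units as pairs of complex numbers.
qi = (i_c, zero)
qj = (zero, one)
ij = mul(qi, qj)
ji = mul(qj, qi)
```

With these definitions `ij` and `ji` differ by a sign, which is the loss of commutativity at the quaternion level; nesting one level deeper gives elements of the next algebra with the same code.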

This construction can now be used to build an infinite number of extensions to the complex numbers, known as Cayley-Dickson algebras, each with dimension twice the previous. However, with each further step, some properties of the algebra may be lost. Applying this construction to the real numbers yields the complex numbers. When applied to the complex numbers, we get the Quaternions, which are not commutative. Applied to the Quaternions this construction results in the Octonions, which are not associative [3]. Applying it further to the Octonions yields the Sedenions, which are not alternative [27]. Further iterations from the Sedenions do not lose any additional properties, and remain nicely normed and are power associative [2].

Given the loss of algebraic properties in the Cayley-Dickson construction, it is important to note in particular that the Octonions and beyond are no longer associative, meaning that it will probably not be possible to find a direct matrix representation for them, as a mapping from a matrix space would be to an associative subgroup within the Octonions or above. This leaves only the complex numbers and quaternions with matrix representations known at this time. It appears necessary at this point to investigate more properties of matrix spaces in order to find some alternate number system in which we might find an equivalence or isomorphism to help us search for roots.

Chapter 4

Matrix Forms and Properties

It is not obvious that the iterative or series methods examined earlier are the only methods

for looking for a root of a matrix.

Nor is it obvious that the complex numbers and the

quaternions are the only types of numbers that can be represented in a matrix space. Next

we examine some transformations on matrices and different representations of a matrix, and

the properties that can be found or calculations that can be made within each representation.

4.1

Powers of a Matrix and Diagonal Matrices

A power of a matrix is well known, and has the same meaning with respect to matrix multiplication as a power of an element of a field such as the real or complex numbers. When we take any positive integer power $n$ of any matrix $A$, we simply multiply that matrix by itself $n - 1$ times.

\[
A^n = \underbrace{A \cdot A \cdot A \cdots A}_{n}
\]

When we consider any diagonal real matrix $A \in \mathbb{R}^{m \times m}$ or diagonal complex matrix $A \in \mathbb{C}^{m \times m}$, multiplying it by itself looks like this:

\[
A^2 = A \cdot A =
\begin{pmatrix}
a_1 & 0 & \cdots & 0 \\
0 & a_2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m
\end{pmatrix}
\cdot
\begin{pmatrix}
a_1 & 0 & \cdots & 0 \\
0 & a_2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m
\end{pmatrix}
=
\begin{pmatrix}
a_1^2 & 0 & \cdots & 0 \\
0 & a_2^2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m^2
\end{pmatrix}.
\]

By induction, we can see that to find any positive integer power $n$ of the matrix $A$, we simply have to raise each element on the diagonal to the same power.

\[
A^n =
\begin{pmatrix}
a_1^n & 0 & \cdots & 0 \\
0 & a_2^n & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m^n
\end{pmatrix}.
\]

We also note that the $0$th power of a matrix $A$ is defined to be the identity matrix $I$. It is clear that this extends the previous definition.

\[
A^0 =
\begin{pmatrix}
a_1^0 & 0 & \cdots & 0 \\
0 & a_2^0 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m^0
\end{pmatrix}
=
\begin{pmatrix}
1 & 0 & \cdots & 0 \\
0 & 1 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & 1
\end{pmatrix}
= I.
\]

When considering a negative power of a matrix, we take such an exponent to be a power of the matrix inverse of $A$. The matrix inverse of a diagonal matrix, which exists when every $a_i \neq 0$, yields another diagonal matrix containing the multiplicative inverse of each value on the diagonal of the original matrix in order. Thus, for any negative integer power $-n$,

\[
A^{-n} = \left(A^{-1}\right)^n =
\begin{pmatrix}
a_1^{-1} & 0 & \cdots & 0 \\
0 & a_2^{-1} & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m^{-1}
\end{pmatrix}^{n}
=
\begin{pmatrix}
a_1^{-n} & 0 & \cdots & 0 \\
0 & a_2^{-n} & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m^{-n}
\end{pmatrix}.
\]

Thus, we can say that for any diagonal matrix $A$, finding $A^n$ by taking each element on the diagonal to the $n$th power holds for any integer value of $n$.

It can also be shown that the same is true for any rational power of a diagonal matrix. We start by looking at a diagonal matrix $A'$ where $(A')^n = A$.

\[
(A')^n =
\begin{pmatrix}
(a_1')^n & 0 & \cdots & 0 \\
0 & (a_2')^n & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & (a_m')^n
\end{pmatrix}
=
\begin{pmatrix}
a_1 & 0 & \cdots & 0 \\
0 & a_2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m
\end{pmatrix}.
\]

Taking the entries along the diagonal piecewise, we see

\[
a_i = (a_i')^n, \qquad i = 1, \ldots, m.
\]

Since each element in the arrays is either a real or a complex number (i.e. $a_i, a_i' \in \mathbb{C}$), we can say that $a_i'$ is an $n$th root of $a_i$. We then can choose

\[
a_i' = (a_i)^{1/n}, \qquad i = 1, \ldots, m,
\]

where we define $a_i^{1/n}$ as the principal $n$th root of $a_i$. This produces one example of such a matrix $A'$.

Now, we can rewrite $A'$ in terms of values from $A$:

\[
A' =
\begin{pmatrix}
a_1' & 0 & \cdots & 0 \\
0 & a_2' & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & a_m'
\end{pmatrix}
=
\begin{pmatrix}
(a_1)^{1/n} & 0 & \cdots & 0 \\
0 & (a_2)^{1/n} & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & (a_m)^{1/n}
\end{pmatrix}.
\]

Given this representation of $A'$, it is reasonable to define $A^{1/n} = A'$. This is our first example of something we might call the principal root of a matrix. Note that since, in general, if $a_i \neq 0$, then $a_i$ has $n$ distinct $n$th roots, the matrix $A$ may have up to $n^m$ distinct $n$th roots of this type. We also note that it is not obvious that there are no other roots of $A$. Thus, we can see that if a matrix is diagonal, this implies that we can always find roots of that matrix. In fact, this is also true if the matrix is diagonalizable, as we see in the next section.
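The count of up to $n^m$ diagonal roots can be made concrete with a short sketch that represents a diagonal matrix by the list of its diagonal entries. The function names and the example entries are assumptions for illustration; a zero entry would contribute the same root $n$ times, so the roots returned are distinct only when every entry is nonzero.

```python
import cmath
import itertools
import math


def nth_roots(z, n):
    """All n-th roots of the complex number z (k = 0 is the principal one)."""
    r, theta = cmath.polar(z)
    return [cmath.rect(r ** (1.0 / n), (theta + 2.0 * math.pi * k) / n)
            for k in range(n)]


def diagonal_nth_roots(diagonal, n):
    """Every diagonal n-th root of diag(a_1, ..., a_m), one list per root."""
    choices = [nth_roots(complex(a), n) for a in diagonal]
    # One independent choice of root per diagonal entry: n^m combinations.
    return [list(combo) for combo in itertools.product(*choices)]


def principal_diagonal_root(diagonal, n):
    """The root built from the principal n-th root of every entry."""
    return [nth_roots(complex(a), n)[0] for a in diagonal]


roots = diagonal_nth_roots([4, 9], 2)       # diagonals (+-2, +-3)
principal = principal_diagonal_root([4, 9], 2)
```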

4.2

Matrix Diagonalization

To diagonalize a real matrix $A$, we need to find an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix [44]. If an $m \times m$ matrix has exactly $m$ distinct eigenvalues, then that matrix is diagonalizable. Note however that the converse may not be true. Thus, if a matrix does not have $m$ distinct eigenvalues, it may still be diagonalizable. In order to know if a given matrix is diagonalizable or not, the diagonalization theorem states that an $m \times m$ matrix $A$ is diagonalizable if and only if $A$ has $m$ linearly independent eigenvectors [5].

If $A$ is diagonalizable and the $m$ eigenvalues of the matrix, counting multiplicity, are $\lambda_1, \lambda_2, \ldots, \lambda_m$, then we can write

\[
D = P^{-1}AP =
\begin{pmatrix}
\lambda_1 & 0 & \cdots & 0 \\
0 & \lambda_2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & \lambda_m
\end{pmatrix}.
\]

Note that each column in the matrix $P$ is equal to one of the eigenvectors of $A$, namely column $n$ is an eigenvector associated with the eigenvalue $\lambda_n$, and the resulting diagonal matrix $D$ contains the eigenvalues of $A$ on its diagonal. The order of the eigenvectors in the columns of $P$ is not important, as differing orders only change the order of the eigenvalues along the diagonal of $D$.

It can also be shown that we can use the diagonalized matrix $D$ to find an $n$th root of a matrix.

\[
P^{-1}AP = D \iff A = PDP^{-1}.
\]

Letting $D' = D^{\frac{1}{n}}$ and $A' = P(D')P^{-1}$,

\[
\begin{aligned}
(A')^n &= \left(P(D')P^{-1}\right)^n \\
&= \left(P(D')P^{-1}\right)\left(P(D')P^{-1}\right)\left(P(D')P^{-1}\right) \cdots \left(P(D')P^{-1}\right) \\
&= P(D')(P^{-1}P)(D')(P^{-1}P) \cdots (P^{-1}P)(D')P^{-1} \\
&= P(D')^n P^{-1}.
\end{aligned}
\]

Since $(D')^n = D$, we have $(A')^n = PDP^{-1} = A$, and again we will have a principal $n$th root for which we can write

\[
A^{\frac{1}{n}} = P D^{\frac{1}{n}} P^{-1}.
\]
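A hedged sketch of the formula $A^{1/n} = P D^{1/n} P^{-1}$ for a $2 \times 2$ matrix with two distinct eigenvalues. The eigenvalues come from the quadratic characteristic polynomial and the eigenvectors are written down directly; the function names and the example matrix are assumptions for illustration, and the sketch works in complex arithmetic so that complex eigenvalues are allowed.

```python
import cmath


def mat_mul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inv2(m):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (p, q), (r, s) = m
    det = p * s - q * r
    if det == 0:
        raise ZeroDivisionError("matrix is singular")
    return [[s / det, -q / det], [-r / det, p / det]]


def eig2(a):
    """Eigenvalues and an eigenvector matrix P of a 2x2 matrix."""
    (p, q), (r, s) = a
    if q == 0 and r == 0:
        return (p, s), [[1, 0], [0, 1]]      # already diagonal
    tr, det = p + s, p * s - q * r
    disc = cmath.sqrt(tr * tr - 4 * det)
    l1, l2 = (tr + disc) / 2, (tr - disc) / 2
    if l1 == l2:
        raise ValueError("repeated eigenvalue: not handled by this sketch")

    def vec(l):
        # A nonzero solution of (A - l I) v = 0.
        return [q, l - p] if q != 0 else [l - s, r]

    v1, v2 = vec(l1), vec(l2)
    return (l1, l2), [[v1[0], v2[0]], [v1[1], v2[1]]]


def principal_root(a, n):
    """P D^(1/n) P^(-1) using the principal n-th root of each eigenvalue."""
    (l1, l2), p_mat = eig2(a)
    d_root = [[complex(l1) ** (1.0 / n), 0], [0, complex(l2) ** (1.0 / n)]]
    return mat_mul(mat_mul(p_mat, d_root), inv2(p_mat))


A = [[33.0, 24.0], [48.0, 57.0]]             # eigenvalues 81 and 9
X = principal_root(A, 2)                     # close to [[5, 2], [4, 7]]
```

Choosing non-principal roots of the eigenvalues in `d_root` would give the other roots of this type.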

4.3

Examples: Diagonalizing Matrix Forms of Complex Numbers

We already know that we can use de Moivre's theorem to directly find the $n$th roots of a complex number $z \in \mathbb{C}$, or of a quaternion $q \in \mathbb{H}$. It would also be useful to see if the matrix forms of the complex numbers and quaternions are also diagonalizable, and further if the $n$th roots of such diagonal matrices have the correct form.

4.3.1

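Example 1: $2 \times 2$ Matrix of a Complex Number

The matrix form of a complex number contains only real numbers, so it makes sense to first try to diagonalize as a real matrix. Let $C \in \mathbb{R}^{2\times 2}$ be the matrix form of a complex number,

\[
C = \begin{bmatrix} a & b \\ -b & a \end{bmatrix}.
\]

We want to find a matrix $X \in \mathbb{R}^{2\times 2}$ such that $X^{-1}CX$ is diagonal. Let

\[
X = \begin{bmatrix} w & x \\ y & z \end{bmatrix}.
\]

So,

\[
X^{-1} = \frac{1}{wz - xy}\begin{bmatrix} z & -x \\ -y & w \end{bmatrix}.
\]

Now multiplying them together yields

\begin{align*}
X^{-1}CX &= \frac{1}{wz - xy}
\begin{bmatrix} z & -x \\ -y & w \end{bmatrix}
\begin{bmatrix} a & b \\ -b & a \end{bmatrix}
\begin{bmatrix} w & x \\ y & z \end{bmatrix} \\
&= \frac{1}{wz - xy}
\begin{bmatrix} za + xb & zb - xa \\ -ya - wb & -yb + wa \end{bmatrix}
\begin{bmatrix} w & x \\ y & z \end{bmatrix} \\
&= \frac{1}{wz - xy}
\begin{bmatrix}
(za + xb)w + (zb - xa)y & (za + xb)x + (zb - xa)z \\
(-ya - wb)w + (-yb + wa)y & (-ya - wb)x + (-yb + wa)z
\end{bmatrix}
\end{align*}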

which must be a diagonal matrix. This implies $(za + xb)x + (zb - xa)z = 0$ and $(-ya - wb)w + (-yb + wa)y = 0$. Simplifying these equations, we see

\begin{align*}
0 &= (za + xb)x + (zb - xa)z \\
&= xza + x^2 b + z^2 b - xza \\
&= x^2 b + z^2 b \\
&= (x^2 + z^2)b
\end{align*}

and

\begin{align*}
0 &= (-ya - wb)w + (-yb + wa)y \\
&= -wya - w^2 b - y^2 b + wya \\
&= -w^2 b - y^2 b \\
&= (w^2 + y^2)(-b).
\end{align*}

So, we find that either $b = 0$ or both $x^2 + z^2 = 0$ and $w^2 + y^2 = 0$, meaning that when $w, x, y, z \in \mathbb{R}$, we must have $w = x = y = z = 0$, in which case $X$ is not invertible. Thus, the real matrix form of a non-real complex number is not diagonalizable as a real matrix.

Since the diagonalization failed in the real matrices, we should instead try to diagonalize in the complex matrices. Let $C \in \mathbb{C}^{2\times 2}$ be a matrix in the form of a representation of a complex number,

\[
C = \begin{bmatrix} a & b \\ -b & a \end{bmatrix}.
\]

We verify that the matrix is diagonalizable by finding the eigenvalues.

\begin{align*}
\det(C - \lambda I) &= \begin{vmatrix} a - \lambda & b \\ -b & a - \lambda \end{vmatrix} \\
&= (a - \lambda)(a - \lambda) - (b)(-b) \\
&= \lambda^2 - 2\lambda a + a^2 + b^2 = 0 \\
\implies (\lambda - a)^2 &= -b^2 \\
\lambda - a &= \pm\sqrt{-b^2} = \pm bi \\
\implies \lambda &= a \pm bi.
\end{align*}

We have exactly two distinct eigenvalues when $b \neq 0$, which means that this form of $2 \times 2$ matrix is always diagonalizable (when $b = 0$ the matrix is already diagonal). Also note that the eigenvalues of the matrix are the original complex number and its conjugate. We continue with the eigenvectors.

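For $\lambda = a + bi$, $(C - \lambda I)v = 0$ gives

\[
\begin{bmatrix} -bi & b \\ -b & -bi \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \end{bmatrix},
\]

\begin{align*}
-bix + by &= 0, \\
-bx - biy &= 0.
\end{align*}

Thus since we assume $b \neq 0$, $y = ix$ and the eigenspace of $a + bi$ is spanned by

\[
\begin{bmatrix} 1 \\ i \end{bmatrix}.
\]

Similarly, for $\lambda = a - bi$, $(C - \lambda I)v = 0$ gives

\[
\begin{bmatrix} bi & b \\ -b & bi \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \end{bmatrix},
\]

\begin{align*}
bix + by &= 0, \\
-bx + biy &= 0.
\end{align*}

Thus, the eigenspace of $a - bi$ is spanned by

\[
\begin{bmatrix} 1 \\ -i \end{bmatrix}.
\]

So, placing the eigenvectors as columns in a matrix, we have

\[
P = \begin{bmatrix} 1 & 1 \\ i & -i \end{bmatrix}
\]

and its inverse

\[
P^{-1} = \frac{1}{2}\begin{bmatrix} 1 & -i \\ 1 & i \end{bmatrix}.
\]

So, we can use these to find the diagonalized form of the matrix representation of any complex number:

\[
\frac{1}{2}\begin{bmatrix} 1 & -i \\ 1 & i \end{bmatrix}
\begin{bmatrix} a & b \\ -b & a \end{bmatrix}
\begin{bmatrix} 1 & 1 \\ i & -i \end{bmatrix}
= \begin{bmatrix} a + bi & 0 \\ 0 & a - bi \end{bmatrix},
\]

where we note that the entries on the diagonal are the original complex number and its conjugate. The roots of the resulting diagonal matrix can now be easily calculated by the roots of the entries on the diagonal, and as noted earlier, these values will not be equal unless the original number is equal to its complex conjugate. These roots will be further investigated in section 4.4.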
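The diagonalization of the matrix form of a complex number can be checked numerically. The sketch below assumes the eigenvector matrix $P$ with columns $(1, i)$ and $(1, -i)$ and its inverse $\frac{1}{2}\begin{bmatrix} 1 & -i \\ 1 & i \end{bmatrix}$; the sample value $z = 3 + 4i$ and the helper names are illustrative assumptions.

```python
# Sketch: diagonalize the 2x2 matrix form of z = a + bi and take a square root.
# The sample value z and the helper names are illustrative assumptions.
import cmath

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def complex_to_matrix(z):
    """Matrix form [[a, b], [-b, a]] of the complex number z = a + bi."""
    return [[z.real, z.imag], [-z.imag, z.real]]

P = [[1, 1], [1j, -1j]]               # eigenvectors (1, i) and (1, -i) as columns
P_inv = [[0.5, -0.5j], [0.5, 0.5j]]   # inverse of P

z = 3 + 4j
Z = complex_to_matrix(z)
Z_d = matmul(matmul(P_inv, Z), P)     # expected: diag(z, conjugate of z)

r = cmath.sqrt(z)                     # principal square root of z
D_root = [[r, 0], [0, r.conjugate()]]
Z_root = matmul(matmul(P, D_root), P_inv)   # expected: matrix form of r
print(Z_d)
print(Z_root)
```

Transforming the diagonal root back with $P$ recovers the matrix form of the principal root of $z$, which is what we would hope for.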

4.3.2

Example 2: $2 \times 2$ Matrix of a Quaternion

For some quaternion $q \in \mathbb{H}$, $q = a + bi + cj + dk$, let $Q \in \mathbb{C}^{2\times 2}$ be a matrix form of a quaternion. We find the eigenvalues of $Q$:

\[
Q = \begin{bmatrix} a + bi & c + di \\ -c + di & a - bi \end{bmatrix},
\]

\begin{align*}
\det(Q - \lambda I) &= \det\begin{bmatrix} (a + bi) - \lambda & c + di \\ -c + di & (a - bi) - \lambda \end{bmatrix} \\
&= (a - \lambda + bi)(a - \lambda - bi) - (c + di)(-c + di) \\
&= a^2 - 2a\lambda + \lambda^2 + b^2 + (ba - b\lambda - ba + b\lambda)i - \left(-c^2 - d^2 + (-cd + cd)i\right) \\
&= \lambda^2 - 2a\lambda + a^2 + b^2 + c^2 + d^2.
\end{align*}

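Thus, the characteristic equation is

\begin{align*}
(\lambda - a)^2 + b^2 + c^2 + d^2 &= 0, \\
(\lambda - a)^2 &= -b^2 - c^2 - d^2, \\
\lambda &= a \pm i\sqrt{b^2 + c^2 + d^2}.
\end{align*}

We can choose to represent the quantity $\sqrt{b^2 + c^2 + d^2}$ with $\omega$, giving us the two eigenvalues $a + \omega i$ and $a - \omega i$, which are distinct when $\omega \neq 0$, and we can diagonalize this matrix. Now, to find the eigenvectors, for $\lambda = a + \omega i$, $(Q - \lambda I)v = 0$ gives

\[
\begin{bmatrix} bi - \omega i & c + di \\ -c + di & -bi - \omega i \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \end{bmatrix},
\]

\begin{align*}
x(bi - \omega i) + y(c + di) &= 0, \\
x(-c + di) + y(-bi - \omega i) &= 0.
\end{align*}

So

\[
(c + di)y = -(bi - \omega i)x.
\]

And the resulting eigenvector:

\[
v = \begin{bmatrix} c + di \\ (-b + \omega)i \end{bmatrix}.
\]

Similarly, for $\lambda = a - \omega i$,

\[
\begin{bmatrix} bi + \omega i & c + di \\ -c + di & -bi + \omega i \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \end{bmatrix},
\]

\begin{align*}
x(bi + \omega i) + y(c + di) &= 0, \\
x(-c + di) + y(-bi + \omega i) &= 0.
\end{align*}

So

\[
(c + di)y = -(bi + \omega i)x.
\]

And the resulting eigenvector:

\[
v = \begin{bmatrix} c + di \\ (-b - \omega)i \end{bmatrix}.
\]

Now the eigenvectors yield the matrix

\[
P = \begin{bmatrix} c + di & c + di \\ (-b + \omega)i & (-b - \omega)i \end{bmatrix}
\]

and its inverse

\[
P^{-1} = \frac{1}{2\omega i(c + di)}
\begin{bmatrix} (b + \omega)i & c + di \\ (-b + \omega)i & -(c + di) \end{bmatrix}.
\]

Note that for the inverse to exist, we must have $(c + di) \neq 0$.

These are now used to find the diagonalized form of the complex matrix representation of any quaternion, $Q_d \in \mathbb{C}^{2\times 2}$,

\[
P^{-1}QP = Q_d = \begin{bmatrix} a + \omega i & 0 \\ 0 & a - \omega i \end{bmatrix},
\]

and we can now create $n$th roots as before.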
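As a sanity check on the eigenvectors of the $2 \times 2$ quaternion matrix, the relation $QP = PQ_d$ can be tested for sample values. This is a minimal sketch; the values of $a, b, c, d$ and the helper names are illustrative assumptions, and the check avoids forming $P^{-1}$ explicitly.

```python
# Sketch: verify Q P = P Q_d for the 2x2 complex matrix form of a quaternion.
# The sample values a, b, c, d are illustrative assumptions.
import math

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def quaternion_to_matrix(a, b, c, d):
    """Matrix form [[a+bi, c+di], [-c+di, a-bi]] of a + bi + cj + dk."""
    return [[complex(a, b), complex(c, d)],
            [complex(-c, d), complex(a, -b)]]

a, b, c, d = 1.0, 2.0, 3.0, 4.0
omega = math.sqrt(b * b + c * c + d * d)

Q = quaternion_to_matrix(a, b, c, d)
# Columns are the eigenvectors for a + omega*i and a - omega*i.
P = [[complex(c, d), complex(c, d)],
     [complex(0, -b + omega), complex(0, -b - omega)]]
Q_d = [[complex(a, omega), 0], [0, complex(a, -omega)]]

lhs = matmul(Q, P)
rhs = matmul(P, Q_d)
residual = max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2))
print(residual)   # close to zero when the columns of P are eigenvectors
```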

4.3.3

Example 3: $4 \times 4$ Matrix of a Quaternion

We now look at the $4 \times 4$ matrix form of a quaternion, and see if it is diagonalizable. Let $Q \in \mathbb{C}^{4\times 4}$ be a matrix form of a quaternion. We find the eigenvalues of $Q$:

\[
Q = \begin{bmatrix}
a & b & c & d \\
-b & a & -d & c \\
-c & d & a & -b \\
-d & -c & b & a
\end{bmatrix}.
\]

Writing $Q = aI + N$, the matrix $N$ is skew-symmetric, and multiplying it out gives $N^2 = -(b^2 + c^2 + d^2)I$. Hence $(Q - aI)^2 = -(b^2 + c^2 + d^2)I$, every eigenvalue satisfies $(\lambda - a)^2 = -(b^2 + c^2 + d^2)$, and

\[
\det(Q - \lambda I) =
\begin{vmatrix}
a - \lambda & b & c & d \\
-b & a - \lambda & -d & c \\
-c & d & a - \lambda & -b \\
-d & -c & b & a - \lambda
\end{vmatrix}
= \left(\lambda^2 - 2a\lambda + a^2 + b^2 + c^2 + d^2\right)^2 = 0.
\]

Thus,

\begin{align*}
(\lambda - a)^2 + b^2 + c^2 + d^2 &= 0, \\
(\lambda - a)^2 &= -b^2 - c^2 - d^2, \\
\lambda &= a \pm \sqrt{-b^2 - c^2 - d^2}.
\end{align*}

Using the representations $\omega = \sqrt{b^2 + c^2 + d^2}$ and $\gamma = c^2 + d^2$, the eigenvalues are $a + \omega i$ and $a - \omega i$, each with multiplicity 2. When $\omega \neq 0$, the relation $(Q - aI)^2 + \omega^2 I = 0$ shows that the minimal polynomial of $Q$ has two distinct roots, indicating that this matrix is also diagonalizable.

Assuming $\gamma \neq 0$, for $\lambda = a - \omega i$ we have the two linearly independent eigenvectors

\[
v_1 = \begin{bmatrix} \dfrac{bd + c\omega i}{\gamma} \\[2mm] \dfrac{bc - d\omega i}{\gamma} \\[2mm] 1 \\ 0 \end{bmatrix},
\qquad
v_2 = \begin{bmatrix} \dfrac{-bc + d\omega i}{\gamma} \\[2mm] \dfrac{bd + c\omega i}{\gamma} \\[2mm] 0 \\ 1 \end{bmatrix}.
\]

For $\lambda = a + \omega i$, the eigenvectors are the complex conjugates,

\[
v_3 = \begin{bmatrix} \dfrac{bd - c\omega i}{\gamma} \\[2mm] \dfrac{bc + d\omega i}{\gamma} \\[2mm] 1 \\ 0 \end{bmatrix},
\qquad
v_4 = \begin{bmatrix} \dfrac{-bc - d\omega i}{\gamma} \\[2mm] \dfrac{bd - c\omega i}{\gamma} \\[2mm] 0 \\ 1 \end{bmatrix}.
\]

We then construct the matrix $P = \begin{bmatrix} v_1 & v_2 & v_3 & v_4 \end{bmatrix}$ and its inverse $P^{-1}$ using the eigenvectors, and use them to generate a diagonal matrix. We write out the diagonal matrix $Q_d \in \mathbb{C}^{4\times 4}$,

\[
P^{-1}QP = Q_d = \begin{bmatrix}
a - \omega i & 0 & 0 & 0 \\
0 & a - \omega i & 0 & 0 \\
0 & 0 & a + \omega i & 0 \\
0 & 0 & 0 & a + \omega i
\end{bmatrix},
\]

and again we can find roots of the diagonalized matrix.

4.4

Roots of Complex Numbers vs. Roots of Diagonal Matrices

When de Moivre's formula is used to find a root of a complex number $z$, the matrix form of the root $z^{1/n}$ can easily be seen to be one root of the matrix form of the complex number $z$. Considering $z = e^{i\theta}$ (using polar form for readability), $Z$ as the matrix form of $z$, $Z_d = P^{-1}ZP$ as the diagonalization of the matrix $Z$, and $z^{1/n}$ or $Z_d^{1/n}$ as the principal $n$th root of each, we have

\[
Z_d = \begin{bmatrix} e^{i\theta} & 0 \\ 0 & e^{-i\theta} \end{bmatrix},
\qquad
Z_d^{1/n} = \begin{bmatrix} e^{i\frac{1}{n}\theta} & 0 \\ 0 & e^{-i\frac{1}{n}\theta} \end{bmatrix}.
\]

We know that these are not the only possible roots of the matrix $Z_d$. In fact, since $z$ and $\bar{z}$ each have $n$ distinct roots, any of the $n^2$ matrices formed by placing a root of $z$ and a root of $\bar{z}$ on the diagonal of the $2 \times 2$ matrix forms a valid matrix root of $Z_d$. Further, it is not obvious that the roots of a matrix found from diagonalization are the only roots of the matrix. As an example, in the $2 \times 2$ real matrix space, we can construct an example of a root of a diagonal matrix which is not itself a diagonal matrix.

\[
\begin{bmatrix} 1 & x \\ 0 & -1 \end{bmatrix}^2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
\]
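The non-diagonal root above is easy to confirm for several values of $x$. The following is a small sketch using exact integer arithmetic; the sample values of $x$ and the helper names are arbitrary assumptions.

```python
# Sketch: [[1, x], [0, -1]] squares to the identity for every x,
# so the identity has non-diagonal square roots.

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

IDENTITY = [[1, 0], [0, 1]]

def upper_root(x):
    """The non-diagonal candidate root [[1, x], [0, -1]]."""
    return [[1, x], [0, -1]]

# Sample values of x chosen arbitrarily for illustration.
checks = [matmul(upper_root(x), upper_root(x)) == IDENTITY for x in (-3, 0, 2, 7)]
print(all(checks))
```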

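We can see that for any real matrix of this form

\[
\begin{bmatrix} a & b \\ 0 & d \end{bmatrix}^2 = \begin{bmatrix} a^2 & ab + bd \\ 0 & d^2 \end{bmatrix},
\]

when $a = -d$, the square of the matrix is

\[
\begin{bmatrix} a^2 & 0 \\ 0 & d^2 \end{bmatrix}.
\]

In general, we can see for any $2 \times 2$ matrix whose square is diagonal,

\[
\begin{bmatrix} a & b \\ c & d \end{bmatrix}^2
= \begin{bmatrix} a^2 + bc & ab + bd \\ ac + cd & d^2 + bc \end{bmatrix}
= \begin{bmatrix} n & 0 \\ 0 & m \end{bmatrix},
\]

giving the set of equations

\begin{align*}
a^2 + bc &= n, \\
ab + bd &= 0 \Rightarrow a = -d \text{ or } b = 0, \\
ac + cd &= 0 \Rightarrow a = -d \text{ or } c = 0, \\
d^2 + bc &= m.
\end{align*}

So,

\begin{align*}
b = 0,\ c \neq 0 &\Rightarrow a = -d,\quad a^2 = d^2 = n = m, \\
c = 0,\ b \neq 0 &\Rightarrow a = -d,\quad a^2 = d^2 = n = m, \\
b \neq 0,\ c \neq 0 &\Rightarrow a = -d,\quad a^2 + bc = d^2 + bc = n = m, \\
b = c = 0 &\Rightarrow a^2 = n,\ d^2 = m\ \ \forall a, d.
\end{align*}

And conversely,

\begin{align*}
n = m &\Rightarrow a^2 + bc = d^2 + bc \\
&\Rightarrow a^2 = d^2 \\
&\Rightarrow a = \pm d,
\end{align*}

so,

\begin{align*}
a = d \neq 0 &\Rightarrow b = c = 0 \text{ since } ab + bd = 0 \text{ and } ac + cd = 0, \\
a = -d \neq 0 &\Rightarrow ab + bd = 0,\ ac + cd = 0,\ a^2 + bc = d^2 + bc = n = m\ \ \forall b, c, \\
a = d = 0 &\Rightarrow ab + bd = 0,\ ac + cd = 0,\ bc = n = m\ \ \forall b, c.
\end{align*}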

Thus, a diagonal $2 \times 2$ matrix $A$ has non-diagonal square roots if and only if it is a scalar multiple of the identity matrix, i.e. $A = nI$.

4.5

Jordan Canonical Form

A matrix in Jordan canonical form, or Jordan normal form, is a block matrix of the form

\[
J = \begin{bmatrix}
J_1 & & & \\
& J_2 & & \\
& & \ddots & \\
& & & J_m
\end{bmatrix}
\]

consisting of one or more Jordan blocks, where all entries outside of the blocks are zeros. Each Jordan block is a matrix having zeroes everywhere except the diagonal and the superdiagonal, and the elements on the superdiagonal are all 1.

\[
J_m = \begin{bmatrix}
\lambda_m & 1 & 0 & \cdots & 0 \\
0 & \lambda_m & 1 & \cdots & 0 \\
\vdots & & \ddots & \ddots & \vdots \\
0 & 0 & \cdots & \lambda_m & 1 \\
0 & 0 & \cdots & 0 & \lambda_m
\end{bmatrix}
\]

Each $\lambda_m$ represents an eigenvalue of the Jordan matrix, and each may or may not differ from the eigenvalues of the other blocks. A $1 \times 1$ matrix can also be considered a Jordan block even though it lacks a superdiagonal [48].

For any square matrix $A$ there is a Jordan matrix decomposition, unique up to the order of the Jordan blocks,

\[
A = SJS^{-1},
\]

where $S^{-1}$ is the matrix inverse of $S$, and $J$ is a matrix in Jordan canonical form [49]. Given any complex square matrix $A$, the matrix can be written in Jordan canonical form by finding a Jordan basis $b_{i,j}$ for each Jordan block, where each Jordan basis satisfies [39, 40]

\[
Ab_{i,1} = \lambda_i b_{i,1}
\]

and

\[
Ab_{i,j} = \lambda_i b_{i,j} + b_{i,j-1}.
\]

Much like diagonalized matrices, it is easy to relate a power of a matrix to a power of its Jordan canonical form,

\begin{align*}
A &= SJS^{-1}, \\
A^n &= \left(SJS^{-1}\right)^n \\
&= \left(SJS^{-1}\right)\left(SJS^{-1}\right) \cdots \left(SJS^{-1}\right) \\
&= SJ\left(S^{-1}S\right)J\left(S^{-1}S\right) \cdots \left(S^{-1}S\right)JS^{-1} \\
&= SJ^nS^{-1},
\end{align*}

and a power of a Jordan matrix can also be easily calculated using the power of its Jordan blocks.

\[
J^n = \begin{bmatrix}
J_1^n & & & \\
& J_2^n & & \\
& & \ddots & \\
& & & J_m^n
\end{bmatrix}.
\]
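The block-wise rule for powers of a Jordan matrix can be illustrated on a small case. The sketch below assumes one $2 \times 2$ block with eigenvalue 2 and one $1 \times 1$ block with eigenvalue 3; the helper names `jordan_block`, `block_diag`, and `matpow` are hypothetical and exist only for this example.

```python
# Sketch: the power of a Jordan matrix equals the block-diagonal matrix
# of the powers of its Jordan blocks. Blocks chosen for illustration.

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matpow(M, n):
    """Raise a square matrix to a non-negative integer power."""
    size = len(M)
    result = [[1 if i == j else 0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = matmul(result, M)
    return result

def jordan_block(lam, size):
    """Jordan block with eigenvalue lam: lam on the diagonal, 1 above it."""
    return [[lam if i == j else 1 if j == i + 1 else 0
             for j in range(size)] for i in range(size)]

def block_diag(blocks):
    """Assemble square blocks along the diagonal of a larger zero matrix."""
    total = sum(len(B) for B in blocks)
    M = [[0] * total for _ in range(total)]
    offset = 0
    for B in blocks:
        for i, row in enumerate(B):
            for j, value in enumerate(row):
                M[offset + i][offset + j] = value
        offset += len(B)
    return M

J1 = jordan_block(2, 2)
J2 = jordan_block(3, 1)
J = block_diag([J1, J2])

whole_power = matpow(J, 3)
blockwise_power = block_diag([matpow(J1, 3), matpow(J2, 3)])
print(whole_power == blockwise_power)
```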

Also as with a diagonal matrix, roots of a Jordan matrix can be calculated using the roots of its Jordan blocks. Given a Jordan matrix $J'$ where $(J')^n = J$, we can write $J'$ in terms of the Jordan blocks from $J$ as:

\[
J' = \begin{bmatrix}
J_1' & & & \\
& J_2' & & \\
& & \ddots & \\
& & & J_m'
\end{bmatrix},
\]

where $(J_i')^n = J_i$. This would potentially allow us to find an $n$th root of any square matrix, provided we can find roots of its corresponding Jordan blocks. The next sections explore cases where the roots may not exist, and a criterion for knowing when the roots do exist.

4.6

Nilpotent Matrices

A nilpotent matrix is any matrix that when taken to some power $n$ becomes the zero matrix. For an $m \times m$ matrix $A$,

\[
A^n = [0]
\]

and we can call the minimum value of $n$ for which $A^n$ is the zero matrix the index of the nilpotent matrix $A$.

It can be shown that the eigenvalues of a nilpotent matrix are always 0. When finding an eigenvalue $\lambda$,

\begin{align*}
A\vec{x} &= \lambda\vec{x}, \\
A^n\vec{x} &= \lambda^n\vec{x},
\end{align*}

and since $A^n$ is the zero matrix,

\[
A^n\vec{x} = \vec{0} \Rightarrow \lambda^n\vec{x} = \vec{0}.
\]

Because this will be true for any eigenvector $\vec{x}$, and an eigenvector is non-zero, we must have $\lambda = 0$.

The most obvious example of a nilpotent matrix is any strictly triangular matrix, with all zeros along the diagonal. These are clearly not the only type of matrices that are nilpotent. In fact it is not necessary that any entries in the matrix be zeroes, as we can construct examples such as

\[
\begin{bmatrix} 1 & 1 \\ -1 & -1 \end{bmatrix},
\qquad
\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ -2 & -2 & -2 \end{bmatrix},
\qquad
\begin{bmatrix} 1 & 1 & 1 & 1 \\ 2 & 2 & 2 & 2 \\ 3 & 3 & 3 & 3 \\ -6 & -6 & -6 & -6 \end{bmatrix},
\]

which are all nilpotent with index 2.
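The index of each example can be confirmed by repeated multiplication. This is a minimal sketch; the function name `nilpotency_index` is a hypothetical helper, and it relies on the fact that the index of a nilpotent $m \times m$ matrix is at most $m$.

```python
# Sketch: compute the nilpotency index of a square matrix by repeated
# multiplication, stopping after m powers for an m x m matrix.

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def nilpotency_index(M):
    """Return the smallest k with M^k = 0, or None if M is not nilpotent."""
    m = len(M)
    zero = [[0] * m for _ in range(m)]
    power = M
    for k in range(1, m + 1):
        if power == zero:
            return k
        power = matmul(power, M)
    return None

examples = [
    [[1, 1], [-1, -1]],
    [[1, 1, 1], [1, 1, 1], [-2, -2, -2]],
    [[1, 1, 1, 1], [2, 2, 2, 2], [3, 3, 3, 3], [-6, -6, -6, -6]],
]
indices = [nilpotency_index(M) for M in examples]
print(indices)
```

Each example has identical columns within a row and columns that sum to zero, which is why every product of the matrix with itself vanishes.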

We see from the examples that it should be possible to construct a nilpotent $m \times m$ matrix of index 2 for any $m \geq 2$. It would also be useful to show the maximum nilpotent index that could exist for a given size $m$. Let $A$ be a nilpotent $m \times m$ matrix of index $k$. $A^{k-1}$ is then not the zero matrix, so we can say there exists a vector $\vec{x}$ such that $A^{k-1}\vec{x}$ is non-zero. It can then be seen that the $k$ non-zero vectors

\[
\vec{x}, A\vec{x}, A^2\vec{x}, \ldots, A^{k-1}\vec{x}
\]

form a linearly independent set in $\mathbb{R}^m$, which would imply $k \leq m$. To wit, if we have scalar values $c_0, c_1, \ldots, c_{k-1}$ which satisfy

\[
c_0\vec{x} + c_1 A\vec{x} + \cdots + c_{k-2}A^{k-2}\vec{x} + c_{k-1}A^{k-1}\vec{x} = \vec{0},
\]

then there will also be $k - 1$ equations

\begin{align*}
c_0 A\vec{x} + c_1 A^2\vec{x} + \cdots + c_{k-2}A^{k-1}\vec{x} + c_{k-1}A^{k}\vec{x} &= \vec{0}, \\
c_0 A^2\vec{x} + c_1 A^3\vec{x} + \cdots + c_{k-2}A^{k}\vec{x} + c_{k-1}A^{k+1}\vec{x} &= \vec{0}, \\
&\ \ \vdots \\
c_0 A^{k-2}\vec{x} + c_1 A^{k-1}\vec{x} + \cdots + c_{k-2}A^{2k-4}\vec{x} + c_{k-1}A^{2k-3}\vec{x} &= \vec{0}, \\
c_0 A^{k-1}\vec{x} + c_1 A^{k}\vec{x} + \cdots + c_{k-2}A^{2k-3}\vec{x} + c_{k-1}A^{2k-2}\vec{x} &= \vec{0}.
\end{align*}

Since $A^k$ and all higher powers of $A$ are the zero matrix, the last equation above says $c_0 A^{k-1}\vec{x} = \vec{0}$, implying that $c_0 = 0$. Then, the previous equation $c_0 A^{k-2}\vec{x} + c_1 A^{k-1}\vec{x} = \vec{0}$ implies $c_1 = 0$. Continuing in this manner through each equation, we find that $c_0, c_1, \ldots, c_{k-1}$ are all zero, which establishes linear independence of the vectors above [30]. Thus, we have shown that for a nilpotent $m \times m$ matrix, the index $k \leq m$.

We can more easily see some properties of a nilpotent matrix by considering its Jordan canonical form. Since the Jordan canonical form of a nilpotent matrix always has zeros on the main diagonal, and ones or zeros on the superdiagonal, the only non-trivial Jordan canonical form of a $2 \times 2$ nilpotent matrix is

\[
A = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}.
\]

For nilpotent $3 \times 3$ matrices, we can have index 2 ($B_2^2 = 0$) or index 3 ($B_3^3 = 0$).

\[
B_3 = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix},
\qquad
B_2 = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}.
\]

And for nilpotent $4 \times 4$ matrices, we can have index 2 ($C_2^2 = 0$), index 3 ($C_3^3 = 0$), or index 4 ($C_4^4 = 0$).

\[
C_4 = \begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix},
\qquad
C_3 = \begin{bmatrix} 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix},
\qquad
C_2 = \begin{bmatrix} 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix}.
\]

By this representation, it seems clear that the index of the nilpotent matrix is related to the number of Jordan blocks, and hence to the dimension of the eigenspace of 0, i.e. the null space. From the $4 \times 4$ examples above, we note that $C_4$ has a nullspace of dimension 1, generated by $\vec{x}_1 = [1, 0, 0, 0]$; $C_3$ has a nullspace generated by $\vec{x}_1 = [1, 0, 0, 0]$ and $\vec{x}_2 = [0, 1, 0, 0]$; and $C_2$ has a nullspace generated by $\vec{x}_1 = [1, 0, 0, 0]$, $\vec{x}_2 = [0, 1, 0, 0]$, and $\vec{x}_3 = [0, 0, 1, 0]$.

We would also like to take a quick look at whether we can calculate roots of a nilpotent matrix. We start with the Jordan canonical form of a $2 \times 2$ nilpotent matrix

\[
A = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}.
\]

We want to find some matrix $A'$ where $A'^2 = A$:

\[
A'^2 = \begin{bmatrix} a & b \\ c & d \end{bmatrix}^2
= \begin{bmatrix} a^2 + bc & ab + bd \\ ca + dc & cb + d^2 \end{bmatrix}
= \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}.
\]

So, we have the series of equations

\begin{align*}
a^2 + bc &= 0, \\
ab + bd &= 1 \Rightarrow abc = c - bcd, \\
ca + dc &= 0 \Rightarrow abc = -bcd, \\
cb + d^2 &= 0.
\end{align*}

Thus,

\begin{align*}
c &= 0, \\
a^2 &= 0, \quad a = 0, \\
d^2 &= 0, \quad d = 0,
\end{align*}

which gives $ab + bd = 0$, contradicting $ab + bd = 1$. Thus, we see there is no solution to this system of four equations with four unknowns, and thus there are no square roots of a non-zero $2 \times 2$ nilpotent matrix.

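We next look at the Jordan canonical forms of a $3 \times 3$ nilpotent matrix

\[
A = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}
\quad \text{or} \quad
A = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix},
\]

where we want to find some matrix $A'$ where $A'^2 = A$ or $A'^3 = A$. Solving the resulting systems of nine equations with nine unknowns (for example with the Mathematica software) shows that the first matrix, of index 3, has no square roots or cube roots, and that the second matrix has no cube roots. Any such root $A'$ would itself be a nilpotent $3 \times 3$ matrix, so $A'^3 = [0]$. The second matrix, of index 2, does however have square roots, for example

\[
\begin{bmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}^2
= \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}.
\]

Looking further at the $4 \times 4$ nilpotent matrices, we notice that for the matrix

\[
A = \begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix},
\]

since $AAAA = (AA)(AA) = [0]$, we can consider the matrix $B = AA$, where $B^2 = [0]$. Thus, the matrix $B$ is nilpotent, and the matrix $A$ is considered to be a square root of the matrix $B$. This can be generalized based on the associative property of matrix multiplication for larger matrices as well.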
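The grouping argument can be illustrated with shift matrices, which are nilpotent of full index. The following is a small sketch; the helpers `shift_matrix`, `matpow`, and `nilpotency_index` are hypothetical names, and the sizes 4 and 6 are arbitrary choices for the example.

```python
# Sketch: for the m x m shift matrix A (index m) and m = p * n,
# the power A^n is nilpotent of index p, so A is an nth root of A^n.

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matpow(M, n):
    """Raise a square matrix to a non-negative integer power."""
    size = len(M)
    result = [[1 if i == j else 0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = matmul(result, M)
    return result

def shift_matrix(m):
    """m x m matrix with ones on the superdiagonal and zeros elsewhere."""
    return [[1 if j == i + 1 else 0 for j in range(m)] for i in range(m)]

def nilpotency_index(M):
    """Return the smallest k with M^k = 0, or None if M is not nilpotent."""
    m = len(M)
    zero = [[0] * m for _ in range(m)]
    power = M
    for k in range(1, m + 1):
        if power == zero:
            return k
        power = matmul(power, M)
    return None

A4 = shift_matrix(4)
B = matpow(A4, 2)                 # B = AA, so A4 is a square root of B
A6 = shift_matrix(6)
print(nilpotency_index(A4), nilpotency_index(B))
print(nilpotency_index(matpow(A6, 2)), nilpotency_index(matpow(A6, 3)))
```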

Theorem 4.6.1.

Let $A$ be a nilpotent $m \times m$ matrix of index $m$, and let $p, n \in \mathbb{Z}$, where $p, n > 1$, such that $pn = m$. Then, there exists some nilpotent $m \times m$ matrix $A'$ of index $p$ where $A^n = A'$. Thus, we can say that $A$ is an $n$th root of $A'$.

4.7

Existence of Roots

We have already noted that there seems to be a relation between the dimension of the nullspace of a nilpotent matrix and its index. In fact, relations between dimensions of nullspaces can be used to determine the existence of $n$th roots. We utilize the ascent sequence of a matrix $A$, which is the sequence

\[
d_i = \dim \operatorname{Null}\left(A^i\right) - \dim \operatorname{Null}\left(A^{i-1}\right); \quad i = 1, 2, \ldots.
\]

Psarrakos [36] shows the following:

Theorem 4.7.1 (Psarrakos).

Given a complex $m \times m$ matrix $A \in \mathbb{C}^{m\times m}$, $A$ has an $n$th root if and only if for every integer $\nu \geq 0$, the ascent sequence of $A$ has no more than one element strictly between $n\nu$ and $n(\nu + 1)$.

and a corollary,

Corollary 4.7.2 (Psarrakos).

Let $d_1, d_2, d_3, \ldots$ be the ascent sequence of a singular complex matrix $A$.

(i) If $d_2 = 0$, then for every integer $n > 1$, $A$ has an $n$th root.

(ii) If $d_2 > 0$, then for every integer $n > d_1$, $A$ has no $n$th roots.

Now that we see a method for knowing if $n$th roots exist, we look for methods which might provide us with multiple roots, should they exist.
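The ascent sequence criterion can be turned into a short test for small matrices. The sketch below is one possible implementation under stated assumptions: it uses exact rational Gaussian elimination to obtain ranks, it reads "between" in Theorem 4.7.1 as strictly between, and the function names `rank`, `ascent_sequence`, and `has_nth_root` are hypothetical helpers.

```python
# Sketch: ascent sequence d_i = nullity(A^i) - nullity(A^(i-1)) and the
# root-existence test of Theorem 4.7.1, reading "between" as strict.
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def rank(M):
    """Rank by Gaussian elimination over exact rationals."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

def ascent_sequence(A):
    """First m terms d_1..d_m for an m x m matrix; later terms are zero."""
    m = len(A)
    power = [[1 if i == j else 0 for j in range(m)] for i in range(m)]
    previous_nullity = 0
    sequence = []
    for _ in range(m):
        power = matmul(power, A)
        nullity = m - rank(power)
        sequence.append(nullity - previous_nullity)
        previous_nullity = nullity
    return sequence

def has_nth_root(A, n):
    """True if no window (n*nu, n*(nu+1)) holds more than one term."""
    sequence = ascent_sequence(A)
    for nu in range(max(sequence) // n + 1):
        inside = [d for d in sequence if n * nu < d < n * (nu + 1)]
        if len(inside) > 1:
            return False
    return True

J2 = [[0, 1], [0, 0]]
print(ascent_sequence(J2), has_nth_root(J2, 2))
```

For the $2 \times 2$ nilpotent Jordan block, both terms of the ascent sequence lie strictly between 0 and 2, which agrees with the earlier direct computation that it has no square root.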

Chapter 5

Hypercomplex Numbers

Knowing that it is possible to find multiple roots in the complex numbers and in the quaternions, it makes sense to next look for other number systems for which multiple roots can be found, where we might be able to find a ring isomorphism with the matrices. The area of hypercomplex numbers offers many number systems in which we can begin looking.

A basic definition for a hypercomplex number is a number having properties which depart from those of the real or complex numbers [45]. More precisely, a hypercomplex number system is a finite dimensional algebra over the reals which is unital and distributive, but not necessarily associative, with elements generated by real number coefficients $(a_0, a_1, \ldots, a_n)$ for some basis $1, i_0, i_1, \ldots, i_n$, where each basis is conventionally normalized such that $i_k^2 \in \{-1, 0, 1\}$ [28].

5.1

Multicomplex Numbers

The multicomplex numbers are a sequence of number systems $C_n$ defined inductively, where $C_0$ is taken to be the real number system, and $C_{n+1} = \{z = x + yi_{n+1} : x, y \in C_n\}$, where for each $n > 0$, $i_n$ is some square root of $-1$. A further requirement is that multiplication on the imaginary units must be commutative, such that for any $n \neq m$, $i_n i_m = i_m i_n$. Under this definition, $C_1$ is the complex number system, $C_2$ is the bicomplex number system, and $C_n$ is a multicomplex number system of order $n$ [35].

5.1.1

Bicomplex Numbers

The bicomplex numbers were developed by Corrado Segre [42], using two commuting square roots of $-1$, $h^2 = i^2 = -1$, whose product would then have the square $(hi)^2 = (ih)^2 = 1$. The algebra also contains idempotent values

\[
g = \frac{1 + hi}{2}, \qquad g^* = \frac{1 - hi}{2}.
\]

5.1.2

Tessarines

The tessarines were first described by James Cockle [9, 10, 11, 12, 13, 14] as an algebra similar to the quaternions, but which have the form

\[
t = w + x\alpha + y\beta + z\gamma,
\]

where $w, x, y, z \in \mathbb{R}$ and

\[
\alpha^2 = -1, \qquad \beta^2 = +1, \qquad \alpha\beta = \beta\alpha = \gamma, \qquad \gamma^2 = -1.
\]

A tessarine $t = w + xi + yj + zk$ (using more modern notation for complex numbers: $i = \alpha$, $j = \beta$, $k = \gamma$, with $\alpha, \beta, \gamma$ as listed above) can also be written in terms of two complex numbers, $t = (w + xi) + (y + zi)j$, since $ij = k$, so we can write $t = a + bj$, $a, b \in \mathbb{C}$.

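We can use this representation to show a mapping into the $2 \times 2$ complex matrices $f : T \to \mathbb{C}^{2\times 2}$,

\[
t = a + bj \mapsto \begin{bmatrix} a & b \\ b & a \end{bmatrix}
\]

and

\[
s = c + dj \mapsto \begin{bmatrix} c & d \\ d & c \end{bmatrix},
\]

so we see

\[
t + s = (a + c) + (b + d)j \mapsto
\begin{bmatrix} a + c & b + d \\ b + d & a + c \end{bmatrix}
= \begin{bmatrix} a & b \\ b & a \end{bmatrix} + \begin{bmatrix} c & d \\ d & c \end{bmatrix}
\]

and

\[
ts = (ac + bd) + (ad + bc)j \mapsto
\begin{bmatrix} ac + bd & bc + ad \\ bc + ad & ac + bd \end{bmatrix}
= \begin{bmatrix} a & b \\ b & a \end{bmatrix}\begin{bmatrix} c & d \\ d & c \end{bmatrix}.
\]

We can easily see that for any complex $2 \times 2$ matrix of the appropriate form,

\[
\begin{bmatrix} a & b \\ b & a \end{bmatrix},
\]

there will exist some $t \in T$, $t = a + bj$. So, the inverse mapping $f' : \mathbb{C}^{2\times 2} \to T$ is defined on matrices of this form for all $a, b \in \mathbb{C}$, and we can see that this mapping is an isomorphism between the tessarines and the matrices of this form.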
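The sum and product identities for the tessarine mapping can be spot-checked numerically. This is a minimal sketch, assuming a tessarine $t = a + bj$ is stored as a pair of Python complex numbers `(a, b)`; the sample values and the helper names `tess_mul` and `tess_matrix` are illustrative assumptions.

```python
# Sketch: the map a + bj -> [[a, b], [b, a]] respects tessarine products.
# A tessarine is stored as a pair (a, b) of complex numbers, with j*j = +1.

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def tess_mul(t, s):
    """(a + bj)(c + dj) = (ac + bd) + (ad + bc)j."""
    a, b = t
    c, d = s
    return (a * c + b * d, a * d + b * c)

def tess_matrix(t):
    """Matrix form [[a, b], [b, a]] of the tessarine a + bj."""
    a, b = t
    return [[a, b], [b, a]]

t = (1 + 2j, 3 - 1j)      # sample values chosen for illustration
s = (2 - 1j, -1 + 4j)

product_then_map = tess_matrix(tess_mul(t, s))
map_then_product = matmul(tess_matrix(t), tess_matrix(s))
print(product_then_map == map_then_product)
```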

5.2

Split-Complex Numbers

We can examine the non-real root of $+1$ seen above in the tessarines and bicomplex numbers apart from the usual complex unit by examining the quantity $z = a + bj$, $j^2 = +1$. First examined by Cockle [14], these are often referred to currently as split-complex numbers [38] or hyperbolic numbers [43]. Much as seen earlier in the bicomplex numbers, the split-complex numbers will have idempotent values

\[
z = \frac{1 + j}{2}, \qquad z^* = \frac{1 - j}{2}.
\]

The split complex numbers also contain zero divisors. We can find them by setting the product of any two split complex numbers $z_1 = a_1 + b_1 j$, $z_2 = a_2 + b_2 j$ to zero:

\begin{align*}
z_1 z_2 &= (a_1 + b_1 j)(a_2 + b_2 j) \\
&= a_1 a_2 + a_1 b_2 j + a_2 b_1 j + b_1 b_2 \\
&= (a_1 a_2 + b_1 b_2) + (a_1 b_2 + a_2 b_1)j \\
&= 0.
\end{align*}

Setting the real and imaginary parts both to zero, we have:

\begin{align*}
a_1 a_2 + b_1 b_2 &= 0, \\
a_1 b_2 + a_2 b_1 &= 0.
\end{align*}

So

\[
a_1 = \frac{-b_1 b_2}{a_2} \quad \text{and} \quad a_1 = \frac{-a_2 b_1}{b_2}
\]

if $a_2, b_2 \neq 0$. Thus

\begin{align*}
\frac{-b_1 b_2}{a_2} &= \frac{-a_2 b_1}{b_2}, \\
-b_1 b_2^2 &= -a_2^2 b_1, \\
b_2^2 &= a_2^2,
\end{align*}

where the last step assumes $b_1 \neq 0$. So we see that any non-zero split complex number $z = a + bj$ where $a = \pm b$ is a zero divisor; for example, $(1 + j)(1 - j) = 1 - j^2 = 0$.
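The zero divisors and idempotents of the split complex numbers can be checked with a few lines of arithmetic. The sketch below assumes a split complex number $a + bj$ is stored as a pair `(a, b)`; the function name `sc_mul` and the sample values are illustrative assumptions.

```python
# Sketch: split complex multiplication with j*j = +1, stored as pairs (a, b).

def sc_mul(z1, z2):
    """(a1 + b1 j)(a2 + b2 j) = (a1 a2 + b1 b2) + (a1 b2 + a2 b1) j."""
    a1, b1 = z1
    a2, b2 = z2
    return (a1 * a2 + b1 * b2, a1 * b2 + a2 * b1)

zero_product = sc_mul((1, 1), (1, -1))     # (1 + j)(1 - j)
scaled_product = sc_mul((3, 3), (2, -2))   # (3 + 3j)(2 - 2j)
idempotent = (0.5, 0.5)                    # (1 + j) / 2
idempotent_squared = sc_mul(idempotent, idempotent)
print(zero_product, scaled_product, idempotent_squared)
```

Numbers with $a = b$ annihilate numbers with $a = -b$, which matches the condition $a_2^2 = b_2^2$ derived above.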

In the $2 \times 2$ matrix space, there are a few different matrices that can be used to represent a non-real root of $+1$. Looking at diagonal matrices, we can see that a matrix that has 1 and $-1$ on the diagonal will have a square that contains all 1's on the diagonal, and so is the image of the real $+1$.

\[
\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}^2
= \begin{bmatrix} -1 & 0 \\ 0 & 1 \end{bmatrix}^2
= \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
\]

Another matrix which squares to a diagonal matrix of ones is an anti-diagonal matrix of ones:

\[
\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}^2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
\]

Since this representation is orthogonal to the representation of a real number, we can use this in mapping from the split complex numbers into the real $2 \times 2$ matrix space. If $a, b \in \mathbb{R}$,

\[
z = a + bj \mapsto \begin{bmatrix} a & b \\ b & a \end{bmatrix}.
\]

5.3

Dual Numbers

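As with the complex numbers ($z = a + bi$, $i^2 = -1$) and the split-complex numbers ($z = a + bj$, $j^2 = +1$), it is possible to define a number $z = a + b\epsilon$ where $\epsilon$ is nilpotent, $\epsilon^2 = 0$ [6]. In the $2 \times 2$ matrix space, there are several matrices which could represent $\epsilon$, namely any nilpotent matrix. The easiest example is any strictly triangular matrix, where all values on the diagonal are 0.

\[
\begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}^2
= \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix}^2
= \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}.
\]

Then the mapping

\[
z = a + b\epsilon \mapsto \begin{bmatrix} a & b \\ 0 & a \end{bmatrix}
\]

is an isomorphism.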
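The dual number mapping can be checked in the same way as the earlier mappings. This is a minimal sketch, assuming a dual number $a + b\epsilon$ is stored as a pair `(a, b)`; the helper names and sample values are illustrative assumptions.

```python
# Sketch: dual numbers a + b*eps with eps*eps = 0, stored as pairs (a, b),
# and their matrix form [[a, b], [0, a]].

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def dual_mul(z1, z2):
    """(a + b eps)(c + d eps) = ac + (ad + bc) eps, since eps^2 = 0."""
    a, b = z1
    c, d = z2
    return (a * c, a * d + b * c)

def dual_matrix(z):
    """Matrix form [[a, b], [0, a]] of the dual number a + b eps."""
    a, b = z
    return [[a, b], [0, a]]

z1, z2 = (2, 3), (5, -1)   # sample values chosen for illustration
product_then_map = dual_matrix(dual_mul(z1, z2))
map_then_product = matmul(dual_matrix(z1), dual_matrix(z2))
eps_squared = dual_mul((0, 1), (0, 1))
print(product_then_map == map_then_product, eps_squared)
```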

5.4

Split-Quaternions

The split-quaternions, which were first described as the coquaternions by James Cockle [10], are numbers of the form $q = w + xi + yj + zk$ where

\begin{align*}
&ij = k = -ji, \quad jk = -i = -kj, \quad ki = j = -ik, \quad ijk = 1, \\
&i^2 = -1, \quad j^2 = +1, \quad k^2 = +1.
\end{align*}

A split quaternion has conjugate $\bar{q} = w - xi - yj - zk$, and multiplicative modulus $q\bar{q} = w^2 + x^2 - y^2 - z^2$. A split quaternion $q$ will have a multiplicative inverse

\[
q^{-1} = \frac{\bar{q}}{q\bar{q}},
\]

such that $q * q^{-1} = q^{-1} * q = 1$, which exists when the multiplicative modulus $q\bar{q} \neq 0$.

We can also write a split quaternion in terms of two complex numbers $a = w + xi$, $b = y + zi$, so $q = a + bj$. When written this way, we can define a mapping to the $2 \times 2$ complex matrix space

\[
q = a + bj \mapsto \begin{bmatrix} a & b \\ \bar{b} & \bar{a} \end{bmatrix},
\]

where $a, b \in \mathbb{C}$, and $\bar{a}, \bar{b}$ are the complex conjugates of $a$ and $b$. Written out explicitly, this gives

\[
q = (w + xi) + (y + zi)j \mapsto \begin{bmatrix} w + xi & y + zi \\ y - zi & w - xi \end{bmatrix}.
\]

Recall now, however, the real $2 \times 2$ matrix representations of each non-real component, where we have one real matrix which can represent a root of $-1$, and two that can represent a non-real root of $+1$. We then consider the following mappings

\[
i \mapsto \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix},
\qquad
j \mapsto \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix},
\qquad
k \mapsto \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix},
\]

and see that

\[
\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}
= \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix},
\]

noting that this corresponds to $jk = -i$ as seen in the coquaternions. We further note that these matrices together with the identity matrix form a basis for the $2 \times 2$ real matrices. Then for any arbitrary real $2 \times 2$ matrix, with $a, b, c, d \in \mathbb{R}$, we have the mapping

\[
\begin{bmatrix} a & b \\ c & d \end{bmatrix}
\mapsto q = \frac{(a + d)}{2} + \frac{(b - c)}{2}i + \frac{(b + c)}{2}j + \frac{(a - d)}{2}k
\]

which forms a ring isomorphism [29].
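The defining relations of the split quaternions and the mapping to real $2 \times 2$ matrices can be checked directly on the three basis matrices. The following is a sketch; the names `I_M`, `J_M`, `K_M`, `to_matrix`, and `from_matrix` are hypothetical, and the sample matrix is an arbitrary choice.

```python
# Sketch: real 2x2 matrices for the split-quaternion units i, j, k, and the
# conversion between a real 2x2 matrix and coefficients (w, x, y, z).

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def negate(M):
    """Entrywise negation of a matrix."""
    return [[-v for v in row] for row in M]

E = [[1, 0], [0, 1]]       # image of 1
I_M = [[0, 1], [-1, 0]]    # image of i
J_M = [[0, 1], [1, 0]]     # image of j
K_M = [[1, 0], [0, -1]]    # image of k

def to_matrix(w, x, y, z):
    """Matrix w*E + x*I_M + y*J_M + z*K_M."""
    return [[w + z, x + y], [y - x, w - z]]

def from_matrix(M):
    """Coefficients (w, x, y, z) of the split quaternion for [[a, b], [c, d]]."""
    (a, b), (c, d) = M
    return ((a + d) / 2, (b - c) / 2, (b + c) / 2, (a - d) / 2)

relations_hold = (
    matmul(I_M, I_M) == negate(E)
    and matmul(J_M, J_M) == E
    and matmul(K_M, K_M) == E
    and matmul(I_M, J_M) == K_M
    and matmul(J_M, K_M) == negate(I_M)
    and matmul(K_M, I_M) == J_M
)
sample = [[4, 7], [1, -2]]   # arbitrary real matrix for the round trip
round_trip = to_matrix(*from_matrix(sample))
print(relations_hold, from_matrix(sample), round_trip)
```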

5.4.1

Roots of Split-Quaternions

The split quaternions can also be represented in a polar form [33] which can be used to exhibit a version of the de Moivre formula and subsequently used to find roots [32]. The de Moivre formula for split quaternions is defined piecewise depending on the properties of a split quaternion $q = w + xi + yj + zk$. The split quaternion $q$ will have norm $N(q)$ defined as

\[
N(q) = \sqrt{\left|w^2 + x^2 - y^2 - z^2\right|}.
\]

Considering the multiplicative modulus $q\bar{q} = w^2 + x^2 - y^2 - z^2$, the split quaternion $q$ is called spacelike if $q\bar{q} < 0$, timelike if $q\bar{q} > 0$, and lightlike if $q\bar{q} = 0$, with these labels stemming from how these quantities are used in physics. Note that under these labels, spacelike and timelike split quaternions will have multiplicative inverses, but lightlike quaternions will have no inverses.

Further characterization of a split quaternion is taken by splitting it into its scalar part $S_q = w$, and its vector part $\vec{V}_q = xi + yj + zk$, where the vector part is identified with the Minkowski 3-space. The Lorentzian inner product of the vector part with itself, $-x^2 + y^2 + z^2$, is called spacelike if