Biology 458 Biometry

Lab 5 - Risk Analysis, Robustness, and Power

I. Risk Analysis

The process of statistical hypothesis testing involves estimating the probability of

making errors when, after the examination of quantitative data, we conclude that the

tested null hypothesis is false or not false. These 'errors' can be thought of as the "risks"

of making particular kinds of mistakes when basing a decision on the examination of

quantitative data. To determine or estimate 'risks' (the probability of Type I and Type II

errors), we follow a formal hypothesis testing procedure in which we ensure or assume

that our data meet certain conditions (e.g., independence, normality, and homogeneity

of variances for parametric tests and independence and continuity for non-parametric

tests). We state a decision rule prior to applying our test which amounts to specifying

α, the significance level for our test, but also is synonymous with setting our Type I error

rate and defining our region of rejection (i.e., the values of the test statistic for which we

will reject the null hypothesis). Since we control or specify our Type I error rate prior to

performing a test, if we meet the assumptions of the test, then the actual frequency of

Type I errors we would make, if we repeated our experiment many times under the

same conditions, will equal the nominal rate we specify.

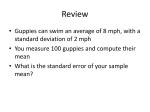

For example, the histogram below represents 20,000, 2-sample t - tests computed from

data that were derived by sampling 5 subjects from each of two underlying

populations with exactly identical means (9.0) and variances (2.0). Note that this

histogram is the sampling distribution of the two-sample t - statistic for samples of size

n1 = n2 = 5, and that the distribution has a mean suspiciously close to 0, and a variance

suspiciously close to 1.0.

If our t - test is working well and we perform an upper one-tailed test with α = 0.05, then

5% of our t - values would fall in the region of rejection even when the null hypothesis is

exactly true! In the example above, 20 sets of 1000 t - tests were conducted and one

would expect 5% x 1000 = 50 tests to be significant at the 5% level. In fact the

average number of Type I errors (significant t - tests even when the null hypothesis was

true) was 50.8 ± 2.6359 (se).
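The simulation described above can be sketched in a few lines of R. This is a minimal sketch, not the code that produced the histogram: the seed, the variable names, and the use of one run of 20,000 tests (rather than 20 sets of 1000) are assumptions made for illustration.

```r
# Sketch: Type I error rate of an upper one-tailed, pooled-variance
# two-sample t - test when both populations are normal with
# mean 9.0 and variance 2.0 (so the null hypothesis is exactly true)
set.seed(458)      # arbitrary seed (assumption) so the run is reproducible
nsims <- 20000     # number of simulated experiments
n <- 5             # subjects sampled from each population
alpha <- 0.05

# one t - statistic per simulated experiment
tvals <- replicate(nsims, {
  x1 <- rnorm(n, mean = 9, sd = sqrt(2))
  x2 <- rnorm(n, mean = 9, sd = sqrt(2))
  unname(t.test(x1, x2, var.equal = TRUE)$statistic)
})

# critical value for an upper one-tailed test with n1 + n2 - 2 df
tcrit <- qt(1 - alpha, df = 2 * n - 2)

# proportion of tests that reject the (true) null hypothesis
type1 <- mean(tvals > tcrit)
type1

# the simulated t - values should be centered near 0
mean(tvals)
```

If the test is behaving as advertised, type1 should land close to the nominal 0.05, apart from simulation noise.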

II. Robustness

In many cases, we may be in error when we assume that our data follow a particular

distribution or when we claim that the variances in our treatment groups are equal.

When this situation arises, the distribution of the statistic that we use in testing our

hypothesis may differ from that expected when the assumptions of the test are met. For

example, if we use a t - test to compare a sample estimate of the mean to a theoretical

value, the test statistic does not have an exact t - distribution if the population of values

of our random variable, x, is not normally distributed. Similarly, if we are performing a

separate groups t - test and assume that the treatment group variances are equal when

in fact they are not, our estimates of the probability of making Type I and Type II errors

will be distorted.

Therefore, the consequence of not meeting the assumptions of normality and

homogeneity of variances is that our estimates of the probability of making Type I or

Type II errors will be distorted. For instance, we may conclude from a t - test that the

chance we are making a Type I error when we reject the null hypothesis is 5%. But, if x

is not a normally distributed random variable, the true probability of making a Type I

error might be more than 5% because the test statistic does not follow exactly the t - distribution with the specified degrees of freedom.

The ability of a specific statistical hypothesis test to provide accurate estimates of the

probability of Type I and Type II errors, even when the underlying assumptions are

violated, is called robustness. Some hypothesis tests are more robust to deviations

from certain underlying assumptions than others. The type and magnitude of the

deviation of the data from the assumptions required by a test is often important in

choosing the appropriate statistical test to apply. Tests of hypotheses are used in many

situations where the underlying assumptions are violated. Therefore, robustness is a

desirable property.

The following table summarizes the results of a large number of computer simulations to

determine the consequences of violating the assumptions of normality and equality of

variances in the two sample t - test. For each simulation, samples were drawn from two

underlying populations with exactly equal means and either equal or unequal variances.

Since the population means were known to be equal, anytime we reject the null

hypothesis we are committing a Type I error. The values given in this table are the

number of Type I errors (out of 1000 replicate simulation runs) when the null hypothesis

was tested versus an upper 1 - tailed alternative hypothesis. At an α = 0.05 level, we

would expect 0.05 X 1000 = 50 Type I errors in each instance, if the test was performing

according to expectations. Since 20 replicate runs of 1000 simulations were performed

for each table entry, the standard error of each entry is also presented. Values that are

substantially lower than 50 or greater than 50 indicate that the test is actually being

performed at an α level other than the nominal 5% we intended. In other words we

either make too many Type I errors (the test is too liberal) or too few (the test is too

conservative). The simulations were performed on populations that were either normally or

uniformly distributed with means equal to 9.0. In the case where variances were equal,

both variances were 4.0. For the case of unequal variances, var1 = 4.0 and var2 = 16.0.

Assessment of the Robustness of the t - test to violations of the assumptions of Normality and Equality of Variances (values in table are number of Type I errors in 1000 trials ± se)

Columns A and B: equal variances (var1 = var2 = 4.0). Columns C and D: unequal variances (var1 = 4.0, var2 = 16.0), pooled variance estimate. Columns E and F: unequal variances (var1 = 4.0, var2 = 16.0), separate variance estimate.

| Sample sizes n1,n2 | A (normal) | B (uniform) | C (normal) | D (uniform) | E (normal) | F (uniform) |
|---|---|---|---|---|---|---|
| 5,5 | 49.5±1.4 | 50.6±1.5 | 54.2±2.1 | 71.1±2.1 | 48.8±1.8 | 61.1±1.8 |
| 20,20 | 48.6±1.7 | 50.4±1.5 | 51.8±1.3 | 80.7±1.9 | 46.7±1.5 | 84.6±2.0 |
| 100,100 | 53.1±1.5 | 52.6±1.3 | 50.4±1.5 | 138.9±2.3 | 51.1±1.8 | 141.3±2.9 |
| 5,20 | 53.4±2.0 | 51.5±1.6 | 10.0±0.8 | 15.8±0.9 | 47.4±1.9 | 71.35±1.7 |
| 20,100 | 52.4±1.4 | 51.3±1.7 | 6.7±0.7 | 19.7±0.9 | 50.4±1.6 | 106.7±2.9 |
| 5,100 | 49.4±1.9 | 50.4±1.4 | 0.90±0.2 | 2.9±0.4 | 49.3±1.3 | 78.9±2.1 |
| 20,5 | - | - | 131.4±2.0 | 163.9±2.5 | 46.9±1.9 | 65.3±1.8 |
| 100,20 | - | - | 144.4±2.2 | 203.9±2.6 | 53.6±1.9 | 81.8±1.6 |
| 100,5 | - | - | 185.9±2.7 | 227.7±2.3 | 44.9±1.4 | 63.0±2.0 |

From this table we see that when variances are equal, the t - test performed well

regardless of whether the population was normally distributed or the sample sizes were

equal (columns A and B). However, when the variances are unequal the test only

performs well if the population is normally distributed and sample sizes are equal (first

three rows in column C). If we use the Satterthwaite correction with unequal variances,

then the t - test also performs well for unequal variances whether or not sample sizes

are equal (data column E). In virtually all instances, the t - test does not perform well

when the underlying population is non-normal and variances are unequal (data column

F).
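One cell of this kind of robustness study can be approximated with a short simulation. The sketch below is not the original simulation code: it assumes normal populations, n1 = 20 with variance 4 and n2 = 5 with variance 16, a single run of 5000 replicates, and an arbitrary seed. It compares the pooled variance t - test with the separate variance (Satterthwaite/Welch) version as implemented by var.equal in R's t.test.

```r
# Sketch: Type I error of pooled vs separate-variance t - tests when
# variances are unequal and the smaller sample has the larger variance
set.seed(458)        # arbitrary seed (assumption)
nsims <- 5000        # replicate experiments (assumption)
n1 <- 20; n2 <- 5    # unequal sample sizes
sd1 <- 2; sd2 <- 4   # var1 = 4.0, var2 = 16.0
alpha <- 0.05

# each replicate returns two logical values: did each test reject?
reject <- replicate(nsims, {
  x1 <- rnorm(n1, mean = 9, sd = sd1)
  x2 <- rnorm(n2, mean = 9, sd = sd2)
  c(pooled = t.test(x1, x2, alternative = "greater",
                    var.equal = TRUE)$p.value <= alpha,
    welch  = t.test(x1, x2, alternative = "greater",
                    var.equal = FALSE)$p.value <= alpha)
})

# estimated Type I error rates (population means are equal by construction)
rates <- rowMeans(reject)

# expressed per 1000 trials, the scale used in the table
rates * 1000
```

Under these assumptions the pooled test should reject far more often than the nominal 50 per 1000, while the separate variance test should stay near 50.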

III. Power

Power is a measure of the ability of a statistical test to detect an experimental effect that

is actually present. Power is an estimate of the probability of rejecting the null

hypothesis (Ho) if a specified alternative hypothesis (Ha) is actually correct. Power is

equal to one minus the probability of making a Type II error, β, (failing to reject Ho when

it is false): power = 1 - β; thus, the smaller the Type II error, the greater the power and,

therefore, the greater the sensitivity of the test. The level of power will depend on

several factors: 1) the magnitude of the difference between Ho and Ha, which is also

called the "effect size," 2) the amount of variability in the underlying population(s) (the

variances), 3) the sample size, n, and 4) the level of significance chosen for the test, α

or the probability of a Type I error. For more information on statistical power analysis

see the supplemental lecture notes on power and more about power analysis.

As long as we are committed to making decisions in the face of incomplete knowledge,

as every scientist is, we cannot avoid making Type I and Type II errors. We can,

however, try to minimize the chances of making them. We directly control the probability

of making a Type I error by our selection of α, the significance level of our test. By

setting a region of rejection, we are taking a risk that a certain proportion of the time (for

example 5%, when α = 0.05) we will obtain values of our test statistic that would lead us

to reject the null hypothesis (fall in the region of rejection) even when the null hypothesis

is true.

How can we reduce the probability of making a Type II error? One obvious way is to

increase the size of the region of rejection. In other words, increase α. Of course, we do

so at the cost of an increased probability of making Type I errors. Every researcher

must strike a balance between the two types of error. Other ways to reduce the

probability of making a Type II error are to increase the sample size or to reduce the

variability in the data. Increasing sample size is simple, but reducing variability in the

observations is also an important means to improve the power of tests. The process of

"experimental design" is one in which research effort is allocated to ensure the most

powerful test for the effort expended is actually conducted.
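The influence of each factor on power can be illustrated numerically. The sketch below uses base R's power.t.test for a one-sample, one-tailed test. The baseline values (n = 15, difference of 3, sd = 4.6) are borrowed from the worked example later in this lab; the altered values and the helper name pw are arbitrary choices made only for illustration.

```r
# hypothetical helper: power of a one-sample, one-tailed t - test
pw <- function(n = 15, delta = 3, sd = 4.6, alpha = 0.05) {
  power.t.test(n = n, delta = delta, sd = sd, sig.level = alpha,
               type = "one.sample", alternative = "one.sided")$power
}

# change one factor at a time relative to the baseline
res <- c(baseline      = pw(),
         larger_effect = pw(delta = 4),    # bigger difference between Ho and Ha
         less_variable = pw(sd = 3.5),     # smaller standard deviation
         larger_n      = pw(n = 25),       # more observations
         larger_alpha  = pw(alpha = 0.10)) # larger region of rejection
round(res, 3)
```

Each change should raise power relative to the baseline, but the last one does so only by accepting more Type I errors.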

The probability of making a Type II error and the power of a statistical test are more

difficult to determine because they require one to specify a quantitative alternative

hypothesis. However, if one can specify a reasonable alternative hypothesis, guides for

calculating the power of a test, or the sample size necessary to achieve a desired level

of power are now becoming more widely available. The most comprehensive book on

statistical power analysis is by Cohen (1977), but many web-based power calculators

and statistical packages now include functions to permit the determination of power and

sample size. Links to web-based power calculators for t - tests are given in the

table at the end of this lab. The program PS, a Windows based power calculator, can

also be downloaded from its website.

Calculating Power in R

In R there are four different ways to make power calculations: 1) using built in

functions for simple power calculations, 2) using the contributed package "pwr", 3) using

the non-central distribution functions, and 4) by simulation.

Built in R Functions for Power and Sample Size Calculation

There are 3 functions in the base R installation for power and sample size calculation:

power.t.test, power.anova.test, and power.prop.test. These functions will calculate

power for various t - tests, analysis of variance (ANOVA) designs, and for tests of

equality of proportions. For the purposes of this lab exercise and for illustrating the

general workings of these functions, I will focus on the power.t.test function.

The power.t.test function has several arguments

power.t.test(n = , delta = , sd = , sig.level = ,power = ,type =

"", alternative ="" , strict = FALSE)

where n is the sample size (the within group sample size for a 2 sample t-test), delta (δ)

is the effect size expressed as a difference in means, sd is the standard deviation,

sig.level is the α - value for the test, power is the desired power, which is entered only

when you are calculating sample size, in which case n = NULL or is left out, type has

three possible levels given below, alternative has two possible levels given below, and

strict determines whether both tails of the non-central distribution are

included in the power calculation for a two-sided test (TRUE) or not (FALSE).

type =("two.sample", "one.sample", "paired"),

alternative = ("two.sided", "one.sided")

strict = (FALSE , TRUE)

Suppose we have a preliminary estimate of σ = 4.6 and wanted to determine the power

of a one sample t - test relative to the alternative hypothesis that µ exceeded the

hypothesized value by 3 units with a sample size of n =15.

# compute power of one sample t - test using built in function

power.t.test(n=15, delta=3, sd=4.6, sig.level=0.05, type="one.sample", alternative="one.sided")

##
##      One-sample t test power calculation
##
##               n = 15
##           delta = 3
##              sd = 4.6
##       sig.level = 0.05
##           power = 0.7752053
##     alternative = one.sided

Note that the output from R reiterates the conditions for which power is being calculated

and gives the estimate of power.
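The same function solves for sample size when power is supplied and n is left out. The sketch below reuses the one sample example; the target power of 0.90 is an assumed value chosen only for illustration, and the object names are hypothetical.

```r
# solve for n: supply the desired power and leave n out (n = NULL)
ss <- power.t.test(delta = 3, sd = 4.6, sig.level = 0.05, power = 0.90,
                   type = "one.sample", alternative = "one.sided")

# the solver returns a fractional n; round up to whole subjects
ss$n
n_needed <- ceiling(ss$n)
n_needed

# confirm that the rounded-up sample size meets the target power
achieved <- power.t.test(n = n_needed, delta = 3, sd = 4.6, sig.level = 0.05,
                         type = "one.sample", alternative = "one.sided")$power
achieved
```

Rounding up rather than to the nearest integer keeps the achieved power at or above the target.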

The 'pwr' package in R

The 'pwr' package in R implements the power calculations outlined by Jacob Cohen in

his book "Statistical Power Analysis for the Behavioral Sciences" (1988). Cohen's book

is about the only book length treatment that deals with power and sample size

calculation. The 'pwr' package has functions to compute power and sample size for a

wider array of statistical tests than the baseline functions in R. However, the drawback

to using the 'pwr' package is that its functions require you to express the "effect size" in

terms of effect size formulas that are given in Cohen's book, but are not available in the

documentation for the package. Alternatively, if you are willing to use Cohen's definition

of a "small," "medium," or "large" effect size, which he defined based on his knowledge

of the behavioral sciences, then an internal function will provide the necessary effect

size values for each test. In my experience, Cohen's effect sizes are not appropriate for

applications in ecology, and potentially more broadly in biology.

I will illustrate the functions for power and sample size calculation for the one sample t - test, and for a 2 - sample t - test with equal or unequal sample sizes in the 'pwr'

package.

The function for a one sample, paired, or 2 - sample t - test with equal sample sizes is:

pwr.t.test(n = , d = , sig.level = , power = ,type = ,

alternative = )

The potential levels of the arguments "type=" and "alternative=" are:

type = "two.sample", "one.sample", "paired",

alternative = "two.sided", "less", "greater"

# compute power for same one - sample t - test as above using pwr package

# attach pwr package

library(pwr)

# compute power

pwr.t.test(n = 15, d = (3/4.6), sig.level = 0.05, type = "one.sample", alternative = "greater")

##
##      One-sample t test power calculation
##
##               n = 15
##               d = 0.6521739
##       sig.level = 0.05
##           power = 0.7752053
##     alternative = greater

Note we get the same results as when we used the built in power.t.test function.

The function for power and sample size calculation for the t - test in the 'pwr' package is

very similar to the base function, but note that the options for the argument

"alternative=" differ.

In the case of a 2 - sample t - test with unequal sample sizes, the 'pwr' package offers

the pwr.t2n.test function. This function can also be used for equal sample sizes.

It has similar arguments, but requires that you specify both sample sizes and does

not have the "type=" argument.

pwr.t2n.test(n1 = , n2= , d = , sig.level =, power = ,

alternative = )

Suppose we have a control and treatment group and we know that the mean for the

control group is 12.4 and that the control group standard deviation is 8.7. What would

be the power of a one-tailed t - test performed at the α = 0.05 level versus the alternative

hypothesis that the treatment group mean was 25% higher than the control group mean

with control group sample size of 10 and treatment group sample size of 15? Since we

assume that variances are equal, Cohen's effect size is the difference in means

divided by the estimated standard deviation for the control group.

# specify control group mean and calculate treatment group mean expected

# under alternative hypothesis

m1=12.4

m2=1.25 * 12.4

# specify preliminary estimate of s

s=8.7

# calculate Cohen's effect size measure - delta

delta=abs(m1-m2)/s

delta

## [1] 0.3563218

# compute power of t - test

pwr.t2n.test(n1=10,n2=15,d=delta,sig.level=0.05,alternative="greater")

##
##      t test power calculation
##
##              n1 = 10
##              n2 = 15
##               d = 0.3563218
##       sig.level = 0.05
##           power = 0.2125491
##     alternative = greater

Using Non-Central Distributions for Power and Sample Size Analysis in R

Another approach to power and sample size calculation in R is based on using the

distribution functions available for the various test statistics such as the t, F, and χ²

distributions.

Recall that the distribution of the test statistic under the null hypothesis is said to be

centrally distributed about its expected value when the null hypothesis is true. For the t - statistic its expected value is 0, for F it is approximately 1, and for χ² it is the degrees of freedom of

the test. The distribution of the test statistic when a specific quantitative alternative

hypothesis is articulated is the corresponding non-central distribution, which is shifted away from

the null distribution by an amount set by what is called the "non-centrality parameter." The non-centrality parameter

is a measure of the "effect size." R has a set of functions for each of these distributions

that have an argument 'ncp=' which allows one to set the non-centrality parameter. For

a one -sample t - test or a paired t - test the non-centrality parameter (δ) is:

$$\delta = \frac{\bar{x}_a - \mu}{s / \sqrt{n}}$$

which looks like a t - statistic, but 𝑥̅𝑎 is the mean expected under the alternative

hypothesis, and µ is the mean expected under the null hypothesis. Now using the R

function pt(tcrit, df,ncp) one can calculate the Type II error or power of a test for a

specific sample size.

For example to calculate the power of a test, one must first determine the critical value

of t beyond which one would reject the null hypothesis. Clearly, if the null hypothesis is

rejected one cannot have made a Type II error. The critical value can be obtained with the

R function qt(p, df), which returns the t value at the p-th quantile of the t - distribution

(p = 1 - α for an upper one-tailed test).

We can obtain the critical value of t for the one tailed one sample t – test we performed

above using power.t.test and pwr.t.test:

# obtain critical t as the 95th percentile of the t - distribution with specific df

n=15

tcrit=qt(0.95,df=n-1)

tcrit

## [1] 1.76131

Now we can calculate power to detect that the mean under the alternative hypothesis

exceeds the null hypothesized mean by 3. We first calculate δ, assuming that our

preliminary estimate of s = 4.6, then we calculate the Type II error probability, and then

power.

# calculate non-centrality parameter as: (ma - mo)/(s/sqrt(n))

ncp=3/(4.6/sqrt(n))

# calculate Type II error

err2=pt(tcrit,df=14,ncp=ncp)

err2

## [1] 0.2247947

# calculate power

1-err2

## [1] 0.7752053

Note again that this estimate of power agrees with those we obtained using the

power.t.test and the pwr.t.test functions.

For a two-tailed test one must subtract from the Type II error calculation the lower

region of rejection as shown below.

# calculate 2 tailed version of same test

tcrit=qt(0.975,df=n-1)

tl=-1*tcrit

# calculate Type II error

err22=pt(tcrit,df=14,ncp=ncp)-pt(tl,df=14,ncp=ncp)

err22

## [1] 0.3481627

# calculate power

power=1-err22

power

## [1] 0.6518373

Note that power for the two-tailed test is lower than for the equivalent one-tailed since

more of the non-central t - distribution lies below the critical t - value (since the critical

upper t is associated with the 97.5th percentile rather than the 95th percentile of the t -

distribution). Removal of the lower tail of the non-central t - distribution beyond the lower

critical t - value (-t) has a very small effect on the estimated power.
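The size of that lower-tail contribution can be checked against power.t.test, whose strict argument controls whether the lower tail is counted in a two-sided calculation. The sketch below repeats the two-tailed calculation and compares it with both settings of strict; the object names are hypothetical.

```r
# two-tailed power from the non-central t - distribution (both tails counted)
n <- 15
ncp <- 3 / (4.6 / sqrt(n))
tcrit <- qt(0.975, df = n - 1)
manual <- 1 - (pt(tcrit, df = n - 1, ncp = ncp) -
               pt(-tcrit, df = n - 1, ncp = ncp))

# same test with the built in function, counting both tails (strict = TRUE)
strict_true <- power.t.test(n = n, delta = 3, sd = 4.6, sig.level = 0.05,
                            type = "one.sample", alternative = "two.sided",
                            strict = TRUE)$power

# default behaviour: only the tail in the direction of the effect
strict_false <- power.t.test(n = n, delta = 3, sd = 4.6, sig.level = 0.05,
                             type = "one.sample", alternative = "two.sided",
                             strict = FALSE)$power

c(manual = manual, strict_true = strict_true, strict_false = strict_false)

# contribution of the lower tail to the estimated power
strict_true - strict_false
```

The manual value should match the strict = TRUE result, and the difference between the two settings shows how little the lower tail adds here.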

A similar process can be followed for a two-sample t - test, but with a modified non-centrality parameter (δ):

$$\delta = \frac{\bar{x}_1 - \bar{x}_2}{S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

which should look like a t - statistic for a two sample test. Since one is almost always

performing power calculations prior to conducting the research, it is customary to

assume that the variances in the two groups will be equal since the purpose of making

the calculation is to plan the experiment to have adequate power for a reasonable

alternative hypothesis.
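As a sketch of the two-sample version, the control and treatment example used earlier with pwr.t2n.test can be reworked directly from the non-central t - distribution. The pooled standard deviation is assumed equal to the control group estimate (8.7), as in that example; the object names are hypothetical.

```r
# values from the control/treatment example
m1 <- 12.4          # control group mean
m2 <- 1.25 * 12.4   # treatment mean under the alternative (25% higher)
sp <- 8.7           # assumed pooled standard deviation
n1 <- 10
n2 <- 15
alpha <- 0.05

# degrees of freedom and non-centrality parameter for the two-sample test
df <- n1 + n2 - 2
ncp <- (m2 - m1) / (sp * sqrt(1 / n1 + 1 / n2))

# critical value for an upper one-tailed test
tcrit <- qt(1 - alpha, df = df)

# Type II error and power
err2 <- pt(tcrit, df = df, ncp = ncp)
pow2 <- 1 - err2
pow2
```

The result should agree closely with the power of 0.2125491 reported by pwr.t2n.test for the same inputs.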

Power and Sample Size Analysis by Simulation in R

When the study design becomes more complicated, it is easy to outstrip the capabilities

of R's built in functions for making power or sample size calculations. In that case, one

can calculate power or sample size by programming R to perform a computer

simulation.

Here I will use a simple study design to illustrate the simulation approach, but its

basic principles apply to more complicated designs.

Part I - Estimate Type I error rate

1. First simulate data under the null hypothesis

2. Perform the test you plan to use on the simulated data at your chosen α level.

3. Record whether the test applied was significant.

4. Repeat this process many times (step 1 to 3).

5. Tabulate how many simulations showed a significant effect. This number divided by

the number of simulations will estimate your Type I error. It should be close to your

nominal α level.

Part II - Estimate power

1. Now simulate data under the alternative hypothesis.

2. Perform the same test on the simulated data.

3. Record whether the test was significant or not at your chosen α level.

4. Repeat steps 1-3 many times.

5. Tabulate how many simulations showed a significant effect. This number divided by

the number of simulations will be your estimate of power - the proportion of the

simulations in which you rejected a false null hypothesis.

Below I illustrate code to calculate the power of the one sample t - test illustrated above.

Note that the estimated power of 0.7808 is very close to the estimates generated using

power.t.test, pwr.t.test, and the non-central t - distribution.

# From one-sample one-tailed t - test performed above

# set the number of simulations

#initialize counter variables

# set sample size n

numsims=10000

count=0

countp=0

n=15

# Simulate data under the null hypothesis as being normally distributed with

# mean = 0, and given values of standard deviation

for (i in 1:numsims){

dat=rnorm(n,0,4.6)

#perform the t - test

res=t.test(dat,mu=0,alternative="greater")

# check to see if t - test is significant at alpha =0.05 level and

# count how many times it is significant (count)

if(res$p.value<=0.05){count=count+1}

}

# calculate Type I error rate as number significant/number of simulations

type1=count/numsims

type1

## [1] 0.0522

# Simulate the data under the alternative hypothesis that the mean

# exceeds the null hypothesized value by 3 units

for (i in 1:numsims){

datn=rnorm(n,3,4.6)

res2=t.test(datn,mu=0,alternative="greater")

if(res2$p.value<=0.05){countp=countp+1}

}

# power is the number of times null hypothesis rejected/number of simulations

power=countp/numsims

power

## [1] 0.7808
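A simulated power estimate carries Monte Carlo error, so it helps to report a standard error alongside it. The sketch below wraps the same one sample simulation in a function and adds the binomial standard error of the estimate; the function name sim_power, its arguments, and the seed are assumptions introduced only for illustration.

```r
# hypothetical helper: simulated power of an upper one-tailed,
# one-sample t - test of Ho: mu = 0, with its Monte Carlo standard error
sim_power <- function(n, mu_alt, sd, alpha = 0.05, nsims = 10000) {
  # p - value from each simulated data set drawn under the alternative
  pvals <- replicate(nsims,
    t.test(rnorm(n, mu_alt, sd), mu = 0, alternative = "greater")$p.value)
  est <- mean(pvals <= alpha)
  c(power = est, mc_se = sqrt(est * (1 - est) / nsims))
}

set.seed(458)   # arbitrary seed (assumption) for reproducibility
res <- sim_power(n = 15, mu_alt = 3, sd = 4.6)
res
```

With 10,000 simulations the standard error is under half a percentage point, which is why simulated and analytical estimates can differ in the third decimal place.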

Power Curves

It is often useful to plot a curve that shows the estimated power as a function of sample

size, effect size, or sd. Commonly power is plotted versus sample size with power on

the y - axis and sample size on the x - axis. To generate a power curve in R, one could

use a few lines of R code:

# create a vector with the integers from 2 to 50

n=(2:50)

# determine the length of the vector (how many elements)

np=length(n)

# note the sample size n is vector values (has many values)

pwrt=power.t.test(n=n,delta=2,sd=2.82,type="one.sample",alternative="one.sided")

# extract values of power from object "pwrt" and plot graph

plot(n,pwrt$power, xlab="Sample Size", ylab="Power", type ="l")

A lot more can be done in R to make your plot look better.
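As one possible refinement, several curves can be drawn on the same axes to show how the required sample size depends on effect size. The sketch below keeps sd = 2.82 from the example above; the three effect sizes, the 80% reference line, and the object names are assumptions chosen only for illustration.

```r
# sample sizes to evaluate and hypothetical effect sizes to compare
n <- 2:50
deltas <- c(1, 2, 3)

# one column of power values per effect size
pw <- sapply(deltas, function(d)
  power.t.test(n = n, delta = d, sd = 2.82, type = "one.sample",
               alternative = "one.sided")$power)

# draw all curves at once, with a reference line at 80% power
matplot(n, pw, type = "l", lty = 1:3, col = 1, ylim = c(0, 1),
        xlab = "Sample Size", ylab = "Power")
abline(h = 0.8, lty = 3, col = "gray")
legend("bottomright", legend = paste("delta =", deltas), lty = 1:3, bty = "n")

# smallest sample size on the grid reaching 80% power when delta = 2
n80 <- n[which(pw[, 2] >= 0.8)[1]]
n80
```

Reading across the reference line shows how quickly the required sample size grows as the effect to be detected shrinks.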

IV. Exercises

Calculate power or sample size for the following tests: Use R, the program PS, or

another online power calculator (note PS gives power and sample size values assuming

a two tailed test, to get one - tailed results for a test at the α = 0.05 level, use an α =

0.1) to solve the following problems. Show your work (R syntax if you use R, or the

values you entered into PS or an online calculator), which calculator you used, and

interpret the results.

A. Given that the variance in arsenic concentration in drinking water is 8 ppb², what is

the power of a test based on 10 samples to determine if arsenic levels exceed the

public health standard of 5 ppb by 2 ppb? Assume that the test is performed at α = 0.05.

What sample size would be necessary to have α = β = 0.05? Plot a curve for power

versus sample size for this example.

B. An ecologist is contemplating a study on the effects of ice plant on seedling growth in

a native plant. Two treatments are anticipated: treatment 1 will examine growth of the

native plant at locations where ice plant has not previously grown, and treatment 2 will

examine growth of the native at locations from which ice plant has been removed. Given

a preliminary estimate that plants reach an average height of 35 cm and have a

variance of 20 cm², what sample size is necessary to detect a 25% reduction in height

caused by ice plant with power of 80%? (Assume that the test is to be performed at the

α = 0.05 level). Would it be better to use an independent groups t - test or a paired t - test? (Hint: using the same preliminary estimates, calculate the sample size required to

achieve the desired power for both a separate groups and a paired t - test). What

consequence would there be to the independent group t - test to using unequal sample

sizes? (Hint: use the Iowa calculator to answer this question.)

Perform the power and sample size calculations requested in parts A and B, and turn in

a brief write-up of your results.

Further Instructions on completing the Lab

Take note that the power calculator “PS” always assumes that you are performing a 2 - tailed test. Therefore, to perform a one-tailed test with α = 0.05, you need to enter α = 0.1 in the

appropriate box for α. Also, the parameter m in “PS” is the ratio of sample size in the

two treatments when you are performing an independent groups t - test. You get

different results depending on which sample size you put in the numerator or

denominator. Therefore, avoid using “PS” to address questions about unequal sample

sizes. Instead, use Russ Lenth’s web-based power calculator at the University of Iowa.

When doing the problems read them carefully and extract information on variability,

effect size, Type I error rate, if the problem should be a one tailed test, etc. so that you

can enter values in the calculators to compute power or sample size. When completing

your write-up state which calculator you used and what values you entered so that I can

tell where you went wrong. Also, for part B of the exercise to determine the effect of

unequal sample sizes on power, keep the total sample size constant and vary allocation

between treatments. For example, if n1 = 4 and n2 = 4, compare the power to n1 = 6 and

n2 = 2, so the total sample size remains 8.

Links to Web Based Power and Sample Size Calculators for t -tests

1 - sample tests

http://stat.ubc.ca/~rollin/stats/ssize/index.html

2 - sample tests

http://stat.ubc.ca/~rollin/stats/ssize/index.html

1 and 2 sample tests

http://www.math.uiowa.edu/~rlenth/Power/index.html
