
Basic Probability and Information Theory: quick revision

ML for NLP

Lecturer: S Luz

http://www.scss.tcd.ie/~luzs/t/cs4ll4/

February 17, 2015

In these notes we review the basics of probability theory and introduce the information-theoretic notions that are essential to many aspects of machine learning in general, and to the induction of text classifiers in particular. We will illustrate the abstract concepts introduced here with examples from a text categorization (TC) task, such as spam filtering. Note, however, that the techniques and results reported here apply to a much wider range of applications. The connection with TC will become clearer next week, when we cover feature selection and classifier induction.

Concise but useful introductions to probability theory can be found in (Russell and Norvig, 1995, chapter 14) and (Manning and Schütze, 1999, chapter 2). The latter also covers the basics of information theory, viewed mainly from a natural language processing perspective. A very good general introduction to probability theory is (Bertsekas and Tsitsiklis, 2002).

Why review Probability and Information Theory?

• Probability theory gives us a tool to model uncertainty.

• Probabilistic approaches (e.g. naive Bayes) are used in TC.

• Information theory plays an important role in various areas of machine learning, in particular:

– Feature selection uses the information-theoretic notions of information gain and mutual information.

– Learning algorithms, such as decision tree induction, use the information-theoretic concept of entropy to decide how to partition the document space.

Information theory originated in Claude Shannon’s research on the capacity of noisy information channels. It is concerned with maximising the information one can transmit over an imperfect communication channel. The central concept of information theory is entropy. Entropy (which we will define formally below) measures “the amount of uncertainty” in a probability distribution.

Reportedly, the term “entropy” was suggested to Shannon by John von Neumann: “You should call it entropy for two reasons: first, the function is already in use in thermodynamics under the same name; second, and more importantly, most people don’t know what entropy really is, and if you use the word entropy in an argument you will win every time” (Hamming, 1991).

Probability theory: notation

| Notation | Set jargon | Probability jargon |
|---|---|---|
| Ω | collection of objects | sample space |
| ω | element of Ω | elementary event |
| D | subset of Ω | event that some outcome in D occurs |
| D̄ | complement of D | event that no outcome in D occurs |
| D ∩ E | intersection | both D and E |
| D ∪ E | union | D or E, or both |
| D \ E | difference | D but not E |
| D ⊆ E | inclusion | if D then E |
| ∅ | empty set | impossible event |
| Ω | whole space | certain event |

A notational variant of the above, which stresses the connection with logic, treats set intersection as conjunction, set union as disjunction, etc. This variant is summarised below:

| Logic | Set theory |
|---|---|
| P(A ∨ B) | P(A ∪ B) |
| P(A ∧ B) | P(A ∩ B) |
| P(false) | P(∅) |
| P(true) | P(Ω) |

Sample spaces

The set of all possible outcomes of an experiment is called the sample space.

• In text categorisation, for instance, one could regard the set of documents being processed as the sample space:

$$\Omega = D = \{d_1, \ldots, d_{|\Omega|}\}$$

However, one could alternatively take sets of documents, rather than individual documents (i.e. sets, multisets, lists, etc. of words), to be elementary events. In this case, the power set $\Omega = 2^D$ would be the sample space.

• An “experiment” could be performed to determine, for instance, which documents belong to category c (say, email that should be classified as spam). The outcome of that experiment would be a subset of Ω.

Different ways of characterising sample spaces in TC will be presented in the lecture on Naive Bayes Text Categorisation.

Here is an even more mundane example: the combinations of heads (H) and tails (T) resulting from tossing a coin three times can be represented by the following sample space:

$$\Omega = \{HHH, HHT, HTH, HTT, THH, TTH, THT, TTT\}$$
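The sample space above can be generated mechanically. The following minimal Python sketch (the function name `coin_sample_space` is our own, for illustration) enumerates all sequences of three tosses with `itertools.product`:

```python
from itertools import product

def coin_sample_space(n_tosses):
    """Return every sequence of H/T of length n_tosses, as a string."""
    return ["".join(seq) for seq in product("HT", repeat=n_tosses)]

omega = coin_sample_space(3)
print(len(omega))  # 8, i.e. 2**3 elementary events
print(omega)
```

Enumerating with `product` scales to any number of tosses, although the sample space grows as 2^n.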

Discrete Uniform Probability Law

• Now that we have characterised the sample space, we would like to be able to quantify the likelihood of events.

• Discrete uniform probability law: if the sample space consists of n possible outcomes which are equally likely, then the probability of any event D is given by

$$P(D) = \frac{\text{number of elements of } D}{n}$$

• One can think of the probability of occurrence of an event D as the proportion of times event D occurs in a large number of trials:

$$P(D) = \frac{\text{number of occurrences of } D}{\text{number of trials}} \tag{1}$$
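As a sketch of both readings of probability, the snippet below computes the probability of the event D = {exactly two heads} in the three-toss sample space, first with the uniform law and then as a relative frequency over simulated trials. The seed and the number of trials are arbitrary choices:

```python
import random
from fractions import Fraction
from itertools import product

# Sample space of three coin tosses (see the example above).
omega = ["".join(s) for s in product("HT", repeat=3)]

# Event D: exactly two heads.
D = [w for w in omega if w.count("H") == 2]

# Discrete uniform law: P(D) = |D| / n
p_uniform = Fraction(len(D), len(omega))

# Frequentist estimate, equation (1): occurrences of D / number of trials.
rng = random.Random(0)  # fixed seed so the run is reproducible
trials = 100_000
hits = sum(
    "".join(rng.choice("HT") for _ in range(3)).count("H") == 2
    for _ in range(trials)
)
p_freq = hits / trials

print(p_uniform)  # 3/8
print(p_freq)     # close to 0.375
```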

The view of probability implicit in equation (1) has been termed “frequentist”. It relies on the empirical observation that the ratio between observed occurrences of an event and the number of trials appears to converge to a limit as the number of trials increases. The frequentist approach is inadequate in many ways, but a thorough discussion of its merits and limitations is beyond the scope of this revision. For a very readable discussion of the “philosophy” of probability theory, see (Hamming, 1991).

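We may illustrate this approach by calculating the probability that a document of corpus Ω is filed under category c as follows:

$$P(c) = \frac{|D|}{|\Omega|} \quad \text{where } D = \{\omega \in \Omega : f(\omega, c) = T\}$$

that is, D is the set of documents ω for which f(ω, c) is T (true), i.e. the documents filed under c.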

Visualising sample spaces

Sample spaces can be depicted in different ways. For events described by two rolls of a die, the sample space could be depicted as a grid. In experiments of a sequential nature such as this, a tree representation is also informative.

[Figure: the 36 outcomes of two die rolls shown as a grid (1st roll vs. 2nd roll) and as a tree, highlighting E1 = {same result in both rolls}, with P(E1) = 1/6, and E2 = {at least one roll is 5}, with P(E2) = 11/36. Adapted from (Bertsekas and Tsitsiklis, 2002).]
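The two probabilities quoted in the figure can be checked by enumerating the grid. A minimal sketch, applying the discrete uniform law to all 36 (first roll, second roll) pairs:

```python
from fractions import Fraction
from itertools import product

# Grid of outcomes: (1st roll, 2nd roll), 6 x 6 = 36 equally likely pairs.
omega = list(product(range(1, 7), repeat=2))

E1 = [(a, b) for a, b in omega if a == b]        # same result in both rolls
E2 = [(a, b) for a, b in omega if 5 in (a, b)]   # at least one roll is 5

def prob(event):
    """Discrete uniform law: |event| / |omega|."""
    return Fraction(len(event), len(omega))

print(prob(E1), prob(E2))  # 1/6 11/36
```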

Sample space, in somewhat more formal terms

• Defining σ-field:

A collection F of subsets of Ω is called a σ-field if it satisfies the following conditions:

1. ∅ ∈ F

2. if D1, D2, ... ∈ F, then $\bigcup_{i=1}^{\infty} D_i \in F$ (in particular, F is closed under finite unions, since ∅ ∈ F)

3. if D ∈ F then D̄ ∈ F

• Example:

– the smallest σ-field associated with Ω ... is the collection F = {∅, Ω}.
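For a finite Ω the σ-field conditions can be checked exhaustively. The sketch below (the helper names `is_sigma_field` and `power_set` are ours) relies on the fact that, when F contains finitely many sets, closure under countable unions reduces to closure under pairwise unions:

```python
from itertools import chain, combinations

def is_sigma_field(omega, F):
    """Check the sigma-field conditions for a collection F of subsets of a
    *finite* set omega. With finitely many sets, closure under countable
    unions reduces to closure under pairwise unions."""
    omega = frozenset(omega)
    F = {frozenset(s) for s in F}
    if frozenset() not in F:                            # condition 1
        return False
    if any((a | b) not in F for a in F for b in F):     # condition 2
        return False
    return all((omega - s) in F for s in F)             # condition 3

def power_set(s):
    """All subsets of s, as Python sets."""
    s = list(s)
    return [set(c) for c in chain.from_iterable(combinations(s, r) for r in range(len(s) + 1))]

omega = {"d1", "d2", "d3"}
print(is_sigma_field(omega, [set(), omega]))            # True: the smallest sigma-field
print(is_sigma_field(omega, power_set(omega)))          # True: the largest one
print(is_sigma_field(omega, [set(), {"d1"}, omega]))    # False: complement of {d1} missing
```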

Probability spaces

• We continue to add structure to our original set of events by defining P as a probability measure:

A probability measure P on <Ω, F> is a function P : F → [0, 1] satisfying:

1. P(Ω) = 1

2. if D1, D2, ... is a collection of disjoint members of F, in that Di ∩ Dj = ∅ for all i ≠ j, then

$$P\left(\bigcup_{i=1}^{\infty} D_i\right) = \sum_{i=1}^{\infty} P(D_i)$$

The triple <Ω, F, P> is called a probability space.

In the definition of probability measure presented here, F is to be understood as a σ-field.

A probability measure is a special case of what probability theory simply calls a measure. A measure is a function µ : F → [0, ∞) satisfying condition 2 above and µ(∅) = 0. Some weight assignment functions, such as those often used in decision trees, are measures, though they are not probability measures.
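On a finite sample space, a probability measure can be built by assigning a mass to each elementary event and summing. The sketch below assumes a fair die and takes F to be the power set of Ω; it checks the first axiom and the additivity axiom on one collection of disjoint events (only finitely many are possible, since Ω is finite):

```python
from fractions import Fraction
from itertools import combinations

# Sample space of one roll of a fair die; F is taken to be the power set.
omega = frozenset(range(1, 7))
point_mass = {w: Fraction(1, 6) for w in omega}

def P(event):
    """Probability measure induced by the point masses: sum over outcomes."""
    return sum((point_mass[w] for w in event), Fraction(0))

# Axiom 1: P(Omega) = 1
assert P(omega) == 1

# Axiom 2 (finite case): additivity over pairwise disjoint events.
events = [frozenset({1, 2}), frozenset({3}), frozenset({5, 6})]
assert all(a.isdisjoint(b) for a, b in combinations(events, 2))
union = frozenset().union(*events)
print(P(union), sum(P(e) for e in events))  # 5/6 5/6
```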

Properties of probability spaces

• The following hold:

$$P(\bar{D}) = 1 - P(D) \tag{2}$$

$$\text{If } E \supseteq D \text{ then } P(E) = P(D) + P(E \setminus D) \ge P(D) \tag{3}$$

$$P(A \cup B \cup C) = P(A) + P(\bar{A} \cap B) + P(\bar{A} \cap \bar{B} \cap C) \tag{4}$$

• Inclusion-exclusion principle:

$$P(D \cup E) = P(D) + P(E) - P(D \cap E) \tag{5}$$

or, more generally:

$$P\left(\bigcup_{i=1}^{n} D_i\right) = \sum_{i=1}^{n} P(D_i) - \sum_{i<j} P(D_i \cap D_j) + \sum_{i<j<k} P(D_i \cap D_j \cap D_k) - \cdots + (-1)^{n+1} P(D_1 \cap \cdots \cap D_n)$$

Proofs:

(2) D ∪ D̄ = Ω and D ∩ D̄ = ∅, so P(D ∪ D̄) = P(D) + P(D̄) = P(Ω) = 1.

(3) If E ⊇ D, then E = D ∪ (E\D), which is a union of disjoint sets. Therefore P(E) = P(D) + P(E\D), and since P(E\D) ≥ 0, P(E) ≥ P(D).

(5) The rationale for the inclusion-exclusion principle is easy to visualise by drawing a Venn diagram of (possibly intersecting) sets D and E. Simply adding the probabilities of D and E counts the probability of the intersection twice, so the result needs to be readjusted by subtracting the intersection:

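$$
\begin{aligned}
P(D \cup E) &= P\big((D \setminus E) \cup (D \cap E) \cup (E \setminus D)\big) && \text{(set theory)}\\
&= P(D \setminus E) + P(D \cap E) + P(E \setminus D) && \text{(disjoint sets)}\\
&= P(D \setminus E) + P(D \cap E) + P(E \setminus D) + P(D \cap E) - P(D \cap E) && \text{(algebra)}\\
&= P\big((D \setminus E) \cup (D \cap E)\big) + P\big((E \setminus D) \cup (D \cap E)\big) - P(D \cap E) && \text{(disjoint sets)}\\
&= P(D) + P(E) - P(D \cap E) && \text{(set theory)}
\end{aligned}
$$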
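The properties above can be sanity-checked numerically. The sketch below uses the two-dice sample space with three arbitrarily chosen events (our own choices, for illustration) and compares both sides of (2) to (5), and of the general inclusion-exclusion formula, using exact fractions:

```python
from fractions import Fraction
from itertools import combinations, product

omega = frozenset(product(range(1, 7), repeat=2))   # two rolls of a fair die

def P(event):
    """Discrete uniform law on the 36 outcomes."""
    return Fraction(len(event), len(omega))

# Three illustrative events
A = frozenset(w for w in omega if w[0] <= 3)        # first roll is at most 3
B = frozenset(w for w in omega if sum(w) == 7)      # the sum is 7
C = frozenset(w for w in omega if w[0] == w[1])     # a double
not_A, not_B = omega - A, omega - B

assert P(not_A) == 1 - P(A)                                         # (2)
AB = A & B                                                          # AB is a subset of A
assert P(A) == P(AB) + P(A - AB) and P(A) >= P(AB)                  # (3)
assert P(A | B | C) == P(A) + P(not_A & B) + P(not_A & not_B & C)   # (4)
assert P(A | B) == P(A) + P(B) - P(A & B)                           # (5)

def inclusion_exclusion(events):
    """Right-hand side of the general inclusion-exclusion formula."""
    total = Fraction(0)
    for r in range(1, len(events) + 1):
        for subset in combinations(events, r):
            total += (-1) ** (r + 1) * P(frozenset.intersection(*subset))
    return total

print(P(A | B | C), inclusion_exclusion([A, B, C]))  # 2/3 2/3
```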

Conditional probability

• If P(E) > 0, then the conditional probability that D occurs given E is defined to be:

$$P(D \mid E) = \frac{P(D \cap E)}{P(E)}$$

The diagram above illustrates conditional probabilities in terms of set size: if you see probability measures as frequencies (proportions of occurrence), then the conditional is given by

$$\frac{|D \cap E|}{|E|} = \frac{|D \cap E| / |\Omega|}{|E| / |\Omega|} = \frac{P(D \cap E)}{P(E)}.$$
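As a small worked example (the events are our choice), the snippet below computes the probability of getting the same result in both die rolls given that at least one roll is 5, once from the definition and once from set sizes:

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))   # two rolls of a fair die
D = {w for w in omega if w[0] == w[1]}         # same result in both rolls
E = {w for w in omega if 5 in w}               # at least one roll is 5

def P(event):
    return Fraction(len(event), len(omega))

by_definition = P(D & E) / P(E)                # P(D ∩ E) / P(E)
by_set_size = Fraction(len(D & E), len(E))     # |D ∩ E| / |E|
print(by_definition, by_set_size)  # 1/11 1/11
```

Only (5, 5) lies in both events, and E has 11 outcomes, hence 1/11.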

Properties of conditional probabilities

1. For any events D and E such that 0 < P(E) < 1,

$$P(D) = P(D \mid E)P(E) + P(D \mid \bar{E})P(\bar{E})$$

2. More generally, if E1, ..., En form a partition of Ω such that P(Ei) > 0 for all i, then

$$P(D) = \sum_{i=1}^{n} P(D \mid E_i)P(E_i)$$

3. Chain rule:

$$P(D_1 \cap \cdots \cap D_n) = P(D_1)\,P(D_2 \mid D_1)\,P(D_3 \mid D_2 \cap D_1) \cdots P(D_n \mid D_{n-1} \cap \cdots \cap D_1)$$

Proof:

1. D = (E ∩ D) ∪ (Ē ∩ D), which is a union of disjoint sets. Thus

$$P(D) = P(E \cap D) + P(\bar{E} \cap D) = P(D \mid E)P(E) + P(D \mid \bar{E})P(\bar{E})$$
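Both the total probability formula (property 2) and the chain rule (property 3) can be checked by enumeration. In this sketch the partition E1, ..., E6 is “the first roll is i”, and the other events are our own illustrative choices:

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))   # two rolls of a fair die

def P(event):
    return Fraction(len(event), len(omega))

def P_given(d, e):
    """Conditional probability P(d | e) = P(d ∩ e) / P(e)."""
    return P(d & e) / P(e)

D = {w for w in omega if sum(w) == 7}                                # the sum is 7
partition = [{w for w in omega if w[0] == i} for i in range(1, 7)]   # E_i: first roll is i

# Total probability: P(D) = sum_i P(D | E_i) P(E_i)
total = sum(P_given(D, E) * P(E) for E in partition)
print(total, P(D))  # 1/6 1/6

# Chain rule for three events: P(D1 ∩ D2 ∩ D3) = P(D1) P(D2 | D1) P(D3 | D2 ∩ D1)
D1 = {w for w in omega if w[0] <= 4}
D2 = {w for w in omega if w[1] <= 4}
D3 = {w for w in omega if sum(w) >= 6}
chain = P(D1) * P_given(D2, D1) * P_given(D3, D2 & D1)
print(chain == P(D1 & D2 & D3))  # True
```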

Bayes’ rule

• Sometimes, as in the case of naive Bayes TC, it is easier to estimate the conditional probability of D given E than the other way around.

• In such cases, Bayes’ rule can be used to simplify computation:

$$P(E \mid D) = \frac{P(E \cap D)}{P(D)} = \frac{P(D \mid E)P(E)}{P(D)} \tag{6}$$

Proof: it follows directly from the definition of conditional probability and the chain rule given above.
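The sketch below applies equation (6) in a spam-filtering setting. All the numbers are made up for illustration: E is “the message is spam”, D is “the message contains the word free”, and P(D) is obtained from the total probability formula:

```python
from fractions import Fraction

# Hypothetical figures, for illustration only:
p_spam = Fraction(1, 4)               # prior P(E), E = "message is spam"
p_word_given_spam = Fraction(2, 5)    # P(D | E), D = "message contains 'free'"
p_word_given_legit = Fraction(1, 20)  # P(D | not E)

# P(D) by total probability (property 1 of conditional probabilities)
p_word = p_word_given_spam * p_spam + p_word_given_legit * (1 - p_spam)

# Bayes' rule, equation (6): P(E | D) = P(D | E) P(E) / P(D)
p_spam_given_word = p_word_given_spam * p_spam / p_word
print(p_spam_given_word)  # 8/11
```

Observing the word raises the probability of spam from the prior 1/4 to 8/11 under these assumed figures.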

Independence

• In general, the occurrence of an event E may change the probability that another event D occurs. When this happens, the initial (prior) probability P(D) gets “updated” to P(D|E). If the probability remains unchanged, i.e. P(D) = P(D|E), then we call D and E independent:

Events D1, ..., Dn are called independent if

$$P\left(\bigcap_{i \in S} D_i\right) = \prod_{i \in S} P(D_i) \quad \forall S \subseteq \{1, 2, \ldots, n\}$$

• E.g.: Two fair coin tosses are independent.

• But note that, when we have more than two events, pairwise independence does not imply independence:

– from P(C|A) = P(C) and P(C|B) = P(C) you cannot conclude P(A ∩ B ∩ C) = P(A)P(B)P(C)

• Neither is the latter a sufficient condition for the independence of A, B and C.

Examples (verify that they are actually the case, as an exercise):

1. Pairwise independence does not imply independence:

A = { coin comes up heads on the first toss }

B = { coin comes up heads on the second toss }

C = { the two tosses have different results }

2. P(A ∩ B ∩ C) = P(A)P(B)P(C) is not sufficient for independence:

• Consider two throws of a fair die and the following events:

A = { first roll is 1, 2 or 3 }

B = { first roll is 3, 4 or 5 }

C = { the sum of the two rolls is 9 }

3. Similarly, for a set of random variables S = {X1, ..., Xn}, $P\big(X_i \mid \bigcap_{X_j \in S \setminus \{X_i\}} X_j\big) = P(X_i)$ does not imply independence for S:

• Again, consider two throws of a fair die and the following events:

A = { first roll is 1, 3 or 4 }

B = { first roll is 1, 2 or 4 }

C = { the sum of the two rolls is 4 }

(show that P(A|B ∩ C) = P(A), P(B|A ∩ C) = P(B) and P(C|B ∩ A) = P(C), but P(A|B) ≠ P(A), etc.)
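The three examples can be verified by brute-force enumeration. The sketch below (helper names are ours) encodes the definition of independence directly, testing every subfamily of two or more events, and then checks each example:

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod

def P(omega, event):
    return Fraction(len(event), len(omega))

def independent(omega, events):
    """Definition above: P(∩_{i in S} D_i) = ∏_{i in S} P(D_i) for every
    subfamily S (sizes 0 and 1 hold trivially, so start at size 2)."""
    return all(
        P(omega, frozenset.intersection(*S)) == prod(P(omega, D) for D in S)
        for r in range(2, len(events) + 1)
        for S in combinations(events, r)
    )

def pairwise_independent(omega, events):
    return all(independent(omega, pair) for pair in combinations(events, 2))

# Example 1: two coin tosses
coins = frozenset(product("HT", repeat=2))
A = frozenset(w for w in coins if w[0] == "H")
B = frozenset(w for w in coins if w[1] == "H")
C = frozenset(w for w in coins if w[0] != w[1])
print(pairwise_independent(coins, [A, B, C]), independent(coins, [A, B, C]))  # True False

# Example 2: the triple product holds, but the events are not independent
dice = frozenset(product(range(1, 7), repeat=2))
A2 = frozenset(w for w in dice if w[0] in (1, 2, 3))
B2 = frozenset(w for w in dice if w[0] in (3, 4, 5))
C2 = frozenset(w for w in dice if sum(w) == 9)
triple = P(dice, A2 & B2 & C2) == P(dice, A2) * P(dice, B2) * P(dice, C2)
print(triple, independent(dice, [A2, B2, C2]))  # True False

# Example 3: conditioning on "all the others" leaves each probability unchanged
A3 = frozenset(w for w in dice if w[0] in (1, 3, 4))
B3 = frozenset(w for w in dice if w[0] in (1, 2, 4))
C3 = frozenset(w for w in dice if sum(w) == 4)

def given(d, e):
    return P(dice, d & e) / P(dice, e)

print(given(A3, B3 & C3) == P(dice, A3),
      given(B3, A3 & C3) == P(dice, B3),
      given(C3, A3 & B3) == P(dice, C3),
      given(A3, B3) == P(dice, A3))  # True True True False
```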

Conditional Independence

• Absolute independence (as described above) is a very strong requirement, which is seldom met.

• In practice, one often uses conditional independence:

$$P(A \cap B \mid C) = P(A \mid C)P(B \mid C) \tag{7}$$

or, equivalently (provided P(B ∩ C) > 0):

$$P(A \mid B \cap C) = P(A \mid C) \tag{8}$$

• E.g.: Let A and B be two biased coins, such that the probability of heads is .99 for A and .01 for B. Choose a coin at random (with probability .5 of choosing each) and toss it twice. The outcome of the 2nd toss is not independent of the outcome of the 1st, but the two tosses are independent given the choice of coin.
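The coin example can be worked out exactly with the total probability formula, assuming (as the example states) that the two tosses are independent once the coin is fixed:

```python
from fractions import Fraction

p_choose = Fraction(1, 2)                                 # each coin chosen with prob .5
p_heads = {"A": Fraction(99, 100), "B": Fraction(1, 100)}

# Tosses are independent *given* the coin, so P(H1 ∩ H2 | coin) = P(H | coin)^2.
p_h1 = sum(p_choose * p for p in p_heads.values())          # P(H1), which equals P(H2)
p_h1_h2 = sum(p_choose * p * p for p in p_heads.values())   # P(H1 ∩ H2)

print(p_h1)            # 1/2
print(p_h1_h2 / p_h1)  # P(H2 | H1) = 4901/5000, far from P(H2) = 1/2
```

Seeing heads on the first toss makes it very likely that coin A was chosen, which is why P(H2 | H1) is close to .99 rather than .5.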

Some exercises

1. The dangers of overtesting: Domingos’s (2012) review of ML uses the following example to caution readers against overtesting:

... a mutual fund that beats the market ten years in a row looks very impressive, until you realize that, if there are 1000 funds and each has a 50% chance of beating the market on any given year, it’s quite likely that one will succeed all ten times just by luck.

Question: What is the actual probability that one mutual fund will succeed all 10 times by luck?
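One way to check an answer numerically, assuming the funds’ yearly results are independent fair coin flips (the assumption implicit in the quote), is to compute the probability that at least one of the 1000 funds beats the market ten years in a row:

```python
n_funds, n_years, p_beat = 1000, 10, 0.5

p_one_fund = p_beat ** n_years                      # a given fund wins all 10 years
p_at_least_one = 1 - (1 - p_one_fund) ** n_funds    # complement of "no fund does"

print(p_one_fund)  # 0.0009765625, i.e. 1/1024
print(round(p_at_least_one, 3))
```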

2. Monty Hall (from Wikipedia):

Suppose you’re on a game show, and you’re given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say No. 1, and the host, who knows what’s behind the doors, opens another door, say No. 3, which has a goat. He then says to you, “Do you want to pick door No. 2?” Is it to your advantage to switch your choice?

Question: The best strategy (as Wikipedia will tell you) is to switch. Give an explanation of why that is the case based on conditional probabilities and Bayes’ rule.
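A simulation does not replace the requested explanation, but it can confirm the answer. The sketch below assumes the standard rules (the host always opens an unpicked door hiding a goat, choosing at random when two such doors exist) and estimates the win rate of each strategy:

```python
import random

def monty_hall(switch, trials=100_000, seed=1):
    """Estimate the probability of winning the car with a given strategy."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        car = rng.randrange(3)
        pick = rng.randrange(3)
        # The host opens a door that is neither the contestant's pick nor the car.
        host = rng.choice([d for d in range(3) if d != pick and d != car])
        if switch:
            pick = next(d for d in range(3) if d not in (pick, host))
        wins += pick == car
    return wins / trials

print(monty_hall(switch=False))  # about 1/3
print(monty_hall(switch=True))   # about 2/3
```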

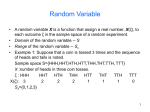

Random variables

• A random variable is a function X : Ω → ℝ with the property that

$$\{\omega \in \Omega : X(\omega) \le x\} \in F \quad \text{for each } x \in \mathbb{R}$$

• Random variables offer a convenient way of abstracting over event spaces. E.g.:

• The notation P(X = x) is used to indicate the probability that a random variable X takes value x.

• Another example: in text categorisation, category can be seen as a random variable which returns the number of documents classified under a given category.

Example (ctd.): Assume that 5 documents, out of a 20-document corpus Ω, have been classified as spam. We now regard the subsets of Ω (possibly the entire power set of Ω) defined by, for instance, the categories assigned to their elements as a σ-field, and the resulting triple <Ω, F, P> as a probability space. Events in such a probability space will be “things” that denote elements of F, such as “the event that documents have been filed under category C”. Let’s also assume that category spam denotes a set {d1, ..., d5} ∈ F. The probability associated with “the event that documents have been filed under category C” is summarised in the random variable and is given by P(C). The probability associated with “the event that documents have been filed under category spam” is given by a specific value of the random variable (recall that what we are calling a “variable” here is actually a function): P(C = spam) = 0.25.
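To make the “random variable as a function” reading concrete, the sketch below models the example corpus and computes P(C = spam) by counting the documents that C maps to spam, assuming each document is equally likely. The document names and the label “ham” for non-spam are our own:

```python
from collections import Counter
from fractions import Fraction

# Hypothetical 20-document corpus: d1..d5 are spam, the rest legitimate ("ham").
corpus = [f"d{i}" for i in range(1, 21)]
label = {d: ("spam" if i <= 5 else "ham") for i, d in enumerate(corpus, start=1)}

def C(doc):
    """The random variable C: a function from documents to category labels."""
    return label[doc]

# PMF of C under the uniform law: P(C = c) = |{d : C(d) = c}| / |Omega|
counts = Counter(C(d) for d in corpus)
pmf = {c: Fraction(n, len(corpus)) for c, n in counts.items()}
print(pmf["spam"], float(pmf["spam"]))  # 1/4 0.25
```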

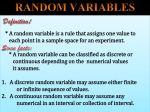

Discrete Random Variables

• A discrete random variable is a random variable whose range is finite (or countably infinite).

• Discrete random variables are associated with a probability mass function (PMF):

– A PMF maps each numerical value that a random variable can take to a probability.

• A function of a discrete random variable defines another discrete random variable:

– The PMF of this new random variable can be obtained from the PMF of the original one.

• A random variable can also be conditioned on another random variable (or on an event), and the notions of independence and conditional independence seen above also apply.

Probability mass functions

• The PMF of a random variable X is the function p : ℝ → [0, 1] given by

$$p(x) = P(X = x)$$

• For a discrete random variable:

$$\sum_{i} p(x_i) = \sum_{i} P(A_{x_i}) = P(\Omega) = 1 \quad \text{where } A_{x_i} = \{\omega \in \Omega : X(\omega) = x_i\}$$

• So, to calculate the PMF of X, for each possible value x we add the probabilities of all outcomes ω with X(ω) = x to get p(x).

• E.g.: if X is the number of heads obtained in two tosses of a fair coin, its PMF is:

$$p(x) = \begin{cases} .25 & \text{if } x = 0 \text{ or } x = 2\\ .5 & \text{if } x = 1\\ 0 & \text{otherwise} \end{cases}$$

So the probability of at least one head is P(X > 0) = .75.
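The recipe above (add up the probabilities of all outcomes that X maps to x) can be written out directly for the two-toss example:

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

omega = list(product("HT", repeat=2))   # two fair coin tosses
p_outcome = Fraction(1, len(omega))     # each outcome has probability 1/4

def X(w):
    """Random variable: number of heads in outcome w."""
    return w.count("H")

# p(x) = P(A_x): sum the probabilities of the outcomes mapped to x
pmf = defaultdict(Fraction)
for w in omega:
    pmf[X(w)] += p_outcome

print(sum(pmf.values()))                         # 1
print(sum(p for x, p in pmf.items() if x > 0))   # 3/4, i.e. P(X > 0)
```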

Continuous Random Variables

• A continuous random variable is a random variable whose range is continuous (e.g. velocity, time intervals, etc.).

• Variable X is called continuous if there is a function $f_X$ such that, for every subset B of ℝ:

$$P(X \in B) = \int_B f_X(x)\,dx$$

• E.g.: the probability that X falls within interval [a, b] is $P(a \le X \le b) = \int_a^b f_X(x)\,dx$.

• $f_X$ is called the probability density function (PDF) of X provided that it is non-negative and has the normalisation property:

$$\int_{-\infty}^{\infty} f_X(x)\,dx = P(-\infty < X < \infty) = 1$$

Note that for a single value v, $P(X = v) = \int_v^v f_X(x)\,dx = 0$, so the probability that X falls within interval [a, b] is the same as the probability that X falls within [a, b), (a, b] or (a, b) (i.e. it makes no difference whether the endpoints are included or not). The probability that X falls within interval [a, b] can be interpreted as the area under the PDF curve for the interval.
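Interpreting probabilities as areas under the PDF suggests a numerical check. The sketch below is an illustration under our own choices: it approximates the integrals with the trapezoidal rule for the exponential PDF (introduced in the section on continuous random variables) with λ = 2, and compares the result with the closed form $e^{-\lambda a} - e^{-\lambda b}$:

```python
import math

def integrate(f, a, b, n=100_000):
    """Approximate the integral of f over [a, b] with the trapezoidal rule."""
    h = (b - a) / n
    return h * (0.5 * f(a) + sum(f(a + k * h) for k in range(1, n)) + 0.5 * f(b))

lam = 2.0  # illustrative rate parameter

def f_exp(x):
    """Exponential PDF with rate lam."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

area = integrate(f_exp, 0.5, 1.0)                      # P(0.5 <= X <= 1)
exact = math.exp(-lam * 0.5) - math.exp(-lam * 1.0)
print(area, exact)
print(integrate(f_exp, 0.0, 30.0))                     # total area, close to 1
```

The upper limit 30 stands in for ∞ here; the density beyond it is negligible for λ = 2.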

Cumulative Distribution Functions

• Cumulative distribution functions (CDFs) subsume PDFs and PMFs under a single concept.

• The CDF $F_X$ of X gives the probability P(X ≤ x), so that for every x:

$$F_X(x) = P(X \le x) = \begin{cases} \sum_{k \le x} p(k) & \text{if } X \text{ is discrete}\\ \int_{-\infty}^{x} f_X(t)\,dt & \text{if } X \text{ is continuous} \end{cases}$$

• Since X ≤ x is always an event (and therefore has a well-defined probability), every random variable X associated with a given probability model has a CDF.
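Both branches of the definition can be sketched in a few lines: the discrete CDF as a running sum of the two-toss PMF above, and, assuming the exponential distribution introduced later in these notes, the continuous CDF in the closed form $1 - e^{-\lambda x}$ obtained by integrating its PDF:

```python
import math
from fractions import Fraction

# PMF of the number of heads in two fair coin tosses (example above).
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def cdf_discrete(x):
    """F_X(x) = sum of p(k) over k <= x (a step function)."""
    return sum((p for k, p in pmf.items() if k <= x), Fraction(0))

def cdf_exponential(x, lam):
    """Closed form of the integral of the exponential PDF from -inf to x."""
    return 1 - math.exp(-lam * x) if x >= 0 else 0.0

print([str(cdf_discrete(x)) for x in (-1, 0, 0.5, 1, 2, 3)])
print(cdf_exponential(1.0, lam=2.0))
```

The discrete CDF is flat between the values X can take (e.g. F(0.5) = F(0)) and jumps at each of them.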

Moments, expectation, mean, variance

• The expected value of a discrete random variable X with PMF p is given by

$$E[X] = \sum_x x\,p(x)$$

• For a continuous random variable with PDF f we have

$$E[X] = \int_{-\infty}^{\infty} x f(x)\,dx$$

• This is AKA the expectation, mean or the “first moment” of X.

• In general, we define the nth moment as $E[X^n]$.

• The variance of a random variable is defined as the expectation of the random variable $(X - E[X])^2$:

$$\operatorname{var}(X) = E\big[(X - E[X])^2\big] = \begin{cases} \sum_x p(x)(x - E[X])^2 & \text{if } X \text{ is discrete}\\ \int_{-\infty}^{\infty} (x - E[X])^2 f(x)\,dx & \text{if } X \text{ is continuous} \end{cases}$$

Some Discrete Random Variables

• Bernoulli (parameter p): success (or failure) in a single trial:

$$p(k) = \begin{cases} p & \text{if } k = 1\\ 1 - p & \text{if } k = 0 \end{cases} \qquad E[X] = p, \quad \operatorname{var}(X) = p(1 - p)$$

• Binomial (parameters p and n): number of successes in n independent Bernoulli trials:

$$p(k) = \binom{n}{k} p^k (1 - p)^{n-k}, \quad k = 0, 1, \ldots, n \qquad E[X] = np, \quad \operatorname{var}(X) = np(1 - p)$$

• Geometric (parameter p): number of trials until the first success:

$$p(k) = (1 - p)^{k-1} p, \quad k = 1, 2, \ldots \qquad E[X] = \frac{1}{p}, \quad \operatorname{var}(X) = \frac{1 - p}{p^2}$$

• Poisson (parameter λ): approximation of the binomial PMF when n is large, p is small and λ = np:

$$p(k) = e^{-\lambda} \frac{\lambda^k}{k!}, \quad k = 0, 1, \ldots \qquad E[X] = \lambda, \quad \operatorname{var}(X) = \lambda$$
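The moments quoted above, and the Poisson approximation, can be checked numerically. In the sketch below the parameter values are arbitrary illustrations, and the geometric series is truncated where its tail becomes negligible:

```python
import math

def binomial_pmf(k, n, p):
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def geometric_pmf(k, p):
    return (1 - p) ** (k - 1) * p          # k = 1, 2, ...

def poisson_pmf(k, lam):
    return math.exp(-lam) * lam**k / math.factorial(k)

def mean_var(pmf_values):
    """Mean and variance from (value, probability) pairs."""
    mean = sum(k * q for k, q in pmf_values)
    var = sum(q * (k - mean) ** 2 for k, q in pmf_values)
    return mean, var

n, p = 1000, 0.003          # illustrative: large n, small p, lam = np = 3
binom = [(k, binomial_pmf(k, n, p)) for k in range(n + 1)]
print(mean_var(binom))      # close to (np, np(1 - p)) = (3.0, 2.991)

geo = [(k, geometric_pmf(k, 0.2)) for k in range(1, 500)]   # tail beyond 500 is negligible
print(mean_var(geo))        # close to (1/p, (1 - p)/p**2) = (5.0, 20.0)

# Largest pointwise gap between the binomial PMF and its Poisson approximation
print(max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, n * p)) for k in range(20)))
```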

Some Continuous Random Variables

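• Uniform (over interval [a, b]):

$$f(x) = \begin{cases} \frac{1}{b - a} & \text{if } a \le x \le b\\ 0 & \text{otherwise} \end{cases} \qquad E[X] = \frac{a + b}{2}, \quad \operatorname{var}(X) = \frac{(b - a)^2}{12}$$

• Exponential (parameter λ): e.g. to model the time until some event occurs:

$$f(x) = \begin{cases} \lambda e^{-\lambda x} & \text{if } x \ge 0\\ 0 & \text{otherwise} \end{cases} \qquad E[X] = \frac{1}{\lambda}, \quad \operatorname{var}(X) = \frac{1}{\lambda^2}$$

[Figure: geometric CDF and exponential CDF plotted against x.]

• Normal or Gaussian (parameters µ and σ² > 0):

$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma} e^{-(x - \mu)^2 / 2\sigma^2} \qquad E[X] = \mu, \quad \operatorname{var}(X) = \sigma^2$$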
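The stated means and variances can be compared against samples drawn with Python’s `random` module (`uniform`, `expovariate` and `gauss`). The parameter values and sample size below are arbitrary, and the sample statistics will only approximate the theoretical values:

```python
import random
import statistics

rng = random.Random(42)   # fixed seed for reproducibility
N = 100_000               # sample size (arbitrary)

a, b = 2.0, 5.0           # illustrative parameters
lam = 0.5
mu, sigma = 1.0, 3.0

# name -> (samples, theoretical mean, theoretical variance)
samples = {
    "uniform": ([rng.uniform(a, b) for _ in range(N)], (a + b) / 2, (b - a) ** 2 / 12),
    "exponential": ([rng.expovariate(lam) for _ in range(N)], 1 / lam, 1 / lam**2),
    "normal": ([rng.gauss(mu, sigma) for _ in range(N)], mu, sigma**2),
}

for name, (xs, mean, var) in samples.items():
    print(f"{name:12s} sample mean {statistics.fmean(xs):.3f} (theory {mean:.3f}), "
          f"sample var {statistics.pvariance(xs):.3f} (theory {var:.3f})")
```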

Entropy

• Entropy, AKA self-information, measures the average amount of uncertainty in a probability mass distribution. In other words, entropy is a measure of how much we learn, on average, when we observe an event occurring in accordance with that distribution.

• The entropy of a random variable measures the amount of information in that variable (we will always be using log base 2 unless stated otherwise):

$$H(X) = H(p) = -\sum_{x \in X} p(x) \log p(x) = \sum_{x \in X} p(x) \log \frac{1}{p(x)}$$

N.B.: We define 0 log 0 = 0.

Example: suppose we have a set of documents D = {d1, ..., dn}, each classified according to whether or not it belongs to a certain category c, say, spam. First, suppose you know that all documents in D are filed under spam (we represent that as P(spam) = 1). How much information would we gain if someone told us that a certain document di drawn randomly from corpus D has been filed under “spam”? Answer: zero, since we already knew this from the start! Now suppose that you know 80% of D (your incoming email folder) is spam, and you randomly pick an email message from D and find out that it is labelled “spam”. How much have you learned? Certainly more than before, although less than you would have learned if the proportion between spam and legitimate email were 50-50. In the former case there was less uncertainty involved than in the latter.

Information gain

• We may also quantify the reduction of uncertainty of a random variable due to knowing about another. This is known as Expected Mutual Information:

$$I(X; Y) = IG(X, Y) = \sum_{x, y} p(x, y) \log \frac{p(x, y)}{p(x)\,p(y)} \tag{9}$$

• Entropies of different probability functions may also be compared by calculating the so-called Information Gain. In decision tree learning, for instance:

$$G(D, F) = H(t) - \sum_{i=1}^{n} p_i H(t_i) \tag{10}$$

where t is the distribution of the mother node, $t_i$ the distribution of daughter node i, and $p_i$ the proportion of texts passed to node i if term F is used to split corpus D.
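The sketch below computes both quantities for a hypothetical split of a 20-document corpus (the counts are invented for illustration), with C the category and F a binary “term present” feature. When the daughter nodes of the split correspond exactly to the values of F, equation (10) equals H(C) − H(C | F), which is the same quantity as equation (9), so the two numbers printed should agree:

```python
import math

def H(probs):
    """Entropy in bits, with 0 log 0 = 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical counts: (category, term F present?) -> number of documents
counts = {("spam", True): 4, ("spam", False): 1,
          ("legit", True): 2, ("legit", False): 13}
N = sum(counts.values())
p_xy = {k: v / N for k, v in counts.items()}
p_c = {c: sum(v for (cc, _), v in p_xy.items() if cc == c) for c in ("spam", "legit")}
p_f = {f: sum(v for (_, ff), v in p_xy.items() if ff == f) for f in (True, False)}

# Equation (9): expected mutual information I(C; F)
mi = sum(p * math.log2(p / (p_c[c] * p_f[f])) for (c, f), p in p_xy.items() if p > 0)

# Equation (10): split on F; daughter node i holds the documents with F = i
h_parent = H(p_c.values())
gain = h_parent - sum(
    p_f[f] * H([p_xy[(c, f)] / p_f[f] for c in ("spam", "legit")])
    for f in (True, False)
)
print(round(h_parent, 3), round(mi, 4), round(gain, 4))
```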

Information theory tries to quantify such uncertainties by assuming that the amount of information learned from an event decreases as the probability of its occurrence increases: it is measured as the logarithm of the inverse of that probability. So, in the case where there’s a 50-50 chance that di will be spam, the amount learned from di would be

$$i(P(C = spam)) = \log \frac{1}{P(C = spam)} = \log \frac{1}{0.5} = 1$$

i(.), as defined above, measures the uncertainty for a single value of random variable C. How would we measure uncertainty for all possible values of C (in this case, $\{spam, \overline{spam}\}$)? The answer is: we calculate the entropy of its probability mass function:

$$H(C) = -\big(p(spam) \log p(spam) + p(\overline{spam}) \log p(\overline{spam})\big) = -(0.5 \log 0.5 + 0.5 \log 0.5) = 1$$

A more interesting corpus, where the probability of a document being labelled as “spam” is, say, 0.25, would have entropy

$$H(C) = -(0.25 \log 0.25 + 0.75 \log 0.75) = 0.811$$
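The worked values above, and the 100% and 80% spam cases from the earlier example, can be reproduced with a few lines. A minimal sketch (function names ours), using log base 2 as stated above:

```python
import math

def entropy(pmf):
    """H(p) = sum p(x) log2(1/p(x)), using the convention 0 log 0 = 0."""
    return sum(p * math.log2(1 / p) for p in pmf if p > 0)

def information(p):
    """i(p) = log2(1/p): bits learned from observing an event of probability p."""
    return math.log2(1 / p)

print(information(0.5))  # 1.0
for p_spam in (1.0, 0.8, 0.5, 0.25):
    print(p_spam, round(entropy([p_spam, 1 - p_spam]), 3))
```

As the example argues, the entropy is 0 when every document is spam, grows as the split approaches 50-50 (where it peaks at 1 bit), and is about 0.811 for the 25% corpus.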

N.B.: There is some confusion surrounding the related notions of Expected Mutual Information and Information Gain. The definition in (9) corresponds to what some call Information Gain (Sebastiani, 2002). For the purposes of choosing where to split the instances in decision trees, the definition of Information Gain used is the one in (10), as defined in (Manning and Schütze, 1999, ch. 2). We will reserve the term Expected Mutual Information, I(X; Y), for what Sebastiani (2002) calls Information Gain, though we will sometimes write it IG(X, Y).

We will see in detail how information gain scores are used in decision tree induction when we review the topic next week.

References

Bertsekas, D. and Tsitsiklis, J. (2002). Introduction to Probability. Athena Scientific.

Domingos, P. (2012). A few useful things to know about machine learning. Communications of the ACM, 55(10):78–87.

Hamming, R. W. (1991). The Art of Probability for Scientists and Engineers. Addison-Wesley.

Manning, C. D. and Schütze, H. (1999). Foundations of Statistical Natural Language Processing. The MIT Press, Cambridge, Massachusetts.

Russell, S. J. and Norvig, P. (1995). Artificial Intelligence: A Modern Approach. Prentice-Hall, Englewood Cliffs.

Sebastiani, F. (2002). Machine learning in automated text categorization. ACM Computing Surveys, 34(1):1–47.