Conditional probability and Bayes’ rule

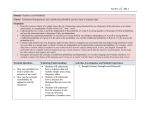

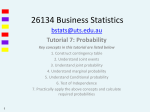

Lesson Four: PROBABILITY

Probability is essentially a measure of the likelihood or chance of something happening. Some examples to illustrate the use of probability in everyday language are given below:

There is a 50:50 chance that a head will turn up when you toss a coin;
The probability of getting an even number on one toss of a die is 0.5;
The odds of passing this exam are 3 to 1;
There is a 30% chance of a child developing mumps when more than two children in the same class are infected;
There is a 1 in 10,000 chance that the new PC will break down in the first two years.

If something is unlikely to happen, such as an allergic reaction to medication or a PC breaking down, the probability is very small; on the other hand, when something is very likely to happen, the probability is large. But, in the context of probability, what is large or small? The first step in answering this question is to clearly define “probability” and how it is measured. Then, armed with a clear definition, it is possible to develop the study of probability further, deducing methods and rules for calculating the probability of more complex events, ultimately leading to the theory of sampling and statistical inference. BUT a word of warning: at all stages in the study of probability it is vitally important to be able to state, in words, the situation in question and what its probability means.

Terminology

The “something” that happens by chance is called an event. An experiment is a repeatable process, circumstance or action that causes an event to happen (for example, tossing a coin). A trial is a single execution of an experiment, such as a single toss of a die. An outcome is the result of a single trial, such as a six on a die. An event consists of one or more outcomes, such as the event of an even number when a die is tossed: this event comprises the outcomes {2, 4, 6}.
The list of all possible outcomes of an experiment is called the “sample space” of the experiment, such as {1, 2, 3, 4, 5, 6}, the list of all possible numbers that show when a die is tossed. An event space is the list of all outcomes that define the event, such as the list of even numbers. Outcomes are also referred to as “sample points”.

A review of some essential maths for sets

Sets are a mathematical tool that is useful in explaining some concepts and rules in probability; hence a quick review of the essentials is necessary at this stage. Sets are represented graphically by diagrams called Venn diagrams.

A set is a well-defined collection of distinct items, numbers or variables. A set is symbolised by an upper case letter, such as S, A, B. The members of the set are sometimes referred to as the elements or sample points of the set. If the elements of the set are listed, the list is normally enclosed in curly brackets.

When two or more sets (say A and B) are combined to include all elements that are in either or both sets (with common elements appearing once only), the combined set is also a set, called “A union B”, written as “A ∪ B” and sometimes called “A OR B”. The elements that are common to both sets constitute a set called “A intersection B”, written as “A ∩ B”, “A AND B” or simply AB.

The sample space, S, is the set of all possible outcomes of an experiment. The events A and Ā are called complementary events: the event Ā is the set of all outcomes in the sample space, S, that are not in A.

Definitions of probability

In the introduction, probability was described as the likelihood that an event will occur. For example, we may be interested in the probability or likelihood that a certain combination of numbers will turn up in a lotto. There are three ways of assigning probability to an event:

1. Theoretical probability (classical probability).
2. Relative frequency or empirical probability.
3. Intuitive or subjective probability.
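The set operations reviewed above (union, intersection and complement) can be sketched with Python's built-in `set` type. This is only an illustration: the sample space and the event "even number" come from the die example in the text, while the second event `B` ("a number greater than 3") is a hypothetical event introduced here for the demonstration.

```python
# Sample space for one toss of a die, and the event "even number" from the text.
S = {1, 2, 3, 4, 5, 6}
A = {2, 4, 6}

# Hypothetical second event (not from the text): "a number greater than 3".
B = {4, 5, 6}

union = A | B           # "A OR B": elements in either or both sets
intersection = A & B    # "A AND B": elements common to both sets
complement_A = S - A    # outcomes in S that are not in A

print(sorted(union))         # [2, 4, 5, 6]
print(sorted(intersection))  # [4, 6]
print(sorted(complement_A))  # [1, 3, 5]
```

Note that the common elements 4 and 6 appear only once in the union, as the definition requires.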
Theoretical probability, also called classical or “a priori” (before the fact) probability: This is the probability assigned to events without making any observations or measurements. It is used when all outcomes are known, particularly when all outcomes are equally likely. The probability of any event, A, when all outcomes are equally likely is written as P(A), where:

$$P(A) = \frac{\text{number of outcomes which result in event } A}{\text{total number of all possible outcomes}}$$

Relative frequency or empirical probability: This type of probability is estimated from a large number of observations. The probability of an event is estimated by the relative frequency with which the event occurs and is calculated by the equation:

$$P(A) = \frac{\text{number of times } A \text{ happened}}{\text{total number of observations}} = \frac{f_A}{f_T}$$

A practical difficulty with this measure of probability is that a large number of observations is required in order to obtain a measure of probability that allows for randomness and the various circumstances in which the event may occur.

For example, suppose we attempt to estimate the probability that scheduled flights on a particular route are on time. Let A be the event of arriving on time. Records for one week show that 18 out of the 24 flights were on time: hence the probability is estimated as P(A) = 18/24 = 0.75. However, this probability is based on one particular week only: perhaps this was a holiday week and traffic was particularly even; perhaps there was a strike of some sort. A more reliable estimate of probability would require a much larger number of flights over a longer period of time, so that the various circumstances that affect air traffic are considered. A more reliable estimate is given by taking 500 records spanning a one-year period. If 450 out of 500 flights were on time, then P(A) = 450/500 = 0.9.

Intuitive or subjective probability: This is the third type of probability, important in business and even in betting.
It is a subjective estimate made by an individual or group of individuals of the likelihood of an event happening, based on knowledge, experience and intuition.

Different ways of stating the probability of an event

Probability is essentially the proportion of times an event is likely to happen. When a probability (proportion) is multiplied by 100, it may be quoted as a percentage. “The odds of passing this exam are 3 to 1” is another way of saying that there are 4 chances, 3 for passing and 1 for failing; so, in terms of probability, the probability of passing = 3/4 = 0.75.

The axioms of probability

The axioms of probability are the “self-evident truths” that set out the fundamental properties of probability and are stated as follows:

Axiom 1. Probability is a measure which lies between 0 and 1, inclusive: 0 ≤ P(A) ≤ 1.
Axiom 2. For any event A, the probability of event A happening plus the probability of event A not happening is one: P(A) + P(Ā) = 1.

Multiplication and addition rules for probability

When we say the event “A AND B” happens, we mean that both A and B happen together at the same time. The probability that both A and B happen at the same time is written as P(A AND B) or P(AB). The rules for calculating P(AB) depend on whether the events A and B are independent, mutually exclusive or dependent.

Independent events

Events are said to be independent when the outcome of one event has no effect on the outcome of the other event. For example, if an experiment involves tossing two coins, then whatever turns up on the first coin has no effect on the outcome of the second coin. The probability of Heads or Tails on the second coin is the same irrespective of whether the first coin showed Heads or Tails. The rule is stated as “the probability that A and B happen together at the same time is the product of the two separate probabilities”: P(AB) = P(A) P(B).
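The multiplication rule for independent events can be checked by listing the sample space of the two-coin experiment and counting outcomes with the classical definition. The sketch below assumes two fair coins, so that all four outcomes are equally likely. The events chosen (A = head on the first coin, B = head on the second coin) and the helper name `prob` are illustrative choices, not taken from the text.

```python
from fractions import Fraction
from itertools import product

# Sample space for tossing two coins: all ordered pairs of H and T.
sample_space = list(product("HT", repeat=2))

def prob(event):
    """Classical probability: favourable outcomes / all possible outcomes."""
    favourable = [outcome for outcome in sample_space if event(outcome)]
    return Fraction(len(favourable), len(sample_space))

p_a = prob(lambda o: o[0] == "H")                    # A: head on the first coin
p_b = prob(lambda o: o[1] == "H")                    # B: head on the second coin
p_ab = prob(lambda o: o[0] == "H" and o[1] == "H")   # A AND B

print(p_a, p_b, p_ab)      # 1/2 1/2 1/4
print(p_ab == p_a * p_b)   # True
```

Using `Fraction` keeps the arithmetic exact, so the comparison P(AB) = P(A) P(B) is not affected by rounding.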
Mutually exclusive events

Events are said to be mutually exclusive if the occurrence of one event excludes or prevents the other occurring. The probability of mutually exclusive events happening together at the same time is zero, P(AB) = 0, because an event that never happens has probability zero.

Dependent events

Events are said to be dependent when the occurrence of one event has an effect on the other. As an example, consider the experiment of drawing two cards from a pack, when the first card is not replaced before drawing the second. If the probability of getting two Aces is required, then let A = an Ace on the first draw and B = an Ace on the second draw. The probability that the first card is an Ace is P(A) = 4/52. If the first card is an Ace, there are three Aces left in a total of 51 cards, so the probability that the second card is an Ace is 3/51. The probability that the second card is an Ace has been changed by the occurrence of the first event. This second probability is calculated on the condition that the first card drawn was an Ace and is written P(B/A). Hence P(B/A) = 3/51. Finally, the probability that both the first card and the second card are Aces is calculated as P(AB) = P(A) P(B/A) = (4/52)(3/51) = 1/221 ≈ 0.0045.

Addition rules

If the event “A OR B” happens, then “A happens or B happens or AB happens”.

Rule 1. P(A ∪ B) = P(A) + P(B) for mutually exclusive events.
Rule 2. P(A ∪ B) = P(A) + P(B) - P(AB) for events that are not mutually exclusive.

Further counting rules: factorials, permutations, combinations

In simple probability calculations it was necessary to count the number of outcomes. In more realistic probability calculations, “counting” can become complex. The counting rules are used in these situations.

Counting rule 1. If an experiment is executed in a sequence of k steps, with n1 possible outcomes at step 1, n2 possible outcomes at step 2, …, nk possible outcomes at step k, then the number of final outcomes is (n1)(n2)…(nk).

Counting rule 2. There are n! arrangements of n different objects, where n factorial is evaluated as

$$n! = n(n-1)(n-2)\cdots(2)(1)$$

If, of these objects, n1 are identical, n2 are identical, …, nk are identical, then the number of different arrangements is

$$\frac{n!}{n_1!\, n_2! \cdots n_k!}$$

Counting rule 3. There are nPr permutations (arrangements) of n different objects, taken r at a time:

$${}^{n}P_{r} = \frac{n!}{(n-r)!}$$

Counting rule 4. There are nCr combinations of n different objects, taken r at a time, where

$${}^{n}C_{r} = \frac{n!}{r!\,(n-r)!}$$

JOINT, MARGINAL AND CONDITIONAL PROBABILITY

Calculating joint and marginal probabilities

The simplest way to illustrate the ideas of joint, marginal and conditional probabilities is through a contingency table. A contingency table is a two-way cross-tabulation of counts for every combination of levels of two variables; the data in contingency tables are always count data. A marginal event is an event associated with only one variable.

Example of a contingency table

| Maths \ Economics | Merit | Pass | Fail | Maths totals |
|---|---|---|---|---|
| Merit | 120 | 100 | 20 | 240 |
| Pass | 10 | 190 | 10 | 210 |
| Fail | 0 | 30 | 20 | 50 |
| Economics totals | 130 | 320 | 50 | 500 |

If the numbers are large, the frequencies in the contingency table may be converted to probabilities using the relative frequency definition

$$P(A) = \frac{f_A}{f_T}$$

The joint probabilities are calculated by dividing each joint frequency in the body of the table by the total frequency f_T. The totality of these probabilities constitutes a “joint probability distribution” (a probability distribution is a list of every possible outcome with the corresponding probability). The marginal probabilities are calculated by dividing the marginal frequencies by the total frequency.
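The conversion from frequencies to joint and marginal probabilities can be sketched in a few lines of Python. The counts are those of the Maths and Economics contingency table in the text. The nested-dictionary layout and the variable names are assumptions made for this illustration only.

```python
grades = ["Merit", "Pass", "Fail"]

# Counts from the contingency table: outer key = Maths grade (rows),
# inner key = Economics grade (columns).
counts = {
    "Merit": {"Merit": 120, "Pass": 100, "Fail": 20},
    "Pass":  {"Merit": 10,  "Pass": 190, "Fail": 10},
    "Fail":  {"Merit": 0,   "Pass": 30,  "Fail": 20},
}

# Total frequency f_T: the sum of every cell in the body of the table.
total = sum(sum(row.values()) for row in counts.values())

# Joint probabilities: each joint frequency divided by f_T.
joint = {m: {e: counts[m][e] / total for e in grades} for m in grades}

# Marginal probabilities: row sums (Maths) and column sums (Economics) over f_T.
marginal_maths = {m: sum(counts[m].values()) / total for m in grades}
marginal_econ = {e: sum(counts[m][e] for m in grades) / total for e in grades}

for m in grades:
    print(m, [round(joint[m][e], 2) for e in grades], round(marginal_maths[m], 2))
print("Economics", [round(marginal_econ[e], 2) for e in grades])
```

Each set of marginal probabilities sums to 1, as does the full set of joint probabilities, which is a quick check that the table has been converted correctly.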
Joint and marginal probabilities

| Maths \ Economics | Merit | Pass | Fail | Maths totals |
|---|---|---|---|---|
| Merit | 0.24 | 0.20 | 0.04 | 0.48 |
| Pass | 0.02 | 0.38 | 0.02 | 0.42 |
| Fail | 0 | 0.06 | 0.04 | 0.10 |
| Economics totals | 0.26 | 0.64 | 0.10 | 1.00 |

Conditional probability and conditional probability distributions

The equation for conditional probability is

$$P(B/A) = \frac{P(AB)}{P(A)}$$

Conditional probabilities may be calculated from the contingency table by treating the row or column to which the condition refers as the reduced sample space. The required conditional probability is calculated from the reduced sample space. It is important to realize that a given condition is additional information. This additional information is used to reduce the sample space from that of the overall table to the group to which the condition refers. For example, given a Merit in Maths, the reduced sample space is the first row of the table, so P(Merit in Economics / Merit in Maths) = 0.24/0.48 = 0.5.

A conditional probability distribution is the set of probabilities for all the outcomes that satisfy a given condition.

BAYES’ RULE

Conditional probability and Bayes’ rule

The final application of conditional probability is Bayes’ rule. The applications of Bayes’ rule in this section examine the inferences that can be drawn from tests for conditions that are rare (viruses, diseases, presence of performance-enhancing drugs, errors in accounts, bugs in software, etc.) when the tests are less than 100% reliable. With Bayes’ rule we will calculate the probability that a rare condition is present given that the diagnostic test for the condition is positive.

EXAMPLE

An importer of smoke alarms has three suppliers: A1, A2 and A3. 20% of the smoke alarms are supplied by A1, 70% by A2 and 10% by A3. From historical data it has been established that the percentage of faulty alarms is 12% from supplier A1, 3% from A2 and 2% from A3.

a) If the total number of alarms is 1000, present the above information in terms of frequencies. Calculate the total number of faulty alarms.
b) Calculate the probability of selecting a faulty alarm. What is the reduced sample space for faulty alarms?
c) Given that a customer returns a faulty alarm, calculate the probability that the alarm was supplied by A1, A2, A3.

a) Number of alarms from supplier A1 = 200
Number of alarms from supplier A2 = 700
Number of alarms from supplier A3 = 100
Total number of faulty alarms = (200 × 0.12) + (700 × 0.03) + (100 × 0.02) = 24 + 21 + 2 = 47.

b) The probability of a faulty alarm is 47/1000 = 0.047. The condition “given that an alarm is faulty” reduces the sample space to the set of faulty alarms.

| Supplier | No. of alarms | No. of faulty alarms |
|---|---|---|
| A1 | 200 | 24 |
| A2 | 700 | 21 |
| A3 | 100 | 2 |
| Total | 1000 | 47 |

c) Given that the returned alarm is faulty, the probability that it was supplied by A1, A2, A3 is calculated from this reduced sample space:
P(A1/F) = 24/47 = 0.5106
P(A2/F) = 21/47 = 0.4468
P(A3/F) = 2/47 = 0.0426

Bayes’ rule: calculations

The probability of selecting a faulty alarm is called the total probability. An alarm is faulty if it is (from supplier 1 AND faulty) OR (from supplier 2 AND faulty) OR (from supplier 3 AND faulty):

F = (A1 AND F) OR (A2 AND F) OR (A3 AND F)

Hence, taking probabilities,

P(F) = P(A1 AND F) + P(A2 AND F) + P(A3 AND F) … addition rule
P(F) = P(A1) P(F/A1) + P(A2) P(F/A2) + P(A3) P(F/A3) … multiplication rule

This is the sum of the probabilities in row 3 of the table below.

Calculate the “revised” probability conditional on the given characteristic; that is, calculate a. P(A1/F); b. P(A2/F); c. P(A3/F). These calculations are based on the equation

$$P(A/B) = \frac{P(AB)}{P(B)}$$

Hence

$$P(A_i/F) = \frac{P(A_i F)}{P(F)} = \frac{P(A_i)\,P(F/A_i)}{P(F)}, \qquad i = 1, 2, 3$$

Hence, divide each probability from row 3 of the table below by the total probability P(F).
$$P(A_1/F) = \frac{P(A_1 F)}{P(F)} = \frac{0.024}{0.047} = 0.5106$$

$$P(A_2/F) = \frac{P(A_2 F)}{P(F)} = \frac{0.021}{0.047} = 0.4468$$

$$P(A_3/F) = \frac{P(A_3 F)}{P(F)} = \frac{0.002}{0.047} = 0.0426$$

| Supplier | A1 | A2 | A3 | Total |
|---|---|---|---|---|
| Probability from supplier Ai | P(A1) = 0.2 | P(A2) = 0.7 | P(A3) = 0.1 | 1 |
| Probability faulty (given supplier Ai) | P(F/A1) = 0.12 | P(F/A2) = 0.03 | P(F/A3) = 0.02 | |
| Probability “from supplier Ai and faulty” | P(A1)P(F/A1) = 0.2 × 0.12 = 0.024 = P(A1 AND F) | P(A2)P(F/A2) = 0.7 × 0.03 = 0.021 = P(A2 AND F) | P(A3)P(F/A3) = 0.1 × 0.02 = 0.002 = P(A3 AND F) | P(F) = 0.047 |

Bayes’ rule is a generalization of the conditional probabilities above:

$$P(A_i/F) = \frac{P(A_i F)}{P(F)}$$

$$P(A_i/F) = \frac{P(A_i)\,P(F/A_i)}{P(A_1)P(F/A_1) + P(A_2)P(F/A_2) + P(A_3)P(F/A_3)}$$

$$P(A_i/F) = \frac{P(A_i)\,P(F/A_i)}{\sum_{j=1}^{3} P(A_j)\,P(F/A_j)}$$
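As a check on the smoke alarm example, the Bayes' rule calculation can be written as a short Python function. This is a minimal sketch: the function name `bayes_posteriors` and the dictionary layout are hypothetical choices made here, and the function assumes that the suppliers are mutually exclusive and that their probabilities sum to 1.

```python
def bayes_posteriors(priors, likelihoods):
    """Return (P(F), {Ai: P(Ai/F)}) from P(Ai) and P(F/Ai).

    Assumes the Ai are mutually exclusive and their priors sum to 1.
    """
    # Multiplication rule: P(Ai AND F) = P(Ai) * P(F/Ai)
    joint = {a: priors[a] * likelihoods[a] for a in priors}
    # Addition rule (total probability): P(F) = sum of P(Ai AND F)
    p_f = sum(joint.values())
    # Bayes' rule: P(Ai/F) = P(Ai AND F) / P(F)
    posteriors = {a: joint[a] / p_f for a in joint}
    return p_f, posteriors

priors = {"A1": 0.2, "A2": 0.7, "A3": 0.1}         # P(Ai), from the example
likelihoods = {"A1": 0.12, "A2": 0.03, "A3": 0.02}  # P(F/Ai), from the example

p_faulty, posteriors = bayes_posteriors(priors, likelihoods)
print(round(p_faulty, 3))  # 0.047
for supplier, p in posteriors.items():
    print(supplier, round(p, 4))  # A1 0.5106, A2 0.4468, A3 0.0426
```

Although supplier A1 provides only 20% of the alarms, it accounts for just over half of the faulty ones, because its fault rate is much higher than that of the other two suppliers.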