Process State

... Thread Libraries Thread library provides programmer with API for creating and managing threads Two primary ways of implementing Library entirely in user space Kernel-level library supported by the OS ...

Course Home

... Process Synchronization [5L]: background, critical section problem, critical region, synchronization hardware, classical problems of synchronization, semaphores. Deadlocks [4L]: system model, deadlock characterization, methods for handling deadlocks, deadlock prevention, deadlock ...

Chapter 4: Threads

... Responsiveness – may allow continued execution if part of process is blocked, especially important for user interfaces ...

Threads - Wikispaces

... Each LWP is attached to a kernel thread How many LWPs to create? Scheduler activations provide upcalls - a communication mechanism from the kernel to the upcall handler in the thread library ...

Processes

... – Iterative server: the server itself handles the request and, if necessary, returns a response to the requesting client. – Concurrent server: it does not handle the request itself, but passes it to a separate thread or another process, after which it immediately waits for the next incoming request. ...
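The difference between the two server styles can be sketched in Python. This is only an illustration under stated assumptions: `handle`, `iterative_server`, and `concurrent_server` are hypothetical names, requests are plain strings rather than network connections, and the concurrent version joins its threads only so the demo can collect the responses.

```python
import threading


def handle(request):
    # Hypothetical request handler: it simply upper-cases the payload.
    return request.upper()


def iterative_server(requests):
    # Iterative: the server itself handles each request before
    # looking at the next one.
    return [handle(r) for r in requests]


def concurrent_server(requests):
    # Concurrent: the server passes each request to a separate thread
    # and immediately moves on to the next incoming request.
    responses = [None] * len(requests)

    def worker(index, request):
        # Each worker writes only to its own slot, so no lock is needed.
        responses[index] = handle(request)

    workers = []
    for index, request in enumerate(requests):
        t = threading.Thread(target=worker, args=(index, request))
        t.start()
        workers.append(t)

    # A real server would keep accepting requests; the join here exists
    # only so this sketch can return the collected responses.
    for t in workers:
        t.join()
    return responses
```

Both functions return the same responses for the same input; what differs is whether the dispatching loop waits for each request to finish before taking the next.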

Figure 15.1 A distributed multimedia system

... may allow a program to continue running even if part of it is blocked or is performing a lengthy operation, thereby increasing responsiveness to the user. • Resource Sharing: Threads share the memory and the resources of the process to which they belong. • Economy: Allocating memory and resources fo ...

Threads

... Responsiveness – may allow continued execution if part of process is blocked, especially important for user interfaces ...

Processes

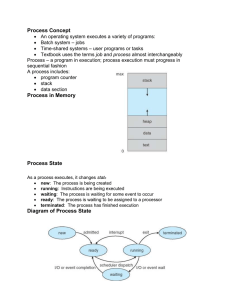

... Process Concept Process Scheduling Operations on Processes Interprocess Communication Communication in Client-Server Systems (Reading Materials) ...

Multicores

... 8, or 16 lanes per slot. Each lane has two pairs of wires from the switch to the device: one pair sends data, and the other pair receives data. This determines the transfer rate of the data. These lanes fan out from the switch directly to the devices where the data is to go. The PCI-E is a replac ...

Scalable Apache for Beginners

... • resource pool: application-level data structure to allocate and cache resources – allocate and free memory in the application instead of using a system call – cache files, URL mappings, recent responses – limits critical functions to a small, well-tested part of code ...

Operating Systems ECE344

... • Safari: multithreading (no longer the case in the latest version) • one webpage can crash entire Safari ...

Chapter 4

... context switching among threads is faster than among processes. • Creating a new thread also requires less overhead than spawning a new process, in part because the system does not need to create an entirely new address space. Therefore, multitasking based on threads is more efficient than multita ...

Operating Systems I: Chapter 4

... A traditional or heavyweight process is equal to a task with one thread ...

Threads and Events

... While a thread is running, it may request a resource, e.g. open a file or connect to a database. This is done by calls to the operating system. These calls may take a significant amount of time for a couple of reasons. The resource may be slow by nature, e.g. reading from disk drives takes thousands ...

the thread - Bilkent University Computer Engineering Department

... Re-entrancy Thread-specific data ...

A1_OS Review

... several processes/threads at a time on a single CPU. The OS keeps several jobs in memory simultaneously. It selects a job from the ready state and starts executing it. When that job needs to wait for some event the CPU is switched to another job. Primary objective: eliminate CPU idle time Time shari ...

Lecture #6

... Benefits Responsiveness: Continue even if part of an application is blocked due to I/O ...

Thread (computing)

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. Multiple threads can exist within the same process, executing concurrently (one starting before others finish) and share resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its instructions (executable code) and its context (the values of its variables at any given moment).

On a single processor, multithreading is generally implemented by time slicing (as in multitasking), and the central processing unit (CPU) switches between different software threads. This context switching generally happens frequently enough that the user perceives the threads or tasks as running at the same time (in parallel). On a multiprocessor or multi-core system, multiple threads can be executed in parallel (at the same instant), with every processor or core executing a separate thread simultaneously; on a processor or core with hardware threads, separate software threads can also be executed concurrently by separate hardware threads.

Threads made an early appearance in OS/360 Multiprogramming with a Variable Number of Tasks (MVT) in 1967, in which they were called "tasks". Process schedulers of many modern operating systems directly support both time-sliced and multiprocessor threading, and the operating system kernel allows programmers to manipulate threads by exposing required functionality through the system call interface. Some threading implementations are called kernel threads, whereas lightweight processes (LWP) are a specific type of kernel thread that share the same state and information. Furthermore, programs can have user-space threads when threading with timers, signals, or other methods to interrupt their own execution, performing a sort of ad hoc time-slicing.
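
The point that threads of one process share its memory can be illustrated with a minimal Python sketch. The names `counter`, `add`, and `run` are hypothetical and chosen only for this example; the lock is there because an increment of a shared variable is a read-modify-write sequence that should not be interleaved between threads.

```python
import threading

counter = 0                 # shared by every thread in this process
lock = threading.Lock()     # protects the read-modify-write on counter


def add(n):
    # Each thread increments the same global variable n times.
    global counter
    for _ in range(n):
        with lock:
            counter += 1


def run(num_threads=4, n=1000):
    # Start several threads that all update the shared counter,
    # wait for them to finish, and return the final value.
    global counter
    counter = 0
    threads = [threading.Thread(target=add, args=(n,))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

Because all threads see the same `counter`, the value returned by `run` is the sum of every thread's increments. Separate processes would each have their own copy of the variable instead.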