Explanation-Based Generalization: A Unifying View

... examples, they also require that the learner possess knowledge of the domain and of the concept under study. It seems clear that for a large number of generalization problems encountered by intelligent agents, this required knowledge is available to the learner. In this paper we present and analyze ...

... examples, they also require that the learner possess knowledge of the domain and of the concept under study. It seems clear that for a large number of generalization problems encountered by intelligent agents, this required knowledge is available to the learner. In this paper we present and analyze ...

Time Series Prediction and Online Learning

... our knowledge, the only exception is the recent work of Kuznetsov and Mohri (2015), who analyzed the general non-stationary and non-mixing scenario and gave high-probability generalization bounds for this framework. The on-line learning scenario requires no distributional assumption. In on-line lear ...

... our knowledge, the only exception is the recent work of Kuznetsov and Mohri (2015), who analyzed the general non-stationary and non-mixing scenario and gave high-probability generalization bounds for this framework. The on-line learning scenario requires no distributional assumption. In on-line lear ...

Integrating Planning and Learning: The PRODIGY Architecture

... to describe techniques for learning control rules.) This simplified domain represents operations of a drill press. The drill press uses two types of drill bits: a spot drill and a twist drill. A spot drill is used to make a small spot on the surface of a part. This spot is needed to guide the moveme ...

... to describe techniques for learning control rules.) This simplified domain represents operations of a drill press. The drill press uses two types of drill bits: a spot drill and a twist drill. A spot drill is used to make a small spot on the surface of a part. This spot is needed to guide the moveme ...

Expert systems

... Expert systems (In a classical sense these are systems that contain expert knowledge, obtained through a series of conversations between the knowledge-base engineer and a domain expert) ...

... Expert systems (In a classical sense these are systems that contain expert knowledge, obtained through a series of conversations between the knowledge-base engineer and a domain expert) ...

Preference Learning with Gaussian Processes

... classification problem. Based on our recent work on ordinal regression (Chu & Ghahramani, 2004), we further develop the Gaussian process algorithm for preference learning tasks. Although the basic techniques we used in these two works are similar, the formulation proposed in this paper is new, more g ...

... classification problem. Based on our recent work on ordinal regression (Chu & Ghahramani, 2004), we further develop the Gaussian process algorithm for preference learning tasks. Although the basic techniques we used in these two works are similar, the formulation proposed in this paper is new, more g ...

Structured machine learning: the next ten years

... Automatic bias-revision: The last few years have seen the emergence within ILP of systems which use feedback from the evaluation of sections of the hypothesis space (DiMaio and Shavlik 2004), or related hypothesis spaces (Reid 2004), to estimate promising areas for extending the search. These approa ...

... Automatic bias-revision: The last few years have seen the emergence within ILP of systems which use feedback from the evaluation of sections of the hypothesis space (DiMaio and Shavlik 2004), or related hypothesis spaces (Reid 2004), to estimate promising areas for extending the search. These approa ...

Learning the Matching Function

... The matching problems, such as dense stereo reconstruction or optical flow, typically uses as an underlying similarity measure between pixels the distance between patchfeatures surrounding the pixels. In many scenarios, for examples when high quality rectified images are available, this approach is ...

... The matching problems, such as dense stereo reconstruction or optical flow, typically uses as an underlying similarity measure between pixels the distance between patchfeatures surrounding the pixels. In many scenarios, for examples when high quality rectified images are available, this approach is ...

error backpropagation algorithm1

... Since the weights are adjusted in proportion to the f’(net), the weights that are connected to the midrange are changed the most. Since the error signals are computed with f’(net) as multiplier, the back propagated errors are large for only those neurons which are in the steep thresholding mode. The ...

... Since the weights are adjusted in proportion to the f’(net), the weights that are connected to the midrange are changed the most. Since the error signals are computed with f’(net) as multiplier, the back propagated errors are large for only those neurons which are in the steep thresholding mode. The ...

Intelligent Agent for Information Extraction from Arabic Text without

... More formally, each feature Xi has a discrete binary value of 0 or 1. If Xi = 0, this means the word i has not been found. However, these terms need to be refined (using Stop and Stemming techniques) since they are mentioned in the domain knowledge ...

... More formally, each feature Xi has a discrete binary value of 0 or 1. If Xi = 0, this means the word i has not been found. However, these terms need to be refined (using Stop and Stemming techniques) since they are mentioned in the domain knowledge ...

A Bucket Elimination Approach for Determining Strong

... uncertainty will be able to satisfy all required constraints is by verifying its strong controllability, i.e., checking whether there is a precomputable assignment to the controllable variables that is robust to all possible outcomes of uncontrollable events. However, the algorithm for determining s ...

... uncertainty will be able to satisfy all required constraints is by verifying its strong controllability, i.e., checking whether there is a precomputable assignment to the controllable variables that is robust to all possible outcomes of uncontrollable events. However, the algorithm for determining s ...

Content identification: machine learning meets coding 1

... A set of training instances are given which contain features x(i) ∈ RN and their labels g(x(i))’s belonging to the M classes. These labels are assumed to be generated through an unknown hypothetical mapping function g : RN 7−→ {1, 2, ..., M } that we want to learn and approximate based on the given ...

... A set of training instances are given which contain features x(i) ∈ RN and their labels g(x(i))’s belonging to the M classes. These labels are assumed to be generated through an unknown hypothetical mapping function g : RN 7−→ {1, 2, ..., M } that we want to learn and approximate based on the given ...

pdf

... freedom to the learner makes it much harder to prove lower bounds in this model. Concretely, it is not clear how to use standard reductions from NP hard problems in order to establish lower bounds for improper learning (moreover, Applebaum et al. [2008] give evidence that such simple reductions do n ...

... freedom to the learner makes it much harder to prove lower bounds in this model. Concretely, it is not clear how to use standard reductions from NP hard problems in order to establish lower bounds for improper learning (moreover, Applebaum et al. [2008] give evidence that such simple reductions do n ...

Decentralized reinforcement learning control of a robotic manipulator

... results that is beneficial for the system. Conflicting goals, interagent communication, and incomplete agent views over the process, are issues that may also play a role. ...

... results that is beneficial for the system. Conflicting goals, interagent communication, and incomplete agent views over the process, are issues that may also play a role. ...

More data speeds up training time in learning halfspaces over sparse vectors,

... freedom to the learner makes it much harder to prove lower bounds in this model. Concretely, it is not clear how to use standard reductions from NP hard problems in order to establish lower bounds for improper learning (moreover, Applebaum et al. [2008] give evidence that such simple reductions do n ...

... freedom to the learner makes it much harder to prove lower bounds in this model. Concretely, it is not clear how to use standard reductions from NP hard problems in order to establish lower bounds for improper learning (moreover, Applebaum et al. [2008] give evidence that such simple reductions do n ...

X t

... the algorithm to decide which weights are small?? This approach is just naïve. It overlooks that classifiers must have good generalization properties. A large network can result in small errors for the training set, since it can learn the particular details of the training set. On the other hand, it ...

... the algorithm to decide which weights are small?? This approach is just naïve. It overlooks that classifiers must have good generalization properties. A large network can result in small errors for the training set, since it can learn the particular details of the training set. On the other hand, it ...

lift - Hong Kong University of Science and Technology

... class, which isn't a possible annotation) 7:10pm subject timestamps end of general_exercise 9:25pm subject timestamps beginning of watching_tv 11:30pm subject timestamps end of watching_tv 12:01am subject timestamps beginning of lying_down 7:30am subject timestamps end of lying_down and ...

... class, which isn't a possible annotation) 7:10pm subject timestamps end of general_exercise 9:25pm subject timestamps beginning of watching_tv 11:30pm subject timestamps end of watching_tv 12:01am subject timestamps beginning of lying_down 7:30am subject timestamps end of lying_down and ...

Well-Posed Learning Problems

... What is the best strategy for choosing a useful next training experience? What is the best way to reduce the learning task to one or more function approximation systems? How can the learner automatically alter its representation to represent and learn the target function? ...

... What is the best strategy for choosing a useful next training experience? What is the best way to reduce the learning task to one or more function approximation systems? How can the learner automatically alter its representation to represent and learn the target function? ...

mining on car database employing learning and clustering algorithms

... the existing volume of data which is quite large.Data mining algorithms are of various types of which clustering algorithms are also one of the type .Basically, Clustering can be considered the most important unsupervised learning problem; so, it deals with finding a structure in a collection of unl ...

... the existing volume of data which is quite large.Data mining algorithms are of various types of which clustering algorithms are also one of the type .Basically, Clustering can be considered the most important unsupervised learning problem; so, it deals with finding a structure in a collection of unl ...

Learning Macro-Actions in Reinforcement Learning

... McCallum, 1995; Hansen, Barto & Zilberstein, 1997; Burgard et aI., 1998). In this work we are not specially concerned with non-Markov problems. However the results in this paper suggest that some methods for partially observable MDP could be applied to MDPs and result in faster learning. The difficu ...

... McCallum, 1995; Hansen, Barto & Zilberstein, 1997; Burgard et aI., 1998). In this work we are not specially concerned with non-Markov problems. However the results in this paper suggest that some methods for partially observable MDP could be applied to MDPs and result in faster learning. The difficu ...

Dagstuhl-Seminar

... results show that it can very reliably escape local optima that trap the standard EM algorithm. Results also show that local optima can have a severe impact on performance on real problems such as digit classification and image compression, which SMEM can overcome. The second problem is model select ...

... results show that it can very reliably escape local optima that trap the standard EM algorithm. Results also show that local optima can have a severe impact on performance on real problems such as digit classification and image compression, which SMEM can overcome. The second problem is model select ...

ConditionalRandomFields2 - CS

... • Data was generated from a simple HMM which encodes a noisy version of the finite-state network (“rib/ rob”) • Each state emits its designated symbol with probability 29/32 and any of the other symbols with probability 1/32 • We train both an MEMM and a CRF • The observation features are simply the ...

... • Data was generated from a simple HMM which encodes a noisy version of the finite-state network (“rib/ rob”) • Each state emits its designated symbol with probability 29/32 and any of the other symbols with probability 1/32 • We train both an MEMM and a CRF • The observation features are simply the ...

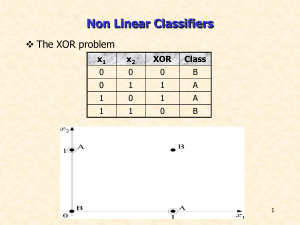

Artificial Intelligence

... is performed. The blue line represents the error that drops through time (iterations). – Observe how there are now two line to separate the categories (from the two hidden neurons) – The input pairs ([0,0], [0,1], [1,0], [1,1]) are classified correctly after the training. ...

... is performed. The blue line represents the error that drops through time (iterations). – Observe how there are now two line to separate the categories (from the two hidden neurons) – The input pairs ([0,0], [0,1], [1,0], [1,1]) are classified correctly after the training. ...

Slide 1

... Universal approximator – applicable to both classification and regression problems Learning – weights adjustments (e.g. back-propagation) ...

... Universal approximator – applicable to both classification and regression problems Learning – weights adjustments (e.g. back-propagation) ...

Indexing Time Series

... Disadvantages of Transformation Rules • Subsequent computations (such as the indexing problem) become more complicated • We will see later why! ...

... Disadvantages of Transformation Rules • Subsequent computations (such as the indexing problem) become more complicated • We will see later why! ...