A general agnostic active learning algorithm

... in terms of a parameter called the disagreement coefficient. Another thread of work focuses on agnostic learning of thresholds for data that lie on a line; in this case, a precise characterization of label complexity can be given [4, 5]. These previous results either make strong distributional assum ...

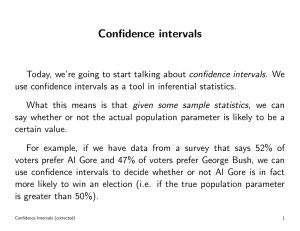

Lecture 33 - Confidence Intervals Proportion

... have no idea how close we can expect them to be to the parameter. That is, we have no idea of how large the error may be. ...

An unpublished statistics book

... There is something called “The Rule of 72” regarding interest rates. If you want to determine how many years it would take for your money to double if it were invested at a particular interest rate, compounded annually, divide the interest rate into 72 and you’ll have a close approximation. To take ...
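The approximation described above can be checked against the exact doubling time for annual compounding, which is ln 2 / ln(1 + r/100) for a rate of r percent. The following is a minimal sketch; the function names are illustrative and do not come from the book.

```python
import math


def doubling_time_rule_of_72(rate_percent: float) -> float:
    """Approximate years to double: divide the interest rate into 72."""
    return 72.0 / rate_percent


def doubling_time_exact(rate_percent: float) -> float:
    """Exact years to double under annual compounding at rate_percent."""
    return math.log(2.0) / math.log(1.0 + rate_percent / 100.0)


# Compare the approximation with the exact value for a few sample rates.
for rate in (2, 4, 6, 8, 12):
    approx = doubling_time_rule_of_72(rate)
    exact = doubling_time_exact(rate)
    print(f"{rate:>2}%: rule of 72 = {approx:6.2f} years, exact = {exact:6.2f} years")
```

The two values agree most closely near 8 percent, and the gap widens for rates far from that.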

PROBABILITY THEORY - PART 1 MEASURE THEORETICAL

... disjoint, then $P(\bigcup_n A_n) = \sum_n P(A_n)$ (countable additivity) and such that $P(\Omega) = 1$. $P(A)$ is called the probability of $A$. By definition, we talk of probabilities only of measurable sets. It is meaningless to ask for the probability of a subset of $\Omega$ that is not measurable. Typically, the sigma-algebra will ...

II. Probability - UCLA Cognitive Systems Laboratory

... to have more compact representations of these factors than representations based on tables [Zhang and Poole 1996], leading to a more efficient implementation of the elimination process. One example of this would be the use of Algebraic Decision Diagrams [R.I. Bahar et al. 1993] and associated operat ...

Consequences of the Log Transformation

... inference is that our inferences are being made about the median in the original scale rather than the mean. When comparing two (or more) populations where the variable of interest has a right-skewed distribution, the log transformation is again frequently used. The consequences of the log transformation on ...
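To illustrate why the back-transformed result speaks to the median rather than the mean: exponentiating the mean of the logged values gives the geometric mean, which matches the original-scale median when the logged data are symmetric (for example, under a log-normal model, which is an assumption here). A small sketch with made-up data and a hypothetical helper name:

```python
import math
import statistics


def back_transformed_mean(values):
    """Average on the log scale, then exponentiate back to the original scale."""
    logs = [math.log(v) for v in values]
    return math.exp(statistics.fmean(logs))


# Made-up right-skewed sample whose logs are symmetric about log(10).
sample = [1.0, 10.0, 100.0]

print("arithmetic mean:      ", statistics.fmean(sample))
print("median:               ", statistics.median(sample))
print("back-transformed mean:", back_transformed_mean(sample))
```

For this sample the back-transformed value sits at the median, well below the arithmetic mean, which the large observation pulls upward.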
