Step8-9-10

... •XML tags enable access of meta data across any type of data •The initial tagging of all meta data with XML storage through standardized categorization and tagging of meta tags is a manual and laborious process. Plus, data components. XML tagging cannot be used for all meta data. •Meta data never ha ...

DATA WAREHOUSING AND DATA MINING

... Basic data warehouse (DW) implementation phases are [1]: current situation analysis; selecting data interesting for analysis out of the existing database; filtering and reducing data; extracting data into a staging database; selecting the fact table, dimensional tables and appropriate schemes; Selecti ...

Unified Query for Big Data Management Systems

... The value of unified query to enterprises that wish to extract maximum benefit from existing data warehouses and the growing set of Big Data tools is clear. The ability to exploit existing skills and processes to manage all data saves tremendous cost and can directly speed innovation. However, to tr ...

Database Management

... packages as well as many minicomputer and mainframe systems • Data elements within the database are stored in the form of simple tables which are related if they contain common fields • DBMS packages based on the relational model can link data elements from various tables to provide information to u ...

Ultimate Skills Checklist for Your First Data Analyst Job

... Database systems (SQL-based and NoSQL-based) - Databases act as a central hub to store information ...

Big Data SQL Data Sheet

... enormous possibilities of Big Data, there can also be enormous complexity. Integrating Big Data systems to leverage these vast new data resources with existing information estates can be challenging. Valuable data may be stored in a system separate from where the majority of business-critical operat ...

bigdatainhealthcare

... plenty today without big data, including meeting most of their analytics and reporting needs. We haven’t come close to stretching the limits of what healthcare analytics can accomplish with traditional relational databases—and using these databases effectively is a more valuable focus than worrying ...

data warehousing and olap technology

... provides facilities for summarization and aggregation, and stores and manages information at different levels of granularity. These features make the data easier to use in informed decision making. An OLAP system typically adopts either a star or a snowflake model and a subject-oriented database desig ...

Chapter 13

... required to manage all of the related records. It is no wonder that Wal-Mart has one of the largest databases of any business organization in the world. The Wal-Mart database continually grows with new transactions. Some estimate that Wal-Mart adds 1 billion rows of data per day. In addition to the ...

NDS Database Housekeeping Document

... The three tables stated above contain data regarding messages in the Calls and Messages panel and the NDS deals respectively. As there are numerous messages going into these three tables, the number of records in them affects the response time of the database. The higher the number of records ...

Data Representation

... storage is discussed in Chapter 10. All data (and remember that program is treated as data) is stored as a collection of files managed by the operating file-system. The file system provides a uniform, logical view of information storage. The operating system abstracts from the physical properties of ...

Data Warehouse Technologies

... Tool should only access to objects which are used for analysis Consistent reporting performance (performance should not decrease tremendously with the increase of dimensions) Client/Server Architecture Each dimension should be equally structural and operational. Dynamic Matrix handling Multi user su ...

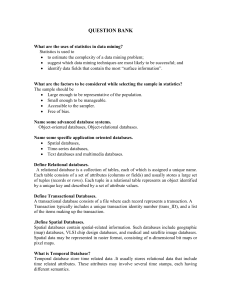

Question Bank

... Data quality is important in a data warehouse environment to facilitate decision-making. In order to support decision-making, the stored data should provide information from a historical perspective and in a summarized manner. How can data visualization help in decision-making? Data visualization h ...

Data Preprocessing Techniques for Data Mining

... Data pre-processing is an often neglected but important step in the data mining process. The phrase "Garbage In, Garbage Out" is particularly applicable to data mining and machine learning. Data gathering methods are often loosely controlled, resulting in out-of-range values (e.g., Income: -100), imp ...

Ch 4 - Data Resource

... PRINCIPLES IN MANAGING DATA 3. Application Software should be separate from the database • Application independence = separation or decoupling of data from application systems - Raw data captured and stored - When needed, data are retrieved but not consumed - Data are transferred to other parts of ...

Resources | 1010data

... Given the uniqueness of 1010data, it is interesting that the genesis and evolution of the technology has been primarily customer-driven. The software engineers, Joel Kaplan and Sandy Steier, who built 1010data, entered the software industry from the IT user’s side. Originally they were employed by t ...

GDT-ETL Part 1

... Each record in a file consists of 1 or more fields and it may contain hierarchy of sub records as denoted by the OCCURS clause. Each field has a location in a record as well as an associated COBOL data type. Group name and redefines are ignored. ...

Why Be Normal? Constructing SAS Based Tools to Facilitate Database Design

... The first issue of importance as we make this shift, an issue that many ad hoc users of SAS have never really felt the need to consider before now, is what exactly constitutes a database. For analysis purposes, most SAS data set libraries contained whatever data sets the analyst was working on: subs ...

Data warehouse on Manpower Employment for Decision Support

... companies to extract and analyze useful information from very large database for decision making. Real-time and active database technologies are used in controlling industrial and manufacturing processes. Furthermore, database search techniques are being applied to the World Wide Web (WWW) to improv ...

View/Download-PDF - International Journal of Computer Science

... Data warehouses exist to facilitate complex, data-intensive and frequent ad hoc queries [11]. The purpose of the data warehouse is mostly to integrate corporate data in an organisation. It contains the "single version of truth" for the organization that has been carefully constructed from data stored ...

How to address top problems in test data management

... compromising test data privacy in the testing process. Data masking goes by various names, including data obfuscation, de-identification, depersonalisation, scrubbing, scrambling or cleansing. By any name, it allows you to hide, remove or randomize sensitive information. However, there is a catch. W ...

Data transfer, storage and analysis for data mart enlargement

... serves to the support of the objective and qualified decision making and management of a company. That is the reason why it has been applied in many different establishments. Each information system goes through a certain development during its life cycle. Very often the requests for the enlargement ...

CS163_Topic3

... – may not be reasonable if a large quantity of instances of an ordered_list are formed – is not required if the size of the data is known up-front at compile time (and is the same for each instance of the class) ...

Database Vs Data Warehouse

... slow, special ad-hoc, mainly in the case of unpredicted criteria, while in data warehouses is usually. These queries, specific to economic analysis, may significantly compromise the performance of the operational system, due to the lack of predictable indexes, as is the case of data warehouses. 3) A ...

DataMIME: Component Based Data mining System Architecture

... implemented using Java. Another distributed data mining suite based on Java is PaDDMAS [RWL+00], a component-based tool set that integrates pre-developed or custom packages (that can be sequential or parallel) using a dataflow approach. JAM [SPT97] is an agent-based distributed data mining system th ...

Big data

Big data is a broad term for data sets so large or complex that traditional data processing applications are inadequate. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, and information privacy. The term often refers simply to the use of predictive analytics or certain other advanced methods to extract value from data, and seldom to a particular size of data set. Accuracy in big data may lead to more confident decision making, and better decisions can mean greater operational efficiency, cost reduction and reduced risk.

Analysis of data sets can find new correlations, to "spot business trends, prevent diseases, combat crime and so on." Scientists, business executives, practitioners of media and advertising, and governments alike regularly meet difficulties with large data sets in areas including Internet search, finance and business informatics. Scientists encounter limitations in e-Science work, including meteorology, genomics, connectomics, complex physics simulations, and biological and environmental research.

Data sets grow in size in part because they are increasingly being gathered by cheap and numerous information-sensing mobile devices, aerial (remote sensing) technologies, software logs, cameras, microphones, radio-frequency identification (RFID) readers, and wireless sensor networks. The world's technological per-capita capacity to store information has roughly doubled every 40 months since the 1980s; as of 2012, 2.5 exabytes (2.5×10^18 bytes) of data were created every day. The challenge for large enterprises is determining who should own big data initiatives that straddle the entire organization.

Work with big data is necessarily uncommon; most analysis is of "PC size" data, on a desktop PC or notebook that can handle the available data set. Relational database management systems and desktop statistics and visualization packages often have difficulty handling big data. The work instead requires "massively parallel software running on tens, hundreds, or even thousands of servers". What is considered "big data" varies depending on the capabilities of the users and their tools, and expanding capabilities make big data a moving target. Thus, what is considered "big" one year becomes ordinary later. "For some organizations, facing hundreds of gigabytes of data for the first time may trigger a need to reconsider data management options. For others, it may take tens or hundreds of terabytes before data size becomes a significant consideration."
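
To put the two growth figures quoted above into more familiar units, the following is a minimal sketch of the arithmetic. It assumes smooth exponential growth between doublings and a 24-hour day; the function names are illustrative and do not come from any cited source.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Factor by which a quantity grows in 12 months,
    assuming it doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


def bytes_per_second(bytes_per_day: float) -> float:
    """Average creation rate per second, given a daily total."""
    return bytes_per_day / (24 * 60 * 60)


DOUBLING_MONTHS = 40    # storage capacity doubling period quoted in the text
BYTES_PER_DAY = 2.5e18  # 2.5 exabytes per day, the 2012 figure quoted in the text

if __name__ == "__main__":
    factor = annual_growth_factor(DOUBLING_MONTHS)
    print(f"Annual growth: about {(factor - 1) * 100:.0f}% per year")
    print(f"Growth over ten years: about {factor ** 10:.1f}x")
    rate_tb = bytes_per_second(BYTES_PER_DAY) / 1e12
    print(f"Average creation rate: about {rate_tb:.1f} TB per second")
```

Under these assumptions, a 40-month doubling period corresponds to growth of a little over 20% per year, which compounds to an eightfold increase over ten years (three doublings in 120 months).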