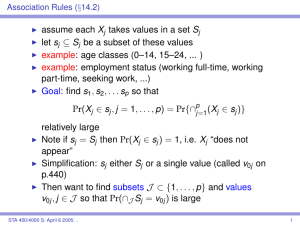

assume each Xj takes values in a set Sj let sj ⊆ Sj be a subset of

... each center identify training points closer to it than to any other center, compute the means of the new clusters to use as cluster centers for the next iteration for classification: do this on the training data separately for each of the K classes the cluster centers are now called prototypes assig ...

... each center identify training points closer to it than to any other center, compute the means of the new clusters to use as cluster centers for the next iteration for classification: do this on the training data separately for each of the K classes the cluster centers are now called prototypes assig ...

gSOM - a new gravitational clustering algorithm based on the self

... G = (1 − ΔG) · G. When two points are close enough, i.e. ||d|| is lower than parameter α, they are merged into a single point with mass equal to 1, which is rather strange, but doing so, clusters with greater density do not affect ones with smaller density. The experiments presented in the Section 3 ...

... G = (1 − ΔG) · G. When two points are close enough, i.e. ||d|| is lower than parameter α, they are merged into a single point with mass equal to 1, which is rather strange, but doing so, clusters with greater density do not affect ones with smaller density. The experiments presented in the Section 3 ...

k-Attractors: A Partitional Clustering Algorithm for umeric Data Analysis

... clusters in a given data set. The work of Jing et al. (Jing, Ng and Zhexue, 2007) provides a new clustering algorithm called EWKM which is a k-means type subspace clustering algorithm for high-dimensional sparse data. Patrikainen and Meila present a framework for comparing subspace clusterings (Patr ...

... clusters in a given data set. The work of Jing et al. (Jing, Ng and Zhexue, 2007) provides a new clustering algorithm called EWKM which is a k-means type subspace clustering algorithm for high-dimensional sparse data. Patrikainen and Meila present a framework for comparing subspace clusterings (Patr ...

LO3120992104

... can be determined by examining the path from nodes and branches to the terminating leaf. If all classes of an instance belong to the same class then the leaf node is labeled with that class. Otherwise the decision tree algorithm uses divide and conquer method which is used to divide the training ins ...

... can be determined by examining the path from nodes and branches to the terminating leaf. If all classes of an instance belong to the same class then the leaf node is labeled with that class. Otherwise the decision tree algorithm uses divide and conquer method which is used to divide the training ins ...

Nearest-neighbor chain algorithm

In the theory of cluster analysis, the nearest-neighbor chain algorithm is a method that can be used to perform several types of agglomerative hierarchical clustering, using an amount of memory that is linear in the number of points to be clustered and an amount of time linear in the number of distinct distances between pairs of points. The main idea of the algorithm is to find pairs of clusters to merge by following paths in the nearest neighbor graph of the clusters until the paths terminate in pairs of mutual nearest neighbors. The algorithm was developed and implemented in 1982 by J. P. Benzécri and J. Juan, based on earlier methods that constructed hierarchical clusterings using mutual nearest neighbor pairs without taking advantage of nearest neighbor chains.