Multiplying and Factoring Matrices

... A = LU is a permutation of a lower triangular matrix. This corresponds exactly to the usual “$PA = LU$” factorization for any invertible matrix [3]. That was partial pivoting. In complete pivoting, the first pivot is the largest entry $a_{ij}$ in the whole matrix A. Now row i of A is $u_1^T$ and column j (d ...
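
As a rough illustration of the complete-pivoting step described in this excerpt, the sketch below locates the largest entry of the matrix (taken here in absolute value, which is an assumption) and subtracts the rank-one piece built from that entry's row and column. The function name `complete_pivot_step` and the sample matrix are hypothetical and do not come from the source.

```python
def complete_pivot_step(A):
    """One elimination step with complete pivoting (illustrative sketch).

    Returns (i, j, remainder), where (i, j) is the position of the pivot
    and remainder is A minus the rank-one piece (column j / pivot) * (row i).
    """
    m, n = len(A), len(A[0])
    # Assumption: "largest entry" means largest in absolute value.
    i, j = max(((r, c) for r in range(m) for c in range(n)),
               key=lambda rc: abs(A[rc[0]][rc[1]]))
    pivot = A[i][j]
    if pivot == 0:
        raise ValueError("the zero matrix has no pivot")
    # After the subtraction, row i and column j of the remainder are zero.
    remainder = [[A[r][c] - A[r][j] * A[i][c] / pivot for c in range(n)]
                 for r in range(m)]
    return i, j, remainder


A = [[1.0, 2.0],
     [3.0, 8.0]]
i, j, rest = complete_pivot_step(A)
print(i, j, rest)
```

For this sample matrix the pivot is the entry 8 in the last row and last column, and the remainder has a single nonzero entry left for the next step.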

Matrices Lie: An introduction to matrix Lie groups

... each is a matrix Lie group (In the next section we will prove that each is actually a group under matrix multiplication). Now let us examine the two conditions on the orthogonal and unitary groups. In general an isometry is not necessarily a linear transformation. For our purposes however, we only w ...

Projective structures and contact forms

... determines a family of local Lie algebras on M (i.e., a family of Lie brackets on $C^\infty(M)$ that satisfy the localization condition). As was shown in Kirillov's paper [4], a nondegenerate local Lie algebra is determined by a contact or a symplectic structure on the manifold. The relation between pro ...

Lecture 3: Fourth Order BSS Method

... goal is to recover s(t) under the assumption that ε(t) is Gaussian white noise independent of s. For any diagonal matrix Λ and any permutation matrix P, $\tilde{s}(t) = P\Lambda s(t)$ is also independent. We can write $y(t) = (A\Lambda^{-1}P^{-1})(P\Lambda s(t)) + \varepsilon(t) = \tilde{A}\tilde{s} + \varepsilon(t)$, where $\tilde{A} = A\Lambda^{-1}P^{-1}$. So without loss of gen ...

A SURVEY OF COMPLETELY BOUNDED MAPS 1. Introduction and

... The above tensor norm is called the Haagerup tensor norm in honor of U. Haagerup, who was the first to notice the above identification. We write $M_{k,n} \otimes_h M_{n,k}$ to denote the tensor product endowed with this norm and note that we have just proved that: Theorem 20 (Haagerup). The map Γ : $M_{k,n} \otimes_h M_{n,k}$ → C ...

Normal Forms and Versal Deformations of Linear

... Those who are familiar with the classics of this subject, such as Moser and Siegel [24] and Birkhoff [7], might object to the extensive lists we have presented. We have two explanations to offer. Birkhoff deals with only purely semisimple infinitesimally symplectic transformations without nilpotent p ...

Group Theory Summary

... Definition 2.12 (Conjugacy classes). The set of all elements that are conjugate to each other is called a conjugacy class. ...
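
To make the definition concrete, here is a small sketch that computes conjugacy classes by brute force. The choice of the symmetric group on three letters as the example, the encoding of permutations as tuples, and the helper names are all assumptions made for illustration; none of them appear in the excerpt.

```python
from itertools import permutations


def compose(p, q):
    """Composition of permutations stored as tuples: (p o q)(k) = p[q[k]]."""
    return tuple(p[k] for k in q)


def inverse(p):
    """Inverse permutation of p."""
    inv = [0] * len(p)
    for k, pk in enumerate(p):
        inv[pk] = k
    return tuple(inv)


def conjugacy_classes(group):
    """Partition a finite group (a list of permutations) into conjugacy classes."""
    remaining = set(group)
    classes = []
    while remaining:
        x = next(iter(remaining))
        # The class of x is the set of all g x g^{-1} with g in the group.
        cls = {compose(compose(g, x), inverse(g)) for g in group}
        classes.append(cls)
        remaining -= cls
    return classes


S3 = list(permutations(range(3)))
sizes = sorted(len(c) for c in conjugacy_classes(S3))
print(sizes)
```

In this example the classes are the identity, the two 3-cycles, and the three transpositions.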

The matrix of a linear operator in a pair of ordered bases∗

... matrix (2) has m rows and n columns. Because of this, we say that it has the order m × n. The matrix (2) may be written in an abbreviated form as $A = (a_{ij})$. Example 1. Let $D : P_3 \to P_2$ be a linear operator that assigns to each polynomial its derivative, $(e) = (x^3, x^2, x, 1)$ the basis for $P_3$ an ...
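
The excerpt is cut off before it names the basis for $P_2$, so the sketch below assumes the basis $(x^2, x, 1)$; that choice, the function names, and the sample polynomial are hypothetical. Under that assumption, each column of the matrix holds the coordinates of the derivative of one basis polynomial of $P_3$.

```python
def derivative_matrix(n):
    """Matrix of d/dx from P_n to P_(n-1).

    Assumed bases: (x^n, ..., x, 1) for P_n and (x^(n-1), ..., x, 1) for P_(n-1).
    """
    rows, cols = n, n + 1
    D = [[0] * cols for _ in range(rows)]
    for col in range(cols):
        power = n - col  # column `col` corresponds to the basis vector x^power
        if power > 0:
            # d/dx x^power = power * x^(power-1), which is basis vector
            # number `col` of the target space.
            D[col][col] = power
    return D


def apply(D, coords):
    """Multiply the matrix D by a coordinate column."""
    return [sum(d * c for d, c in zip(row, coords)) for row in D]


D = derivative_matrix(3)
print(D)
# Coordinates of 2x^3 - x + 5 in the basis (x^3, x^2, x, 1).
print(apply(D, [2, 0, -1, 5]))
```

The resulting matrix has order 3 × 4, matching the dimensions of $P_2$ and $P_3$.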

The solutions to the operator equation TXS − SX T = A in Hilbert C

... abbreviate to $L(X)$, is a $C^*$-algebra. The identity operator on X is denoted by $1_X$, or 1 if there is no ambiguity. Let M be a closed submodule of a Hilbert A-module X; then $P_M$ is the orthogonal projection onto M, in the sense that $P_M$ is a self-adjoint idempotent operator. Theorem 1.1. [4, Theorem 3.2] Suppo ...

M-MATRICES SATISFY NEWTON'S INEQUALITIES 1. Introduction

... The condition (6) follows simply from the fact that $\operatorname{tr}(A^k)$ is the kth moment of the eigenvalue sequence of A, while the condition (7) is due to Loewy and London [10] and, independently, Johnson [7]. Newton’s inequalities proven above result in a third set of conditions necessary for realizability o ...

Operators on Hilbert space

... established. This allows the introduction of self-adjoint operators (corresponding to symmetric (or Hermitean) matrices) which together with diagonalisable operators (corresponding to diagonalisable matrices) are the subject of section 4.4. In section 4.5 we define unitary operators (corresponding to o ...

Suggested problems

... You can verify this by calculating the side lengths in the Euclidean metric using the Pythagorean theorem or the distance formula: the length of 2 marked on the hypotenuse is the Euclidean length, not the Taxicab length. (a) AB* = 2, BC* = |1 − 0| + |0 − ...

SOME PROPERTIES OF N-SUPERCYCLIC OPERATORS 1

... $T \oplus I : \ell^2 \oplus \mathbb{C} \to \ell^2 \oplus \mathbb{C}$. It’s not difficult to prove that T ⊕ I is supercyclic with supercyclic vector h ⊕ 1, where h is any hypercyclic vector for T (see [11, Lemma 3.2]). Note that 1 is a simple eigenvalue for T ⊕ I with corresponding eigenvector 0 ⊕ 1. (Thus, the adjoint of a supercyclic operator ...

MATHEMATICAL CONCEPTS OF EVOLUTION ALGEBRAS IN NON

... Example 7.1. Let E be generated by $e_1, e_2$, where $e_1 e_1 = e_1$, $e_2 e_2 = e_1$. Then $\langle\langle e_1 \rangle\rangle = K e_1$, $\langle\langle e_2 \rangle\rangle = E$. An evolution algebra E is evolutionary semisimple if it is a direct sum of some of its evolutionary simple evolution subalgebras. Note that every evolutionary simple evolution subalgebra of ...

Multiplying and Factoring Matrices

... This product $A = QR$ or $Q^T A = R$ confirms that every $r_{ij} = q_i^T a_j$ (row times column). The mysterious matrix R just contains inner products of q’s and a’s. R is triangular because $q_i$ does not involve $a_j$ for j > i. Gram-Schmidt uses only $a_1, \ldots, a_i$ to construct $q_i$. The next vector $q_2$ is the ...
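
The relation $r_{ij} = q_i^T a_j$ can be checked numerically. The following is a minimal sketch of classical Gram-Schmidt in plain Python; the helper names and the 2 × 2 example columns are assumptions chosen for illustration, not taken from the source.

```python
import math


def dot(u, v):
    """Inner product of two vectors given as lists."""
    return sum(x * y for x, y in zip(u, v))


def gram_schmidt(cols):
    """Orthonormalize the columns a_1, ..., a_n (classical Gram-Schmidt)."""
    qs = []
    for a in cols:
        v = list(a)
        for q in qs:
            # Remove the component of a along each earlier q.
            c = dot(q, a)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        if norm == 0:
            raise ValueError("columns are linearly dependent")
        qs.append([vi / norm for vi in v])
    return qs


def r_matrix(qs, cols):
    """R = Q^T A, so entry (i, j) is the inner product of q_i with a_j."""
    return [[dot(q, a) for a in cols] for q in qs]


cols = [[3.0, 4.0], [1.0, 0.0]]  # columns a_1 and a_2 of A
qs = gram_schmidt(cols)
R = r_matrix(qs, cols)
print(R)
```

The entry below the diagonal comes out as zero up to rounding, because $q_2$ was built to be orthogonal to $a_1$.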

A. Holt McDougal Algebra 1 1-9

... You can solve a proportion involving similar triangles to find a length that is not easily measured. This method of measurement is called indirect measurement. If two objects form right angles with the ground, you can apply indirect measurement using their shadows. ...

GROUPS AND THEIR REPRESENTATIONS 1. introduction

... its horizontal axis. Of course, “doing nothing” is the identity symmetry of the square. Moreover, any two symmetries can be composed to produce a third. For example, rotation through a quarter-turn followed by reflection over the horizontal axis has the same effect on the square as reflection over o ...

Matrices with a strictly dominant eigenvalue

... theorem (for another proof of this theorem cf. e.g. [6]): Theorem 3.1 The state vectors of a regular Markov chain converge to the unique right eigenvector of the corresponding transition matrix with component sum 1 corresponding to the eigenvalue 1. Proof. Assume A to be the transition matrix corres ...
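
The convergence stated in Theorem 3.1 can be observed on a small example. The sketch below assumes a column-stochastic 2 × 2 transition matrix with strictly positive entries (so the chain is regular) and iterates $x_{k+1} = A x_k$; the matrix values, the starting vector, and the function names are hypothetical.

```python
def step(A, x):
    """One step of the chain: the matrix-vector product A x."""
    return [sum(A[r][c] * x[c] for c in range(len(x))) for r in range(len(A))]


def iterate(A, x, steps):
    """Apply the transition matrix `steps` times to the state vector x."""
    for _ in range(steps):
        x = step(A, x)
    return x


# Assumed example: each column sums to 1 and every entry is positive.
A = [[0.9, 0.5],
     [0.1, 0.5]]
x = iterate(A, [1.0, 0.0], 100)
print(x)
```

Solving $Ax = x$ with component sum 1 by hand gives $x = (5/6, 1/6)$ for this matrix, and the iterates approach that vector.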

On certain cohomology groups attached to

... Ao(T, X, p) defines canonically a ^-linear alternating form on g c with values in the vector space 3 of all $C^\infty$-functions on Y\G this form determines y° and is characterized among 3 -valued ^-linear alternating forms on gc as the one satisfying (1.2) for all Xef c when Θ(X) and i(X) are interpreted ...

... Ao(T, X, p) defines canonically a ^-linear alternating form on g c with values in the vector space 3 of all C°°-functions on Y\G this form determines y° and is characterized among 3 -valued ^-linear alternating forms on gc as the one satisfying (1.2) for all Xef c when Θ(X) and i(X) are interpreted ...

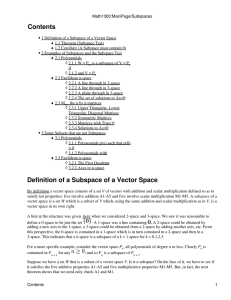

Contents Definition of a Subspace of a Vector Space

... By definition a vector space consists of a set V of vectors with addition and scalar multiplication defined so as to satisfy ten properties: five involve addition A1-A5 and five involve scalar multiplication M1-M5. A subspace of a vector space is a set W which is a subset of V which, using the same ...

Symmetric Spaces

... $\mathbb{R}^{k+l} = \mathbb{R}^k \oplus \mathbb{R}^l$, where the distinguished element $p = \mathbb{R}^k$ takes up the first k coordinates in $\mathbb{R}^{k+l}$ and is endowed with its natural positive orientation. Let us first identify M as a homogeneous space. Observe that $O(k+l)$ acts on $\mathbb{R}^{k+l}$. As such, it maps k-dimensional subspaces to k-dimensional subspaces, ...

About Lie Groups

... Each of these maps is smooth, and in fact they are all diffeomorphisms. The inverses of $L_g$, $R_g$, and $\Psi_g$ are $L_{g^{-1}}$, $R_{g^{-1}}$, and $\Psi_{g^{-1}}$, respectively. They are also group homomorphisms, so they are Lie group isomorphisms. Also, note that the left and right multiplication maps commute with each other. F ...

Hodge Theory

... where $J^\sharp$ is the derivation induced by J on the exterior algebra, i.e. $J^\sharp(v_1 \wedge \cdots \wedge v_p) = Jv_1 \wedge v_2 \wedge \cdots \wedge v_p + v_1 \wedge Jv_2 \wedge \cdots \wedge v_p + \cdots + v_1 \wedge \cdots \wedge Jv_p$. Let $V_{\mathbb{C}} := V \otimes \mathbb{C}$ denote the complexification of V and extend all maps from V to $V_{\mathbb{C}}$ or from $\wedge V$ to $\wedge V_{\mathbb{C}}$ so as to be complex linear. F ...
