Linear Algebra for Theoretical Neuroscience (Part 2) 4 Complex

... Note that these two eigenvectors are orthonormal vectors in a two-dimensional complex vector space, and hence form a complete orthonormal basis for the space. That is, any two-dimensional complex vector $v$ (including, of course, any real two-dimensional vector $v$) can be expanded as $v = v_0 e_0 + v$ ...
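The expansion can be checked numerically. The sketch below is an illustration under stated assumptions: the matrix `R` (a 90° rotation, which is real but has eigenvalues $\pm i$) and the vector `v = [3, 4]` are hypothetical choices, not taken from the notes. Each coefficient is computed as the Hermitian inner product $v_k = \langle e_k, v \rangle$, which conjugates the first argument.

```python
import math

def inner(a, b):
    """Hermitian inner product <a, b> = sum_k conj(a_k) * b_k."""
    return sum(x.conjugate() * y for x, y in zip(a, b))

def matvec(m, x):
    """Plain matrix-vector product."""
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]

# Hypothetical example: rotation by 90 degrees, eigenvalues +i and -i.
R = [[0, -1], [1, 0]]
s = 1 / math.sqrt(2)
e0 = [complex(s, 0), complex(0, -s)]  # eigenvector for eigenvalue +i
e1 = [complex(s, 0), complex(0, s)]   # eigenvector for eigenvalue -i

def expand(v):
    """Coefficients of v in the orthonormal basis {e0, e1}: v_k = <e_k, v>."""
    return inner(e0, v), inner(e1, v)

v = [3, 4]  # a real vector
v0, v1 = expand(v)
reconstructed = [v0 * a + v1 * b for a, b in zip(e0, e1)]
print(v0, v1)          # for a real v, v1 is the complex conjugate of v0
print(reconstructed)   # matches v up to round-off
```

Even though `v` is real, its coefficients are complex; they come in a conjugate pair, so the imaginary parts cancel in the reconstruction.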


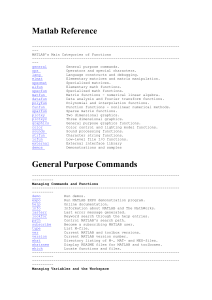

Matlab Reference

... Smallest floating-point number ... Time and Dates ...


Matlab Notes for Student Manual What is Matlab?

... COS(X) is the cosine of the elements of X. Another source of help in Matlab is under Help > Examples and Demos. Here you will find demonstrations of how to perform various commands. In the Lecture Support and Lab Support sections in WebCT, you will also find ...


Mathcad Professional

... Another important type of computation that Mathcad performs automatically is the determination of eigenvalues and eigenvectors of a matrix. These quantities enter into many types of fundamental theory (the Schrödinger equation, normal modes of vibration, etc.). For any square matrix A, the internal rou ...


diagnostic tools in ehx

... ► The logged messages can be exported to a file using the ‘Export’ option. The exported file from EHX is an XML file. It can be imported into Excel as a delimited file, using the double quote character (") as the separator. Note: Status messages are logged automatically once the EHX software is started ...


Text S2 - PLoS ONE

... let $p_{2,i}$ be the probability that he travels two legs, and let $p_{3,i}$ be the probability that he travels three or more legs. Then the total probability of travel from city $i$ to city $j$ is given by $D_{ij} = p_{1,i} A_{ij} + p_{2,i} \sum_k A_{ik} B_{kj} + p_{3,i} \sum_{k,l} A_{il} B_{lk} C_{kj}$. We define $D$ as the $n \times n$ matrix with ...
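In matrix form the formula reads $D = P_1 A + P_2 A B + P_3 A B C$, where $P_m = \operatorname{diag}(p_{m,1}, \dots, p_{m,n})$ scales row $i$ by $p_{m,i}$. The sketch below checks that this matches the index formula term by term. All numbers are invented for illustration; it additionally assumes (the snippet does not say so) that $A$, $B$, $C$ are row-stochastic single-leg transition matrices and that $p_{1,i} + p_{2,i} + p_{3,i} = 1$, in which case every row of $D$ sums to 1.

```python
def matmul(X, Y):
    """Plain matrix product X @ Y for lists of lists."""
    n, m, p = len(X), len(Y), len(Y[0])
    return [[sum(X[i][k] * Y[k][j] for k in range(m)) for j in range(p)] for i in range(n)]

def travel_matrix(A, B, C, p1, p2, p3):
    """D = diag(p1) A + diag(p2) A B + diag(p3) A B C (row i scaled by p_{m,i})."""
    AB = matmul(A, B)
    ABC = matmul(AB, C)
    n = len(A)
    return [[p1[i] * A[i][j] + p2[i] * AB[i][j] + p3[i] * ABC[i][j]
             for j in range(n)] for i in range(n)]

# Hypothetical 3-city data; each row of A, B, C sums to 1.
A = [[0.0, 1.0, 0.0], [0.5, 0.0, 0.5], [0.2, 0.8, 0.0]]
B = [[0.1, 0.6, 0.3], [0.3, 0.3, 0.4], [0.5, 0.5, 0.0]]
C = [[0.2, 0.2, 0.6], [0.0, 1.0, 0.0], [0.4, 0.4, 0.2]]
p1 = [0.5, 0.2, 0.7]  # probability of exactly one leg, per starting city
p2 = [0.3, 0.5, 0.2]  # two legs
p3 = [0.2, 0.3, 0.1]  # three or more legs
D = travel_matrix(A, B, C, p1, p2, p3)
for row in D:
    print([round(x, 4) for x in row])
```

Writing the sums as matrix products makes the cost easy to see: two matrix multiplications replace the explicit double sum over $k, l$.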


Gaussian Elimination

... Gaussian and Gauss-Jordan Elimination. Gaussian elimination is a method for solving linear systems of equations. To solve a linear system by Gaussian elimination, you form a matrix from the matrix of coefficients and the vector of constant terms (this is called the augmented matrix). Then you transfe ...
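A minimal sketch of Gaussian elimination on an augmented matrix $[A \mid b]$, assuming a square, nonsingular system: forward elimination brings the matrix to upper-triangular form, then back substitution recovers the unknowns. It uses exact `Fraction` arithmetic and simply takes the first nonzero pivot; floating-point code would normally choose the largest pivot instead (partial pivoting). The example system is a hypothetical one with solution $(2, 3, -1)$.

```python
from fractions import Fraction

def gaussian_solve(aug):
    """Solve a square system from its augmented matrix [A | b].

    Raises ValueError if A is singular.
    """
    M = [[Fraction(x) for x in row] for row in aug]
    n = len(M)
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it up.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Subtract multiples of the pivot row to zero out the entries below it.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    # Back substitution on the upper-triangular system.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

aug = [[2, 1, -1, 8],
       [-3, -1, 2, -11],
       [-2, 1, 2, -3]]
print(gaussian_solve(aug))
```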


Fixed Point

... Conversely, it is also possible to show that for every $\varepsilon > 0$ there exists a norm $\|\cdot\|_\varepsilon$ on $\mathbb{R}^n$ such that the induced matrix norm $\|\cdot\|_\varepsilon$ on $\mathbb{R}^{n \times n}$ satisfies $\|A\|_\varepsilon \le \rho(A) + \varepsilon$ (this is a rather tedious construction involving real versions of the Jordan normal form of $A$). Together, these results imply that ther ...
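A standard consequence of $\rho(A) \le \|A\|$ (for every induced norm) together with the $\varepsilon$-construction above is that the fixed-point iteration $x \leftarrow Ax + b$ converges from every starting point if and only if $\rho(A) < 1$, even when a familiar norm such as $\|\cdot\|_\infty$ exceeds 1. The sketch below illustrates this with a hypothetical upper-triangular matrix whose $\infty$-norm is 1.5 but whose spectral radius is 0.5; the limit is the solution of $(I - A)x = b$.

```python
import cmath

def spectral_radius_2x2(A):
    """rho(A) = max |lambda| over the roots of lambda^2 - tr(A) lambda + det(A)."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))

def inf_norm(A):
    """Induced infinity-norm: maximum absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)

def fixed_point(A, b, x0, iters=200):
    """Iterate x <- A x + b a fixed number of times."""
    x = list(x0)
    for _ in range(iters):
        x = [sum(A[i][j] * x[j] for j in range(len(x))) + b[i] for i in range(len(b))]
    return x

A = [[0.5, 1.0], [0.0, 0.5]]  # hypothetical example matrix
b = [1.0, 1.0]
print(inf_norm(A), spectral_radius_2x2(A))  # the norm exceeds 1, the spectral radius does not
x = fixed_point(A, b, [0.0, 0.0])
print(x)  # approaches the solution of (I - A) x = b, i.e. (6, 2)
```

Here the first few iterates can grow in the $\infty$-norm (the off-diagonal entry amplifies errors transiently), but they eventually contract at the rate set by $\rho(A)$.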


Fall 2012 Midterm Answers.

... is also $I$ so the claim is TRUE. (11) If the dimension of $\operatorname{span}(v_1, \dots, v_k)$ is $k$, then $\{v_1, \dots, v_k\}$ is linearly independent. True. [44,20,34] Comments: Serious gaps in people’s understanding of this question and the next. You should know that the dimension of $\operatorname{span}(v_1, \dots, v_k)$ i ...
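Claim (11) can be tested mechanically: $\dim \operatorname{span}(v_1, \dots, v_k)$ is the rank of the matrix whose rows are the $v_i$, and the vectors are independent exactly when that rank equals $k$. A minimal sketch using row reduction with exact fractions; the vectors are hypothetical, with $v_3 = v_1 + v_2$ chosen to force a dependency.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of the matrix whose rows are the given vectors, i.e. dim span(vectors)."""
    M = [[Fraction(x) for x in v] for v in vectors]
    r = 0  # number of pivots found so far
    ncols = len(M[0]) if M else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """v_1, ..., v_k are independent exactly when dim span(v_1, ..., v_k) == k."""
    return rank(vectors) == len(vectors)

v1, v2, v3 = [1, 0, 1], [0, 1, 1], [1, 1, 2]  # hypothetical; v3 = v1 + v2
print(rank([v1, v2, v3]), linearly_independent([v1, v2, v3]))
print(rank([v1, v2]), linearly_independent([v1, v2]))
```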


1. Introduction

... RYSZARD SZWARC AND GRZEGORZ ŚWIDERSKI. We study the relaxed Kaczmarz algorithm in Hilbert space. The connection with the non-relaxed algorithm is examined. In particular, we give sufficient conditions under which relaxation leads to convergence of the algorithm independently of the relaxation coefficients. ...
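For orientation, here is a finite-dimensional sketch of a relaxed Kaczmarz iteration. The paper works in a general Hilbert space; this sketch assumes instead a consistent real system $Ax = b$, rows visited cyclically, and a hypothetical list of relaxation coefficients $\omega_k$, each in $(0, 2)$. Step $k$ uses row $i = k \bmod m$ and updates $x \leftarrow x + \omega_k \, \frac{b_i - \langle a_i, x \rangle}{\|a_i\|^2} \, a_i$; with $\omega_k = 1$ this is the non-relaxed projection onto the hyperplane $\langle a_i, x \rangle = b_i$.

```python
def kaczmarz(A, b, omegas, sweeps=100):
    """Relaxed Kaczmarz iteration for a consistent real system A x = b.

    Rows are visited cyclically; step k projects toward the hyperplane
    <a_i, x> = b_i and scales the correction by omegas[k % len(omegas)].
    """
    m, n = len(A), len(A[0])
    x = [0.0] * n
    k = 0
    for _ in range(sweeps):
        for i in range(m):
            a = A[i]
            residual = b[i] - sum(a[j] * x[j] for j in range(n))
            step = omegas[k % len(omegas)] * residual / sum(v * v for v in a)
            x = [x[j] + step * a[j] for j in range(n)]
            k += 1
    return x

# Hypothetical overdetermined but consistent system with solution x* = (1, 2).
A = [[1.0, 1.0], [1.0, -1.0], [2.0, 1.0]]
b = [3.0, -1.0, 4.0]
x = kaczmarz(A, b, omegas=[0.5, 1.0, 1.5])  # hypothetical relaxation coefficients
print(x)
```

Coefficients below 1 under-relax (move only part of the way to the hyperplane) and coefficients above 1 over-relax (overshoot it); this sketch does not attempt to reproduce the paper's sufficient conditions.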


Structured Multi-Matrix Variate, Matrix Polynomial Equations

... determinantal polynomial. Since factorization of the corresponding polynomial matrix does not necessarily hold (as it does in the uni-matrix variate case), there can be zeros of the determinantal polynomial that are not eigenvalues of the unknown matrices. Remark 3: Since all diagonaliza ...


Matrices and RRE Form Notation. R is the real numbers, C is the

... Theorem 0.6. Suppose that A is the (augmented) matrix of a linear system of equations, and B is obtained from A by a sequence of elementary row operations. Then the solutions to the system of linear equations corresponding to A and the system of linear equations corresponding to B are the same. To p ...
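Theorem 0.6 can be illustrated directly: reduce an augmented matrix to reduced row echelon form using only the three elementary row operations (swap, scale by a nonzero constant, add a multiple of one row to another), and check that the same vector solves both the original and the reduced system. A minimal sketch with exact fractions; the system, with solution $(5, 3, -2)$, is a hypothetical example.

```python
from fractions import Fraction

def rref(aug):
    """Reduced row echelon form using only elementary row operations."""
    M = [[Fraction(x) for x in row] for row in aug]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]  # swap two rows
        p = M[r][c]
        M[r] = [x / p for x in M[r]]     # scale a row by a nonzero constant
        for i in range(rows):
            if i != r and M[i][c] != 0:
                f = M[i][c]
                # add a multiple of one row to another
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return M

def satisfies(aug, x):
    """True if x solves every equation encoded by the augmented matrix."""
    return all(sum(Fraction(a) * xi for a, xi in zip(row[:-1], x)) == row[-1] for row in aug)

A_aug = [[1, 1, 1, 6], [0, 2, 5, -4], [2, 5, -1, 27]]
B_aug = rref(A_aug)
solution = [row[-1] for row in B_aug]  # valid here because B_aug has an identity block on the left
print(solution)
print(satisfies(A_aug, solution), satisfies(B_aug, solution))
```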


Fiedler's Theorems on Nodal Domains 7.1 About these notes 7.2

... So, this matrix clearly has one zero eigenvalue, and as many negative eigenvalues as there are negative $w_{u,v}$. Proof of Theorem 7.4.1. Let $\Psi_k$ denote the diagonal matrix with $\psi_k$ on the diagonal, and let $\lambda_k$ be the corresponding eigenvalue. Consider the matrix $M = \Psi_k (L_P - \lambda_k I) \Psi_k$. The matrix $L_P$ ...
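The key structural facts about $M = \Psi_k (L_P - \lambda_k I) \Psi_k$ can be checked numerically. Since $(L_P - \lambda_k I)\psi_k = 0$, we get $M \mathbf{1} = \Psi_k (L_P - \lambda_k I) \psi_k = 0$, so the all-ones vector gives the zero eigenvalue; and the off-diagonal entry for an edge is $M_{uv} = -w_{u,v}\,\psi_k(u)\,\psi_k(v)$, so $M$ acts like a Laplacian whose reweighted edge $w_{u,v}\psi_k(u)\psi_k(v)$ is negative exactly where $\psi_k$ changes sign. The sketch assumes $P$ is a unit-weight path on $n$ vertices and uses its known closed-form eigenpairs $\lambda_k = 2 - 2\cos(\pi k / n)$, $\psi_k(u) = \cos(\pi k (u + 1/2) / n)$ with $k$ and $u$ indexed from 0 (the notes may index from 1).

```python
import math

def path_laplacian(n):
    """Laplacian of the unit-weight path 0 - 1 - ... - (n-1)."""
    L = [[0.0] * n for _ in range(n)]
    for u in range(n - 1):
        v = u + 1
        L[u][u] += 1
        L[v][v] += 1
        L[u][v] -= 1
        L[v][u] -= 1
    return L

def path_eigenpair(n, k):
    """Closed-form k-th eigenpair (k = 0..n-1) of the path Laplacian."""
    lam = 2 - 2 * math.cos(math.pi * k / n)
    psi = [math.cos(math.pi * k * (u + 0.5) / n) for u in range(n)]
    return lam, psi

def nodal_matrix(L, lam, psi):
    """M = Psi (L - lam I) Psi, where Psi = diag(psi)."""
    n = len(L)
    return [[psi[u] * (L[u][v] - (lam if u == v else 0.0)) * psi[v] for v in range(n)]
            for u in range(n)]

n = 8  # a power of two, so no entry of psi_k is exactly zero for 0 < k < n
L = path_laplacian(n)
for k in range(n):
    lam, psi = path_eigenpair(n, k)
    M = nodal_matrix(L, lam, psi)
    max_row_sum = max(abs(sum(row)) for row in M)  # M @ 1 = 0 up to round-off
    # Reweighted edge u-(u+1) has weight -M[u][u+1]; count the negative ones.
    negative_edges = sum(1 for u in range(n - 1) if M[u][u + 1] > 0)
    print(k, max_row_sum, negative_edges)
```

For this path, $\psi_k$ changes sign exactly $k$ times, so the count of negative reweighted edges printed for each $k$ equals $k$.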
