SECOND-ORDER VERSUS FOURTH

... the array output covariance matrix and C in place of the signal covariance. Since only 4th-order cumulants are used, the properties of the measurement noise do not appear in the expression of the exact statistics. Q(M) is rank-deficient, with rank equal to the rank of C, and its range is spanned ...
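The claim that measurement noise drops out rests on a property of fourth-order cumulants: they vanish for Gaussian variables, while many communication signals have non-zero ones. The sketch below illustrates this under simplifying assumptions. It uses a real, scalar sequence rather than the complex array snapshots the text works with, and the helper name `fourth_order_cumulant` is hypothetical. It estimates $\kappa_4 = E[x^4] - 3(E[x^2])^2$ from centred samples.

```python
import numpy as np

def fourth_order_cumulant(x: np.ndarray) -> float:
    """Sample fourth-order cumulant of a real scalar sequence (hypothetical helper).

    After centring, cum4 = E[x^4] - 3 (E[x^2])^2.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                 # centre so the zero-mean formula applies
    m2 = np.mean(x**2)
    m4 = np.mean(x**4)
    return m4 - 3.0 * m2**2

rng = np.random.default_rng(0)
gaussian_noise = rng.normal(0.0, 1.0, 200_000)
bpsk = rng.choice([-1.0, 1.0], size=200_000)   # illustrative +/-1 signal

print(fourth_order_cumulant(gaussian_noise))              # near 0
print(fourth_order_cumulant(bpsk))                        # near -2
print(fourth_order_cumulant(bpsk + 0.5 * gaussian_noise)) # still near -2
```

Because cumulants of independent variables add, the noisy BPSK estimate stays near -2: the Gaussian term contributes approximately zero.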

18th International Mathematics Conference

... A larger value of Λ means that the corresponding element has greater importance. It is usual to use one or two elements of F and G, which are then used for various plots. For pictorial representation, either the columns or the rows are plotted in an ordered form, or biplots are used to find possible ass ...
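The excerpt does not define Λ, F or G in full. The sketch below assumes the usual SVD-based biplot setting: Λ holds the singular values of a centred data matrix, F holds row coordinates and G holds column coordinates. The scaling F = UΛ, G = V is one common choice and is an assumption here, not necessarily the source's. `biplot_coordinates` is a hypothetical helper.

```python
import numpy as np

def biplot_coordinates(X: np.ndarray, k: int = 2):
    """Row (F) and column (G) coordinates from the SVD of the column-centred X.

    Assumed scaling: F = U Λ (rows), G = V (columns), so F @ G.T rebuilds the
    centred matrix when all k singular values are kept.
    """
    Xc = X - X.mean(axis=0)                      # centre each column
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    F = U[:, :k] * s[:k]                         # row coordinates scaled by Λ
    G = Vt[:k].T                                 # column coordinates
    return F, G, s

X = np.array([[2.0, 0.5, 1.0],
              [1.5, 1.0, 0.0],
              [0.0, 2.0, 1.5],
              [1.0, 1.0, 1.0]])
F, G, s = biplot_coordinates(X, k=2)
print(s)                          # singular values, largest first
print(s**2 / np.sum(s**2))        # share of total variation per element
```

Plotting the rows of F as points and the rows of G as arrows gives a basic biplot. The printed shares show why only the one or two leading elements are usually kept.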

Eigenvalues, diagonalization, and Jordan normal form

... so that $v_1, \ldots, v_{m'}$ are the last elements of the chains $C'_1, \ldots, C'_{m'}$. For $i = 1, \ldots, q$, let $z_i$ be a vector in $V$ such that $g(z_i) = v_i$. Let $C_1, \ldots, C_q$ be the chains obtained from $C'_1, \ldots, C'_q$ by adding the last elements $z_1, \ldots, z_q$. Let $C_i = C'_i$ for $i = q + 1, \ldots, m'$. ...
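The construction extends a chain by a new last element z with g(z) = v, where v is the current last element. The sketch below assumes the convention suggested by the excerpt: g maps the first element of a chain to 0 and maps each later element to its predecessor. It solves g z = v by least squares, so it suits small numerical examples rather than exact arithmetic. The helpers `is_chain` and `extend_chain` are hypothetical names.

```python
import numpy as np

def is_chain(g: np.ndarray, chain: list) -> bool:
    """True if g(c_1) = 0 and g(c_{j+1}) = c_j for every consecutive pair."""
    if not np.allclose(g @ chain[0], 0):
        return False
    return all(np.allclose(g @ chain[j + 1], chain[j]) for j in range(len(chain) - 1))

def extend_chain(g: np.ndarray, chain: list) -> list:
    """Return the chain with a new last element z satisfying g(z) = old last element."""
    v = chain[-1]
    z, *_ = np.linalg.lstsq(g, v, rcond=None)   # minimum-norm solution of g z = v
    if not np.allclose(g @ z, v):
        raise ValueError("last element is not in the image of g; cannot extend")
    return chain + [z]

# g is a nilpotent shift: g(e1) = 0, g(e2) = e1, g(e3) = e2.
g = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [0.0, 0.0, 0.0]])
e1 = np.array([1.0, 0.0, 0.0])

chain1 = extend_chain(g, [e1])      # extends [e1] to [e1, e2]
chain2 = extend_chain(g, chain1)    # extends to [e1, e2, e3]
print(is_chain(g, chain2), chain2[-1])
```

A further call on `chain2` raises `ValueError`, because e3 is not in the image of g. This mirrors the role of the condition $g(z_i) = v_i$: a chain can only be extended when its last element lies in the image of $g$.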

REVIEW FOR MIDTERM I: MAT 310 (1) Let V denote a vector space

... $\sum_{i=1} (a_i - x b_i)\, v_i$, which implies that $a_i - x b_i = 0$ for all $i$ (since $S$ is an independent set). Thus $a_i = x b_i$ for all $i$, so $a = xb$, showing that $a$ and $b$ are linearly dependent. Conversely, if $a$ and $b$ are linearly dependent, you can reverse the argument just given to show that $v$ and $w$ are dependent. (2 ...
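The same argument can be checked numerically. Suppose the columns of an invertible matrix B are the basis vectors of S. Then v = Ba and w = Bb, and the pair (v, w) has the same rank as the pair (a, b). The basis and coordinate vectors below are arbitrary illustrative values.

```python
import numpy as np

# Columns of B form an (assumed) basis S = {v_1, v_2, v_3} of R^3; det(B) = 2.
B = np.array([[1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0],
              [1.0, 0.0, 1.0]])

a = np.array([1.0, 2.0, 3.0])   # coordinates of v
b = np.array([2.0, 4.0, 6.0])   # coordinates of w, with b = 2a
v, w = B @ a, B @ b             # v = sum a_i v_i, w = sum b_i v_i

rank_vw = np.linalg.matrix_rank(np.column_stack([v, w]))
rank_ab = np.linalg.matrix_rank(np.column_stack([a, b]))
print(rank_vw, rank_ab)         # dependent pairs: both ranks equal 1

c = np.array([1.0, 0.0, 0.0])   # not a multiple of a
u = B @ c
rank_vu = np.linalg.matrix_rank(np.column_stack([v, u]))
rank_ac = np.linalg.matrix_rank(np.column_stack([a, c]))
print(rank_vu, rank_ac)         # independent pairs: both ranks equal 2
```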

MATLAB TOOLS FOR SOLVING PERIODIC EIGENVALUE

... transformations of the original data and thus attain numerical backward stability, which enables good accuracy even for small eigenvalues. The prospective toolbox combines the efficiency and robustness of library-style Fortran subroutines based on state-of-the-art algorithms with the convenience of ...
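The toolbox's structure-preserving algorithms work on the individual factors and are not reproduced here. As a naive reference only, the sketch below forms the product of one period of matrices explicitly, which is the step such algorithms avoid because it can lose accuracy for small eigenvalues. It then checks one structural fact that makes periodic eigenvalues well defined: a cyclic shift of the factors changes the product but not its characteristic polynomial. The matrices are random illustrative data, and `monodromy` is a hypothetical helper name.

```python
import numpy as np

def monodromy(mats):
    """Form the product A_p ... A_2 A_1 explicitly (A_1 acts first)."""
    P = np.eye(mats[0].shape[0])
    for A in mats:
        P = A @ P
    return P

rng = np.random.default_rng(1)
A1, A2, A3 = (rng.standard_normal((3, 3)) for _ in range(3))

P = monodromy([A1, A2, A3])          # A3 A2 A1
P_shift = monodromy([A2, A3, A1])    # A1 A3 A2, a cyclic shift of the period

# XY and YX share a characteristic polynomial (here X = A3 A2, Y = A1),
# so every cyclic shift yields the same periodic eigenvalues.
print(np.poly(P))
print(np.poly(P_shift))
```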

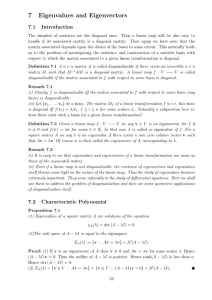

7 Eigenvalues and Eigenvectors

... Definition 7.9 We say $A$ is triangularizable if there exists an invertible matrix $C$ such that $C^{-1}AC$ is upper triangular. Remark 7.7 Obviously, all diagonalizable matrices are triangularizable. The following result says that triangularizability causes the least trouble: Proposition 7.9 Over the complex n ...
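Over the complex numbers, a concrete triangularizing matrix can be obtained from the Schur decomposition $A = Z T Z^{H}$, where $Z$ is unitary and $T$ is upper triangular. The sketch below uses SciPy's `scipy.linalg.schur` with `output="complex"` and takes $C = Z$. The rotation matrix is chosen because it has no real eigenvalues. It therefore cannot be triangularized over the reals, yet it is triangularizable over $\mathbb{C}$.

```python
import numpy as np
from scipy.linalg import schur

A = np.array([[0.0, -1.0],
              [1.0,  0.0]])   # rotation by 90 degrees; eigenvalues are i and -i

T, Z = schur(A, output="complex")   # A = Z T Z^H, Z unitary, T upper triangular
C = Z                               # invertible, with C^{-1} = Z^H
upper = np.linalg.inv(C) @ A @ C    # C^{-1} A C, which should equal T

print(np.round(upper, 10))          # eigenvalues of A appear on the diagonal
```

Using a unitary $C$ is a numerically convenient choice. The definition itself only asks for some invertible $C$.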

Non-negative matrix factorization

Non-negative matrix factorization (NMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra in which a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as the processing of audio spectrograms, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as computer vision, document clustering, chemometrics, audio signal processing and recommender systems.
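The paragraph notes that the factorization is usually approximated numerically. One well-known approach, among several, is the multiplicative update rule of Lee and Seung for minimizing the Frobenius error $\lVert V - WH \rVert_F$. It keeps W and H non-negative because each update multiplies a factor elementwise by a ratio of non-negative quantities. The sketch below assumes a dense NumPy array, random initialization and a fixed iteration count. `nmf` is a hypothetical helper, and production libraries add convergence checks and better initialization.

```python
import numpy as np

def nmf(V: np.ndarray, rank: int, n_iter: int = 500, eps: float = 1e-12, seed: int = 0):
    """Approximate V by W @ H with W, H >= 0 via Lee-Seung multiplicative updates."""
    if np.any(V < 0):
        raise ValueError("V must be non-negative")
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, rank))            # non-negative random start
    H = rng.random((rank, n))
    errors = [np.linalg.norm(V - W @ H, "fro")]
    for _ in range(n_iter):
        # Each factor is scaled elementwise by a non-negative ratio,
        # so non-negativity is preserved automatically.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(V - W @ H, "fro"))
    return W, H, errors

rng = np.random.default_rng(42)
V = rng.random((6, 2)) @ rng.random((2, 5))   # non-negative and exactly rank 2
W, H, errors = nmf(V, rank=2)
print(f"initial error {errors[0]:.4f}, final error {errors[-1]:.4f}")
```

The small constant `eps` guards against division by zero. It is a common practical tweak rather than part of the original update rule.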