Inference for the Regression Coefficient

... Inference for the Regression Coefficient • Recall that b0 and b1 are the estimates of the intercept β0 and slope β1 of the population regression line. • We can show that b0 and b1 are unbiased estimates of β0 and β1 and, furthermore, that b0 and b1 are Normally distributed with means β0 and β1 and standa ...

Standard Deviation of Means Calculator

... An important task in finding the power or sample size in an ANOVA-type design is specifying the effect size, which, in this case, is the standard deviation of the means. We often denote this value as Sm or σm. Since the standard deviation does not have an obvious, physical interpretation, PASS provi ...

Oneway ANOVA

... which we can specify more than 2 conditions. If we only specify 2 conditions, however, the results will be exactly the same as the t-test. The calculations are somewhat different, but the resulting “p-value” will be the same, and therefore the research conclusion will always be the same. ...
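The two-condition equivalence can be checked numerically. The sketch below is illustrative only: the data and the helper names `pooled_t` and `oneway_f` are made up for this example, and it assumes the t-test in question is the equal-variance (pooled) two-sample t-test. Under that assumption the one-way ANOVA F statistic equals the square of the t statistic, which is why the two p-values coincide.

```python
from statistics import mean


def pooled_t(a, b):
    """Two-sample t statistic using the pooled (equal-variance) estimate."""
    na, nb = len(a), len(b)
    ma, mb = mean(a), mean(b)
    ss_a = sum((x - ma) ** 2 for x in a)
    ss_b = sum((x - mb) ** 2 for x in b)
    pooled_var = (ss_a + ss_b) / (na + nb - 2)
    return (ma - mb) / (pooled_var * (1 / na + 1 / nb)) ** 0.5


def oneway_f(*groups):
    """One-way ANOVA F statistic: mean square between / mean square within."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical measurements for two conditions.
group_a = [4.1, 5.0, 5.6, 6.2, 4.8]
group_b = [6.0, 6.9, 7.4, 5.8, 7.1]

t_stat = pooled_t(group_a, group_b)
f_stat = oneway_f(group_a, group_b)

# With exactly two groups, F equals t squared (up to rounding error).
print(t_stat ** 2, f_stat)
```

The identity does not hold for Welch's unequal-variance t-test, so the "same p-value" statement should be read with the pooled test in mind.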

Class3 - MIT Media Lab

... Grouping variable = the variable that differentiates the 2 groups you're comparing. For example, you have a variable called sex whose values can be 1 or 2, corresponding to male and female. ...

STATISTICAL DESIGNS IN NUTRITIONAL EXPERIMENTS

... equal variance assumption. • The power of the test is largest when sample sizes are equal. Why work with unbalanced designs: balanced designs produce unbalanced data when something goes wrong (e.g. the animal dies or you get some negative values in your ...

Document

... • Paired t-test = same group, two different times or measurements • Can be used as a post-hoc or planned contrast after conducting ANOVA analyses • Beware: each additional t-test reduces the overall confidence level, so use Scheffé's test, Duncan's multiple range test, etc. ...
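The warning about repeated t-tests can be made concrete with a small calculation. This is a rough sketch that assumes the tests are independent, which is only an approximation for pairwise comparisons on the same data. The function names are hypothetical, and the Bonferroni adjustment is shown as a simple stand-in; it is not the Scheffé or Duncan procedure named above.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n independent tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level that keeps the overall rate at or below alpha."""
    return alpha / n_tests


# Three groups give three pairwise t-tests.
fwer = familywise_error_rate(0.05, 3)
per_test = bonferroni_alpha(0.05, 3)

print(round(fwer, 6))      # 0.142625
print(round(per_test, 6))  # 0.016667
```

Running three tests at the 0.05 level therefore leaves an overall confidence level of roughly 86% instead of 95%.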

R workshop #2

... • No fan shape in Residuals vs fitted plot • No upward (or downward) trend in Scale-location plot ...

Session 2 - IRB, Purpose, and Method

... common between the two groups) is statistically held constant when the comparison is calculated. • For example: comparing the achievement level of different schools with SES held constant ...

Statistical Guide - St. Cloud State University

... Multiple: Forces all predictors (2 or more) into equation to build best representation of relationship between the dependent variable and the independent variables in the equation. Stepwise: Builds a step-by-step regression model to predict the DV by examining the set of IVs and using the most signi ...

Read the textbook

... observed ratio of mean squares greater than 3.48, with 4 and 10 degrees of freedom in the numerator and denominator, respectively, we would have statistically significant evidence to conclude that the population means are not equal. In other words, the distribution under the null hypothesis of equal ...

9.3 Tests for a Single Mean - LISA (Virginia Tech's Laboratory for

... • ANOVA is used to determine whether three or more ...

Psychology 610: Standard Error Handout SE#, p. 1 Prof. Moore I

... students). If we did the experiment again with another set of participants, then we almost certainly would get a different mean! What we really want to know is "how good is our estimate of the mean?” not "how much variation was found around this particular estimate of the mean?" We can do this by ca ...
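The excerpt is cut off before it names the calculation, so the following is an assumption based on the handout's title: the usual answer to "how good is our estimate of the mean?" is the standard error of the mean, the sample standard deviation divided by the square root of the sample size. The data and the function name `standard_error` are illustrative.

```python
from math import sqrt
from statistics import mean, stdev


def standard_error(sample):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return stdev(sample) / sqrt(len(sample))


# Hypothetical scores from one set of participants.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

sample_mean = mean(scores)
sample_sd = stdev(scores)    # variation around this particular mean
se = standard_error(scores)  # expected variation of the mean across repeats

print(sample_mean, round(sample_sd, 4), round(se, 4))
```

The standard deviation describes the spread of individual scores, while the standard error shrinks as the sample grows, reflecting a more precise estimate of the mean.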

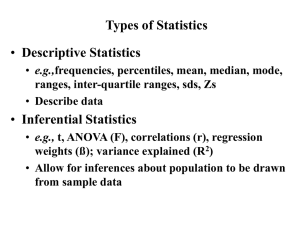

Analysis of variance

Analysis of variance (ANOVA) is a collection of statistical models, and their associated procedures (such as the "variation" among and between groups), used to analyze the differences among group means. It was developed by the statistician and evolutionary biologist Ronald Fisher. In the ANOVA setting, the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether the means of several groups are equal, and therefore generalizes the t-test to more than two groups. Because running multiple two-sample t-tests increases the chance of committing a type I error, ANOVA is useful for comparing (testing) three or more means (groups or variables) for statistical significance.
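The partitioning described above can be illustrated for the one-way case. This is a minimal sketch with made-up data and a hypothetical helper name, `anova_partition`; it shows the total sum of squares splitting into a between-group and a within-group component, and the F statistic formed from their mean squares.

```python
from statistics import mean


def anova_partition(groups):
    """Return (SS_total, SS_between, SS_within, F) for a one-way layout."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    ss_total = sum((x - grand_mean) ** 2 for x in values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_total, ss_between, ss_within, f_stat


# Three hypothetical groups of three observations each.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
ss_total, ss_between, ss_within, f_stat = anova_partition(groups)

# SS_total = SS_between + SS_within (32 = 26 + 6), and F = (26/2) / (6/6) = 13.
print(ss_total, ss_between, ss_within, f_stat)
```

Turning the F statistic into a p-value requires the F distribution with the matching degrees of freedom (here 2 and 6), which is left to a statistics library.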