caCORE Runtime Architecture

... The caCORE SDK is the technology foundation for caBIG™ compliant applications. The SDK is based on a software development paradigm that starts with an abstract model of the entities represented in a particular application. Real-world examples of such entities include identified peptides in an MS2 ru ...

Internetwork

... • Error notification alerts upper-layer protocols that a transmission error has occurred, and the sequencing of data frames reorders frames that are transmitted out of sequence. • Finally, flow control moderates the transmission of data so that the receiving device is not overwhelmed with more traff ...

Life Under your Feet: A Wireless Soil Ecology Sensor Network

... several processing steps before being suitable for analysis. The raw data must be converted into scientifically meaningful, calibrated measurements [Szalay06]. Interpolation techniques must be applied to handle missing data. Results must be further aggregated and gridded to support typical analytic ...

M183-2-7688

... World and Canadian Minerals Deposits in 2007 and was updated in 2008. Resource estimates for a few deposits in northern Canada were updated in 2014, but otherwise the data remain current to early 2008. Index level excerpts of the database were used for the map: World distribution of tin and tungsten ...

Accessing multidimensional Data Types in Oracle 9i Release 2

... exist. In typical Warehouse situations, especially if more advanced analytics was needed, an additional database had to be chosen to store and analyze the data. These databases offered a lot of functionality for advanced analytics like what-if analysis, statistical queries and so on and an excellent qu ...

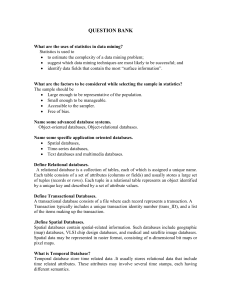

Question Bank

... Transaction typically includes a unique transaction identity number (trans_ID), and a list of the items making up the transaction. .Define Spatial Databases. Spatial databases contain spatial-related information. Such databases include geographic (map) databases, VLSI chip design databases, and medi ...

Database Modeling in UML

... main artefact produced to represent the logical structure of a software system. It captures both the data requirements and the behaviour of objects within the model domain. The techniques for discovering and elaborating that model are outside the scope of this article, so we will assume the exis ...

Chapter 11: Data, Knowledge, and Decision Support

... Data Management difficulties Data volume exponentially increases with time Many methods and devices used to collect data Raw data stored many places and ways only small portions of data are relevant for specific situations More and more external data Different legal requirements relati ...

Automated Drug Safety Signal Detection with Guided Analysis

... residual score, for detecting drug-event pairs with unusually large values that may appear as potential safety signals. The utility of this algorithm is the ability to identify outliers that help point reviewers to the source and the underlying patient reporting population for further investigation. ...

Data Warehousing and Data Mining in Business Applications

... Abstract: Information technology is now required in all aspects of our lives that helps in business and enterprise for the usage of applications like decision support system, query and reporting online analytical processing, predictive analysis and business performance management. This paper focuses o ...

data

... Completeness, Timeliness, Consistency With the advent of networks, sources increase dramatically, and data become often “found data”. Federated data, where many disparate data are integrated, are highly valued Data collection and analysis are frequently ...

ppt

... their original publication is handling of Block labels, which were replaced by more symmetrical notation. JOIN Operator: Joins table in the FROM clause Joins row in the WHERE clause Queries are processed in the following order: Tuples are selected by the WHERE clause Groups are formed by the ...

A prototype of Quality Data Warehouse in Steel Industry

... • Refused: data related to the refused products for quality reasons. Each subject is detailed in fact tables and the related dimensions using a star schema representation in which some dimensions (i.e. coil identifier (coil, client) are shared between more than one subjects. The data model design wi ...

Database Tool Window

... Most of the functions in this window are accessed by means of the toolbar icons or context menu commands. (If the toolbar is not currently shown, click on the title bar and select Show Toolbar.) Many of the commands have keyboard shortcuts. The Synchronize and Console commands can additionally be a ...

Release Notes - CONNX Solutions

... CONNX 8.8 expands the database support of CONNX to include VSAM files on the VSE platform. A new component called the CONNX Data Synchronization Tool has been added to the CONNX family of products. This component provides a fast and easy method of creating data marts and data warehouses. Direct supp ...

Extraction, Transformation, Loading (ETL) and Data Cleaning

... Abstract: Extraction, Transformation and Loading (ETL) is a process of enterprise data warehouse where process data is transferred from one or many data sources to the data warehouse. This research paper discusses the problems of the ETL process and focuses on the cleaning problem of ETL. Extraction ...

Considerations in the Submission of Exposure Data in an SDTM-Compliant Format

... more granular representation of the treatments within the Elements of an Arm, and this was not considered to be a valid approach. The number of injections could have been submitted in SUPPEX if desired, but sponsors should be cautious in submitting superfluous data in SUPP- datasets. This situation ...

ppt - Columbia University

... Insulation between Programs and Data, and Data Abstraction – program-data independence – The characteristic that allows program-data independence and program-operation independence is called data abstraction – A DBMS provides users with a conceptual representation of data that does not include many ...

Data Mining - UCD School of Computer Science and

... Waterfall: structured and systematic analysis at each step before proceeding to the next Spiral: rapid generation of increasingly functional systems, short turn around time, quick turn around ...

CUSTOMER_CODE SMUDE DIVISION_CODE SMUDE

... development of a combined set of concepts for the use of application developers. The training of temporal database is designed by application developers and designers. There are numerous applications where time is an important factor in storing the information. For example: Insurance, Healthcare, Re ...

What is Data Warehouse?

... - Some users may wish to submit queries to the database which, using conventional multidimensional reporting tools, cannot be expressed within a simple star schema. This is particularly common in data mining of customer databases, where a common requirement is to locate common factors between custom ...

data mining and warehousing

... Management. Since its inception, It has continued to build on its unique software architecture to make the integration process easier to learn and use, faster to implement and maintain, and operate at the best performance possible- in other words, Simply Faster Integration. Relational database manag ...

Distributing near-real time data

... If client pulls data from server, delays result from repeatedly asking server “is new data available yet?” If server pushes data to clients, server must maintain connection information and state for each client FTP can be slow for sending many small data products In spite of these shortcomings, FTP ...

Dimensions of Database Quality

... While data quality has been the focus of a substantial amount of research, a standard definition does not exist in the literature (Wang & Madnick, 2000). The International Organization for Standardization (ISO) supplies an acceptable definition of data quality using accepted terminology from the qua ...

Data model

A data model organizes data elements and standardizes how they relate to one another. Because data elements document real-life people, places and things and the events between them, the data model represents reality: for example, a house has many windows, or a cat has two eyes. Computers are used to account for these real-life things and events, so the data model is a necessary standard for ensuring exact communication between human beings.

Data models are often used as an aid to communication between the business people defining the requirements for a computer system and the technical people defining the design in response to those requirements. They are used to show the data needed and created by business processes.

Precise accounting and communication is a large expense, and organizations have traditionally paid the cost by having employees translate between themselves on an ad hoc basis. In critical situations such as air travel, healthcare and finance, it is becoming commonplace that accounting and communication must be precise, which requires the use of common data models to obviate risk.

According to Hoberman (2009), "A data model is a wayfinding tool for both business and IT professionals, which uses a set of symbols and text to precisely explain a subset of real information to improve communication within the organization and thereby lead to a more flexible and stable application environment."

A data model explicitly determines the structure of data. Data models are specified in a data modeling notation, which is often graphical in form.

A data model can sometimes be referred to as a data structure, especially in the context of programming languages. Data models are often complemented by function models, especially in the context of enterprise models.
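The house-and-windows and cat-and-eyes examples can be written down as a small data model in code. The following is only an illustrative sketch in Python; the class names, field names and the validation rule are assumptions chosen for the example, not part of any standard notation. It shows a one-to-many relationship (a house has many windows) and a fixed cardinality (a cat has exactly two eyes).

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Window:
    # Hypothetical attributes, chosen only for illustration.
    width_cm: int
    height_cm: int


@dataclass
class House:
    address: str
    # One-to-many relationship: a house has many windows.
    windows: List[Window] = field(default_factory=list)


@dataclass(frozen=True)
class Eye:
    colour: str


@dataclass
class Cat:
    name: str
    # Fixed cardinality: the model states that a cat has exactly two eyes.
    eyes: Tuple[Eye, Eye]

    def __post_init__(self) -> None:
        # Type hints are not enforced at runtime, so check the rule explicitly.
        if len(self.eyes) != 2:
            raise ValueError("a cat must have exactly two eyes")


house = House("1 Example Street", [Window(80, 120), Window(60, 60)])
cat = Cat("Tom", (Eye("green"), Eye("green")))
print(len(house.windows), len(cat.eyes))
```

Stating the structure explicitly in this way means that anyone reading the model, whether a business analyst or a developer, sees the same relationships and constraints.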