Normal modes for the general equation Mẍ = −Kx

... Some extra notes on orthogonality: One way of thinking about the new orthogonality rules (19,20) is this: we are using the eigenvectors as our basis vectors, but they are not orthogonal; when our basis vectors are not orthogonal, we need to distinguish between the original vector space, in which the ...

A proof of the Jordan normal form theorem

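... $Av = \mu v$ for some $0 \neq v \in \operatorname{Ker}(A - \lambda\,\mathrm{Id})^k$, then $(A - \lambda\,\mathrm{Id})v = (\mu - \lambda)v$, and $0 = (A - \lambda\,\mathrm{Id})^k v = (\mu - \lambda)^k v$, so $\mu = \lambda$), and the dimension of $\operatorname{Im}(A - \lambda\,\mathrm{Id})^k$ is less than $\dim V$ (if $\lambda$ is an eigenvalue of $A$), so we can apply the induction hypothesis for $A$ acting on the vector space $V' = \operatorname{Im}(A - \lambda\,\mathrm{Id})^k$. T ...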

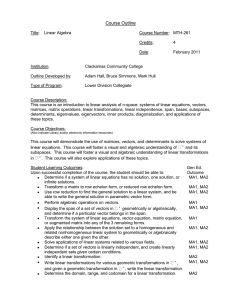

Document

... defined: addition, and multiplication by scalars. If the following axioms are satisfied by all objects u, v, w in V and all scalars k and m, then we call V a vector space and we call the objects in V vectors. 1. If u and v are objects in V, then u + v is in V. ...

Common Patterns and Pitfalls for Implementing Algorithms in Spark

... Or simply use the Spark API doubleRDD.variance() ...
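For context on what a built-in variance call saves you from writing, here is a minimal sketch of a one-pass, mergeable variance over partitioned data. It is plain Python, not Spark code, and the names `summarize`, `merge`, and `population_variance` are hypothetical. It assumes each partition is reduced to a (count, mean, M2) triple and that triples are then combined pairwise; it returns the population variance, which is an assumption about what the excerpt's `variance()` call is meant to compute.

```python
from functools import reduce


def summarize(values):
    """Reduce one partition to (count, mean, M2) in a single pass (Welford's update)."""
    n, mean, m2 = 0, 0.0, 0.0
    for x in values:
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)  # M2 accumulates the sum of squared deviations
    return n, mean, m2


def merge(a, b):
    """Combine two (count, mean, M2) summaries without revisiting the data."""
    n_a, mean_a, m2_a = a
    n_b, mean_b, m2_b = b
    n = n_a + n_b
    if n == 0:
        return 0, 0.0, 0.0
    delta = mean_b - mean_a
    mean = mean_a + delta * n_b / n
    m2 = m2_a + m2_b + delta * delta * n_a * n_b / n
    return n, mean, m2


def population_variance(partitions):
    """Variance over all values in all partitions (divides by n, not n - 1)."""
    summaries = (summarize(p) for p in partitions)
    n, _, m2 = reduce(merge, summaries, (0, 0.0, 0.0))
    if n == 0:
        raise ValueError("variance of an empty dataset is undefined")
    return m2 / n


if __name__ == "__main__":
    # Three partitions, one of them empty.
    print(population_variance([[1.0, 2.0], [3.0, 4.0], []]))
```

The merge step is associative, which is what makes this shape suitable for a distributed reduce; a naive sum of squares minus squared mean can lose precision when the mean is large relative to the spread.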
