Learning Objective Thinking Challenge Random Variables

... the trials are not independent, and where: x = number of successes; n = number of trials; f(x) = probability of x successes in n trials; N = number of elements in the population; r = number of elements in the population labeled success ...
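
These definitions match the hypergeometric distribution, whose probability function is usually written f(x) = C(r, x) C(N − r, n − x) / C(N, n). The excerpt cuts off before stating the formula, so the sketch below assumes that standard form. The function name `hypergeometric_pmf` is illustrative.

```python
from fractions import Fraction
from math import comb


def hypergeometric_pmf(x: int, n: int, N: int, r: int) -> Fraction:
    """Probability of x successes in n draws without replacement from a
    population of N elements, r of which are labeled success."""
    # Counts that cannot occur get probability zero.
    if x < 0 or x > n or x > r or n - x > N - r:
        return Fraction(0)
    # Ways to pick x successes and n - x failures, over all ways to pick n elements.
    return Fraction(comb(r, x) * comb(N - r, n - x), comb(N, n))


# Example: N = 10 elements, r = 3 labeled success, n = 4 draws.
print(hypergeometric_pmf(1, n=4, N=10, r=3))  # 1/2
```

Exact fractions are used so that the probabilities over all x sum to exactly 1.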

Lecture 2: Random variables in Banach spaces

... to check the almost sure convergence of sums of independent symmetric random variables and will play an important role in the forthcoming lectures. The proof of the Itô-Nisio theorem is based on a uniqueness property of Fourier transforms (Theorem 2.8). From this lecture onwards, we shall always as ...
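
As a scalar illustration only (the lecture itself works in Banach spaces), the series Σ ε_n / n, with independent signs ε_n = ±1 each with probability 1/2, is a sum of independent symmetric random variables that converges almost surely because Σ 1/n² < ∞. The sketch below simulates its partial sums; the weights 1/n and the function name are assumptions chosen for illustration, and a simulation can only suggest convergence, not prove it.

```python
import random


def random_signed_harmonic_partial_sums(n_terms: int, seed: int = 0) -> list[float]:
    """Partial sums S_k = sum_{n=1}^{k} eps_n / n with independent fair signs eps_n."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    total = 0.0
    sums = []
    for n in range(1, n_terms + 1):
        eps = rng.choice((-1, 1))  # symmetric: +1 or -1 with probability 1/2 each
        total += eps / n
        sums.append(total)
    return sums


sums = random_signed_harmonic_partial_sums(100_000)
# The tail after 10_000 terms has variance below 1/10_000,
# so the partial sums barely move once k is large.
print(sums[9_999], sums[-1])
```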

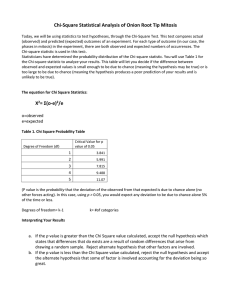

Appendix D Chi-square Analysis and Exercises

... this big a deviation from the expected results. By convention, when the probability that a deviation is due to chance alone is five in one hundred or less, the deviation is considered significant. If this is so, then some factors are probably operating other than those on which th ...
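
A minimal sketch of the convention above, assuming two outcome categories (so 1 degree of freedom) and a hypothetical 3:1 expected ratio; the counts are made up for illustration. The value 3.841 is the standard chi-square critical value for 1 degree of freedom at the 0.05 level.

```python
def chi_square_statistic(observed: list[float], expected: list[float]) -> float:
    """Pearson chi-square statistic: sum of (observed - expected)^2 / expected."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical cross: 400 offspring with an expected 3:1 ratio (300 : 100).
observed = [290, 110]
expected = [300, 100]

CRITICAL_1DF_P05 = 3.841  # chi-square critical value, 1 degree of freedom, p = 0.05

stat = chi_square_statistic(observed, expected)
print(round(stat, 3))            # 1.333
print(stat >= CRITICAL_1DF_P05)  # False, so the deviation is not significant
```

Degrees of freedom here are the number of categories minus one; with more categories, the critical value must be looked up for the corresponding degrees of freedom.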

(pdf)

... p < p_c, open clusters are almost surely finite, and we can ask questions about their size (Section 4.2). When p > p_c, there is almost surely an infinite open cluster, and we can ask questions about how many such infinite open clusters there are (Section 4.3). Combining results from both phases, we ...
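
To make the two phases concrete, here is a hedged sketch of bond percolation on a finite n × n box of the square lattice, where each nearest-neighbour edge is open independently with probability p. The lattice, box size, and function name are assumptions, since the excerpt does not fix a graph; for bond percolation on Z² it is known that p_c = 1/2. A finite box cannot contain an infinite cluster, so the size of the largest open cluster only serves as a proxy for the two phases.

```python
import random
from collections import Counter


def largest_open_cluster(n: int, p: float, seed=None) -> int:
    """Bond percolation on an n x n box of the square lattice.

    Each nearest-neighbour edge is open independently with probability p;
    returns the number of vertices in the largest open cluster.
    """
    rng = random.Random(seed)
    parent = list(range(n * n))  # union-find forest over vertex indices

    def find(v: int) -> int:
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    def union(u: int, v: int) -> None:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv

    for i in range(n):
        for j in range(n):
            v = i * n + j
            if j + 1 < n and rng.random() < p:  # edge to the right-hand neighbour
                union(v, v + 1)
            if i + 1 < n and rng.random() < p:  # edge to the neighbour below
                union(v, v + n)

    sizes = Counter(find(v) for v in range(n * n))
    return max(sizes.values())


for p in (0.3, 0.5, 0.7):
    print(p, largest_open_cluster(100, p, seed=1))
```

On a 100 × 100 box, p = 0.3 typically yields only small clusters, while p = 0.7 typically yields one cluster covering a large fraction of the box.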

Topic 4

... there are various difficulties that stand in the way of this answer. The most evident, perhaps, is that there are presumably an infinite number of possible worlds. So what sense can one make, for example, of the claim that p is true in, say, 75% of those worlds? Another, less familiar and less obvio ...

STOCHASTIC PROCESSES Basic notions

... Discrete-time stochastic processes are also called random sequences. ...

COS513 LECTURE 8 STATISTICAL CONCEPTS 1. M .

... The expression implies that θ is a random variable and requires us to specify its marginal probability p(θ), which is known as the prior. As a result, we can compute the conditional probability of the parameter set given the data x, p(θ | x), which is known as the posterior. Thus, Bayesian inference results not in ...
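
To make the prior-to-posterior step concrete, here is a minimal sketch under a model the excerpt does not specify: θ is the success probability of independent Bernoulli trials and has a Beta(a, b) prior. Because the Beta prior is conjugate to this likelihood, observing k successes in n trials gives the posterior Beta(a + k, b + n − k). The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    a: float  # shape parameter associated with successes
    b: float  # shape parameter associated with failures

    def mean(self) -> float:
        return self.a / (self.a + self.b)


def posterior(prior: Beta, successes: int, trials: int) -> Beta:
    """Conjugate update: Beta(a, b) prior plus binomial data gives Beta(a + k, b + n - k)."""
    return Beta(prior.a + successes, prior.b + trials - successes)


prior = Beta(1, 1)  # uniform prior over theta in [0, 1]
post = posterior(prior, successes=7, trials=10)
print(post, post.mean())  # posterior is Beta(8, 4), with mean 2/3
```

The result is a full distribution over θ rather than a single point estimate; its mean is just one summary of it.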

Probability

... of symmetry and random mixing. These are simplified representations of concrete, physical reality. When we use a coin toss as an example, we are assuming that the coin is perfectly symmetric and the toss is sufficiently chaotic to guarantee equal probabilities for both outcomes (head or tail). From ...

Probabilistic Reasoning

... We may have a model for how causes lead to effects, P(X | Y); we may also have prior beliefs (based on experience) about how often the causes occur, P(Y); together these allow us to reason abductively from effects to causes, P(Y | X). ...
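
This reasoning pattern is Bayes' rule, P(Y | X) = P(X | Y) P(Y) / P(X), where P(X) = Σ_y P(X | y) P(y). The small sketch below assumes a finite set of mutually exclusive, exhaustive causes. The fever, flu, and cold numbers are made up for illustration.

```python
def posterior_over_causes(likelihood: dict[str, float],
                          prior: dict[str, float]) -> dict[str, float]:
    """P(Y = y | X = x) for each cause y, given P(X = x | Y = y) and P(Y = y)."""
    joint = {y: likelihood[y] * prior[y] for y in prior}  # P(x | y) P(y)
    evidence = sum(joint.values())                         # P(x), by total probability
    return {y: j / evidence for y, j in joint.items()}


# Hypothetical numbers: the observed effect X is "fever"; the possible causes are Y.
prior = {"flu": 0.1, "cold": 0.9}       # P(cause)
likelihood = {"flu": 0.9, "cold": 0.2}  # P(fever | cause)
print(posterior_over_causes(likelihood, prior))  # flu is about 1/3, cold about 2/3
```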

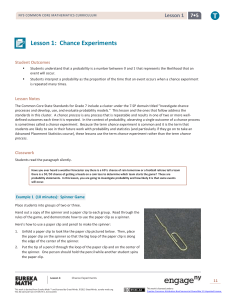

Lesson 1 7•5

... standards in this cluster. A chance process is any process that is repeatable and results in one of two or more well-defined outcomes each time it is repeated. In the context of probability, observing a single outcome of a chance process is sometimes called a chance experiment. Because the term chanc ...

Luby's Algorithm

... X_0, …, X_{n-1} are chosen mutually independently, such that on average a set of values for these random variables is good. A vertex is good if ...
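
The excerpt does not show which variant of Luby's algorithm it analyzes. As a hedged sketch, here is the widely taught random-priority variant for finding a maximal independent set. In each round, every remaining vertex draws an independent random value. Vertices whose value is smaller than those of all their remaining neighbours join the set, and then they and their neighbours are removed. The adjacency-dict representation and the names are assumptions.

```python
import random


def luby_mis(adj: dict[int, set[int]], seed=None) -> set[int]:
    """Maximal independent set via random priorities (one common Luby variant).

    adj maps each vertex of an undirected graph to the set of its neighbours.
    """
    rng = random.Random(seed)
    active = set(adj)  # vertices not yet decided
    mis: set[int] = set()
    while active:
        # Each active vertex draws an independent random value.
        value = {v: rng.random() for v in active}
        # A vertex wins if its value is strictly below every active neighbour's,
        # so two adjacent vertices can never both win in the same round.
        winners = {
            v for v in active
            if all(value[v] < value[u] for u in adj[v] if u in active)
        }
        mis |= winners
        # Winners and their neighbours are now decided; drop them.
        removed = set(winners)
        for v in winners:
            removed |= adj[v]
        active -= removed
    return mis


cycle = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
print(sorted(luby_mis(cycle, seed=42)))
```

Each round, the active vertex holding the smallest value always wins, so the loop makes progress. The appeal of the method is that every round is easy to parallelize.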

Improved Class Probability Estimates from Decision Tree Models

... probabilities P(k|x). How can we obtain such estimates from decision trees? Existing decision tree algorithms estimate P(k|x) separately in each leaf of the decision tree by computing the proportion of training data points belonging to class k that reach that leaf. There are three difficulties with ...
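
The leaf estimate described above is a class frequency: the count of class k among the training points that reach a leaf, divided by the number of points in that leaf. The sketch below shows that raw estimate. For comparison, it also shows a Laplace-corrected variant, which is one commonly used adjustment. The excerpt does not say which remedy this work proposes, and the function name is illustrative.

```python
from collections import Counter


def leaf_class_probs(leaf_labels: list[str], classes: list[str],
                     laplace: bool = False) -> dict[str, float]:
    """Estimate P(k | x) for points reaching one leaf from that leaf's training labels."""
    counts = Counter(leaf_labels)
    n, k = len(leaf_labels), len(classes)
    if laplace:
        # Laplace correction: one pseudo-count per class, so with two or more
        # classes no estimate is exactly 0 or 1.
        return {c: (counts[c] + 1) / (n + k) for c in classes}
    # Raw frequency estimate; raises ZeroDivisionError for an empty leaf.
    return {c: counts[c] / n for c in classes}


labels = ["a", "a", "a"]  # a small, pure leaf
print(leaf_class_probs(labels, ["a", "b"]))                # {'a': 1.0, 'b': 0.0}
print(leaf_class_probs(labels, ["a", "b"], laplace=True))  # {'a': 0.8, 'b': 0.2}
```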

Probability - James Scott

... a feisty battle. Galois died at age 20 from wounds suffered in a duel related to a broken love affair. Brahe also dueled at age 20, allegedly over a mathematical theory, and lost the tip of his nose in the process. He died at age 54; scholars remain unsure whether he suffered kidney failure after a ni ...

Ars Conjectandi

Ars Conjectandi (Latin for The Art of Conjecturing) is a book on combinatorics and mathematical probability written by Jakob Bernoulli and published in 1713, eight years after his death, by his nephew, Niklaus Bernoulli. The seminal work consolidated, apart from many combinatorial topics, many central ideas in probability theory, such as the very first version of the law of large numbers; indeed, it is widely regarded as the founding work of that subject. It also addressed problems that are today classified under the twelvefold way and added to those topics. Consequently, many historians of mathematics have called it an important landmark not only in probability but in combinatorics as a whole. This early work had a large impact on both contemporary and later mathematicians, for example Abraham de Moivre.

Bernoulli wrote the text between 1684 and 1689, drawing on the work of mathematicians such as Christiaan Huygens, Gerolamo Cardano, Pierre de Fermat, and Blaise Pascal. He incorporated fundamental combinatorial topics, such as his theory of permutations and combinations (the aforementioned problems from the twelvefold way), as well as topics more distantly connected to the burgeoning subject, for instance the derivation and properties of the eponymous Bernoulli numbers. Core topics from probability, such as expected value, also made up a significant portion of this important work.