... displayed in tables, graphs, and formulas is an essential quantitative skill for success. This course is an introduction to applied statistics. Topics include descriptive statistics, elementary probability, discrete and continuous distributions, interval and point estimation, hypothesis testing, reg ...

Simple Regression

... The formula method computes b and a such that the sum of squares of the differences between Y's and Y-hats is smaller than it would be for any other values. Called a least squares solution. The mean is a least squares measure. The variance (and standard deviation) about the mean is smaller than it i ...

5 Omitted and Irrelevant variables

... I.e. correlated with error term from previous time periods. 5. Explanatory variables are fixed: observe normal distribution of y for repeated fixed values of x. 6. No linear relationship between RHS variables, i.e. no “multicollinearity”. It is important, then, before we attempt to interpret or generalise ...

Overview of Supervised Learning

... ith observation from the N -vector xj consisting of all the observations on variable Xj . Since all vectors are assumed to be column vectors, the ith row of X is xTi , the vector transpose of xi . For the moment we can loosely state the learning task as follows: given the value of an input vector X, ...

cjt765 class 7

... data (observed covariances) were drawn from this population. Most forms are simultaneous. The fitting function is related to discrepancies between observed covariances and those predicted by the model. Typically iterative, deriving an initial solution and then improving it through various calculations. G ...

Chapter 18 Seemingly Unrelated Regression Equations Models

... A basic feature of the multiple regression model is that it describes the behaviour of a particular study variable based on a set of explanatory variables. When the objective is to explain the whole system, there may be more than one multiple regression equation. For example, in a set of individual line ...

y 1,T , y 2,T y 1,T+1 , y 2,T+1 y 1,T+1 , Y 2,T+1 y 1,T+2 - Ka

... Call it scenario analysis or contingency analysis – based on some assumed h-step-ahead value of the exogenous variables. ...

Detecting Structural Change Using SAS ETS Procedures

... The analysis of time series is an important statistical methodology with principal contributions developed by academic disciplines such as biometrics, economics and sociology. Recent developments in ARIMA modeling, spectral analysis and X12 methodology have added immensely to the usefulness of the m ...

Regression

... * Note this statement provides a general sense of what a confidence interval does for us in concise language for ease of understanding. The specific statistical interpretation is that if many independent samples are taken where the levels of the predictor variable are the same as in the data set, an ...

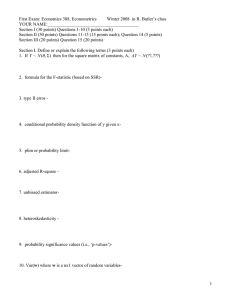

e388_08_Win_Exam1

... 13. For the simplest regression (one slope variable, no intercept in the model), we have $y_i = \beta x_i + \varepsilon_i$, and the following picture for our particular sample, where the length of the y-vector is 8 as indicated, and the length of the x-vector is 5. If the angle between the x-vector and the y-vector is 4 ...

GEODA DIAGNOSTICS FOR

... [Note: In the present case, ˆ ( ˆ0 , ˆ1,ˆ 2 , ˆ) , so this should be k 4 ]. Intuitively, AIC is an “adjusted log likelihood” that is penalized for the number of parameter fitted, in a manner analogous to adjusted R-Square. A second criterion that incorporates sample size (n ) into the penal ...

Additional Exercises

... that needs to be inverted to perform GLS? 4-12 Consider regression of y on x when data (y; x) take values ( 1; 2), (0; 1), (0; 0), (0; 1) and (1; 2). (a) Using an appropriate statistical package obtain the OLS estimate of the slope coefficient and the least absolute deviations regression estimate of ...

Elements of Statistical Learning Printing 10 with corrections

... most useful encoding - dummy variables, e.g. vector of K bits for a K-level qualitative measurement or more efficient version ...

5 Multiple Linear Regression 81

... delete or impute cases with missing values, then multiple predictors will lead to a higher rate of case deletion or imputation. – Parsimony is an important property of good models. We obtain more insight into the influence of predictors in models with few parameters. – Estimates of regression coeffi ...

Scalable Look-Ahead Linear Regression Trees

... The principle of fitting models to the branches of candidate splits is not new. For more than a decade, Karalic’s RETIS [6] model tree algorithm has optimized the overall RSS, simultaneously optimizing the split and the models in the leaf nodes to minimize the global RSS. However, this algorithm is ofte ...

Statistical Models for Probabilistic Forecasting

... informative to forecast the actual temperature or windspeed, rather than a threshold probability. • This is not true if, as often happens, only a single predicted value is given. • It would be true if we use distributional assumptions about the error term, e, to construct a probability distribution ...

Statistics: A Brief Overview Part I

... • Dependent Variable: variable you believe may be influenced / modified by treatment or exposure. May represent variable you are trying to predict. Sometimes referred to as response or outcome variable. ...

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile of the conditional distribution of y given X, is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously, and to be used extensively in practical applications. This is because models which depend linearly on their unknown parameters are easier to fit than models which are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

- If the goal is prediction, or forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.
- Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
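
To make the least squares and ridge fits described above concrete, here is a minimal sketch for the simple (one-predictor) case, using only the Python standard library. The function names `fit_line` and `predict`, the parameter `l2_penalty`, and the sample data are all hypothetical choices for illustration, not taken from any particular package. The sketch assumes the intercept is left unpenalized, which is a common convention but not the only one.

```python
def fit_line(x, y, l2_penalty=0.0):
    """Fit y ~ a + b*x by minimizing sum((y_i - a - b*x_i)^2) + l2_penalty * b^2.

    With l2_penalty = 0 this is ordinary least squares; a positive value
    gives a ridge fit in which only the slope b is penalized (assumption).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Centered sums of squares and cross-products.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    if sxx + l2_penalty == 0:
        raise ValueError("x has no variation, so the slope is undefined")
    b = sxy / (sxx + l2_penalty)   # slope, shrunk toward 0 when penalized
    a = y_bar - b * x_bar          # intercept from the centered solution
    return a, b


def predict(a, b, x_new):
    """Predict y for a new x value from a fitted intercept and slope."""
    return a + b * x_new


# Made-up data lying exactly on the line y = 1 + 2x.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
intercept, slope = fit_line(xs, ys)
ridge_intercept, ridge_slope = fit_line(xs, ys, l2_penalty=5.0)
print(intercept, slope, predict(intercept, slope, 5))
print(ridge_intercept, ridge_slope)
```

On this data the unpenalized fit recovers intercept 1 and slope 2, while the penalized fit yields a smaller slope, which illustrates how the L2 penalty shrinks the coefficient. Least absolute deviations and lasso fits have no comparably simple closed form in general and are usually computed iteratively.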