Statistics Day 1

... • The distribution of a variable tells us what values the variable takes and how often it takes these values. ...

PPT - Cambridge University Press

... 2. Use White’s heteroscedasticity consistent standard error estimates. The effect of using White’s correction is that in general the standard errors for the slope coefficients are increased relative to the usual OLS standard errors. This makes us more “conservative” in hypothesis testing, so that we ...

Application of Statistical Methods for Gas Turbine Plant Operation

... The simple statistical measure represents a univariate approach to data analysis, which lacks the ability to constructively analyse large, multivariate data sets as the interactions between variables are ignored (Martin et al., 1996). In contrast, multivariate statistical analysis describes methods ...

Multilevel Regression Models

... • n individuals observed from each of K schools (total of nK observations) • if Yik= Yjk for all i and j in school k, then knowing k completely determines Y, so there are really only K unique observations • In this case, we can just treat each school as a single observation (with outcome Y.k), and u ...

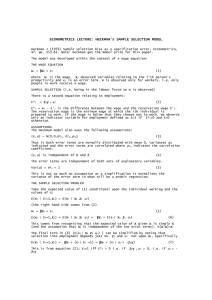

PDF

... some cases minimize mean absolute error by using moments up to seventh order. Interest will often focus on a function that depends on GMM estimates and other estimates obtained from the same data. Such functions include those giving the coefficients on the partialled-out regressors and that giving t ...

Using Propensity Scores to Adjust for Treatment Selection Bias

... Estimating the effect of drug treatment on outcomes requires adjusting for many observed factors, particularly those influencing drug selection. This paper demonstrates the use of PROC LOGISTIC in creating propensity scores to address such potential treatment selection bias. In this example using a ...

Estimation of the marginal rate of substitution in

... Singleton (1932, 1933), Dunn and Singleton (1933, 1936), Grossman and Shiller (1931), and Mankiw and Shapiro (1934) have been generally negative: ...

108 pages - Pearson Schools and FE Colleges

... generate points and plot graphs of simple quadratic functions, then more general quadratic functions ...

Count Regression Models in SAS®

... positive contagion and unobserved heterogeneity. To address these issues, one extension of the Poisson model is to include an unobserved specific effect ε_i in the μ_i parameter; this specific effect can be treated as random or fixed. In ...

algebra 1 hs

... Use formulas Real-world application Methods for solving inequalities in one variable Write inequalities that model a given problem situation Assign a variable and restrictions to EA-1.3 the variable EA-1.4 A.CED.1 Solve absolute value inequalities EA-1.5 A.REI.1 Include fractions and decima ...

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile of the conditional distribution of y given X, is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously and to be used extensively in practical applications. This is because models that depend linearly on their unknown parameters are easier to fit than models that are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

• If the goal is prediction, forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.

• Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function, as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
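
As a rough illustration of the fitting and prediction steps described above, the following sketch fits a simple linear regression (one explanatory variable) by least squares and then predicts y for a new value of x. The function names `fit_simple` and `predict`, the `lam` parameter, and the small data set are all hypothetical and chosen only for illustration. Setting `lam` above zero gives a one-variable version of the ridge (L2-norm) penalty mentioned above, applied to the slope only; penalizing the slope but not the intercept is an assumption of this sketch.

```python
def fit_simple(x, y, lam=0.0):
    """Fit y ≈ a + b*x by minimising sum((y - a - b*x)^2) + lam * b^2.

    lam = 0 gives ordinary least squares; lam > 0 adds an L2 (ridge)
    penalty on the slope b only (the intercept a is left unpenalised).
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Centred sums of squares and cross-products.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / (sxx + lam)      # slope
    a = y_bar - b * x_bar      # intercept
    return a, b


def predict(a, b, x_new):
    """Predicted value of y at x_new under the fitted line."""
    return a + b * x_new


# Made-up illustrative data, roughly following y = 2x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

a_ols, b_ols = fit_simple(x, y)                 # least squares fit
a_ridge, b_ridge = fit_simple(x, y, lam=5.0)    # penalised fit, smaller slope
y_hat = predict(a_ols, b_ols, 6.0)              # prediction for an unseen x
```

The penalized slope is always closer to zero than the least squares slope, which is the shrinkage effect a ridge penalty is meant to produce. With several explanatory variables the same idea is usually expressed in matrix form rather than with these scalar sums.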