Mediation Analysis with Two Waves

... models or a single structural equation model. This approach to mediation with two waves may not always be the most appropriate. In some cases, the XM relationship or the MY relationship may be hypothesized to be more immediate, in which case the lag time of the study may not be the best match for one ...

The method of least squares

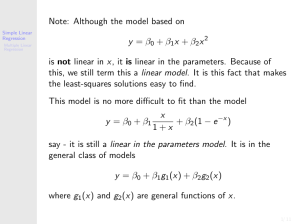

... where the random variables $U_1, U_2, \ldots, U_n$ have zero expectation and variance $\sigma^2$. Method of least squares: choose values for $\alpha$ and $\beta$ such that $S(\alpha,\beta)=\sum_{i=1}^{n}(y_i-\alpha-\beta x_i)^2$ is minimal. ...
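The minimization described in this excerpt has a well-known closed-form solution for a single explanatory variable. The sketch below assumes the criterion is the sum of squared residuals; the function names and the sample data are illustrative and do not come from the excerpted document.

```python
def least_squares_fit(xs, ys):
    """Return (alpha, beta) minimizing the sum of (y - alpha - beta*x)**2."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Centered sums of squares and cross-products.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    beta = sxy / sxx
    alpha = y_bar - beta * x_bar
    return alpha, beta


def sum_of_squares(alpha, beta, xs, ys):
    """Evaluate the least squares criterion S(alpha, beta)."""
    return sum((y - alpha - beta * x) ** 2 for x, y in zip(xs, ys))


# Made-up data for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
alpha_hat, beta_hat = least_squares_fit(xs, ys)
s_min = sum_of_squares(alpha_hat, beta_hat, xs, ys)
print(alpha_hat, beta_hat, s_min)
```

Moving either estimate away from the fitted values should only increase `sum_of_squares`, which is a quick way to sanity-check the fit.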

AP Statistics - Linear Regression : Interpreting

... Check the context of the situation. Often, there can be no such value. In this case, it is not possible to have a volume that is negative nor is it possible to have a height of zero. Height/Slope --- 1.5433 This is the coefficient of the explanatory variable, thus it is the slope. This entire line ...

Inference about the Slope and Intercept

... • Note that the predicted value above is the same as the estimate of E(Y) at Xh,new, but it has a larger variance. • The predicted value Ŷh,new has two sources of variability. One is due to the regression line being estimated by b0 + b1X. The second is due to εh,new, i.e., points don’t fall exac ...
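The two sources of variability can be made concrete with the standard simple-regression formulas: the variance of the estimated mean response is MSE(1/n + (x_new − x̄)²/Sxx), and the variance for predicting a new observation adds one further MSE for the new error term. The sketch below is a minimal illustration under those textbook formulas; the function name and data are hypothetical.

```python
def prediction_variances(xs, ys, x_new):
    """Return (y_hat, var_mean, var_pred, mse) for simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    # Mean squared error with n - 2 degrees of freedom.
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    mse = sse / (n - 2)
    # Source 1: uncertainty in the estimated line b0 + b1 * x.
    var_mean = mse * (1 / n + (x_new - x_bar) ** 2 / sxx)
    # Source 2: the new observation's own error term adds one more MSE.
    var_pred = mse + var_mean
    return b0 + b1 * x_new, var_mean, var_pred, mse


# Made-up data for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
y_hat, var_mean, var_pred, mse = prediction_variances(xs, ys, x_new=6.0)
print(y_hat, var_mean, var_pred)
```

The point estimate is identical in both cases; only the variance, and therefore the width of the interval, differs.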

PDF, Normal Distribution and Linear Regression

... then: • 68% of students will have scores between 450 and 550 • 95% will be between 400 and 600 • 99.7% will be between 350 and 650 ...
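These percentages match the 68-95-99.7 rule for a normal distribution. The sketch below assumes a mean of 500 and a standard deviation of 50, values inferred from the quoted intervals rather than stated in the excerpt, and uses the standard library's `statistics.NormalDist` to compute the three probabilities.

```python
from statistics import NormalDist

# Assumed parameters, inferred from the intervals 450-550, 400-600, 350-650.
scores = NormalDist(mu=500, sigma=50)


def prob_between(dist, lo, hi):
    """Probability that a draw from dist falls between lo and hi."""
    return dist.cdf(hi) - dist.cdf(lo)


p1 = prob_between(scores, 450, 550)  # within one standard deviation
p2 = prob_between(scores, 400, 600)  # within two standard deviations
p3 = prob_between(scores, 350, 650)  # within three standard deviations
print(round(p1, 4), round(p2, 4), round(p3, 4))
```

The exact probabilities are slightly different from the rounded 68%, 95%, and 99.7% figures, which are a rule of thumb.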

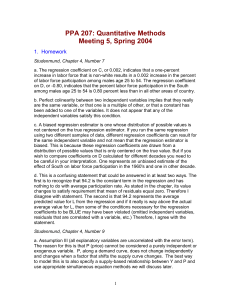

PPA 207: Quantitative Methods

... a. The regression coefficient on C, or 0.002, indicates that a one-percent increase in labor force that is non-white results in a 0.002 increase in the percent of labor force participation among males age 25 to 54. The regression coefficient on D, or -0.80, indicates that the percent labor force par ...

Regression - gozips.uakron.edu

... In many situations an exact relationship does not exist. Often there are many y’s that can be observed for a given x. This type of relationship is called a statistical relationship. Ex: Consider the relationship between square footage in a house and mortgage cost. For a given square footage, there ...

document

... The slope of 1.487 means that for each increase of one unit in X, Y is estimated to increase by 1.487 units. For each increase of 1 square foot in the size of the store, the model predicts that expected annual sales increase by $1487. ...

Chapter 14: Inference for Regression

... relation between mean salaries for assistant professors (junior faculty) and full professors (senior faculty) at a given university. In particular, do universities pay (relatively) high salaries to both assistant and full professors, or are full professors treated much better than assistant professo ...

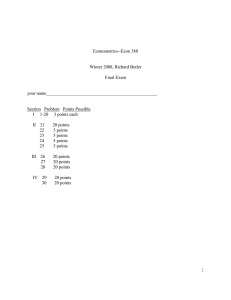

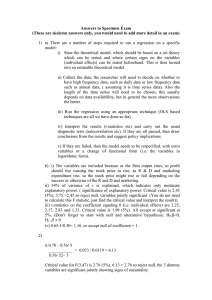

e388_08_Win_Final

... z* and so that E ( | z*) 0 (and is uncorrelated with X, z, and z*). Indicate whether or not there is “simultaneous equation” bias if Y is regressed on X and z* (as always, you are only graded on your explanation, not on your guess as to the right answer). ...

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile of the conditional distribution of y given X, is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously, and to be used extensively in practical applications. This is because models which depend linearly on their unknown parameters are easier to fit than models which are non-linearly related to their parameters and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:

• If the goal is prediction, or forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.

• Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
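To illustrate how a penalized least squares fit differs from the ordinary one, the sketch below compares the two for a single predictor. It assumes centered data and an unpenalized intercept, in which case the ridge slope has the closed form Sxy / (Sxx + λ); the function name, the penalty value, and the data are illustrative only.

```python
def ridge_slope(xs, ys, lam):
    """Slope minimizing sum((y - a - b*x)**2) + lam * b**2 (intercept unpenalized)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    # With lam = 0 this reduces to the ordinary least squares slope.
    return sxy / (sxx + lam)


# Made-up data for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
ols = ridge_slope(xs, ys, lam=0.0)
shrunk = ridge_slope(xs, ys, lam=10.0)
print(ols, shrunk)
```

A larger penalty pulls the slope toward zero, trading some bias for lower variance; the lasso's L1 penalty has no comparably simple closed form in the multi-predictor case and is not shown here.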