Fast Clustering and Classification using P

... based on them. Two types of classification algorithms as well as one clustering algorithm that use ideas from traditional algorithms, adapt them to the P-tree setting, and introduce new improvements are described. All algorithms are fundamentally based on kerneldensity estimates that can be seen as ...

... based on them. Two types of classification algorithms as well as one clustering algorithm that use ideas from traditional algorithms, adapt them to the P-tree setting, and introduce new improvements are described. All algorithms are fundamentally based on kerneldensity estimates that can be seen as ...

Excercise

... want either to find the location LOC of ITEM in the array DATA, or to send some message, such as LOC = 0, to indicate that ITEM does not appear in DATA. The linear search algorithm solves this problem by comparing ITEM, one by one, with each element in DATA. That is, we compare ITEM with DATA[1], the ...

... want either to find the location LOC of ITEM in the array DATA, or to send some message, such as LOC = 0, to indicate that ITEM does not appear in DATA. The linear search algorithm solves this problem by comparing ITEM, one by one, with each element in DATA. That is, we compare ITEM with DATA[1], the ...

Program Design

... • Program documentation should not be listed as the last step in the program development process, it is really an ongoing task from the initial definition of the problem to the final test result • Documentation involves both external documentation (such as hierarchy charts) and internal documentatio ...

... • Program documentation should not be listed as the last step in the program development process, it is really an ongoing task from the initial definition of the problem to the final test result • Documentation involves both external documentation (such as hierarchy charts) and internal documentatio ...

lect1 - University of South Carolina

... Why you want to study Algorithms? • Viterbi algorithm conceived by Andrew Viterbi in 1967 as an error-correction scheme for noisy digital communication links, finding universal application in decoding the convolutional codes used in both • Viterbi algorithm is a standard component of tens of millio ...

... Why you want to study Algorithms? • Viterbi algorithm conceived by Andrew Viterbi in 1967 as an error-correction scheme for noisy digital communication links, finding universal application in decoding the convolutional codes used in both • Viterbi algorithm is a standard component of tens of millio ...

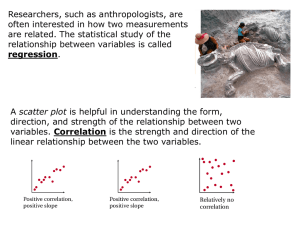

notes

... Sometimes the dependent variable is also binary (employed or unemployed) Linear Probability Model (LPM) estimates the response probability as linear in the ...

... Sometimes the dependent variable is also binary (employed or unemployed) Linear Probability Model (LPM) estimates the response probability as linear in the ...

Slide 1

... Sometimes the dependent variable is also binary (employed or unemployed) Linear Probability Model (LPM) estimates the response probability as linear in the ...

... Sometimes the dependent variable is also binary (employed or unemployed) Linear Probability Model (LPM) estimates the response probability as linear in the ...

15-451 Homework 1 Jan 20, 2008

... (a) What is the probability that X (the outcome) is 1? (b) What is the probability that X is even? (c) What is the probability that X ≥ 5? 2. Given two independent, fair 6-sided dice: (a) What is the probability that X1 = 1 and X2 = 1? (b) What is the probability that X1 = 1 or X2 = 1? (c) What is t ...

... (a) What is the probability that X (the outcome) is 1? (b) What is the probability that X is even? (c) What is the probability that X ≥ 5? 2. Given two independent, fair 6-sided dice: (a) What is the probability that X1 = 1 and X2 = 1? (b) What is the probability that X1 = 1 or X2 = 1? (c) What is t ...

Expectation–maximization algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method for finding maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter-estimates are then used to determine the distribution of the latent variables in the next E step.