Chapter 4, General Vector Spaces

Section 4.1, Real Vector Spaces

In this chapter we call any objects that satisfy a certain set of axioms vectors. This can be thought of as generalizing the idea of a vector to a broader class of objects.

Vector space axioms:

Definition: Let V be an arbitrary nonempty set of objects on which two operations are defined: addition, and multiplication by scalars.

By addition we mean a rule for associating with each pair of objects u and v in V an

object u+v, called the sum of u and v;

By scalar multiplication we mean a rule for associating with each scalar k and each object u in V an object ku, called the scalar multiple of u by k.

If the following axioms are satisfied by all objects u,v,w in V and all scalars k and m, then

we call V a vector space and we call the objects in V vectors.

1. If u and v are vectors in V, then u+v is in V.

2. u+v=v+u

3. u+(v+w) = (u+v)+w

4. There is an object 0 in V, called a zero vector for V, such that 0 + u = u + 0 = u

for all u in V.

5. For each u in V, there is an object -u in V, called a negative of u such that u+(−u) =

(−u) + u = 0

6. If k is any scalar and u is any object in V, then ku is in V.

7. k(u+v)=ku+kv

8. (k+m)u=ku+mu

9. k(mu)=(km)u

10. 1u=u

Note:

• When the scalars are real numbers, V is called a real vector space.

• When the scalars are complex numbers, V is called a complex vector space.

• Addition and scalar multiplication do not need to be the operations we defined

before.


Examples:

1. Zero vector space: V = {0}, 0+0=0, k0=0

2. The set of all n × m matrices with real entries, with addition = matrix addition and scalar multiplication = scalar matrix multiplication, forms a real vector space.

(a) If A and B are n × m matrices then A+B is also an n × m matrix

(b) A+B=B+A

(c) A+(B+C)=(A+B)+C

(d) There is a zero matrix 0 such that A+0=0+A=A

(e) For each matrix A we have -A such that A+(-A)=0

(f) If k is any real scalar, then kA is in V

(g) k(A+B)=kA+kB

(h) (k+m)A=kA+mA

(i) k(mA)=(km)A

(j) 1A=A, here 1 is the scalar 1.

These properties hold by Theorem 1.4.1 and Theorem 1.4.2.

3. Every plane through the origin is a vector space:

Here our set is the set of points of the plane, and addition and scalar multiplication are the usual ones.

The zero vector 0 lies in the plane. If ax + by + cz = 0 is the equation of the plane, then for any u = (u1, u2, u3) and v = (v1, v2, v3) in the plane we have a(u1 + v1) + b(u2 + v2) + c(u3 + v3) = (au1 + bu2 + cu3) + (av1 + bv2 + cv3) = 0 + 0 = 0, so u+v is in the plane as well. The other properties can be checked similarly.

4. The set of all real-valued functions defined on the entire real line (−∞, ∞) with

addition (f + g)(x) = f (x) + g(x)

scalar multiplication (kf )(x) = kf (x)

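is a real vector space. Here the zero vector is the zero function, 0(x) = 0 for all x, the negative of f is the function −f, and the scalar 1 acts as the identity, (1f)(x) = f(x).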

Example: Let V be the set of positive real numbers. Show that V is a vector space with

addition: u + v = uv, and scalar multiplication: ku = u^k

Solution: We need to check that vector space axioms are satisfied by the objects of V.

1. Let u and v be objects in V, which means u and v are positive real numbers. Then u+v=uv is also in V, since the product of two positive real numbers is positive.

2. u+v=v+u, since uv=vu for real numbers.

3. u+(v+w)=(u+v)+w, since u(vw)=(uv)w for real numbers.

4. There must be a zero vector 0 in V such that 0+u=u+0=u.

Let us denote this zero vector by a. Then we want u=a+u=au, and dividing by u gives a=1. That is, the zero vector of V is the number 1.

5. For each u in V there must be an object -u in V such that u+(-u)=(-u)+u=0. In our vector space 0=1, so we want (-u) such that u+(−u) = u(−u) = 1 ⇒ −u = 1/u, which is a positive real number and hence in V.

6. If k is any scalar and u is an object in V, then ku must be in V. We have ku = u^k, and since u is a positive real number, u^k is also a positive real number.

7. k(u+v)=ku+kv: note that k(u + v) = (uv)^k = u^k v^k = ku + kv

8. (k + m)u = u^(k+m) = u^k u^m = ku + mu

9. k(mu) = k(u^m) = (u^m)^k = u^(mk) = (km)u

10. 1u = u^1 = u
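The verification above can also be spot-checked numerically. The following is a minimal sketch, not part of the original notes: the helper names `add`, `smul`, `neg`, and `check_axioms` are hypothetical, and the random sampling ranges are arbitrary choices. Passing such a check does not prove the axioms; it only gives evidence that the algebra above was carried out correctly.

```python
import math
import random

def add(u, v):
    """Vector addition on V = positive reals: u + v is defined as the product uv."""
    return u * v

def smul(k, u):
    """Scalar multiplication on V: ku is defined as the power u**k."""
    return u ** k

ZERO = 1.0  # the zero vector of V is the number 1

def neg(u):
    """Negative of u in V: the reciprocal 1/u."""
    return 1.0 / u

def check_axioms(u, v, w, k, m):
    """Return True if axioms 2-5 and 7-10 hold (up to rounding) for these samples."""
    close = lambda x, y: math.isclose(x, y, rel_tol=1e-9)
    return all([
        close(add(u, v), add(v, u)),                              # axiom 2
        close(add(u, add(v, w)), add(add(u, v), w)),              # axiom 3
        close(add(ZERO, u), u),                                   # axiom 4
        close(add(u, neg(u)), ZERO),                              # axiom 5
        close(smul(k, add(u, v)), add(smul(k, u), smul(k, v))),   # axiom 7
        close(smul(k + m, u), add(smul(k, u), smul(m, u))),       # axiom 8
        close(smul(k, smul(m, u)), smul(k * m, u)),               # axiom 9
        close(smul(1, u), u),                                     # axiom 10
    ])

random.seed(0)
samples = [
    (random.uniform(0.1, 10), random.uniform(0.1, 10), random.uniform(0.1, 10),
     random.uniform(-3, 3), random.uniform(-3, 3))
    for _ in range(100)
]
all_ok = all(check_axioms(*s) for s in samples)
print(all_ok)
```

Axioms 1 and 6 (closure) are not tested separately here, since products and real powers of positive numbers are positive.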

Examples that are not vector spaces:

1. Let V = R^2 and define addition as v + w = (v1 + w1, v2 + w2) and scalar multiplication as kv = (kv1, 0).

Now consider 1v = (v1, 0) ≠ (v1, v2) whenever v2 ≠ 0. Hence Axiom 10 fails and V cannot be a vector space.

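2. Consider the set of all 2 × 2 matrices of the form

$$\begin{bmatrix} a & 1 \\ 1 & b \end{bmatrix}$$

with the standard matrix addition and scalar multiplication. Consider

$$\begin{bmatrix} a_1 & 1 \\ 1 & b_1 \end{bmatrix} + \begin{bmatrix} a_2 & 1 \\ 1 & b_2 \end{bmatrix} = \begin{bmatrix} a_1 + a_2 & 2 \\ 2 & b_1 + b_2 \end{bmatrix}$$

This sum is not in the set, so this set with the standard matrix addition and scalar multiplication is not a vector space.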
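Both counterexamples can be reproduced with concrete numbers. This is a small sketch under stated assumptions: the function names and the sample values `v`, `A`, and `B` are hypothetical and chosen only for illustration.

```python
def smul_modified(k, v):
    """Modified scalar multiplication from non-example 1: kv = (k*v1, 0)."""
    return (k * v[0], 0)

def in_matrix_set(M):
    """True if the 2x2 matrix M has the form [[a, 1], [1, b]]."""
    return M[0][1] == 1 and M[1][0] == 1

def mat_add(A, B):
    """Standard entrywise addition of 2x2 matrices stored as nested lists."""
    return [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]

# Non-example 1: axiom 10 (1v = v) fails because the second component is lost.
v = (3, 4)
axiom10_holds = smul_modified(1, v) == v

# Non-example 2: the sum of two members has off-diagonal entries equal to 2.
A = [[2, 1], [1, 5]]
B = [[7, 1], [1, -3]]
S = mat_add(A, B)
closure_holds = in_matrix_set(S)

print(axiom10_holds, closure_holds)
```

A single failing instance is enough to rule out a vector space, which is why one sample per set suffices here.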

Theorem 4.1.1: Let V be a vector space, u a vector in V, and k a scalar; then:

(a) 0u=0

(b) k0=0

(c) (-1)u=-u

(d) If ku=0, then k=0 or u=0.
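The notes state this theorem without proof. As a sketch of how parts (a) and (c) follow from the axioms alone (the axiom numbers refer to the list in Section 4.1; this derivation is a standard argument and is not taken from the notes):

```latex
\begin{align*}
\text{(a)}\quad 0u + 0u &= (0 + 0)u = 0u && \text{(Axiom 8)} \\
[0u + 0u] + (-(0u)) &= 0u + (-(0u)) && \text{(add the negative of } 0u\text{, Axiom 5)} \\
0u + [0u + (-(0u))] &= 0 && \text{(Axioms 3 and 5)} \\
0u + 0 &= 0 && \text{(Axiom 5)} \\
0u &= 0 && \text{(Axiom 4)} \\[6pt]
\text{(c)}\quad u + (-1)u &= 1u + (-1)u && \text{(Axiom 10)} \\
&= (1 + (-1))u && \text{(Axiom 8)} \\
&= 0u = 0 && \text{(part (a))}
\end{align*}
```

In part (c) the last line shows that (-1)u behaves as a negative of u, which is what the statement (-1)u = -u expresses.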


Section 4.2 Subspaces

Definition: A subset W of a vector space V is called a subspace of V if W is itself

a vector space under the addition and scalar multiplication defined on V.

• There is a quick way to decide if a subset of a vector space is a subspace or not.

Theorem 4.2.1: If W is a set of one or more vectors from a vector space V, then

W is a subspace of V if and only if the following conditions hold

(a) If u and v are vectors in W, then u+v is in W

(b) If k is any scalar and u is any vector in W, then ku is in W.

• If (a) is satisfied we say that W is closed under addition.

• If (b) is satisfied we say that W is closed under scalar multiplication.

Example:

1. {0} is a subspace of R^n, since 0 + 0 = 0 (closed under addition) and k0 = 0 (closed under scalar multiplication).

2. R^n is a subspace of R^n.

• {0} and R^n are called the trivial subspaces of R^n.

3. Let W be a plane through the origin determined by two vectors v1 and v2, that is, we can express any vector x in W as x = t1 v1 + t2 v2. Then W is a subspace of R^n.

Note: Every subspace of R^n must contain the vector 0. Why?

Example: Determine whether the given sets are subspaces of R^3. If a set is not a subspace, indicate which closure properties fail.

(a) All vectors of the form (a, 0, 0)

(b) All vectors with integer components

(c) All vectors (a, b, c) for which b = a + c

Solution: For each set we need to check, for any two vectors v, w in the set and any scalar k ∈ R, whether v + w and kv are in the set.

(a) Let v = (a, 0, 0), w = (b, 0, 0). Then v + w = (a + b, 0, 0) and kv = (ka, 0, 0), and these are both in the set. Hence it is a subspace of R^3.

(b) Let v = (a1, a2, a3), w = (b1, b2, b3) where ai, bi ∈ Z. Then v + w = (a1 + b1, a2 + b2, a3 + b3) is in the set, so the set is closed under addition. However, kv = (ka1, ka2, ka3) need not be in the set when k ∈ R − Z; for example, k = 1/2 and v = (1, 0, 0) give kv = (1/2, 0, 0). The set is not closed under scalar multiplication, hence it is not a subspace of R^3.

(c) Let v = (a1, b1, c1), w = (a2, b2, c2) with b1 = a1 + c1 and b2 = a2 + c2. Then b1 + b2 = (a1 + a2) + (c1 + c2) and kb1 = ka1 + kc1, so v+w and kv are in the set for any real number k. Hence this set is a subspace of R^3.
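The two closure tests of Theorem 4.2.1 can be tried on sample vectors from each set. The sketch below is illustrative only: the membership functions and the sample vectors are assumptions made for this example, and exact rational arithmetic (`fractions.Fraction`) is used to avoid rounding issues. A passing sample does not prove that a set is a subspace; only a failing sample is conclusive.

```python
from fractions import Fraction

def in_a(v):
    """Set (a): vectors of the form (a, 0, 0)."""
    return v[1] == 0 and v[2] == 0

def in_b(v):
    """Set (b): vectors whose components are all integers."""
    return all(Fraction(x).denominator == 1 for x in v)

def in_c(v):
    """Set (c): vectors (a, b, c) with b = a + c."""
    return v[1] == v[0] + v[2]

def add(v, w):
    """Usual componentwise addition in R^3."""
    return tuple(x + y for x, y in zip(v, w))

def smul(k, v):
    """Usual scalar multiplication in R^3."""
    return tuple(k * x for x in v)

def closure_report(member, v, w, k):
    """Return (sum stays in set, scalar multiple stays in set) for one sample."""
    assert member(v) and member(w)
    return member(add(v, w)), member(smul(k, v))

k = Fraction(1, 2)  # a non-integer scalar
report_a = closure_report(in_a, (3, 0, 0), (-1, 0, 0), k)
report_b = closure_report(in_b, (1, 2, 3), (4, 5, 6), k)
report_c = closure_report(in_c, (1, 3, 2), (4, 9, 5), k)
print(report_a, report_b, report_c)
```

For set (b) the report shows closure under addition but not under scalar multiplication, matching the solution above.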

Theorem 4.2.3: If S = {w1, w2, . . . , wr} is a nonempty set of vectors in a vector space V, then:

1. The set W of all possible linear combinations of the vectors in S is a subspace of V.

2. The set W in part 1 is the smallest subspace of V that contains all of the vectors in S, in the sense that any other subspace that contains those vectors contains W.

Idea of the proof: Let us form a subspace from the vectors w1, . . . , wr. For a subspace we need a subset that is closed under scalar multiplication and addition. So if wi is in the set, where i = 1, . . . , r, then kwi must be in the set for any scalar k, so we need t1 w1, t2 w2, . . . , tr wr to be in the set for all scalars t1, . . . , tr. Closure under addition then forces t1 w1 + t2 w2 + . . . + tr wr to be in the set.

Definition: If S = {v1, . . . , vr} is a set of vectors in a vector space V, then the subspace W of V consisting of all linear combinations of the vectors in S is called the space spanned by v1, . . . , vr, and we say that the vectors v1, . . . , vr span W. We denote this by W=span(S) or W=span{v1, . . . , vr}.
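To make the definition concrete, a linear combination of vectors in R^n can be computed directly. This is a minimal sketch: `linear_combination` is a hypothetical helper, and the vectors used are illustrative choices rather than examples from the notes.

```python
def linear_combination(coeffs, vectors):
    """Return t1*v1 + ... + tr*vr for vectors given as equal-length tuples."""
    n = len(vectors[0])
    return tuple(sum(t * v[i] for t, v in zip(coeffs, vectors)) for i in range(n))

# Standard unit vectors of R^3: every (a, b, c) equals a*e1 + b*e2 + c*e3.
e1, e2, e3 = (1, 0, 0), (0, 1, 0), (0, 0, 1)
x = linear_combination((2, -5, 7), (e1, e2, e3))

# A combination of two vectors lies in the plane they span.
y = linear_combination((3, 4), ((1, 1, 0), (0, 1, 1)))
print(x, y)
```

The span of a set is the collection of all outputs of such a function as the coefficients range over all scalars.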

Example:

1. {0} = Span{0}

2. R^n = Span{e1, e2, . . . , en}

3. The line passing through the origin and parallel to the vector v is Span{v}

4. The plane passing through the origin and parallel to the noncollinear vectors v1, v2 is Span{v1, v2}

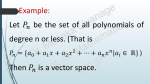

5. The set of polynomials of degree less than or equal to n, denoted by Pn, with the usual polynomial addition and scalar multiplication forms a vector space, and Pn = Span{1, x, x^2, x^3, . . . , x^n}

Example: Determine whether the polynomials p1 = 1 − x + 2x^2, p2 = 3 + x, p3 = 5 − x + 4x^2, p4 = −2 − 2x + 2x^2 span P2.

Solution: If these polynomials span P2, then any arbitrary vector ax^2 + bx + c in P2 can be expressed as a linear combination of p1, p2, p3, p4. That is,

ax^2 + bx + c = k1 p1 + k2 p2 + k3 p3 + k4 p4 where k1, k2, k3, k4 ∈ R

Expressing this equation in terms of components we get

a = 2k1 + 4k3 + 2k4

b = −k1 + k2 − k3 − 2k4

c = k1 + 3k2 + 5k3 − 2k4

Hence our question is reduced to determining whether this system is consistent for all a,b,c values. Form the augmented matrix:

$$\left[\begin{array}{cccc|c} 2 & 0 & 4 & 2 & a \\ -1 & 1 & -1 & -2 & b \\ 1 & 3 & 5 & -2 & c \end{array}\right] \xrightarrow{\text{Row operations}} \left[\begin{array}{cccc|c} 1 & 0 & 2 & 1 & \frac{a}{2} \\ 0 & 1 & 1 & -1 & b + \frac{a}{2} \\ 0 & 0 & 0 & 0 & \frac{c - 2a - 3b}{3} \end{array}\right]$$

From the last row we see that the linear system is consistent only if c = 3b + 2a, which gives a restriction on the scalars a,b,c. Hence p1, p2, p3, p4 cannot span P2. For example x^2 + x + 6 is in P2 but not in the span of p1, p2, p3, p4.
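The consistency question can be checked with a rank computation: the system is solvable exactly when appending the right-hand side does not raise the rank of the coefficient matrix. The sketch below assumes exact rational arithmetic; `rank` and `in_span` are hypothetical helpers written for this example, not functions from any library.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) via Gaussian elimination over the rationals."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivot rows found so far
    for col in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            factor = M[i][col] / M[r][col]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r

# Columns correspond to p1..p4; rows to the x^2, x, and constant coefficients.
A = [[2, 0, 4, 2],
     [-1, 1, -1, -2],
     [1, 3, 5, -2]]

def in_span(a, b, c):
    """True if ax^2 + bx + c is a linear combination of p1..p4."""
    augmented = [row + [rhs] for row, rhs in zip(A, (a, b, c))]
    return rank(augmented) == rank(A)

print(rank(A), in_span(1, 1, 6), in_span(1, 1, 5))
```

The coefficient matrix has rank 2, which is less than 3, so the four polynomials cannot span the three-dimensional coefficient space. The polynomial x^2 + x + 6 fails the test, while x^2 + x + 5 satisfies c = 3b + 2a and passes.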

Theorem 4.2.4: The solution set of a homogeneous linear system Ax = 0 in n unknowns is a subspace of R^n.

Exercise: Prove this theorem.

Example: Find a general solution of the linear system and list a set of vectors that

span the solution space

x1 + 6x2 + 2x3 − 5x4 = 0

−x1 − 6x2 − x3 − 3x4 = 0

2x1 + 12x2 + 5x3 − 18x4 = 0

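Solution:

$$\left[\begin{array}{cccc|c} 1 & 6 & 2 & -5 & 0 \\ -1 & -6 & -1 & -3 & 0 \\ 2 & 12 & 5 & -18 & 0 \end{array}\right] \xrightarrow{\text{Row operations}} \left[\begin{array}{cccc|c} 1 & 6 & 2 & -5 & 0 \\ 0 & 0 & 1 & -8 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right]$$

x1, x3 are leading variables and x2, x4 are free variables. Let x2 = s, x4 = t ⇒ x3 = 8t, x1 = −6s − 11t

$$\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} -6s - 11t \\ s \\ 8t \\ t \end{bmatrix} = s \begin{bmatrix} -6 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} -11 \\ 0 \\ 8 \\ 1 \end{bmatrix}$$

$$\Rightarrow \text{Solution space} = \operatorname{Span}\left\{ \begin{bmatrix} -6 \\ 1 \\ 0 \\ 0 \end{bmatrix}, \begin{bmatrix} -11 \\ 0 \\ 8 \\ 1 \end{bmatrix} \right\}$$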
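The general solution can be verified by substituting it back into the system. This is a small sketch with hypothetical helper names (`mat_vec`, `combo`); it checks that both spanning vectors, and a grid of their linear combinations, satisfy Ax = 0.

```python
# Coefficient matrix of the homogeneous system in the example.
A = [[1, 6, 2, -5],
     [-1, -6, -1, -3],
     [2, 12, 5, -18]]

v1 = (-6, 1, 0, 0)    # vector multiplying the free parameter s
v2 = (-11, 0, 8, 1)   # vector multiplying the free parameter t

def mat_vec(A, x):
    """Matrix-vector product Ax as a list."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def combo(s, t):
    """The general solution s*v1 + t*v2."""
    return tuple(s * a + t * b for a, b in zip(v1, v2))

residuals = [mat_vec(A, combo(s, t)) for s in range(-2, 3) for t in range(-2, 3)]
all_zero = all(r == [0, 0, 0] for r in residuals)
print(mat_vec(A, v1), mat_vec(A, v2), all_zero)
```

That every combination gives the zero vector reflects Theorem 4.2.4: the solution set is closed under addition and scalar multiplication.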

Theorem 4.2.5: If S = {v1, v2, . . . , vr} and S' = {w1, w2, . . . , wk} are two sets of vectors in a vector space V, then

span {v1, v2, . . . , vr} = span {w1, w2, . . . , wk}

if and only if each vector in S is a linear combination of those in S' and each vector in S' is a linear combination of those in S.
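As a small illustration of this theorem (the specific vectors are chosen for this sketch and do not come from the notes), take S = {e1, e2} with e1 = (1, 0), e2 = (0, 1), and S' = {(1, 1), (1, −1), (2, 0)} in R^2. Each vector of one set is a linear combination of the vectors in the other:

```latex
\begin{align*}
(1,1) &= e_1 + e_2, & (1,-1) &= e_1 - e_2, & (2,0) &= 2e_1, \\
e_1 &= \tfrac{1}{2}(1,1) + \tfrac{1}{2}(1,-1), & e_2 &= \tfrac{1}{2}(1,1) - \tfrac{1}{2}(1,-1).
\end{align*}
```

By the theorem, both sets span the same space, namely R^2, even though S has two vectors and S' has three.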

Importance: Sets containing different vectors, and even different numbers of vectors, can span the same space. We will see that a spanning set needs a minimum number of vectors and that this number is unique. For that we need linear independence.
